Dr Rajiv Desai

An Educational Blog

Robot

Robot:

_

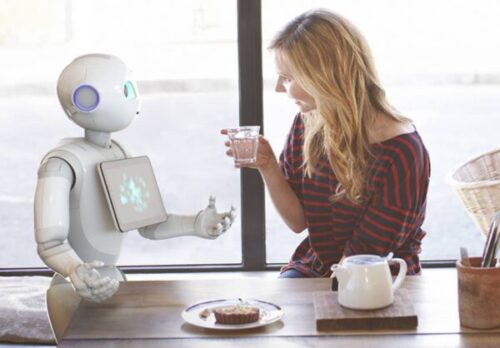

Pepper (above), a humanoid robot developed by SoftBank Robotics, was launched in 2014 and can read human emotions. Over 2,000 companies around the world have adopted Pepper as an assistant to welcome, inform and guide visitors in an innovative way.

____

Section-1

Prologue:

What is the first thing that comes to mind when you think of a robot?

For many people it is a machine that imitates a human, like the androids in Star Wars, Terminator and Star Trek: The Next Generation. However much these robots capture our imagination, they still inhabit only science fiction. Science-fiction films and novels usually portray robots as one of two things: destroyers of the human race or friendly helpers. Nobody has yet been able to give a robot enough ‘common sense’ to interact reliably with a dynamic world, and the independent intelligent machines seen in science fiction are still a long way off. The robots that you will encounter most frequently do work that is too dangerous, repetitive, boring, onerous, or just plain nasty for people. Most of the robots in the world are of this type. They can be found in the manufacturing, military, medical and space industries. Some robots, like the Mars rover Sojourner or the underwater robot Caribou, help us learn about places that are too dangerous for us to go. Many robots are just disembodied hands that sit at the ends of conveyor belts, picking things up and moving them, while other types of robots are just plain fun for kids of all ages.

_

What is a robot?

There’s no precise definition, but by general agreement a robot is a programmable machine that imitates the actions or appearance of an intelligent creature, usually a human. To qualify as a robot, a machine has to be able to do two things: 1) get information from its surroundings, and 2) do something physical, such as move or manipulate objects. Robots are machines capable of carrying out physical tasks. They can be directly controlled by humans, or have some ability to operate by themselves. Although intelligent humanoid robots still remain mostly in the realm of science fiction, robotic machines are all around us. These feats of engineering already help us in many areas of life, and could transform the future for us. I have published articles on ‘Driverless car’ and ‘Drone’, both of which are kinds of robot.

_

Robots have been with us for less than 60 years, but the idea of inanimate creations that do our bidding is much, much older. The ancient Greek poet Homer described maidens of gold, metallic helpers for Hephaistos, the Greek god of the forge. The golems of medieval Jewish legend were robot-like servants made of clay, brought to life by a spoken charm. Leonardo da Vinci drew plans for a mechanical man in 1495. The word robot comes from the Czech word robota, meaning drudgery or slave-like labor. It was first used to describe fabricated workers in a 1920s play by Czech author Karel Čapek called Rossum’s Universal Robots. In the story, a scientist invents robots to help people by performing simple, repetitive tasks. However, once the robots are used to fight wars, they turn on their human owners and take over the world. Real robots did not become possible until the 1950s and 60s, with the invention of transistors and integrated circuits. Compact, reliable electronics and a growing computer industry added brains to the brawn of already existing machines.

_

A robot has these essential characteristics:

- Sensing

First of all, your robot would have to be able to sense its surroundings. It would do this in ways that are not dissimilar to the way that you sense your surroundings. Giving your robot sensors such as light sensors (eyes), touch and pressure sensors (hands), chemical sensors (nose), hearing and sonar sensors (ears), and taste sensors (tongue) will give it awareness of its environment.

- Movement

A robot needs to be able to move around its environment. Whether rolling on wheels, walking on legs or propelling itself with thrusters, a robot needs to be able to move. To count as a robot, either the whole robot moves, like the Sojourner, or just parts of the robot move, like the Canadarm.

- Energy

A robot needs to be able to power itself. A robot might be solar powered, electrically powered or battery powered. The way your robot gets its energy will depend on what your robot needs to do.

- Intelligence

A robot needs some kind of “smarts.” This is where programming enters the picture. A programmer is the person who gives the robot its ‘smarts.’ The robot will have to have some way to receive the program so that it knows what it is to do.
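The four characteristics above can be tied together in a toy sense-decide-act loop. This is only a minimal sketch under simple assumptions: the robot lives on a one-dimensional track, and every name in it (`SimpleRobot`, `sense`, `decide`, `act`, `run`) is hypothetical and chosen for illustration, not taken from any real robot software.

```python
from dataclasses import dataclass


@dataclass
class SimpleRobot:
    """Toy robot on a 1-D track; all names and numbers are illustrative."""
    position: float = 0.0   # where the robot currently is (movement)
    battery: float = 10.0   # remaining energy units (energy)
    step_cost: float = 1.0  # energy spent on each move

    def sense(self, light_position: float) -> float:
        """Sensing: signed distance from the robot to a light source."""
        return light_position - self.position

    def decide(self, distance: float) -> float:
        """Intelligence: a tiny 'program' that picks the next move."""
        if abs(distance) < 0.5:
            return 0.0  # close enough, stay put
        return 1.0 if distance > 0 else -1.0

    def act(self, move: float) -> None:
        """Movement: apply the move only if there is energy to pay for it."""
        if move != 0.0 and self.battery >= self.step_cost:
            self.position += move
            self.battery -= self.step_cost


def run(robot: SimpleRobot, light_position: float, max_steps: int = 20) -> SimpleRobot:
    """Repeat the sense -> decide -> act cycle a fixed number of times."""
    for _ in range(max_steps):
        robot.act(robot.decide(robot.sense(light_position)))
    return robot
```

For example, `run(SimpleRobot(), 3.0)` walks the robot three steps to the light and then leaves it standing there, while a robot that starts with too little battery stops short of the target.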

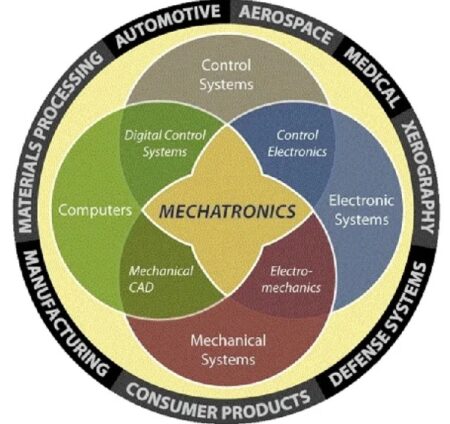

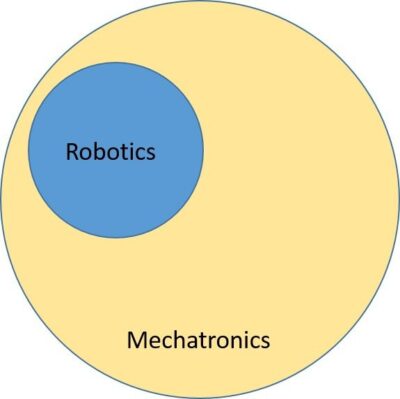

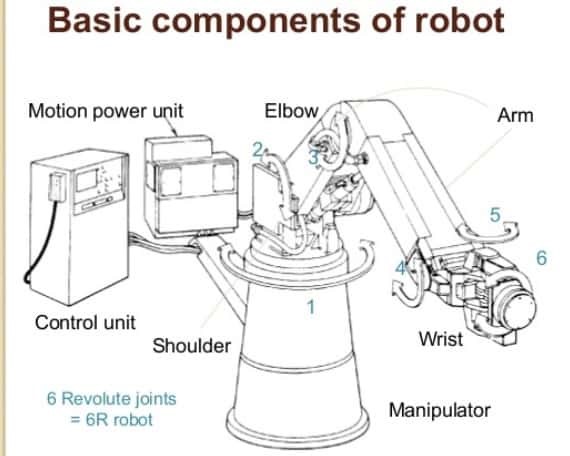

Robotics is an interdisciplinary branch of computer science and engineering involving the design, construction, operation, and use of robots. Robotics brings together several very different engineering areas and skills. A robot is a system that contains sensors, control systems, manipulators, power supplies and software, all working together to perform a task.

_

If you think robots are mainly the stuff of space movies, think again. Right now, all over the world, robots are on the move. They’re painting cars at Ford plants, assembling Milano cookies for Pepperidge Farm, walking into live volcanoes, driving trains in Paris, and defusing bombs in Northern Ireland. As they grow tougher, nimbler, and smarter, today’s robots are doing more and more things we can’t, or don’t want to, do. In recent years the applications of robotics have spread beyond manufacturing and industrial robots. Robotics applications are now widely used in the medical, transport, underwater, entertainment and military sectors. Robots are found everywhere: in factories, homes and hospitals, and even in outer space. Much research and development is going into robots that interact with humans directly. There are humanoid robots that serve as companions to the elderly, remind them to take their medications and hold intelligent conversations. Robots are used in schools to increase students’ motivation to study STEM and as a pedagogical tool to teach STEM in a concrete environment. You may be worried a robot is going to steal your job, but you may be more likely to work alongside a robot in the near future than have one replace you.

_

Since antiquity, humankind has dreamed of creating intelligent machines. The invention of the computer and the breathtaking pace of technological progress appear to be bringing the realization of this dream within our grasp. Scientists and engineers across the world are working on the development of intelligent robots, which are poised to become an integral part of all areas of human life. Robots are to do the housework, look after the children, care for the elderly… Yet, the ultimate vision goes even further, envisioning a merger of man and machine that will throw off the biological shackles of evolution and finally make eternal life a reality!

_____

_____

Abbreviations and synonyms:

IFR = International Federation of Robotics

R.U.R. = Rossum’s Universal Robots.

KUKA = Keller und Knappich Augsburg.

FANUC = Fuji Automatic NUmerical Control

ERC = Electronic Review Comments

ASIMO = Advanced Step in Innovative Mobility

AIBO = Artificial Intelligence bot (“bot” is short for “robot”)

TOPIO = TOSY Ping Pong Playing Robot

ROS = Robot Operating System

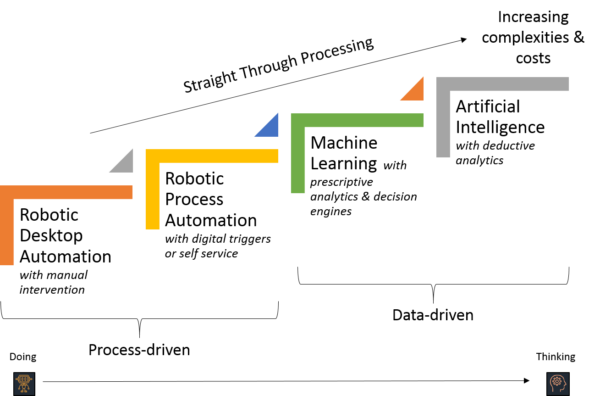

RPA = Robotic Process Automation

AMR = Autonomous Mobile Robots

IoRT = Internet of Robotic Things

SCARA = Selective Compliance Arm for Robotic Assembly

SCOT = Smart Cyber Operating Theater

AMIGO = Advanced Multimodality Image Guided Operating

_____

_____

Robo-cabulary:

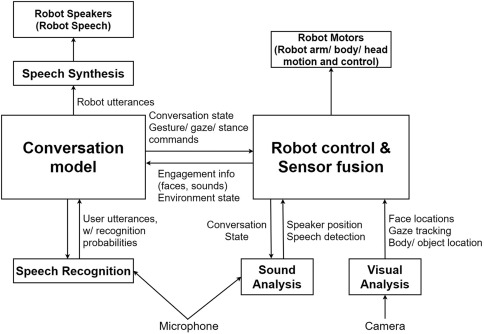

Human-robot interaction:

A field of robotics that studies the relationship between people and machines. For example, a self-driving car could see a stop sign and hit the brakes at the last minute, but that would terrify pedestrians and passengers alike. By studying human-robot interaction, roboticists can shape a world in which people and machines get along without hurting each other.

Humanoid:

The classical sci-fi robot. This is perhaps the most challenging form of robot to engineer, on account of it being both technically difficult and energetically costly to walk and balance on two legs. But humanoids may hold promise in rescue operations, where they’d be able to better navigate an environment designed for humans, like a nuclear reactor.

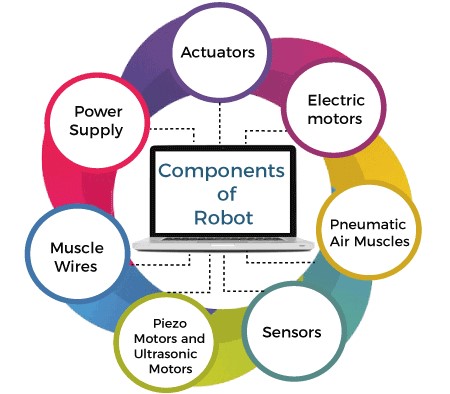

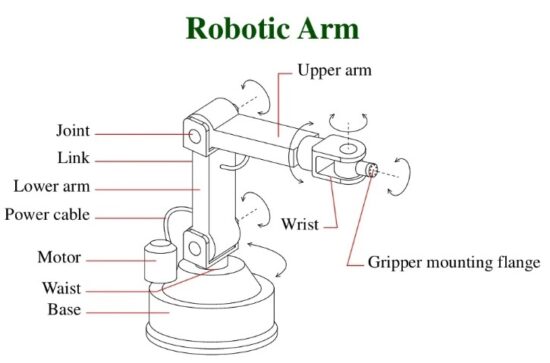

Actuator:

Actuators are the generators of the forces that robots employ to move themselves and other objects. Actuators are what power most robots. The control signals are usually electrical but may, more rarely, be pneumatic or hydraulic.

End-effector:

Accessory device or tool specifically designed for attachment to the robot wrist or tool mounting plate to enable the robot to perform its intended task. (Examples may include gripper, spot-weld gun, arc-weld gun, spray-paint gun, or any other application tools.)

Hydraulics:

Control of mechanical force and movement, generated by the application of liquid under pressure.

Pneumatics:

Control of mechanical force and movement, generated by the application of compressed gas.

Soft robotics:

A field of robotics that forgoes traditional materials and motors in favor of generally softer materials and pumping air or oil to move its parts.

Lidar:

Lidar, or light detection and ranging, is a system that blasts a robot’s surroundings with lasers to build a 3-D map. This is pivotal both for self-driving cars and for service robots that need to work with humans without running them down.

Singularity:

The hypothetical point where the machines grow so advanced that humans are forced into a societal and existential crisis. Singularity also means a condition caused by the collinear alignment of two or more robot axes resulting in unpredictable robot motion and velocities.
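The second, kinematic meaning can be illustrated with a worked example. The sketch below assumes the simplest textbook case, a planar arm with two revolute joints, rather than the collinear-axes case named in the definition; the function name and parameters are hypothetical. For this arm the determinant of the Jacobian (the matrix relating joint speeds to hand speed) works out to l1 · l2 · sin(theta2), so it drops to zero when the two links line up. At that pose the hand loses one direction of motion, and joint speeds computed for a commanded hand speed can grow without bound.

```python
import math


def jacobian_determinant(l1: float, l2: float, theta1: float, theta2: float) -> float:
    """Determinant of the 2x2 Jacobian of a two-link planar arm.

    l1, l2 are link lengths; theta1 is the shoulder angle and theta2 the
    elbow angle measured relative to the first link (radians).
    """
    s1, c1 = math.sin(theta1), math.cos(theta1)
    s12, c12 = math.sin(theta1 + theta2), math.cos(theta1 + theta2)
    # Partial derivatives of the hand position (x, y) w.r.t. each joint angle.
    dx_dtheta1 = -l1 * s1 - l2 * s12
    dx_dtheta2 = -l2 * s12
    dy_dtheta1 = l1 * c1 + l2 * c12
    dy_dtheta2 = l2 * c12
    return dx_dtheta1 * dy_dtheta2 - dx_dtheta2 * dy_dtheta1


def is_singular(l1: float, l2: float, theta1: float, theta2: float, tol: float = 1e-9) -> bool:
    """True when the arm is (numerically) at a singular pose."""
    return abs(jacobian_determinant(l1, l2, theta1, theta2)) < tol
```

With the elbow straight (`theta2 = 0`) the check reports a singular pose regardless of the shoulder angle; with the elbow bent at a right angle the determinant is simply `l1 * l2`.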

Multiplicity:

The idea that robots and AI won’t supplant humans, but complement them.

_

Types of robots:

- Aerobot – robot capable of independent flight on other planets.

- Android – humanoid robot; resembling the shape or form of a human.

- Automaton – early self-operating robot, performing exactly the same actions, over and over.

- Animatronic – a robot that is usually used in theme parks and on movie and TV show sets.

- Autonomous vehicle – vehicle equipped with an autopilot system, which is capable of driving from one point to another without input from a human operator.

- Ballbot – dynamically-stable mobile robot designed to balance on a single spherical wheel (i.e., a ball).

- Cyborg – also known as a cybernetic organism, a being with both biological and artificial (e.g. electronic, mechanical or robotic) parts.

- Explosive ordnance disposal robot – mobile robot designed to assess whether an object contains explosives; some carry detonators that can be deposited at the object and activated after the robot withdraws.

- Gynoid – humanoid robot designed to look like a human female.

- Hexapod (walker) – a six-legged walking robot, using a simple insect-like locomotion.

- Industrial robot – reprogrammable, multifunctional manipulator designed to move material, parts, tools, or specialized devices through variable programmed motions for the performance of a variety of tasks.

- Insect robot – small robot designed to imitate insect behaviors rather than complex human behaviors.

- Microbot – microscopic robots designed to go into the human body and cure diseases.

- Military robot – exosuit which is capable of merging with its user for enhanced strength, speed, handling, etc.

- Mobile robot – self-propelled and self-contained robot that is capable of moving over a mechanically unconstrained course.

- Music entertainment robot – robot created to perform musical entertainment by playing custom-made instruments or instruments developed for humans.

- Nanobot – the same as a microbot, but smaller. The components are at or close to the scale of a nanometer.

- Prosthetic robot – programmable manipulator or device replacing a missing human limb.

- Rover – a robot with wheels designed to travel over other planets’ terrain.

- Service robot – machines that extend human capabilities.

- Surgical robot – remote manipulator used for keyhole surgery.

- Walking robot – robot capable of locomotion by walking. Owing to the difficulties of balance, two-legged walking robots have so far been rare, and most walking robots have used insect-like multilegged walking gaits.

_____

_____

Section-2

History of robots & robotics:

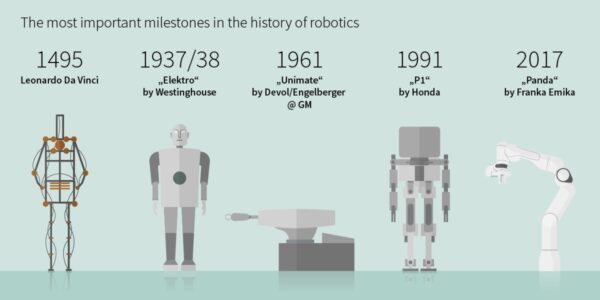

The history of robots has its origins in the ancient world. During the industrial revolution, humans developed the structural engineering capability to control electricity so that machines could be powered with small motors. In the early 20th century, the notion of a humanoid machine was developed. The first uses of modern robots were in factories as industrial robots. These industrial robots were fixed machines capable of manufacturing tasks which allowed production with less human work. Digitally programmed industrial robots with artificial intelligence have been built since the 2000s.

_

The robot notion derives from two strands of thought, humanoids and automata. The notion of a humanoid (or human-like nonhuman) dates back to Pandora in The Iliad, 2,500 years ago and even further. Egyptian, Babylonian, and ultimately Sumerian legends fully 5,000 years old reflect the widespread image of the creation, with god-men breathing life into clay models. One variation on the theme is the idea of the golem, associated with the Prague ghetto of the sixteenth century. This clay model, when breathed into life, became a useful but destructive ally. The golem was an important precursor to Mary Shelley’s Frankenstein: The Modern Prometheus (1818). This story combined the notion of the humanoid with the dangers of science (as suggested by the myth of Prometheus, who stole fire from the gods to give it to mortals). In addition to establishing a literary tradition and the genre of horror stories, Frankenstein also imbued humanoids with an aura of ill fate.

Automata, the second strand of thought, are literally “self-moving things” and have long interested mankind. The idea of automata originates in the mythologies of many cultures around the world. Engineers and inventors from ancient civilizations, including Ancient China, Ancient Greece, and Ptolemaic Egypt, attempted to build self-operating machines, some resembling animals and humans. Early descriptions of automata include the artificial doves of Archytas, the artificial birds of Mozi and Lu Ban, a “speaking” automaton by Hero of Alexandria, a washstand automaton by Philo of Byzantium, and a human automaton described in the Lie Zi. Early models depended on levers and wheels, or on hydraulics. Clockwork technology enabled significant advances after the thirteenth century, and later steam and electro-mechanics were also applied. The primary purpose of automata was entertainment rather than employment as useful artifacts. Although many patterns were used, the human form always excited the greatest fascination. During the twentieth century, several new technologies moved automata into the utilitarian realm. Geduld and Gottesman and Frude review the chronology of clay model, water clock, golem, homunculus, android, and cyborg that culminated in the contemporary concept of the robot.

_

Early beginnings:

Many ancient mythologies and most modern religions include artificial people, such as the mechanical servants built by the Greek god Hephaestus (Vulcan to the Romans), the clay golems of Jewish legend and clay giants of Norse legend, and Galatea, the mythical statue of Pygmalion that came to life. Since circa 400 BC, myths of Crete have included Talos, a man of bronze who guarded the island from pirates.

In ancient Greece, the Greek engineer Ctesibius (c. 270 BC) “applied a knowledge of pneumatics and hydraulics to produce the first organ and water clocks with moving figures.” In the 4th century BC, the Greek mathematician Archytas of Tarentum postulated a mechanical steam-operated bird he called “The Pigeon”. Hero of Alexandria (10–70 AD), a Greek mathematician and inventor, created numerous user-configurable automated devices, and described machines powered by air pressure, steam and water.

In ancient China, the 3rd-century text of the Lie Zi gives an account of humanoid automata, involving a much earlier encounter between King Mu of Zhou and a mechanical engineer known as Yan Shi, an ‘artificer’. Yan Shi proudly presented the king with a life-size, human-shaped figure of his mechanical ‘handiwork’ made of leather, wood, and artificial organs. There are also accounts of flying automata in the Han Fei Zi and other texts, which credit the 5th century BC Mohist philosopher Mozi and his contemporary Lu Ban with the invention of artificial wooden birds (ma yuan) that could successfully fly.

In 1066, the Chinese inventor Su Song built a water clock in the form of a tower which featured mechanical figurines which chimed the hours. His mechanism had a programmable drum machine with pegs (cams) that bumped into little levers that operated percussion instruments. The drummer could be made to play different rhythms and different drum patterns by moving the pegs to different locations.
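The peg-and-drum idea is an early form of a stored program: the rhythm lives in where the pegs sit, not in the machine itself. A minimal sketch of that principle follows, assuming a drum divided into a fixed number of positions. The function name, the instrument names and the data layout are hypothetical and are not a reconstruction of the historical mechanism.

```python
def play_drum(pegs: dict, steps_per_turn: int, turns: int = 1) -> list:
    """Simulate a rotating peg drum.

    pegs maps an instrument name to the set of drum positions that carry
    a peg for that instrument. As the drum turns, a peg passing its lever
    makes the instrument sound. Returns, for each tick, the sorted list of
    instruments struck.
    """
    timeline = []
    for tick in range(steps_per_turn * turns):
        position = tick % steps_per_turn  # the drum wraps around each turn
        struck = sorted(name for name, slots in pegs.items() if position in slots)
        timeline.append(struck)
    return timeline


# One "program": pegs placed at chosen positions on a four-position drum.
rhythm_a = {"drum": {0, 2}, "cymbal": {2, 3}}
# Moving the pegs gives a different rhythm from the same machine.
rhythm_b = {"drum": {1, 3}, "cymbal": {0}}
```

Calling `play_drum(rhythm_a, 4)` and `play_drum(rhythm_b, 4)` runs the same mechanism with two different peg layouts, which is the sense in which the drum is programmable.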

Samarangana Sutradhara, a Sanskrit treatise by Bhoja (11th century), includes a chapter about the construction of mechanical contrivances (automata), including mechanical bees and birds, fountains shaped like humans and animals, and male and female dolls that refilled oil lamps, danced, played instruments, and re-enacted scenes from Hindu mythology.

_

The 13th-century Muslim scientist Ismail al-Jazari created several automated devices. He built automated moving peacocks driven by hydropower. He also invented the earliest known automatic gates, which were driven by hydropower, and created automatic doors as part of one of his elaborate water clocks. Al-Jazari is known as the “father of robotics”; he also documented 50 mechanical inventions (along with construction drawings) and is considered to be the “father of modern-day engineering.” The inventions he mentions in his book include the crank mechanism, connecting rod, programmable automaton, humanoid robot, reciprocating piston engine, suction pipe, suction pump, double-acting pump, valve, combination lock, cam, camshaft, segmental gear, the first mechanical clocks driven by water and weights, and especially the crankshaft, which is considered the most important mechanical invention in history after the wheel. One of al-Jazari’s humanoid automata was a waitress that could serve water, tea or drinks. The drink was stored in a tank with a reservoir from which it dripped into a bucket and, after seven minutes, into a cup, after which the waitress appeared out of an automatic door serving the drink. Al-Jazari also invented a hand-washing automaton incorporating a flush mechanism now used in modern flush toilets. It features a female humanoid automaton standing by a basin filled with water. When the user pulls the lever, the water drains and the female automaton refills the basin.

Mark E. Rosheim summarizes the advances in robotics made by Muslim engineers, especially al-Jazari, as follows:

Unlike the Greek designs, these Arab examples reveal an interest, not only in dramatic illusion, but in manipulating the environment for human comfort. Thus, the greatest contribution the Arabs made, besides preserving, disseminating and building on the work of the Greeks, was the concept of practical application. This was the key element that was missing in Greek robotic science.

_

In Renaissance Italy, Leonardo da Vinci (1452–1519) sketched plans for a humanoid robot around 1495. Da Vinci’s notebooks, rediscovered in the 1950s, contained detailed drawings of a mechanical knight now known as Leonardo’s robot, able to sit up, wave its arms and move its head and jaw. The design was probably based on anatomical research recorded in his Vitruvian Man. It is not known whether he attempted to build it. According to Encyclopædia Britannica, Leonardo da Vinci may have been influenced by the classic automata of al-Jazari.

_

In Japan, complex animal and human automata were built between the 17th and 19th centuries, with many described in the 18th-century Karakuri zui (Illustrated Machinery, 1796). One such automaton was the karakuri ningyō, a mechanized puppet. Different variations of the karakuri existed: the Butai karakuri, which were used in theatre; the Zashiki karakuri, which were small and used in homes; and the Dashi karakuri, which were used in religious festivals, where the puppets performed reenactments of traditional myths and legends.

In France, between 1738 and 1739, Jacques de Vaucanson exhibited several life-sized automatons: a flute player, a pipe player and a duck. The mechanical duck could flap its wings, crane its neck, and swallow food from the exhibitor’s hand, and it gave the illusion of digesting its food by excreting matter stored in a hidden compartment.

_

Remote-controlled systems:

Remotely operated vehicles were demonstrated in the late 19th century in the form of several types of remotely controlled torpedoes. The early 1870s saw remotely controlled torpedoes by John Ericsson (pneumatic), John Louis Lay (electric wire guided), and Victor von Scheliha (electric wire guided).

The Brennan torpedo, invented by Louis Brennan in 1877, was powered by two contra-rotating propellers that were spun by rapidly pulling out wires from drums wound inside the torpedo. Differential speed on the wires connected to the shore station allowed the torpedo to be guided to its target, making it “the world’s first practical guided missile”. In 1897 the British inventor Ernest Wilson was granted a patent for a torpedo remotely controlled by “Hertzian” (radio) waves and in 1898 Nikola Tesla publicly demonstrated a wireless-controlled torpedo that he hoped to sell to the US Navy.

In 1903, the Spanish engineer Leonardo Torres y Quevedo demonstrated a radio control system called “Telekino”, which he wanted to use to control an airship of his own design. Unlike the previous systems, which carried out actions of the ‘on/off’ type, Torres’s device was able to memorize the signals received to execute the operations on its own and could carry out up to 19 different orders.

Archibald Low is known as the “father of radio guidance systems” for his pioneering work on guided rockets and planes during the First World War. In 1917, he demonstrated a remote-controlled aircraft to the Royal Flying Corps and in the same year built the first wire-guided rocket.

_

Origin of the term robot and robotics:

The term robot derives from the Czech word robota, meaning forced work or compulsory service, or robotnik, meaning serf. It was first used by the Czech playwright Karel Čapek in 1918 in a short story and again in his 1921 play R.U.R., which stood for Rossum’s Universal Robots. Rossum, a fictional Englishman, used biological methods to invent and mass-produce “men” to serve humans. Eventually they rebelled, became the dominant race, and wiped out humanity. The play was soon well known in English-speaking countries. According to Karel Čapek, the word was created by his brother Josef Čapek from the Czech word robota. The word robota means literally “corvée”, “serf labor”, and figuratively “drudgery” or “hard work” in Czech and also (more generally) “work”, “labor” in many Slavic languages (e.g.: Bulgarian, Russian, Serbian, Slovak, Polish, Macedonian, Ukrainian, archaic Czech, as well as robot in Hungarian). Traditionally the robota (Hungarian robot) was the work period a serf (corvée) had to give for his lord, typically 6 months of the year.

The word “robotics” also comes from science fiction – it first appeared in the short story “Runaround” (1942) by Isaac Asimov. This story was later included in Asimov’s famous book “I, Robot.” The robot stories of Isaac Asimov also introduced the idea of a “positronic brain” (used by the character “Data” in Star Trek) and the “three laws of robotics.”

_

Asimov was not the first to conceive of well-engineered, non-threatening robots, but he pursued the theme with such enormous imagination and persistence that most of the ideas that have emerged in this branch of science fiction are identifiable with his stories. To cope with the potential for robots to harm people, Asimov, in 1940, in conjunction with science fiction author and editor John W. Campbell, formulated the Laws of Robotics. He subjected all of his fictional robots to these laws by having them incorporated within the architecture of their (fictional) “platinum-iridium positronic brains”. The laws (see below) first appeared publicly in his fourth robot short story, “Runaround”.

The 1940 Laws of Robotics:

First Law:

A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

Second Law:

A robot must obey orders given it by human beings, except where such orders would conflict with the First Law.

Third Law:

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.
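The three laws form a strict priority order: the First Law outranks the Second, which outranks the Third. One way to picture that ordering is as a lexicographic comparison over candidate actions. The sketch below is only a toy illustration of the ordering, not something from Asimov’s stories or from any real robot controller. The `Action` class and its three yes/no flags are hypothetical, and deciding whether a real action “harms a human” is exactly the hard part that this sketch assumes away.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, pre-labelled with which laws it would break."""
    name: str
    harms_human: bool      # would break the First Law (injury or harmful inaction)
    disobeys_order: bool   # would break the Second Law
    endangers_robot: bool  # would break the Third Law


def choose_action(candidates: list) -> Action:
    """Pick the action that breaks the highest-priority law least.

    Tuples compare left to right and False sorts before True, so a First
    Law violation always outweighs any combination of lower-law violations.
    """
    return min(
        candidates,
        key=lambda a: (a.harms_human, a.disobeys_order, a.endangers_robot),
    )


# Ordered to fetch a tool from a burning room: obeying risks the robot,
# refusing breaks an order. The Second Law outranks the Third.
fetch = [
    Action("enter the fire", False, False, True),
    Action("refuse", False, True, False),
]

# Ordered to push a person: obeying would injure a human.
push = [
    Action("push the person", True, False, False),
    Action("refuse", False, True, False),
]
```

Here `choose_action(fetch)` selects entering the fire, while `choose_action(push)` selects refusing, because no order can override the First Law.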

The laws quickly attracted – and have since retained – the attention of readers and other science fiction writers.

Only two years later, another established writer, Lester Del Rey, referred to “the mandatory form that would force built-in unquestioning obedience from the robot”. As Asimov later wrote (with his characteristic clarity and lack of modesty), “Many writers of robot stories, without actually quoting the three laws, take them for granted, and expect the readers to do the same”. Asimov’s fiction even influenced the origins of robotic engineering. “Engelberger, who built the first industrial robot, called Unimate, in 1958, attributes his long-standing fascination with robots to his reading of [Asimov’s] ‘I, Robot’ when he was a teenager”, and Engelberger later invited Asimov to write the foreword to his robotics manual. The laws are simple and straightforward, and they embrace “the essential guiding principles of a good many of the world’s ethical systems”. They also appear to ensure the continued dominion of humans over robots, and to preclude the use of robots for evil purposes. In practice, however – meaning in Asimov’s numerous and highly imaginative stories – a variety of difficulties arise.

_

Early robots:

In 1928, one of the first humanoid robots, Eric, was exhibited at the annual exhibition of the Model Engineers Society in London, where it delivered a speech. Invented by W. H. Richards, the robot’s frame consisted of an aluminium body of armour with eleven electromagnets and one motor powered by a twelve-volt power source. The robot could move its hands and head and could be controlled through remote control or voice control. Both Eric and his “brother” George toured the world. Westinghouse Electric Corporation built Televox in 1926; it was a cardboard cutout connected to various devices which users could turn on and off. In 1939, the humanoid robot known as Elektro debuted at the New York World’s Fair. Seven feet tall (2.1 m) and weighing 265 pounds (120.2 kg), it could walk by voice command, speak about 700 words (using a 78-rpm record player), smoke cigarettes, blow up balloons, and move its head and arms. The body consisted of a steel gear, cam and motor skeleton covered by an aluminum skin. In 1928, Japan’s first robot, Gakutensoku, was designed and constructed by biologist Makoto Nishimura.

_

Modern autonomous robots:

The first electronic autonomous robots with complex behaviour were created by William Grey Walter of the Burden Neurological Institute at Bristol, England in 1948 and 1949. He wanted to prove that rich connections between a small number of brain cells could give rise to very complex behaviors – essentially that the secret of how the brain worked lay in how it was wired up. His first robots, named Elmer and Elsie, were constructed between 1948 and 1949 and were often described as tortoises due to their shape and slow rate of movement. The three-wheeled tortoise robots were capable of phototaxis, by which they could find their way to a recharging station when they ran low on battery power. Walter stressed the importance of using purely analogue electronics to simulate brain processes at a time when his contemporaries such as Alan Turing and John von Neumann were all turning towards a view of mental processes in terms of digital computation. His work inspired subsequent generations of robotics researchers such as Rodney Brooks, Hans Moravec and Mark Tilden. Modern incarnations of Walter’s turtles may be found in the form of BEAM robotics. BEAM robotics (from biology, electronics, aesthetics and mechanics) is a style of robotics that primarily uses simple analogue circuits, such as comparators, instead of a microprocessor in order to produce an unusually simple design.
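Phototaxis of this kind needs very little machinery: compare two light readings and turn toward the brighter side. The sketch below is a digital, bang-bang simplification written for illustration. It is not Walter’s analogue circuit, and the function names, sensor layout and numeric defaults are all assumptions.

```python
import math


def light_at(px: float, py: float, light: tuple) -> float:
    """Brightness seen at (px, py): falls off with squared distance."""
    d2 = (px - light[0]) ** 2 + (py - light[1]) ** 2
    return 1.0 / (1.0 + d2)


def seek_light(start, heading, light, speed=0.1, turn_step=0.1,
               sensor_angle=0.5, sensor_reach=0.2,
               stop_distance=0.3, max_steps=500):
    """Drive toward a light using only a left/right brightness comparison.

    Returns (x, y, steps_taken). The robot stops once it is within
    stop_distance of the light (its 'recharging station').
    """
    x, y = start
    for step in range(max_steps):
        if math.hypot(light[0] - x, light[1] - y) < stop_distance:
            return x, y, step
        # Two sensors mounted symmetrically either side of the heading.
        left = light_at(x + sensor_reach * math.cos(heading + sensor_angle),
                        y + sensor_reach * math.sin(heading + sensor_angle), light)
        right = light_at(x + sensor_reach * math.cos(heading - sensor_angle),
                         y + sensor_reach * math.sin(heading - sensor_angle), light)
        # Turn a fixed amount toward the brighter side, then roll forward.
        heading += turn_step if left > right else -turn_step
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
    return x, y, max_steps
```

Starting at the origin facing along the x-axis with the light at (5, 5), the simulated robot first swings round toward the light and then wobbles slightly from side to side as it homes in.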

_

Unimation, the company that developed the Unimate:

In 1956, George Devol and Joe Engelberger established a company called Unimation, a shortened form of the words Universal Automation. Engelberger, a physicist working on the design of control systems for nuclear power plants and jet engines, met inventor Devol by chance at a cocktail party. Devol had recently received a patent called “Programmed Article Transfer.” Inspired by the short stories and novels of Isaac Asimov, Devol and Engelberger brainstormed to derive the first industrial robot arm, called the Unimate, based upon Devol’s patent. After almost two years in development, Engelberger and Devol produced a prototype, the Unimate #001, the first digitally operated and programmable robot. This ultimately laid the foundations of the modern robotics industry. Programmed Article Transfer became the seminal industrial robot patent, which was ultimately sub-licensed around the world. Devol sold the first Unimate to General Motors in 1960, and it was installed in 1961 in a plant in Trenton, New Jersey to lift hot pieces of metal from a die casting machine and stack them. The first industrial robot in Europe, a Unimate, was installed at Metallverken, Upplands Väsby, Sweden in 1967.

_

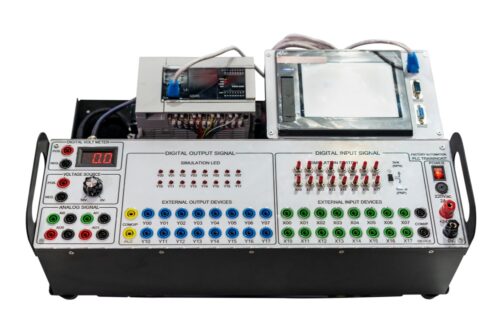

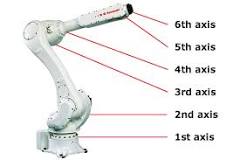

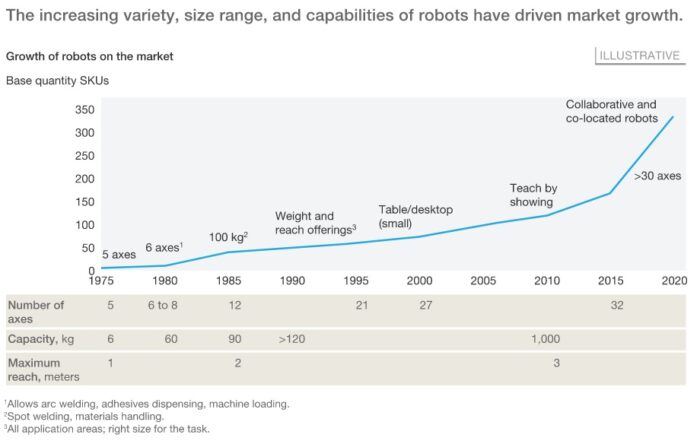

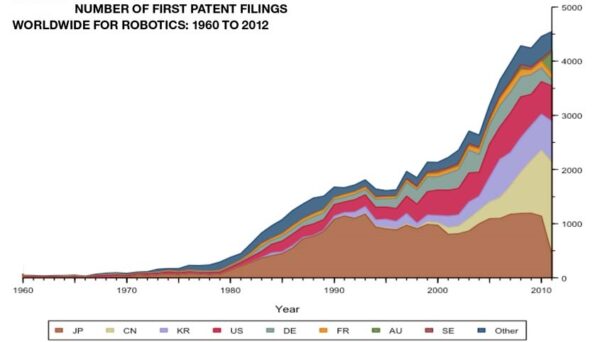

The first palletizing robot was introduced in 1963 by the Fuji Yusoki Kogyo Company. In 1973, a robot with six electromechanically driven axes was patented by KUKA Robotics in Germany. The programmable universal manipulation arm was invented by Victor Scheinman in 1976, and the design was sold to Unimation. From 1980 on, the rate at which new robots appeared started to climb exponentially. Takeo Kanade created the first robotic arm with motors installed directly in the joint in 1981. It was much faster and more accurate than its predecessors. Yaskawa America Inc. introduced the Motoman ERC control system in 1988. It could control up to 12 axes, which was the highest number possible at the time. FANUC Robotics also created the first prototype of an intelligent robot in 1992. Two years later, in 1994, the Motoman ERC system was upgraded to support up to 21 axes. The controller increased this to 27 axes in 1998 and added the ability to synchronize up to four robots. The first collaborative robot (cobot) was installed at Linatex in 2008. This Danish supplier of plastics and rubber decided to place the robot on the floor, as opposed to locking it behind a safety fence. Instead of hiring a programmer, they were able to program the robot through a touchscreen tool. It was clear from that point on that this was the way of the future.

_

In April 2001, the Canadarm2 was launched into orbit and attached to the International Space Station. The Canadarm2 is a larger, more capable version of the arm used by the Space Shuttle, and is hailed as “smarter”. Also in April, the Unmanned Aerial Vehicle Global Hawk made the first autonomous non-stop flight over the Pacific Ocean from Edwards Air Force Base in California to RAAF Base Edinburgh in Southern Australia. The flight was made in 22 hours.

The popular Roomba, a robotic vacuum cleaner, was first released in 2002 by the company iRobot.

In 2005, Cornell University revealed a robotic system of block-modules capable of attaching and detaching, described as the first robot capable of self-replication because it could assemble copies of itself if placed near more of the blocks of which it was composed. Launched in 2003, the Mars rovers Spirit and Opportunity landed on the surface of Mars on 3 and 24 January 2004. Both robots drove many times the distance originally expected, and Opportunity was still operating as of mid-2018, although communications were subsequently lost due to a major dust storm.

Self-driving cars had made their appearance by around 2005, but there was room for improvement. None of the 15 devices competing in the DARPA Grand Challenge (2004) successfully completed the course; in fact no robot successfully navigated more than 5% of the 150-mile (240 km) off-road course, leaving the $1 million prize unclaimed. In 2005, Honda revealed a new version of its ASIMO robot, updated with new behaviors and capabilities. In 2006, Cornell University revealed its “Starfish” robot, a four-legged robot capable of self modeling and learning to walk after having been damaged. In 2007, TOMY launched the entertainment robot, i-sobot, a humanoid bipedal robot that can walk like a human and performs kicks and punches and also some entertaining tricks and special actions under “Special Action Mode”.

_

Robonaut 2, the latest generation of the astronaut helpers, was launched to the space station aboard Space Shuttle Discovery on the STS-133 mission in 2011. It is the first humanoid robot in space, and although its primary job for now is teaching engineers how dextrous robots behave in space, the hope is that through upgrades and advancements, it could one day venture outside the station to help spacewalkers make repairs or additions to the station or perform scientific work.

On 25 October 2017 at the Future Investment Summit in Riyadh, a robot called Sophia and referred to with female pronouns was granted Saudi Arabian citizenship, becoming the first robot ever to have a nationality. This has attracted controversy, as it is not obvious whether this implies that Sophia can vote or marry, or whether a deliberate system shutdown can be considered murder. It is also controversial considering how few rights are given to Saudi human women.

Commercial and industrial robots are now in widespread use performing jobs more cheaply or with greater accuracy and reliability than humans. They are also employed for tasks that are too dirty, dangerous or dull to be suitable for humans. Robots are widely used in manufacturing, assembly and packing, transport, Earth and space exploration, surgery, weaponry, laboratory research, and mass production of consumer and industrial goods.

In 2019, engineers at the University of Pennsylvania created millions of nanorobots in just a few weeks using technology borrowed from semiconductors. These microscopic robots, small enough to be hypodermically injected into the human body and controlled wirelessly, could one day deliver medications and perform surgeries, revolutionizing medicine and health.

______

______

Section-3

Introduction to robots and robotics:

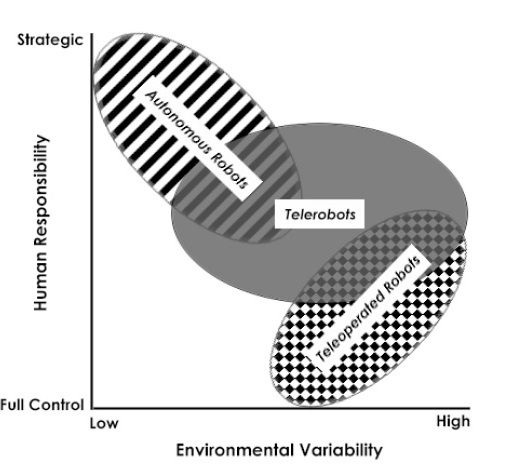

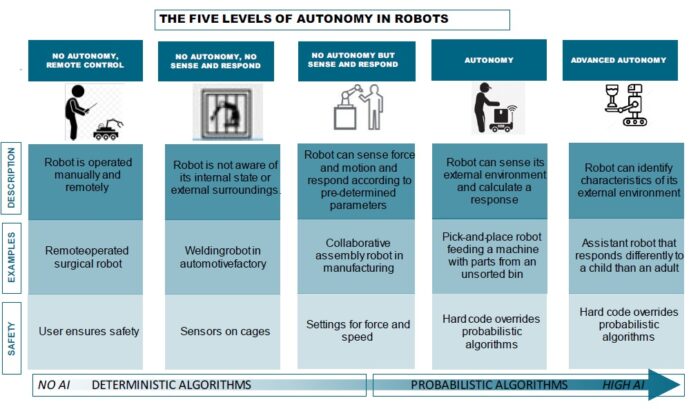

A robot is the product of the robotics field, where programmable machines are built that can assist humans or mimic human actions. Robots were originally built to handle monotonous tasks (like building cars on an assembly line), but have since expanded well beyond their initial uses to perform tasks like fighting fires, cleaning homes and assisting with incredibly intricate surgeries. Each robot has a differing level of autonomy, ranging from human-controlled robots that carry out tasks that a human has full control over to fully-autonomous robots that perform tasks without any external influences.

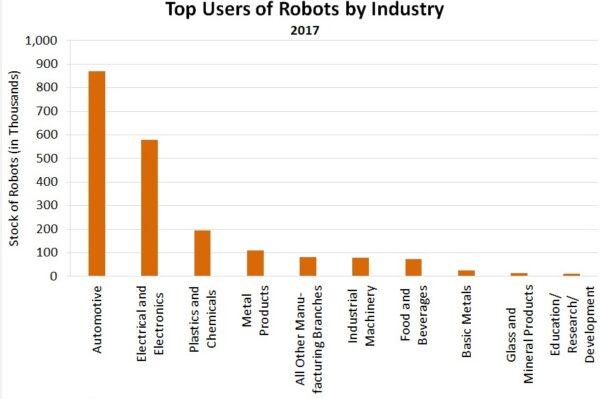

As technology progresses, so too does the scope of what is considered robotics. In 2005, 90% of all robots could be found assembling cars in automotive factories. These robots consist mainly of mechanical arms tasked with welding or screwing on certain parts of a car. Today, we’re seeing an evolved and expanded definition of robotics that includes the development, creation and use of robots that explore Earth’s harshest conditions, robots that assist law-enforcement and even robots that assist in almost every facet of healthcare.

We are bound to see the promise of the robotics industry realized sooner rather than later, as artificial intelligence and software continue to progress. In the near future, thanks to advances in these technologies, robots will continue getting smarter, more flexible and more energy efficient. They’ll also continue to be a main focal point in smart factories, where they’ll take on more difficult challenges and help to secure global supply chains.

Though relatively young, the robotics industry is filled with an admirable promise of progress that science fiction could once only dream about. From the deepest depths of our oceans to thousands of miles in outer space, robots will be found performing tasks that humans couldn’t dream of achieving alone.

_

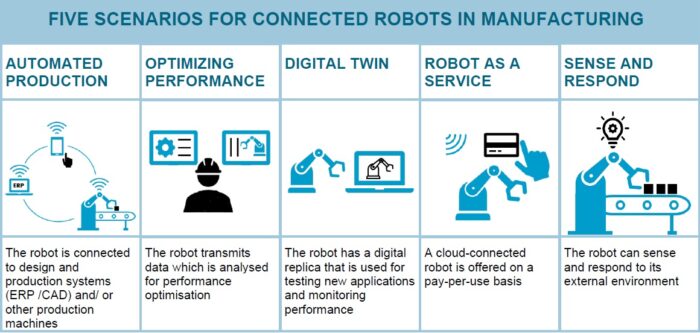

When we think of robots, we tend to imagine large, powerful machines hammering out metal parts or mounting car doors. Industrial robots have indeed been used for over 50 years in manufacturing for these and other tasks aimed at improving productivity. However, the last ten years have seen a dramatic shift in the capabilities and uses of robots. Today, many robots are out of their cages, moving around factories, warehouses, homes, and public spaces.

For decades, industrial robots have made manufacturing safer for workers, carrying out tasks that are dangerous, dirty – or plain dull. The range of tasks robots perform in factories has expanded greatly over the past 20 years. Robot grippers are now far more dexterous, and so can handle a greater range of materials. Robots are smaller and lighter, meaning they can be used in factories that are short on space and in which robots and humans need to work alongside one another – from pharmaceutical research to food processing, as well as in later stages of traditional manufacturing such as product assembly. The advent of robots that can sense and respond to their environment – and in many cases move around within it – has taken robots outside of industrial settings and into public life. Whether in direct contact with people, or behind the scenes, performing tasks we rely on but never think about, robots are making our daily lives healthier, safer, and more convenient. Robots also have an increasing role to play in making our planet sustainable for a rapidly rising global population.

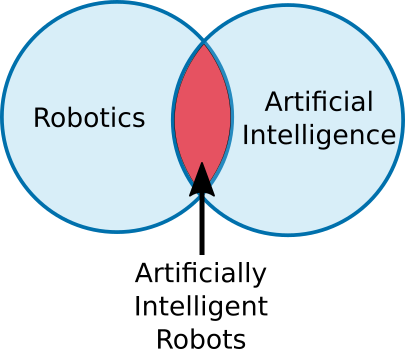

__

Robots are widely used in such industries as automobile manufacture to perform simple repetitive tasks, and in industries where work must be performed in environments hazardous to humans. Many aspects of robotics involve artificial intelligence; robots may be equipped with the equivalent of human senses such as vision, touch, and the ability to sense temperature. Some are even capable of simple decision making, and current robotics research is geared toward devising robots with a degree of self-sufficiency that will permit mobility and decision-making in an unstructured environment. Today’s industrial robots do not resemble human beings; a robot in human form is called an android. Robotics is a field where science, technology, and engineering meet to make the machines we know as robots. It helps to have a good understanding of science to work in robotics and a fair amount of creativity to solve problems that have never been seen before. Robotics is a popular field and one of the fastest-growing industries, and there have been many advances in the last few years. Machine learning, artificial intelligence, and other advances in technology have made it easier than ever before to get into the field. Robots are also commonly used in many industries today; businesses value robots because they help them make more products more efficiently.

_

During the 1980s, the scope of information technology applications and their impact on people increased dramatically. Control systems for chemical processes and air conditioning are examples of systems that already act directly and powerfully on their environments. And consider computer-integrated manufacturing, just-in-time logistics, and automated warehousing systems. Even data processing systems have become integrated into organizations’ operations and constrain the ability of operations-level staff to query a machine’s decisions and conclusions. In short, many modern computer systems are arguably robotic in nature already; their impacts are visible.

_

When people hear the word “robot,” they often think about two-legged, two-handed machines that walk and talk like people. But that’s not always the case. Many robots are just disembodied hands that sit at the ends of conveyor belts, picking up things and moving them. While most robots aren’t human-like, this is quickly changing as technology moves forward and humanoid robots become more popular.

Robots can:

- Help fight forest fires.

- Work alongside humans in factories, helping them build things like automobiles.

- Deliver online orders to people.

- Work in dangerous situations and save human lives.

- Do household chores like cleaning and vacuuming your pool.

- Find and carry boxes in warehouses.

- Discover landmines in dangerous war zones.

- Search for people during emergency search and rescue programs.

____

On the most basic level, human beings are made up of five major components:

-A body structure

-A muscle system to move the body structure

-A sensory system that receives information about the body and the surrounding environment

-A power source to activate the muscles and sensors

-A brain system that processes sensory information and tells the muscles what to do

Of course, we also have some intangible attributes, such as intelligence and morality, but on the sheer physical level, the list above about covers it.

A robot is made up of the very same components. A basic typical robot has a movable physical structure, a motor of some sort, a sensor system, a power supply and a computer “brain” that controls all of these elements. Essentially, robots are human-made versions of animal life — they are machines that replicate human and animal behavior. Many early robots were big machines, with significant brawn and little else. Old hydraulically powered robots were relegated to tasks in the 3-D category – dull, dirty and dangerous. The technological advances since the first industry implementation have completely revised the capability, performance and strategic benefits of robots. For example, by the 1980s robots transitioned from being hydraulically powered to become electrically driven units. Accuracy and performance improved.

Although the appearance and capabilities of robots vary vastly, all robots share the features of a mechanical, movable structure under some form of autonomous control. The structure of a robot is usually mostly mechanical and can be called a kinematic chain (its functionality being similar to the skeleton of the human body). The chain is formed of links (its bones), actuators (its muscles) and joints which can allow one or more degrees of freedom. Most contemporary robots use open serial chains in which each link connects the one before to the one after it. These robots are called serial robots and often resemble the human arm. Some robots, such as the Stewart platform, use closed parallel kinematic chains. Other structures, such as those that mimic the mechanical structure of humans, various animals and insects, are comparatively rare. However, the development and use of such structures in robots is an active area of research (e.g. biomechanics). Robots used as manipulators have an end effector mounted on the last link. This end effector can be anything from a welding device to a mechanical hand used to manipulate the environment.
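The idea of a serial kinematic chain can be made concrete with a small calculation. The sketch below is only an illustration, assuming a flat (planar) arm whose joints are all simple rotary joints; the function name `forward_kinematics` and the example link lengths are hypothetical and do not describe any particular robot. It adds up each link in turn to find where the end effector ends up.

```python
import math


def forward_kinematics(link_lengths, joint_angles):
    """Return the (x, y) position of every joint of a planar serial chain.

    The first point is the base at the origin and the last point is the
    end effector. Each joint angle (in radians) is measured relative to
    the link before it, as in an open serial chain.
    """
    if len(link_lengths) != len(joint_angles):
        raise ValueError("one joint angle is needed per link")

    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle                  # joint rotates relative to previous link
        x += length * math.cos(heading)   # walk along the link ("bone")
        y += length * math.sin(heading)
        points.append((x, y))
    return points


# Two-link arm: the first link points straight up, the second bends back
# to the horizontal, so the end effector sits near (0.5, 1.0).
arm = forward_kinematics([1.0, 0.5], [math.pi / 2, -math.pi / 2])
end_effector = arm[-1]
```

Each extra joint adds one degree of freedom to the chain, which is why the loop simply accumulates angles link by link.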

_

Joseph Engelberger, a pioneer in industrial robotics, once remarked, “I don’t know how to define one, but I know one when I see one!” If you consider all the different machines people call robots, you can see that it’s nearly impossible to come up with a comprehensive definition. Everybody has a different idea of what constitutes a robot.

You’ve probably heard of several of these famous robots:

-R2-D2 and C-3PO: The intelligent, speaking robots with loads of personality in the “Star Wars” movies

-Sony’s AIBO: A robotic dog that learns through human interaction

-Honda’s ASIMO: A robot that can walk on two legs like a person

-Industrial robots: Automated machines that work on assembly lines

-Lieutenant Commander Data: The almost-human android from “Star Trek”

-BattleBots: The remote control fighters from the long-running TV show

-Bomb-defusing robots

-NASA’s Mars rovers

-HAL: The ship’s computer in Stanley Kubrick’s “2001: A Space Odyssey”

-Roomba: The vacuuming robot from iRobot

-The Robot in the television series “Lost in Space”

-MINDSTORMS: LEGO’s popular robotics kit

All of these things are considered robots, at least by some people. But you could say that most people define a robot as anything that they recognize as a robot. Most roboticists (people who build robots) use a more precise definition. They specify that robots have a reprogrammable brain (a computer) that moves a body. By this definition, robots are distinct from other movable machines such as tractor-trailer trucks because of their computer elements. Even considering sophisticated onboard electronics, the driver controls most elements directly by way of various mechanical devices. Robots are distinct from ordinary computers in their physical nature — normal computers don’t have physical bodies attached to them.

_

Robots can be used in many situations for many purposes, but today many are used in dangerous environments (including inspection of radioactive materials, bomb detection and deactivation), in manufacturing processes, or where humans cannot survive (e.g., in space, underwater, in high heat, and in the cleanup and containment of hazardous materials and radiation). Robots can take any form, but some are made to resemble humans in appearance. This is claimed to help in the acceptance of robots in certain replicative behaviors which are usually performed by people. Such robots attempt to replicate walking, lifting, speech, cognition, or any other human activity. Many of today’s robots are inspired by nature, contributing to the field of bio-inspired robotics. Certain robots require user input to operate while other robots function autonomously. AI and machine learning allow autonomous robots to manipulate physical objects far more efficiently than humans, while continuously improving and optimizing their performance over time.

_

Most robots take some form of mechanical construction. A robot’s mechanical design helps it accomplish tasks in the environment it is built for. For instance, the wheels of the Mars 2020 rover are individually motorized and made of aluminum, which helps them grip the harsh terrain of the red planet. In the military, robotics is an increasingly important element that is under continual development. Notable success has already been achieved with unmanned aircraft such as drones, which are capable of taking surveillance images, and even firing missiles accurately at ground targets, without a pilot on board. There are several benefits to robotic technology in combat. Machines never get sick. They don’t turn a blind eye. They don’t hide under trees when it rains. They don’t talk to their friends. And machines do not know fear.

Can a robot be afraid?

No. A robot does not experience emotions like a human. However, a programmer could program a robot to exhibit human-like emotions that are pre-programmed conditions. For example, a robot with heat sensors could exhibit fear if its temperature sensor exceeded 100-degrees.
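The heat-sensor example can be written out as a simple pre-programmed condition. This is a minimal sketch: the threshold of 100 degrees comes from the example above, while the names `emotional_state` and `react` and the two behaviours are hypothetical choices made for illustration.

```python
FEAR_THRESHOLD_DEGREES = 100.0  # threshold taken from the example in the text


def emotional_state(temperature_degrees):
    """Map a temperature reading to a pre-programmed 'emotion' label."""
    if temperature_degrees > FEAR_THRESHOLD_DEGREES:
        return "afraid"
    return "calm"


def react(temperature_degrees):
    """Choose a behaviour based on the programmed emotional state."""
    if emotional_state(temperature_degrees) == "afraid":
        return "retreat from heat source"
    return "continue task"
```

The robot is not feeling anything here; the "fear" is just a label attached to a sensor reading crossing a limit the programmer chose.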

_

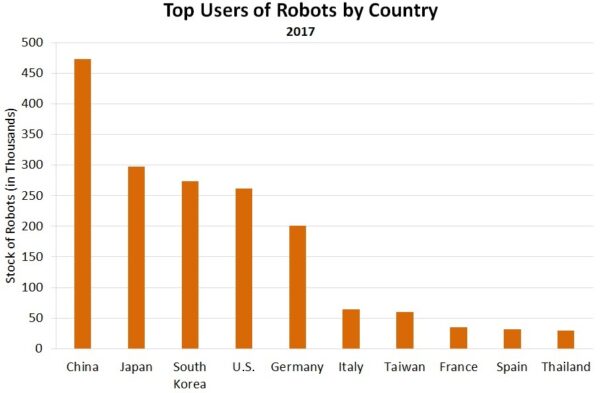

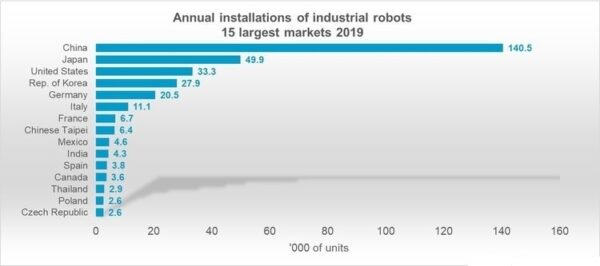

Robots in world:

Roughly half of all the robots in the world are in Asia, 32% are in Europe, 16% in North America, 1% in Australasia and 1% in Africa. 40% of all the robots in the world are in Japan, making Japan the country with the highest number of robots. A couple of decades ago, 90% of robots were used in car manufacturing, typically on assembly lines doing a variety of repetitive tasks. Today only 50% are in automobile plants, with the other half spread out among other factories, laboratories, warehouses, energy plants, hospitals, and many other industries. Robots are used for assembling products, handling dangerous materials, spray-painting, cutting and polishing, and inspecting products. The number of robots used in tasks as diverse as cleaning sewers, detecting bombs and performing intricate surgery is increasing steadily, and will continue to grow in coming years.

_

Robot intelligence:

Even with primitive intelligence, robots have demonstrated ability to generate good gains in factory productivity, efficiency and quality. Beyond that, some of the “smartest” robots are not in manufacturing; they are used as space explorers, remotely operated surgeons and even pets – like Sony’s AIBO mechanical dog. In some ways, some of these other applications show what might be possible on production floors if manufacturers realize that industrial robots don’t have to be bolted to the floor, or constrained by the limitations of yesterday’s machinery concepts.

With the rapidly increasing power of the microprocessor and artificial intelligence techniques, robots have dramatically increased their potential as flexible automation tools. The new surge of robotics is in applications demanding advanced intelligence. Robotic technology is converging with a wide variety of complementary technologies – machine vision, force sensing (touch), speech recognition and advanced mechanics. This results in exciting new levels of functionality for jobs that were never before considered practical for robots.

The introduction of robots with integrated vision and touch dramatically changes the speed and efficiency of new production and delivery systems. Robots have become so accurate that they can be applied where manual operations are no longer a viable option. Semiconductor manufacturing is one example, where a consistent high level of throughput and quality cannot be achieved with humans and simple mechanization. In addition, significant gains are achieved through enabling rapid product changeover and evolution that can’t be matched with conventional hard tooling.

Robotic Assistance:

A key robotics growth arena is Intelligent Assist Devices (IADs) – operators manipulate a robot as though it were a bionic extension of their own limbs with increased reach and strength. This is robotics technology – not a replacement for humans or robots, but rather a new class of ergonomic assist products that help human partners in a wide variety of ways, including power assist, motion guidance, line tracking and process automation. IADs use robotics technology to help production people handle parts and payloads – more, heavier, better, faster, with less strain. Using a human-machine interface, the operator and IAD work in tandem to optimize lifting, guiding and positioning movements. Sensors, computer power and control algorithms translate the operator’s hand movements into superhuman lifting power.
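One very simplified way to picture power assist is as force amplification with a safety limit. The sketch below assumes a plain proportional rule; the function name `assist_force`, the gain of 10 and the 500-newton limit are all made-up illustrative values, and real assist devices use far more elaborate control algorithms.

```python
def assist_force(operator_force_n, gain=10.0, max_force_n=500.0):
    """Amplify the operator's hand force, clamped to a safe maximum.

    operator_force_n: force measured at the handle, in newtons
                      (negative values mean pushing the other way).
    """
    amplified = operator_force_n * gain
    # Never command more than the device is allowed to deliver.
    return max(-max_force_n, min(max_force_n, amplified))
```

With these assumed values, a light 20 N push becomes a 200 N lift, while a hard shove is capped at the limit instead of being amplified without bound.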

______

______

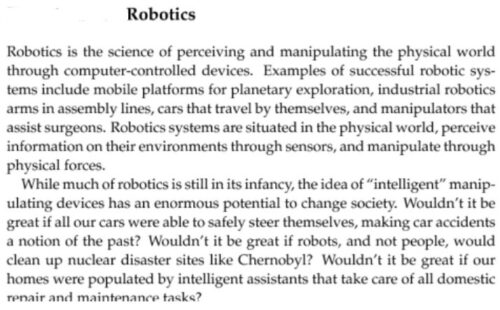

Robotics:

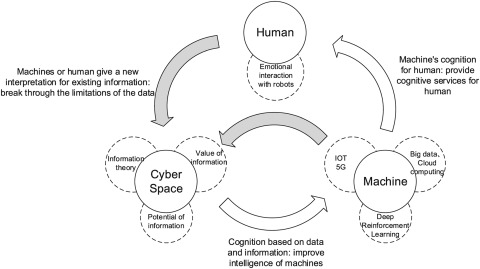

Robotics is an interdisciplinary branch of computer science and engineering. Robotics involves design, construction, operation, and use of robots. The goal of robotics is to design machines that can help and assist humans. Robotics requires a working knowledge of electronics, mechanics and software, and is usually accompanied by a large working knowledge of many subjects. Robotics integrates fields of mechanical engineering, electrical engineering, information engineering, mechatronics, electronics, bioengineering, computer engineering, control engineering, software engineering, mathematics, etc. A person working in the field is a roboticist. Robotics develops machines that can substitute for humans and replicate human actions. A robot is a unit that implements this interaction with the physical world based on sensors, actuators, and information processing. Industry is a key application area for robots, particularly within Industry 4.0, where industrial robots are used.

_

Robotics is the intersection of science, engineering and technology that produces machines, called robots, that substitute for (or replicate) human actions. These technologies deal with automated machines that can take the place of humans in dangerous environments or manufacturing processes, or resemble humans in appearance, behavior, or cognition. The field of robotics has greatly advanced with several new general technological achievements. One is the rise of big data, which offers more opportunity to build programming capability into robotic systems. Another is the use of new kinds of sensors and connected devices to monitor environmental aspects like temperature, air pressure, light, motion and more. All of this serves robotics and the generation of more complex and sophisticated robots for many uses, including manufacturing, health and safety, and human assistance. The field of robotics also intersects with issues around artificial intelligence. Since robots are physically discrete units, they are perceived to have their own intelligence, albeit one limited by their programming and capabilities.

_

From the viewpoint of biology, human beings can be distinguished from other species by three distinctive capabilities: bipedal walking, the use of tools, and the invention and use of language. The first two capabilities have been tackled by robotics researchers as challenges in locomotion and manipulation, which are the main issues in robotics. The third capability has been regarded as belonging not to robotics but to linguistics, and seems far removed from robotics. However, recent research driven by the idea of “embodiment” in behavior-based robotics, proposed by Rod Brooks at the MIT AI laboratory in the late 1980s, has raised more conceptual issues such as body scheme, body image, self, consciousness, theory of mind, communication, and the emergence of language. Although these issues have been addressed in existing disciplines such as brain science, neuroscience, cognitive science, and developmental psychology, robotics may shed new light on them by constructing artifacts similar to us. Many of today’s robots are inspired by nature, contributing to the field of bio-inspired robotics. These robots have also created a newer branch of robotics: soft robotics. Robotics thus covers a broad range of disciplines, which makes it very difficult to find comprehensive textbooks on the area.

_

Machine learning in robotics:

Machine learning and robotics intersect in a field known as robot learning. Robot learning is the study of techniques that enable a robot to acquire new knowledge or skills through machine learning algorithms. Some applications that have been explored by robot learning include grasping objects, object categorization and even linguistic interaction with a human peer. Learning can happen through self-exploration or via guidance from a human operator. To learn, intelligent robots must accumulate facts through human input or sensors. Then, the robot’s processing unit will compare the newly acquired data to previously stored information and predict the best course of action based on the data it has acquired. However, it’s important to understand that a robot can only solve problems that it is built to solve. It does not have general analytical abilities. Robotic process automation automates repetitive and rules-based tasks that rely on digital information.
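The step of comparing new data with stored information can be illustrated with one of the simplest learning methods, nearest-neighbour lookup. This is a sketch under stated assumptions, not how any specific robot learns: the class `ExperienceMemory`, the two-number sensor readings (clearance on the left and on the right) and the action names are all hypothetical.

```python
import math


class ExperienceMemory:
    """Stores (sensor reading, action) pairs and reuses the closest match."""

    def __init__(self):
        self._examples = []  # list of (reading_tuple, action) pairs

    def remember(self, sensor_reading, action):
        """Accumulate a fact, e.g. from a human demonstration."""
        self._examples.append((tuple(sensor_reading), action))

    def best_action(self, sensor_reading):
        """Pick the action stored with the most similar past reading."""
        if not self._examples:
            raise ValueError("no stored experience to compare against")
        query = tuple(sensor_reading)
        nearest = min(self._examples, key=lambda ex: math.dist(ex[0], query))
        return nearest[1]


memory = ExperienceMemory()
memory.remember((0.1, 0.9), "turn right")   # little clearance on the left
memory.remember((0.9, 0.1), "turn left")    # little clearance on the right
memory.remember((0.9, 0.9), "go forward")   # clear on both sides

action = memory.best_action((0.2, 0.8))     # most similar to the first example
```

The sketch also shows the limitation noted above: the robot can only answer with actions it has already stored, so it has no general analytical ability.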

_

There are two emerging technologies that will have a dramatic impact on future robots — their form, shape, function and performance — and change the way we think about robotics.

First, advances in Micro Electronic Mechanical Systems (MEMS) will enable inexpensive and distributed sensing and actuation. Just as nature provides complex redundant pathways in critical processes (e.g., synthesis of biomolecules and cell cycle control) to combat the inherent noisiness in the underlying processes and the uncertainty in the environment, we will be able to design and build robots that can potentially deal with uncertainty and adapt to unstructured environments.

Second, advances in biomaterials and biotechnology will enable new materials that will allow us to build softer and friendlier robots, robots that can be implanted in humans, and robots that can be used to manipulate, control, and repair biomolecules, cells, and tissue.

Realistically, we are far from realizing this tremendous potential. While some of the obstacles are technological in flavor (for example, lack of high strength to weight ratio actuators, or lack of inexpensive three-dimensional vision systems), there are several obstacles in robotics that stem from our lack of understanding of the basic underlying problems and the lack of well-developed mathematical tools to model and solve these problems.

_

Robotics Fields:

At present, the number of robotics fields is nearly impossible to count, since robot technology is being applied in so many domains that nobody knows how many there are or which they are. Such rapid growth cannot be fully tracked, so we will discuss the most evident fields of application:

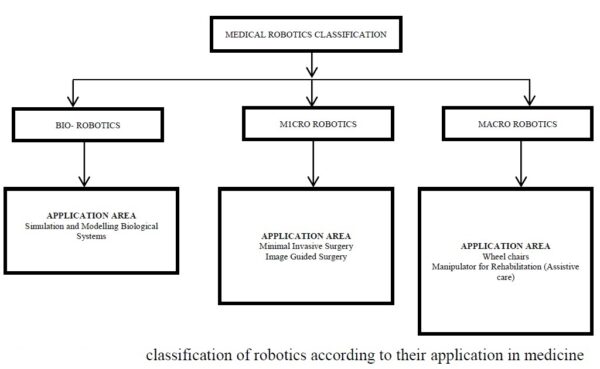

- Healthcare Robotics. Robots used for patient monitoring and evaluation, delivery of medical supplies, and assisting healthcare professionals in specialized roles, as well as collaborative robots and robots used for prevention.

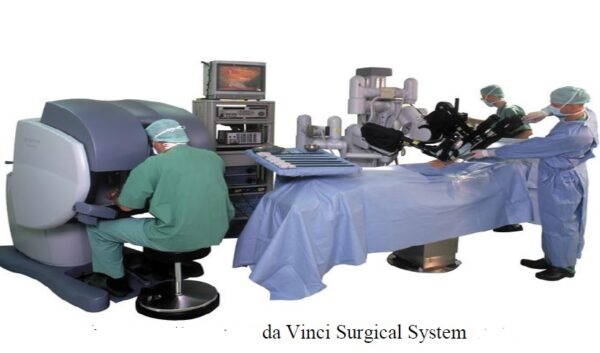

- Medical and Surgery Robotics. Devices used in hospitals, mostly to assist in surgery, since they allow great precision and minimally invasive procedures.

- Body-Machine Interfaces. Help amputees through feed-forward controls that detect their intention to move, and provide sensory feedback that converts digital readings into sensations.

- Telepresence Robotics. Act as your stand-in at remote locations; they are meant for use in hospitals and by business travellers, with the aim of saving both time and money.

- Cyborgs, Exoskeletons, and Wearable Robotics. Allow users to augment their physical strength, helping those with physical disabilities to walk and climb.

- Humanoids. Combine artificial intelligence and machine learning technologies to give robots human-like expressions and reactions.

- Industrial. Arms, grippers and all of the warehouse robotics used to automate industrial processes. They are used both to save money and to speed up production.

- Housekeeping. Robot cleaners for floors, gardens and pools.

- Collaborative Robots. Recently the market has opened to domotics (home automation) and to automation coordinators for other home and public spaces (e.g. shops).

- Military Robotics. Drones, navigators, researchers, warriors and all of the possible robotics extensions which are to be applied in spying operations and on battlefields.

- Underwater, Flying and Self-Driving Machines. All the robotics that deals with self-piloting in all circumstances, on land, in the air and in water.

- Space Robots. All of the Robotics used in Space missions, therefore highly resistant, expert in exploration and material data collection.

- Entertainment. Toys, Games and Interactive Robotics for children.

- Art. The most creative robotics, which does not aim at a specific function but follows criteria of beauty and conceptual inspiration.

- Environmental and Alternately powered robots use sources like solar, wind and wave energy to be powered indefinitely and open up applications in areas that are off-grid.

- Swarm and Microbots allow emergency responders to explore environments that are too small or too dangerous for humans or larger robots; deploying them in “swarms” compensates for their relatively limited computational ability.

- Robotic networks emerge and allow robots to access databases, share information and learn from one another’s experience.

- Modular Robotics. Robots that can arrange themselves in pre-set patterns to accomplish specific tasks.

_____

_____

Robot:

A robot is a machine—especially one programmable by a computer—capable of carrying out a complex series of actions automatically. A robot can be guided by an external control device, or the control may be embedded within. Robots may be constructed to evoke human form, but most robots are task-performing machines, designed with an emphasis on stark functionality, rather than expressive aesthetics.

_

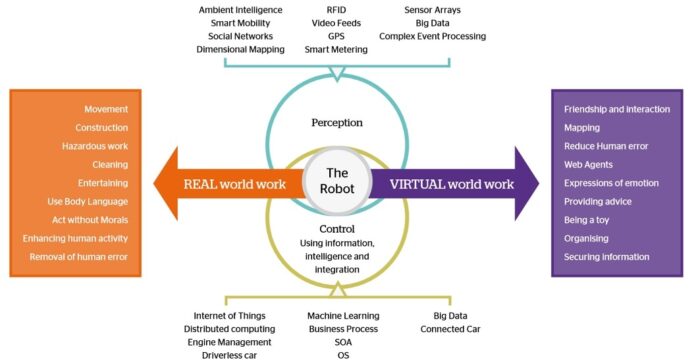

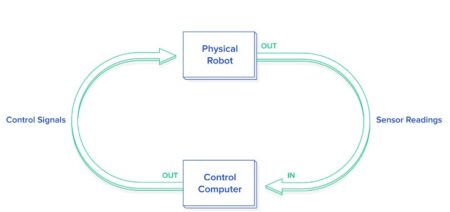

Essentially, there are three problems you need to solve if you want to build a robot: 1) sense things (detect objects in the world), 2) think about those things (in an “intelligent” way), and then 3) act on them (move or otherwise physically respond to the things it detects and thinks about). In psychology (the science of human behavior) and in robotics, these things are called perception (sensing), cognition (thinking), and action (moving). Some robots have only one or two. For example, robot welding arms in factories are mostly about action (though they may have sensors), while robot vacuum cleaners are mostly about perception and action and have no cognition to speak of. There’s been a long and lively debate over whether robots really need cognition, but most engineers would agree that a machine needs both perception and action to qualify as a robot.
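The three steps of perception, cognition and action are often arranged as a repeating loop. The sketch below is a toy illustration, assuming a robot that moves along a straight line toward a wall; the functions `sense`, `think`, `act` and `run`, the dictionary describing the world, and the distances used are all hypothetical.

```python
def sense(world, position):
    """Perception: measure the distance to the wall ahead."""
    return world["wall_at"] - position


def think(distance_to_wall, safe_distance=1.0):
    """Cognition: decide what to do from the sensed distance."""
    return "forward" if distance_to_wall > safe_distance else "stop"


def act(position, command, step=0.5):
    """Action: move the robot according to the chosen command."""
    return position + step if command == "forward" else position


def run(world, position=0.0, max_cycles=20):
    """Repeat sense, think, act until the robot decides to stop."""
    for _ in range(max_cycles):
        command = think(sense(world, position))
        if command == "stop":
            break
        position = act(position, command)
    return position


# The robot advances in half-metre steps and halts once the wall
# is no more than the safe distance away.
final_position = run({"wall_at": 3.0})
```

A robot with very little cognition, like the vacuum cleaner mentioned above, would have a `think` step about as simple as this one.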

_

Is robot software considered robotics?

The word robot can refer to both physical robots and virtual software agents, but the latter are usually referred to as bots. A software robot (bot) is a common type of computer program that carries out tasks autonomously, such as a chatbot or a web crawler. However, because software robots only exist on the internet and originate within a computer, they are not considered robots. In order to be considered a robot, a device must have a physical form, such as a body or a chassis.

A bot — short for robot and also called an internet bot — is a computer program that operates as an agent for a user or other program or to simulate a human activity. Bots are normally used to automate certain tasks, meaning they can run without specific instructions from humans. An organization or individual can use a bot to replace a repetitive task that a human would otherwise have to perform. Bots are also much faster at these tasks than humans. Although bots can carry out useful functions, they can also be malicious and come in the form of malware.

Why does a website ask if I’m a robot?

Software bots are written to perform common tasks automatically, such as submitting a form to post advertising on a website. To protect against these bots, a website presents a CAPTCHA, asking whether you’re a robot, to determine if you’re a human or a bot. This check works by analyzing mouse movements and looking for any other irregularities as the user ticks the “I’m not a robot” check box.

_

Is a robot a computer?

No. A robot is considered a machine and not a computer. The computer gives the machine its intelligence and its ability to perform tasks. A robot is a machine capable of manipulating or navigating its environment, and a computer is not. For example, a robot at a car assembly plant assists in building a car by grabbing parts and welding them onto a car frame. A computer helps track and control the assembly, but cannot make any physical changes to a car.

_

There is no consensus on which machines qualify as robots, but there is general agreement among experts and the public that robots tend to possess some or all of the following abilities and functions: accept electronic programming, process data or physical perceptions electronically, operate autonomously to some degree, move around, operate physical parts of themselves or physical processes, sense and manipulate their environment, and exhibit intelligent behavior, especially behavior which mimics humans or other animals. Closely related to the concept of a robot is the field of Synthetic Biology, which studies entities whose nature is more comparable to beings than to machines.

_

Robots can be autonomous or semi-autonomous and range from humanoids such as Honda’s Advanced Step in Innovative Mobility (ASIMO) and TOSY’s TOSY Ping Pong Playing Robot (TOPIO) to industrial robots, medical operating robots, patient assist robots, dog therapy robots, collectively programmed swarm robots, UAV drones such as General Atomics MQ-1 Predator, and even microscopic nano robots. By mimicking a lifelike appearance or automating movements, a robot may convey a sense of intelligence or thought of its own. Autonomous things are expected to proliferate in the future, with home robotics and the autonomous car as some of the main drivers.

_

Undeterred by its somewhat chilling origins (or perhaps ignorant of them), technologists of the 1950s appropriated the term robot to refer to machines controlled by programs. A robot is “a reprogrammable multifunctional device designed to manipulate and/or transport material through variable programmed motions for the performance of a variety of tasks”. The term robotics, which Asimov claims he coined in 1942, refers to “a science or art involving both artificial intelligence (to reason) and mechanical engineering (to perform physical acts suggested by reason)”.

As currently defined, robots exhibit three key elements:

- programmability, implying computational or symbol-manipulative capabilities that a designer can combine as desired (a robot is a computer);

- mechanical capability, enabling it to act on its environment rather than merely function as a data processing or computational device (a robot is a machine); and

- flexibility, in that it can operate using a range of programs and manipulate and transport materials in a variety of ways.

We can conceive of a robot, therefore, as either a computer-enhanced machine or as a computer with sophisticated input/output devices. Its computing capabilities enable it to use its motor devices to respond to external stimuli, which it detects with its sensory devices. The responses are more complex than would be possible using mechanical, electromechanical, and/or electronic components alone.

_

According to The Robot Institute of America (1979):

“Robot is a reprogrammable, multifunctional manipulator designed to move materials, parts, tools, or specialized devices through various programmed motions for the performance of a variety of tasks.”

According to the Webster dictionary:

“Robot is automatic device that performs functions normally ascribed to humans or a machine in the form of a human (Webster, 1993).”

There is no single agreed definition of a robot although all definitions include an outcome of a task that is completed without human intervention. Whilst some definitions require the task to be completed by a physical machine that moves and responds to its environment, other definitions use the term robot in connection with tasks completed by software, without physical embodiment.

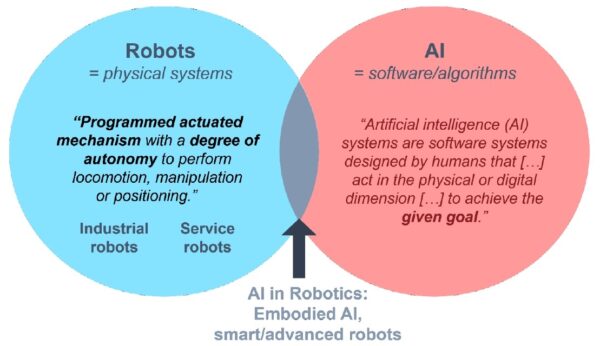

The IFR supports the definition of a robot given in the International Organization for Standardization (ISO) standard ISO 8373:2021:

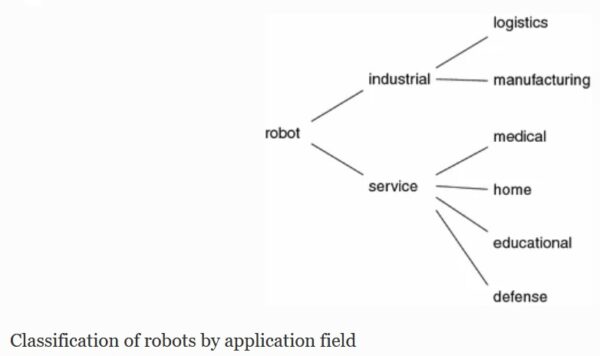

Industrial Robot:

- An automatically controlled, reprogrammable, multipurpose manipulator programmable in three or more axes, which may be either fixed in place or mobile for use in industrial automation applications.

- Reprogrammable: whose programmed motions or auxiliary functions may be changed without physical alterations;

- Multipurpose: capable of being adapted to a different application with physical alterations;

- Physical alterations: alteration of the mechanical structure or control system except for changes of programming cassettes, ROMs, etc.

- Axis: direction used to specify the robot motion in a linear or rotary mode

Service Robot:

- A service robot is a robot that performs useful tasks for humans or equipment, excluding industrial automation applications.

Note: The classification of a robot into industrial robot or service robot is done according to its intended application.

- A personal service robot or a service robot for personal use is a service robot used for a non-commercial task, usually by lay persons. Examples are domestic servant robot, automated wheelchair, personal mobility assist robot, and pet exercising robot.

- A professional service robot or a service robot for professional use is a service robot used for a commercial task, usually operated by a properly trained operator. Examples are cleaning robot for public places, delivery robot in offices or hospitals, fire-fighting robot, rehabilitation robot and surgery robot in hospitals. In this context an operator is a person designated to start, monitor and stop the intended operation of a robot or a robot system.
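The classification rules above depend only on the intended application, so they can be written as a small decision function. The Python sketch below is an illustration of the definitions as summarised here, not part of the ISO standard; the names `RobotClass` and `classify` and the two boolean parameters are assumptions introduced for the example.

```python
from enum import Enum


class RobotClass(Enum):
    INDUSTRIAL = "industrial robot"
    PERSONAL_SERVICE = "personal service robot"
    PROFESSIONAL_SERVICE = "professional service robot"


def classify(industrial_automation: bool, commercial_task: bool) -> RobotClass:
    """Classify a robot by its intended application, not by its hardware.

    industrial_automation: True if the robot is used in industrial automation.
    commercial_task: True if the task is commercial (only consulted for
    service robots, i.e. when industrial_automation is False).
    """
    if industrial_automation:
        return RobotClass.INDUSTRIAL
    if commercial_task:
        return RobotClass.PROFESSIONAL_SERVICE
    return RobotClass.PERSONAL_SERVICE


if __name__ == "__main__":
    # Examples taken from the lists above.
    print(classify(False, False).value)  # e.g. an automated wheelchair
    print(classify(False, True).value)   # e.g. a hospital delivery robot
    print(classify(True, True).value)    # e.g. a welding arm on an assembly line
```

Note that the same physical machine could land in different classes: the function never looks at the mechanism, only at what it is used for.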

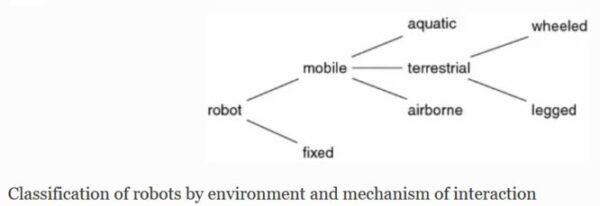

Mobile robot:

- Robot able to travel under its own control

- A mobile robot can be a mobile platform with or without manipulators. In addition to autonomous operation, a mobile robot can have means to be remotely controlled.

_

A robot is a reprogrammable, multifunctional machine designed to manipulate materials, parts, tools, or specialized devices through variable programmed motions for the performance of a variety of tasks. It can conveniently be used for a variety of industrial tasks. Today 90% of all robots in use are found in factories, where they are referred to as industrial robots. Robots are slowly finding their way into warehouses, laboratories, research and exploration sites, power plants, hospitals, undersea, and even outer space. A few of the advantages that make robots attractive for industrial use are as follows:

- Robots never get sick or need to rest, so they can work 24 hours a day, 7 days a week.

- When the required task would be dangerous for a person, they can do the work instead.

- Robots do not get bored, so work that is repetitive and unrewarding is no problem for a robot.

_

With the merging of computers, telecommunications networks, robotics, and distributed systems software, and the multiorganizational application of the hybrid technology, the distinction between computers and robots may become increasingly arbitrary. In some cases it would be more convenient to conceive of a principal intelligence with dispersed sensors and effectors, each with subsidiary intelligence (a robotics-enhanced computer system). In others, it would be more realistic to think in terms of multiple devices, each with appropriate sensory, processing, and motor capabilities, all subjected to some form of coordination (an integrated multi-robot system). The key difference robotics brings is the complexity and persistence that artifact behaviour achieves, independent of human involvement.

_

Many industrial robots resemble humans in some ways. In science fiction, the tendency has been even more pronounced, and readers encounter humanoid robots, humaniform robots, and androids. In fiction, as in life, it appears that a robot needs to exhibit only a few human-like characteristics to be treated as if it were human. For example, the relationships between humans and robots in many of Asimov’s stories seem almost intimate, and audiences worldwide reacted warmly to the “personality” of the computer HAL in 2001: A Space Odyssey, and to the gibbering rubbish-bin R2-D2 in the Star Wars series.

The tendency to conceive of robots in humankind’s own image may gradually yield to utilitarian considerations, since artifacts can be readily designed to transcend humans’ puny sensory and motor capabilities. Frequently the disadvantages and risks involved in incorporating sensory, processing, and motor apparatus within a single housing clearly outweigh the advantages. Many robots will therefore be anything but humanoid in form. They may increasingly comprise powerful processing capabilities and associated memories in a safe and stable location, communicating with one or more sensory and motor devices (supported by limited computing capabilities and memory) at or near the location(s) where the robot performs its functions.

_

Types of Robots:

Mechanical robots come in all shapes and sizes to efficiently carry out the task for which they are designed. All robots vary in design, functionality and degree of autonomy. From the 0.2 millimeter-long “RoboBee” to the 200 meter-long robotic shipping vessel “Vindskip,” robots are emerging to carry out tasks that humans simply can’t. Generally, there are five types of robots:

-1) Pre-Programmed Robots

Pre-programmed robots operate in a controlled environment where they do simple, monotonous tasks. An example of a pre-programmed robot would be a mechanical arm on an automotive assembly line. The arm serves one function — to weld a door on, to insert a certain part into the engine, etc. — and its job is to perform that task longer, faster and more efficiently than a human.

Pre-programmed robots are ones that have to be told ahead of time what to do, and then they simply execute that program. They cannot change their behavior while they are working, and no human is guiding their actions.
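The idea of "told ahead of time what to do" can be sketched in a few lines of Python. The program below is a fixed list of moves that is replayed exactly, with no sensor input and no decisions along the way. The `run_program` function and the `WELD_PROGRAM` move list are hypothetical and only illustrate the concept; real industrial controllers use their own programming languages.

```python
def run_program(program, start=(0, 0)):
    """Replay a fixed list of (dx, dy) moves and return every position visited.

    Nothing is sensed and nothing is decided: the same program always
    produces the same path.
    """
    x, y = start
    path = [(x, y)]
    for dx, dy in program:
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


# Hypothetical tool path: move along a seam, step up, then return home.
WELD_PROGRAM = [(1, 0), (1, 0), (0, 1), (-2, -1)]

if __name__ == "__main__":
    print(run_program(WELD_PROGRAM))
```

Because the path depends only on the program and the start position, the robot repeats it identically every time, which is exactly what makes such machines both precise and unable to cope with a part that is out of place.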

In industry:

There is probably no other industry that has been as revolutionized by industrial robots as the automobile industry. There are pre-programmed robotic arms so large that they are able to handle entire automobiles as if they were toy cars. There are also pre-programmed robots that can make the tiniest weld or spray paint with aesthetic precision. Manufacturing has never been the same since robots took over jobs that humans used to do.

In medicine:

Pre-programmed robots are particularly good for tasks that require a very high level of accuracy at a very fine level. For example, if we want to use radiation to kill tumors in cancer patients, we need to be able to deliver the radiation precisely to the tumor. If we miss, we may end up killing healthy tissue near the tumor instead of the tumor itself. The CyberKnife radiosurgery machine is a pre-programmed robot designed to deliver very precise doses of radiation to very precise locations within the human body.

Another common use of pre-programmed robots is as training simulators, such as the S575 Noelle. These robots are constructed to present medical students with various scenarios that they must diagnose and treat. The robot is pre-programmed with a possible scenario, and its responses to the students’ treatment are also pre-programmed. So you could not, for example, try an experimental treatment because the robot would not know how to respond. You must try one of the treatments that the robot is programmed to respond to.

-2) Humanoid Robots

Humanoid robots are robots that look like humans and/or mimic human behavior. These robots usually perform human-like activities (like running, jumping and carrying objects), and are sometimes designed to look like us, even having human faces and expressions. Two of the most prominent examples of humanoid robots are Hanson Robotics’ Sophia and Boston Dynamics’ Atlas.

-3) Autonomous Robots

Autonomous robots operate independently of human operators. These robots are usually designed to carry out tasks in open environments that do not require human supervision. They are distinctive because they use sensors to perceive the world around them, and then employ decision-making structures (usually a computer) to take the optimal next step based on their data and mission. Historic examples include space probes. Modern examples include self-driving cars. A household example of an autonomous robot is the Roomba vacuum cleaner, which uses sensors to roam freely throughout a home.

Autonomous medical robots are able to operate intelligently and adapt to their environment without direct human supervision. In particular, they should be able to perform their duties in an environment that might be changing, and without a person sitting at a bank of controls directing their activities.

In military:

The U.S. Defense Department funds cutting-edge research through DARPA. Several times DARPA has offered a $2 million prize to the team that developed the fastest autonomous robotic car that could navigate a cross-country course.

In home:

In the home consumer market, iRobot has already sold millions of robots to everyday consumers. The most popular is the Roomba, which is an autonomous vacuum cleaner.

In medicine:

Delivery services:

One of the things that nurses in a hospital spend a lot of time doing is giving medications to patients. This requires the nurses to keep track of which patient gets which medicines and what the dosages are. It also requires them to keep track of when the patients have received their medications. Finally, this information must be transmitted across multiple shifts of nurses without error. The TUG automated delivery system is able to deliver medications, both scheduled and on-demand, to patients and keep track of all the information about the delivery of these medications. It can also deliver meals and other items, such as extra pillows and blankets. Using a map of the hospital it has stored, it can navigate hallways and elevators to get to whatever room it is summoned or sent to.
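How a robot might get from one room to another using a stored map can be illustrated with a classic technique, breadth-first search on a grid. The Python sketch below is not the TUG system's actual navigation method, which is not described here; the floor plan `HOSPITAL_MAP`, the symbols (`#` for walls, `S` for the start, `G` for the goal) and the function names are all assumptions made for the example.

```python
from collections import deque

# Hypothetical floor plan: '#' is a wall, '.' is free hallway,
# 'S' is the pharmacy (start) and 'G' is the patient's room (goal).
HOSPITAL_MAP = [
    "#######",
    "#S....#",
    "#.###.#",
    "#...#G#",
    "#######",
]


def find(grid, symbol):
    """Return the (row, col) of the first cell containing symbol."""
    for r, row in enumerate(grid):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} is not on the map")


def shortest_path(grid, start, goal):
    """Breadth-first search over free cells.

    Returns the list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[nr])
            if inside and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


if __name__ == "__main__":
    route = shortest_path(HOSPITAL_MAP, find(HOSPITAL_MAP, "S"), find(HOSPITAL_MAP, "G"))
    print(route)
```

Breadth-first search explores the map one step at a time in all directions, so the first route it finds to the goal uses the fewest moves. A real delivery robot would also have to handle elevators, closed doors and people in the hallway, which a static grid like this ignores.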

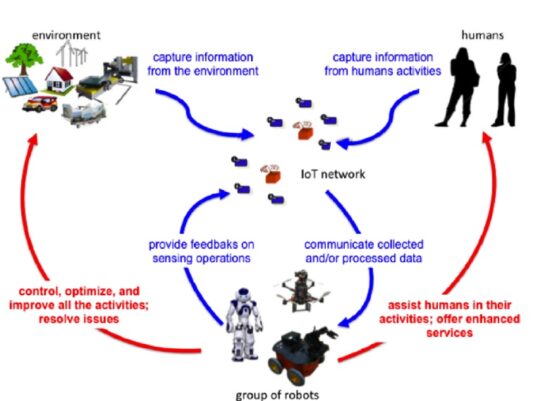

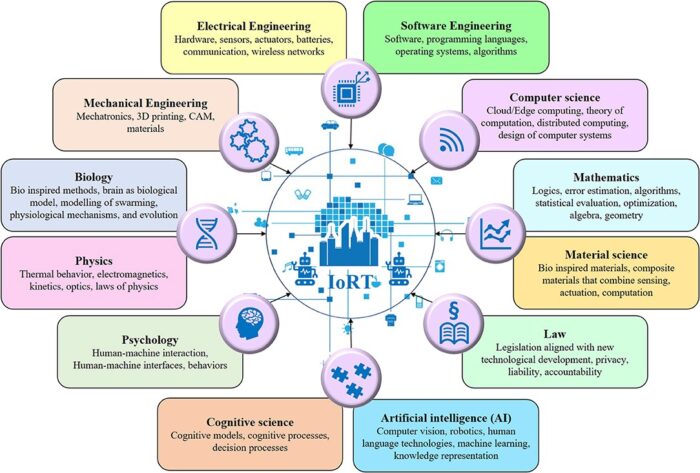

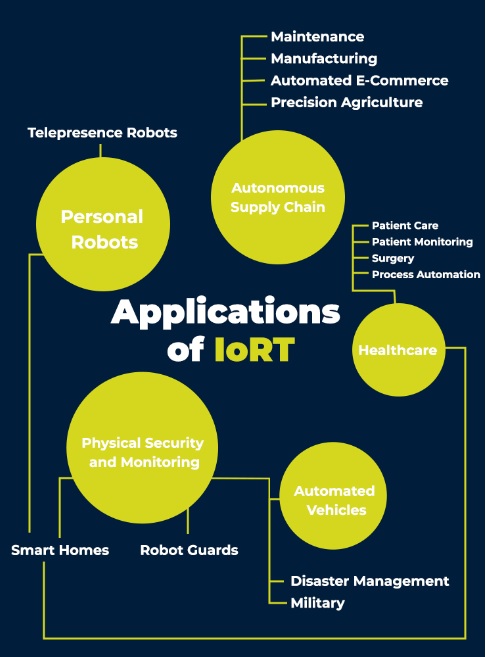

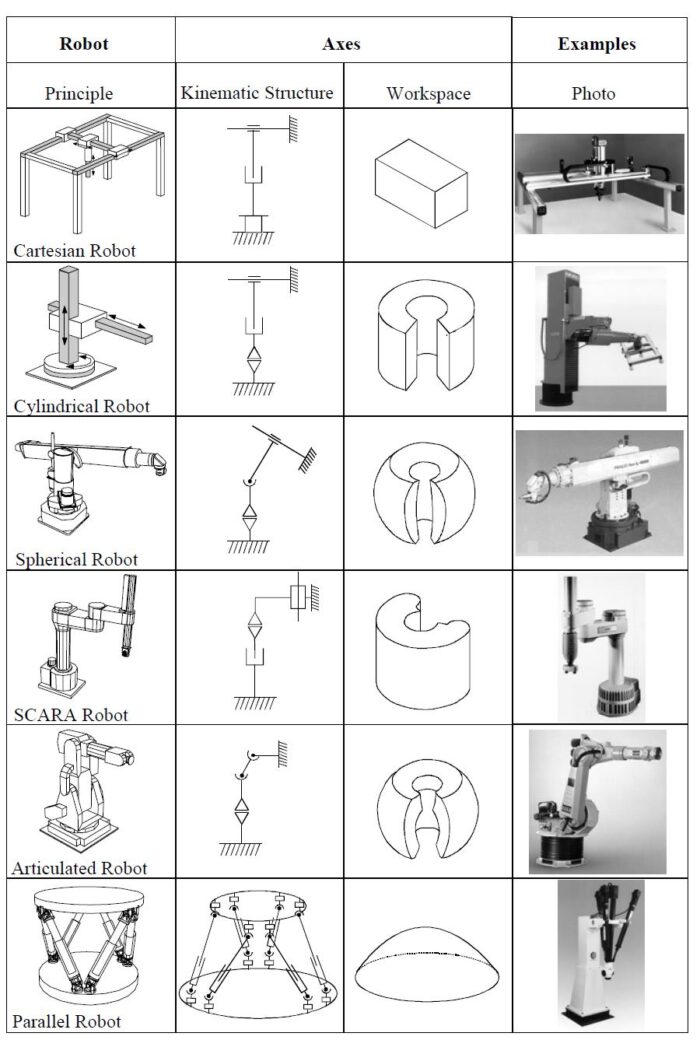

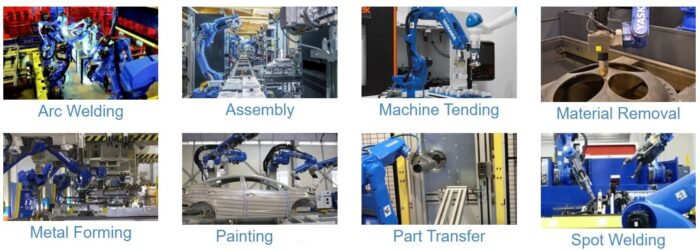

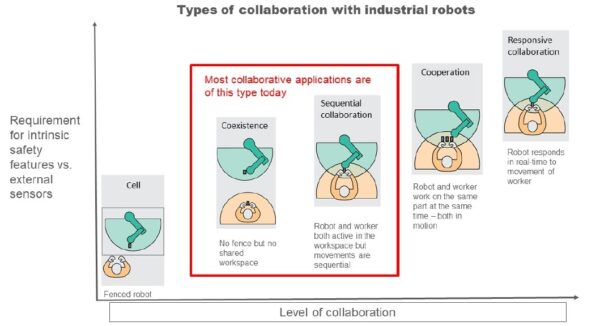

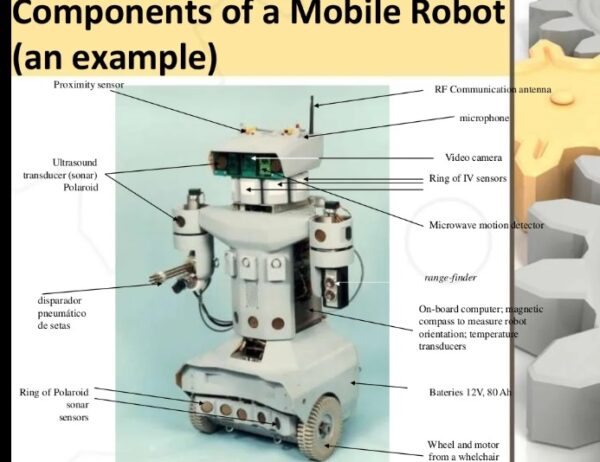

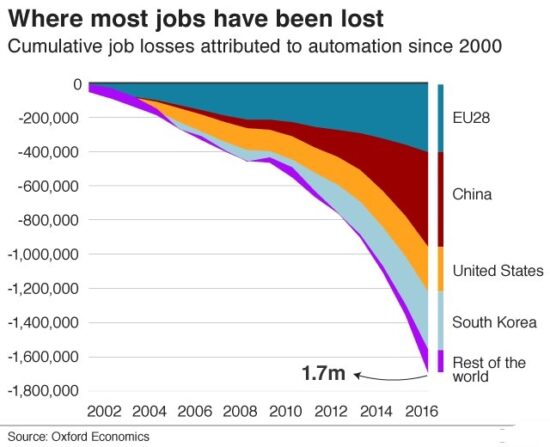

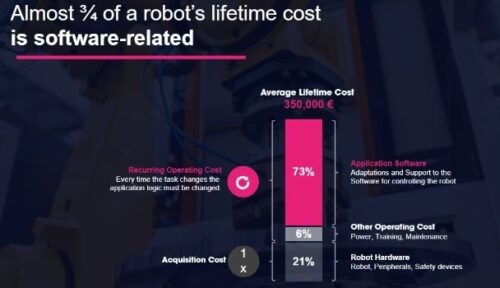

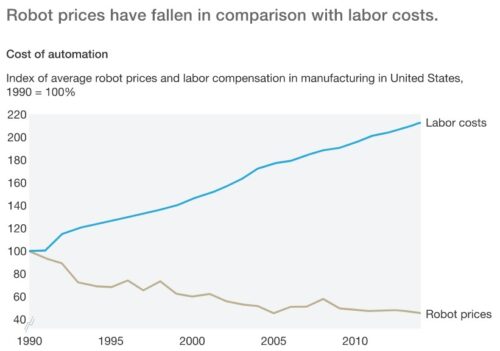

Delicate treatments: