Dr Rajiv Desai

An Educational Blog

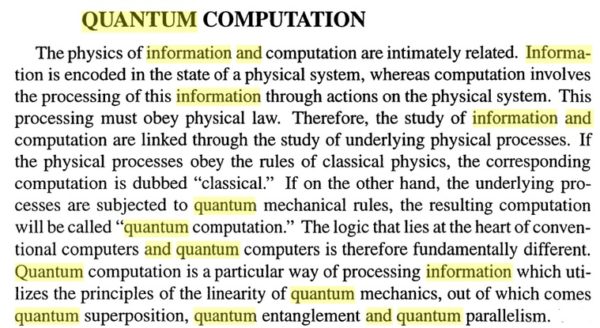

QUANTUM COMPUTING

Quantum Computing:

_

Note:

As a doctor myself, I am on duty treating patients during the Coronavirus pandemic and national lockdown. Whatever spare time I have, I use for providing education through my website.

Can Quantum Computing help us to respond to the Coronavirus?

Quantum computing can be applied to work toward vaccines and therapies as well as epidemiology, supply distribution, hospital logistics, and diagnostics. By harnessing the properties of quantum physics, quantum computers have the potential to sort through a vast number of possibilities nearly instantaneously and come up with a probable solution. How? Read the article.

____

____

Prologue:

Quantum theory is one of the most successful theories that have influenced the course of scientific progress during the twentieth century. It has presented a new line of scientific thought, predicted entirely inconceivable situations and influenced several domains of modern technologies. More than 50 years after its inception, quantum theory was married with computer science, another great intellectual triumph of the 20th century, and the new subject of quantum computation was born. Quantum computing merges two great scientific revolutions of the 20th century: quantum physics and computer science.

All ways of expressing information (i.e. voice, video, text, data) use a physical system; for example, spoken words are conveyed by air pressure fluctuations. Information cannot exist without physical representation. Information, the 1’s and 0’s of classical computers, must inevitably be recorded by some physical system – be it paper or silicon. All matter is composed of atoms – nuclei and electrons – and the interactions and time evolution of atoms are governed by the laws of quantum mechanics. Without our quantum understanding of the solid state and the band theory of metals, insulators and semiconductors, the whole of the semiconductor industry with its transistors and integrated circuits – and hence the computer – could not have developed. Quantum physics is the theoretical basis of the transistor, the laser, and other technologies which enabled the computing revolution. But on the algorithmic level, today’s computing machinery still operates on “classical” Boolean logic. Quantum computing is the design of hardware and software that replaces Boolean logic by quantum law at the algorithmic level, i.e. using superposition and entanglement to process information. At the bottom everything is quantum mechanical, and we can certainly envisage storing information on single atoms or electrons. However, these microscopic objects do not obey Newton’s Laws of classical mechanics: instead, they evolve and interact according to the Schrödinger equation, the ‘Newton’s Law’ of quantum mechanics.

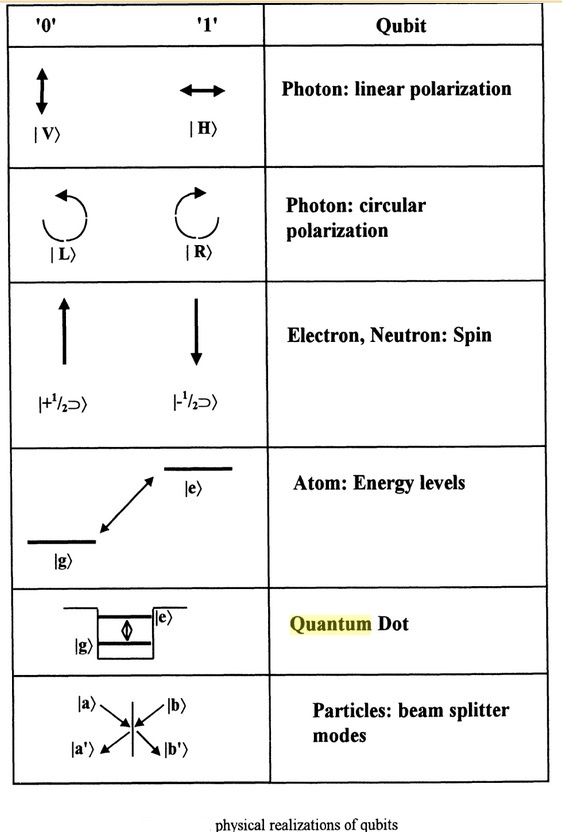

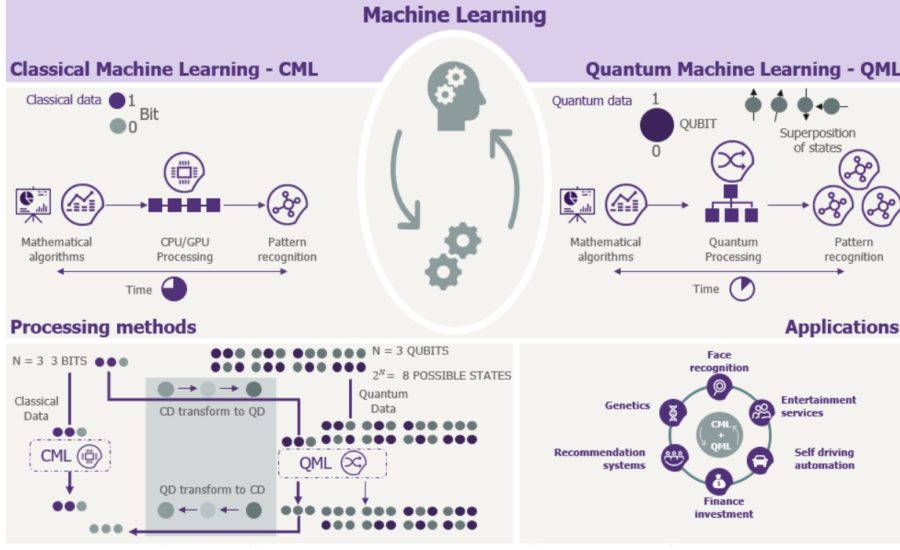

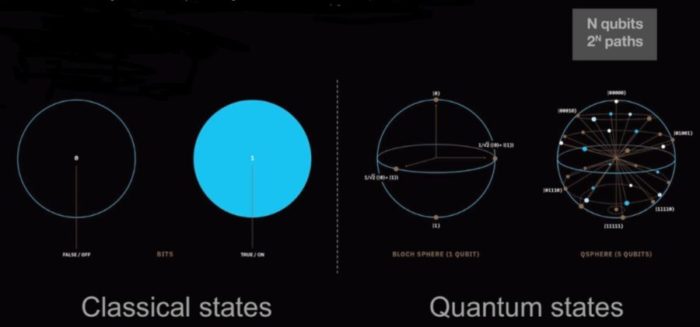

For certain computations such as optimization, sampling, search or quantum simulation, quantum computing promises dramatic speedups. Quantum computing is also applied to artificial intelligence and machine learning because many tasks in these areas rely on solving hard optimization problems or performing efficient sampling. Quantum algorithms may offer a dramatic speedup for some computational problems in machine learning, material science, and chemistry. Quantum computers are expected to outperform traditional computers at certain tasks that are likely to include molecular and material modelling, logistics optimization, financial modelling, cryptography, and pattern matching activities that include deep learning artificial intelligence. For such tasks, a quantum computer could finish in a short time a computation that a classical computer would take years to do. In classical computing, machines use binary code, a series of ones and zeros, to transmit and process information, whereas in a quantum computer, it is the qubit (a quantum bit) that enables operations. Just as a bit is the basic unit of information in a classical computer, a qubit is the basic unit of information in a quantum computer. Where a bit can store either a zero or a one, a qubit can store a zero, a one, or a superposition of both, with a continuum of possible weightings in between, so that in a sense it is in multiple states at the same time. Examples include: the spin of the electron, in which the two levels can be taken as spin up and spin down; or the polarization of a single photon, in which the two states can be taken to be the vertical polarization and the horizontal polarization. With quantum computers, classical computers will not go away. For the foreseeable future, the model will be a hybrid one. You’re going to have a classical computer where everything happens. You will go to a quantum computer to solve certain parts of a problem and get the result back on the classical computer.

The first wave of technology was about steam power, the second was electricity, the third is high tech and the fourth wave we are now entering is physics at the molecular level, such as AI, nano and bio technology; then we will see the fifth wave of technology which will be dominated by physics at atomic and sub-atomic level i.e. electron spin and photon polarization used to process information. Some mainframes will be replaced by quantum computers in future, but mobile phones & laptops will not be replaced due to the need for a cooling infrastructure for the qubits.

_____

_____

In natural science, Nature has given us a world and we’re just to discover its laws. In computers, we can stuff laws into it and create a world.

-Alan Kay

The theory of computation has traditionally been studied almost entirely in the abstract, as a topic in pure mathematics. This is to miss the point of it. Computers are physical objects, and computations are physical processes. What computers can or cannot compute is determined by the laws of physics alone, and not by pure mathematics.

-David Deutsch

______

______

Abbreviations, synonyms and terminology:

QC = Quantum Computing

H = Hilbert space

CPU = Central processing unit

QPU = Quantum processing unit

NISQ = Noisy Intermediate-Scale Quantum

CMOS = Complementary Metal Oxide Semiconductor

QEC = Quantum error correction

AQC = Adiabatic quantum computation

QKD = Quantum key distribution

QML = Quantum Machine Learning

QCaaS = Quantum computing as a service

CCD = Charge-coupled device

SQUID = superconducting quantum interference device

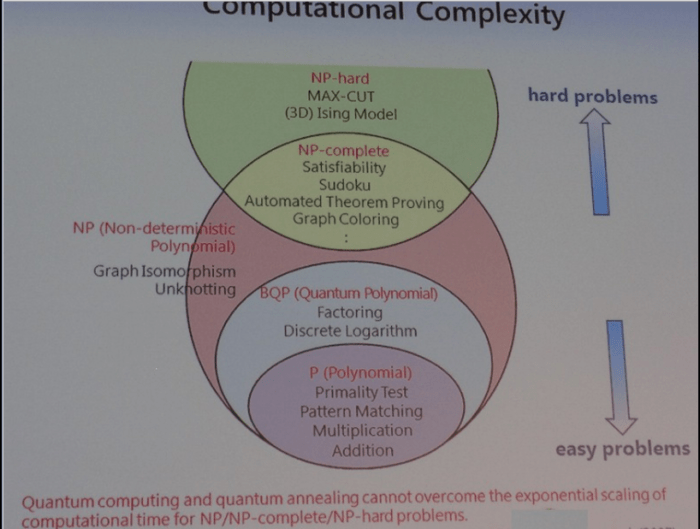

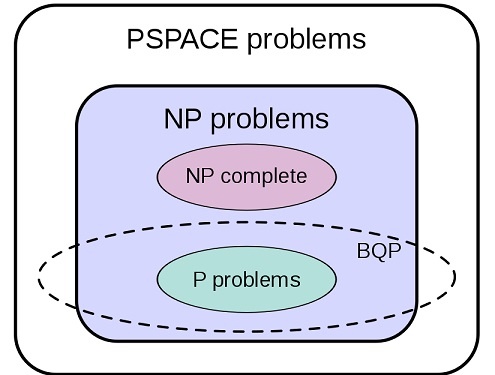

P = the set of problems that are solvable in polynomial time by a Deterministic Turing Machine

NP = the set of decision problems (answer is either yes or no) that are solvable in nondeterministic polynomial time i.e. can be solved in polynomial time by a Nondeterministic Turing Machine. Roughly speaking, it refers to questions where we can provably perform the task in a polynomial number of operations in the input size, provided we are given a certain polynomial-size “hint” of the solution.

PH = polynomial hierarchy

PSPACE = set of all decision problems that can be solved by a Turing machine using a polynomial amount of space.

BQP = Bounded error Quantum Polynomial time

BPP = Bounded error Probabilistic Polynomial time

EPR paradox = Einstein–Podolsky–Rosen paradox, a thought experiment in quantum physics and the philosophy of science
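The polynomial-size “hint” in the NP entry above can be made concrete with a small sketch. Subset sum is used here purely as an illustration (it is not discussed in this article), and the function name and the numbers are hypothetical. The point is only that checking a proposed solution takes a number of steps polynomial in the input size, even when finding one may be hard.

```python
def verify_subset_sum(numbers, target, certificate):
    """Check a 'hint' for subset sum in time linear in the input size.

    certificate is a list of indices into numbers that is claimed
    to select a subset adding up to target.
    """
    # Each index may be used at most once.
    if len(set(certificate)) != len(certificate):
        return False
    # Every index must point inside the list.
    if any(i < 0 or i >= len(numbers) for i in certificate):
        return False
    return sum(numbers[i] for i in certificate) == target


numbers = [3, 34, 4, 12, 5, 2]
good_hint = [2, 4]   # selects 4 and 5, which add up to 9
bad_hint = [0, 1]    # selects 3 and 34, which add up to 37

accepted = verify_subset_sum(numbers, 9, good_hint)
rejected = verify_subset_sum(numbers, 9, bad_hint)
```

The verifier never searches for a solution; it only checks the one it is handed.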

_

Bits, gates, and instructions:

- bit. Pure information, a 0 or a 1, not associated with hardware per se, although a representation of a bit can be stored, transmitted, and manipulated by hardware.

- classical bit. A bit on a classical electronic device or in a transmission medium.

- classical logic gate. Hardware device on an electronic circuit board or integrated circuit (chip) which can process and transmit bits, classical bits.

- flip flop. A classical logic gate which can store, manipulate, and transmit a single bit, a classical bit.

- register. A parallel arrangement of flip flops on a classical computer which together constitute a single value, commonly a 32-bit or 64-bit integer. Some programming tools on quantum computers may simulate a register as a sequence of contiguous qubits, but only for initialization and measurement and not for full-fledged bit and arithmetic operations as on a classical computer. Otherwise, a qubit is simply a 1-bit register, granted, with the capabilities of superposition and entanglement.

- memory cell. An addressable location in a memory chip or storage medium which is capable of storing a single bit, a classical bit.

- quantum information. Information on a quantum computer. Unlike a classical bit which is either a 0 or a 1, quantum information can be a 0 or a 1, or a superposition of both a 0 and a 1, or an entanglement with the quantum information of another qubit.

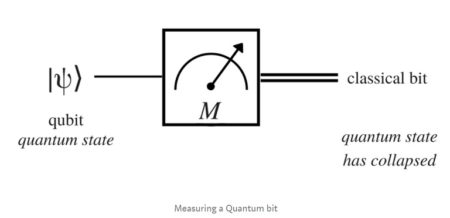

- qubit. Nominally a quantum bit, which represents quantum information, but also and primarily a hardware device capable of storing that quantum information. It is the quantum equivalent of both a bit and a flip flop or memory cell which stores that information. But first and foremost, a qubit is a hardware device, independent of what quantum information may be placed in that device.

- information. Either a bit (classical bit) or quantum information, which can be stored in a flip flop or memory cell on a classical computer or in a qubit on a quantum computer.

- instruction. A single operation to be performed in a computer. Applies to both classical computers and quantum computers.

- quantum logic gate. An instruction on a quantum computer. In no way comparable to a classical logic gate.

- logic gate. Ambiguous term whose meaning depends on context. On a classical computer it refers to hardware — a classical logic gate, while on a quantum computer it refers to software — an instruction.

_______

_______

Notation, Vector and Hilbert space:

In quantum mechanics, bra-ket notation is a standard notation for describing quantum states, composed of angle brackets and vertical bars. It can also be used to denote abstract vectors and linear functionals in mathematics. It is so called because the inner product (or dot product) of two states is denoted by a ⟨bra|ket⟩:

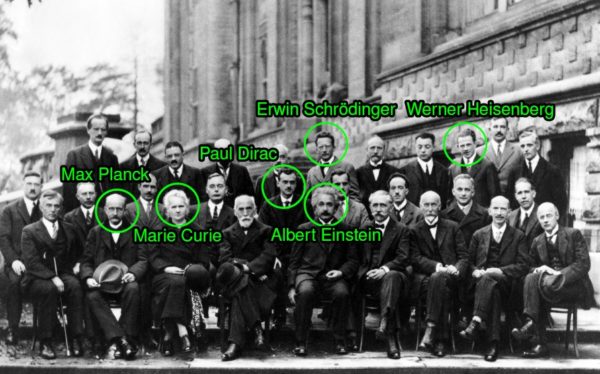

⟨ϕ|ψ⟩, consisting of a left part, ⟨ϕ|, called the bra (/brɑː/), and a right part, |ψ⟩, called the ket (/kɛt/). The notation was introduced in 1939 by Paul Dirac and is also known as Dirac notation, though the notation has precursors in Grassmann’s use of the notation (ϕ|ψ) for his inner products nearly 100 years previously. Bra-ket notation is widespread in quantum mechanics: almost every phenomenon that is explained using quantum mechanics, including a large portion of modern physics, is usually explained with the help of bra-ket notation. The expression ⟨ϕ|ψ⟩ is typically interpreted as the probability amplitude for the state ψ to collapse into the state ϕ.

_

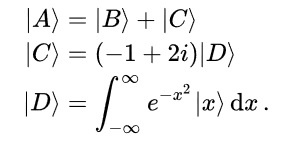

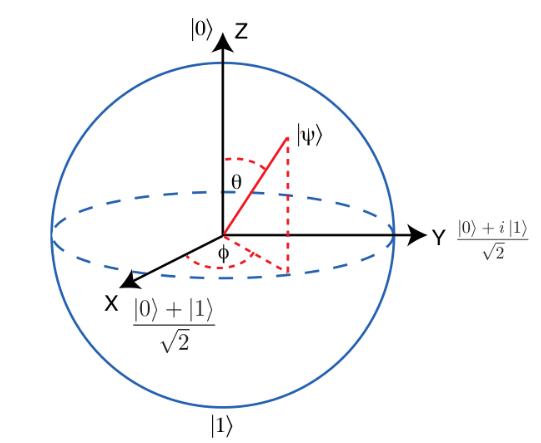

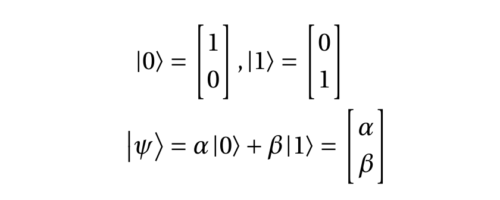

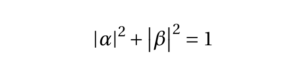

In mathematics and physics textbooks, vectors are often distinguished from scalars by writing an arrow over the identifying symbol. Sometimes boldface is used for this purpose. The notation |v⟩ means exactly the same thing as v⃗, i.e. it denotes a vector whose name is “v”. That’s it. There is no further mystery or magic at all. The symbol |ψ⟩ denotes a vector called “psi”. A ket |ψ⟩ is just a vector. A bra ⟨ψ| is the Hermitian conjugate of the vector. Symbols, letters, numbers, or even words—whatever serves as a convenient label—can be used as the label inside a ket, with the | ⟩ making clear that the label indicates a vector in vector space. In other words, the symbol “|A⟩” has a specific and universal mathematical meaning, while just the “A” by itself does not. For example, |1⟩ + |2⟩ might or might not be equal to |3⟩. You can multiply a vector with a number in the usual way. You can write the scalar product of two vectors |ψ⟩ and |ϕ⟩ as ⟨ϕ|ψ⟩. You can apply an operator to the vector (in finite dimensions this is just a matrix multiplication) X|ψ⟩. You could think of |0⟩ and |1⟩ as two orthonormal basis states (represented by “ket”s) of a quantum bit which resides in a two dimensional complex vector space. As an example |0⟩ could represent the spin-down state of an electron while |1⟩ could represent the spin-up state. But actually the electron can be in a linear superposition of those two states i.e. |ψ⟩ electron = α∣0⟩+β∣1⟩
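To make the notation concrete, here is a minimal NumPy sketch of kets as ordinary vectors. The amplitudes α = 0.6 and β = 0.8i are arbitrary illustrative values, the helper name `braket` is hypothetical, and taking the operator X to be the bit-flip (Pauli-X) matrix is an assumption made only for this example.

```python
import numpy as np

# Basis kets |0> and |1> as vectors in a two-dimensional complex space.
ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)

# A superposition |psi> = alpha|0> + beta|1> (illustrative amplitudes).
alpha, beta = 0.6, 0.8j
psi = alpha * ket0 + beta * ket1


def braket(phi, psi):
    """Inner product <phi|psi>; np.vdot conjugates its first argument (the bra)."""
    return np.vdot(phi, psi)


norm_squared = braket(psi, psi).real   # |alpha|^2 + |beta|^2
amp0 = braket(ket0, psi)               # <0|psi> = alpha
amp1 = braket(ket1, psi)               # <1|psi> = beta
prob0, prob1 = abs(amp0) ** 2, abs(amp1) ** 2

# Applying an operator is a matrix multiplication: X swaps the two amplitudes.
X = np.array([[0, 1], [1, 0]], dtype=complex)
flipped = X @ psi
```

Here `braket(ket0, ket1)` is zero because the two basis states are orthogonal, and `prob0 + prob1` equals 1 because the chosen amplitudes are normalized.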

_

Vectors will sometimes be written in column format, as for example,

$$\begin{pmatrix} 1 \\ 2 \end{pmatrix}$$

and sometimes for readability in the format (1,2). The latter should be understood as shorthand for a column vector. For two-level quantum systems used as qubits, we shall usually identify the state |0⟩ with the vector (1,0), and similarly |1⟩ with (0,1). Kets are identified with column vectors, and bras with row vectors.

_

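Since kets are just vectors in a Hermitian vector space they can be manipulated using the usual rules of linear algebra, for example:

$$|A\rangle = |B\rangle + |C\rangle$$

$$|C\rangle = (-1 + 2i)\,|D\rangle$$

$$|D\rangle = \int_{-\infty}^{\infty} e^{-x^2}\,|x\rangle\,dx$$

Note how the last line above involves infinitely many different kets, one for each real number x.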

_

A Hilbert space is an abstract vector space possessing the structure of an inner product that allows length and angle to be measured. Furthermore, Hilbert spaces are complete: there are enough limits in the space to allow the techniques of calculus to be used. Virtually all the quantum computing literature refers to a finite-dimensional complex vector space by the name ‘Hilbert space’, and we will use H to denote such a space. Hilbert spaces of interest for quantum computing will typically have dimension 2^n, for some positive integer n. This is because, as with classical information, we will construct larger state spaces by concatenating a string of smaller systems, usually of size two.

______

______

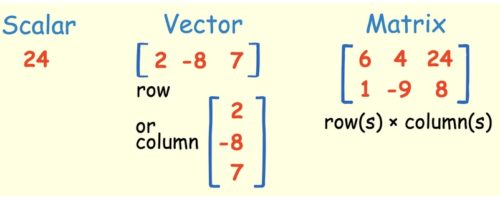

Linear algebra:

Linear Algebra is a continuous form of mathematics and is applied throughout science and engineering because it allows you to model natural phenomena and to compute them efficiently. Because it is a form of continuous and not discrete mathematics, a lot of computer scientists don’t have a lot of experience with it. Linear Algebra is also central to almost all areas of mathematics like geometry and functional analysis. Its concepts are a crucial prerequisite for understanding the theory behind Machine Learning, especially if you are working with Deep Learning Algorithms.

Linear algebra is about linear combinations. That is, using arithmetic on columns of numbers called vectors and arrays of numbers called matrices, to create new columns and arrays of numbers. Linear algebra is the study of lines and planes, vector spaces and mappings that are required for linear transforms.

It is a relatively young field of study, having initially been formalized in the 1800s in order to find unknowns in systems of linear equations. A linear equation is just a series of terms and mathematical operations where some terms are unknown; for example:

y = 4 * x + 1

Equations like this are linear in that they describe a line on a two-dimensional graph. The line comes from plugging in different values into the unknown x to find out what the equation or model does to the value of y.

We can line up a system of equations with the same form with two or more unknowns.
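As a small illustration of such a system, the sketch below pairs the line y = 4 * x + 1 from above with a second line, y = -x + 6, which is a made-up equation chosen only for this example. Both are rewritten in matrix form A·[x, y] = b and solved with NumPy.

```python
import numpy as np

# y = 4x + 1   ->  -4x + y = 1
# y = -x + 6   ->    x + y = 6   (hypothetical second equation)
A = np.array([[-4.0, 1.0],
              [1.0, 1.0]])
b = np.array([1.0, 6.0])

# np.linalg.solve finds the vector [x, y] satisfying A @ [x, y] = b.
x, y = np.linalg.solve(A, b)
```

The solution is the point where the two lines cross, here x = 1 and y = 5.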

_

Linear algebra is a branch of mathematics, but the truth of it is that linear algebra is the mathematics of data. Matrices and vectors are the language of data. In linear algebra, data is represented by linear equations, which are presented in the form of matrices and vectors. Therefore, you are mostly dealing with matrices and vectors rather than with scalars. When you have the right libraries, like NumPy, at your disposal, you can compute complex matrix multiplication very easily with just a few lines of code.

_

The application of linear algebra in computers is often called numerical linear algebra. It is more than just the implementation of linear algebra operations in code libraries; it also includes the careful handling of the problems of applied mathematics, such as working with the limited floating point precision of digital computers.

Computers are good at performing linear algebra calculations, and much of the dependence of modern machine learning methods such as deep learning on Graphics Processing Units (GPUs) is because of their ability to compute linear algebra operations fast.

Efficient implementations of vector and matrix operations were originally written in the FORTRAN programming language in the 1970s and 1980s, and a lot of code ported from those implementations underlies much of the linear algebra performed using modern programming languages, such as Python.

Three popular open source numerical linear algebra libraries that implement these functions are:

- Linear Algebra Package, or LAPACK.

- Basic Linear Algebra Subprograms, or BLAS (a standard for linear algebra libraries).

- Automatically Tuned Linear Algebra Software, or ATLAS.

Often, when you are calculating linear algebra operations directly or indirectly via higher-order algorithms, your code is very likely dipping down to use one of these, or similar linear algebra libraries. The name of one or more of these underlying libraries may be familiar to you if you have installed or compiled any of Python’s numerical libraries such as SciPy and NumPy.

_

Computational Rules:

- Matrix-Scalar Operations

If you multiply, divide, subtract, or add a Scalar to a Matrix, you do so with every element of the Matrix.

- Matrix-Vector Multiplication

Multiplying a Matrix by a Vector can be thought of as multiplying each row of the Matrix by the column of the Vector. The output will be a Vector that has the same number of rows as the Matrix.

- Matrix-Matrix Addition and Subtraction

Matrix-Matrix Addition and Subtraction is fairly easy and straightforward. The requirement is that the matrices have the same dimensions and the result is a Matrix that has also the same dimensions. You just add or subtract each value of the first Matrix with its corresponding value in the second Matrix.

- Matrix-Matrix Multiplication

Multiplying two Matrices together isn’t that hard either if you know how to multiply a Matrix by a Vector. Note that you can only multiply Matrices together if the number of the first Matrix’s columns matches the number of the second Matrix’s rows. The result will be a Matrix with the same number of rows as the first Matrix and the same number of columns as the second Matrix.
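The four rules above can be tried out in a few lines of NumPy. The matrices and the vector below are arbitrary example values, not data from this article.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4],
              [5, 6]])          # 3 rows, 2 columns
B = np.array([[1, 0, 2],
              [0, 1, 3]])       # 2 rows, 3 columns
v = np.array([1, 1])            # vector with 2 entries

# Matrix-scalar: the operation is applied to every element.
doubled = A * 2
shifted = A + 1

# Matrix-vector: each row of A is combined with v; the result has 3 entries.
Av = A @ v                      # [3, 7, 11]

# Matrix-matrix addition: same dimensions in, same dimensions out.
summed = A + A

# Matrix-matrix multiplication: columns of A (2) must match rows of B (2).
# The result has the rows of A and the columns of B: 3 x 3.
AB = A @ B
```

Trying `B @ v` raises a `ValueError`, because B has three columns while v has only two entries.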

_

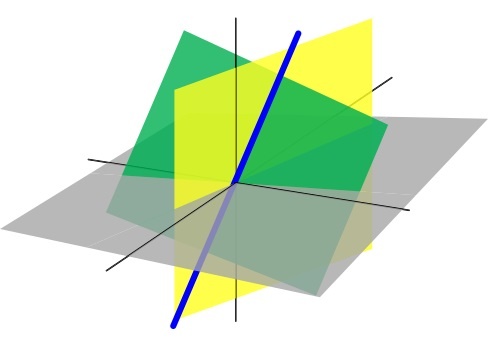

Until the 19th century, linear algebra was introduced through systems of linear equations and matrices. In modern mathematics, the presentation through vector spaces is generally preferred, since it is more synthetic, more general (not limited to the finite-dimensional case), and conceptually simpler, although more abstract. An element of a specific vector space may be of various kinds; for example, it could be a sequence, a function, a polynomial or a matrix. Linear algebra is concerned with those properties of such objects that are common to all vector spaces. Matrices allow explicit manipulation of finite-dimensional vector spaces and linear maps.

_

In the three-dimensional Euclidean space as seen in the figure above, these three planes represent solutions of linear equations and their intersection represents the set of common solutions: in this case, a unique point. The blue line is the common solution of a pair of linear equations.

_

Usage in quantum mechanics:

The mathematical structure of quantum mechanics is based in large part on linear algebra:

- Wave functions and other quantum states can be represented as vectors in a complex Hilbert space. (The exact structure of this Hilbert space depends on the situation.) In bra-ket notation, for example, an electron might be in “the state |ψ⟩”. (Technically, the quantum states are rays of vectors in the Hilbert space, as c|ψ⟩ corresponds to the same state for any nonzero complex number c.)

- Quantum superpositions can be described as vector sums of the constituent states. For example, an electron in the state |1⟩ + |2⟩ is in a quantum superposition of the states |1⟩ and |2⟩.

- Measurements are associated with linear operators (called observables) on the Hilbert space of quantum states.

- Dynamics is also described by linear operators on the Hilbert space. For example, in the Schrödinger picture, there is a linear operator U with the property that if an electron is in state |ψ⟩ right now, then in one minute it will be in the state U|ψ⟩ , the same U for every possible |ψ⟩.

- Wave function normalization is scaling a wave function so that its norm is 1.

Since virtually every calculation in quantum mechanics involves vectors and linear operators, it can involve, and often does involve, bra-ket notation.

Quantum computation inherited linear algebra from quantum mechanics as the supporting language for describing this area. Therefore, it is essential to have a solid knowledge of the basic results of linear algebra to understand quantum computation and quantum algorithms.
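The points in the list above (rays, normalization and linear dynamics) can be checked numerically. This is only a sketch under stated assumptions: the starting vector is arbitrary, the Hadamard matrix stands in for the operator U purely as a familiar example of a unitary matrix, and the helper name `probabilities` is hypothetical.

```python
import numpy as np

# An unnormalized vector; any nonzero multiple c|psi> represents the same state.
raw = np.array([3, 4j], dtype=complex)

# Normalization: scale the vector so that its norm is 1.
psi = raw / np.linalg.norm(raw)          # [0.6, 0.8j]

# Example of a linear operator U describing dynamics (assumed: Hadamard matrix).
U = np.array([[1, 1],
              [1, -1]], dtype=complex) / np.sqrt(2)
evolved = U @ psi                        # the state U|psi>

# Linearity: U acts the same way on every state, so it distributes over sums.
phi = np.array([1, 0], dtype=complex)
a, b = 0.5, -0.5j
lhs = U @ (a * psi + b * phi)
rhs = a * (U @ psi) + b * (U @ phi)


def probabilities(state):
    """Outcome probabilities in the standard basis, after normalizing."""
    state = state / np.linalg.norm(state)
    return np.abs(state) ** 2
```

Multiplying `raw` by any nonzero complex number leaves `probabilities` unchanged, which is the numerical counterpart of the remark that states are rays rather than single vectors.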

_______

_______

Classical computing:

_

Theoretical computer science is essentially math, and subjects such as probability, statistics, linear algebra, graph theory, combinatorics and optimization are at the heart of artificial intelligence (AI), machine learning (ML), data science and computer science in general. Theoretical work in quantum computing requires expertise in quantum mechanics, linear algebra, theory of computation, information theory and information security.

_

Theory of computation:

In theoretical computer science and mathematics, the theory of computation is the branch that deals with which problems can be solved on a model of computation using an algorithm, and how efficiently they can be solved. The field is divided into three major branches: automata theory and languages, computability theory, and computational complexity theory, which are linked by the question: “What are the fundamental capabilities and limitations of computers?”.

In order to perform a rigorous study of computation, computer scientists work with a mathematical abstraction of computers called a model of computation. There are several models in use, but the most commonly examined is the Turing machine. Computer scientists study the Turing machine because it is simple to formulate, can be analyzed and used to prove results, and because it represents what many consider the most powerful possible “reasonable” model of computation. It might seem that the potentially infinite memory capacity is an unrealizable attribute, but any decidable problem solved by a Turing machine will always require only a finite amount of memory. So in principle, any problem that can be solved (decided) by a Turing machine can be solved by a computer that has a finite amount of memory.
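A Turing machine is simple enough to simulate in a few lines. The sketch below is a minimal illustration, not a standard library or a canonical formulation: the function name, the transition-table layout and the bit-flipping example machine are all assumptions made for this example. The tape is stored in a dictionary, so it can grow as needed, yet any run that halts only ever touches a finite number of cells.

```python
def run_turing_machine(transitions, tape, start, halt, blank="_", max_steps=10_000):
    """Run a single-tape Turing machine and return the final tape contents.

    transitions maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "L" or "R".
    """
    cells = dict(enumerate(tape))   # sparse tape: position -> symbol
    state, head, steps = start, 0, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = transitions[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    low, high = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(low, high + 1)).strip(blank)


# Example machine: walk right, flipping every bit, and halt at the first blank.
flip_bits = {
    ("flip", "0"): ("1", "R", "flip"),
    ("flip", "1"): ("0", "R", "flip"),
    ("flip", "_"): ("_", "R", "done"),
}

result = run_turing_machine(flip_bits, "1011", start="flip", halt="done")
```

For the input `1011` the machine visits five cells and stops, leaving `0100` on the tape.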

_

Now, let’s understand the basic terminologies, which are important and frequently used in Theory of Computation.

Symbol:

A symbol is the smallest building block; it can be any letter, digit or picture.

a, b, c, 0, 1, …

Alphabets (Σ):

An alphabet is a set of symbols, and it is always finite.

Σ = {0, 1} is an alphabet of binary digits.

Σ = {a, b, c}

Σ = {0, 1, 2, …, 9} is an alphabet of decimal digits.

String:

String is a finite sequence of symbols from some alphabet. String is generally denoted as w and length of a string is denoted as |w|.

Empty string is the string with zero occurrence of symbols, represented as ε.

Number of Strings (of length 2) that can be generated over the alphabet {a, b}

a a

a b

b a

b b

Length of String |w| = 2

Number of Strings = 4

For the alphabet {a, b}, the number of strings of length n that can be generated = 2^n.

Note – If the number of symbols in Σ is represented by |Σ|, then the number of strings of length n possible over Σ is |Σ|^n.

Language:

A language is a set of strings chosen from some Σ*; in other words, a language is a subset of Σ*. A language formed over Σ can be finite or infinite.

Powers of Σ:

Say Σ = {a, b} then

Σ^0 = Set of all strings over Σ of length 0. {ε}

Σ^1 = Set of all strings over Σ of length 1. {a, b}

Σ^2 = Set of all strings over Σ of length 2. {aa, ab, ba, bb}

i.e. |Σ^2| = 4 and similarly, |Σ^3| = 8

Σ* is a Universal Set.

Σ* = Σ^0 ∪ Σ^1 ∪ Σ^2 ∪ … = {ε} ∪ {a, b} ∪ {aa, ab, ba, bb} ∪ … (an infinite language).
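The powers of Σ can be enumerated directly, which makes the counting rule easy to check. In this sketch the function names are hypothetical, and because Σ* is infinite, only the strings up to a chosen maximum length are listed.

```python
from itertools import product


def sigma_power(alphabet, n):
    """Return all strings of length n over the alphabet."""
    return ["".join(symbols) for symbols in product(alphabet, repeat=n)]


def sigma_star_up_to(alphabet, max_length):
    """Return the finite part of the set of all strings: lengths 0..max_length."""
    strings = []
    for n in range(max_length + 1):
        strings.extend(sigma_power(alphabet, n))
    return strings


sigma = ["a", "b"]
sigma0 = sigma_power(sigma, 0)   # [""], i.e. only the empty string
sigma2 = sigma_power(sigma, 2)   # ["aa", "ab", "ba", "bb"]
sigma3 = sigma_power(sigma, 3)   # 2**3 = 8 strings

# A language is any subset of the set of all strings, e.g. the palindromes.
palindromes = [w for w in sigma_star_up_to(sigma, 3) if w == w[::-1]]
```

The empty string appears in Python as `""`, and `len(sigma_power(sigma, n))` equals `len(sigma) ** n` for every n.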

_

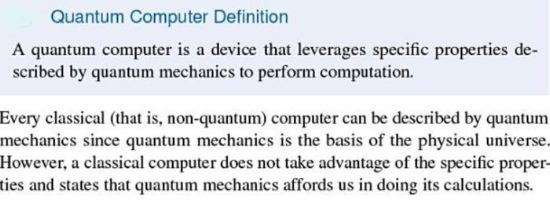

A computer is a physical device that helps us process information by executing algorithms. An algorithm is a well-defined procedure, with finite description, for realizing an information-processing task. An information-processing task can always be translated into a physical task. When designing complex algorithms and protocols for various information-processing tasks, it is very helpful, perhaps essential, to work with some idealized computing model. However, when studying the true limitations of a computing device, especially for some practical reason, it is important not to forget the relationship between computing and physics. Real computing devices are embodied in a larger and often richer physical reality than is represented by the idealized computing model. Quantum information processing is the result of using the physical reality that quantum theory tells us about for the purposes of performing tasks that were previously thought impossible or infeasible. Devices that perform quantum information processing are known as quantum computers.

_

Why should a person interested in quantum computation and quantum information spend time investigating classical computer science?

There are three good reasons for this effort.

First, classical computer science provides a vast body of concepts and techniques which may be reused to great effect in quantum computation and quantum information. Many of the triumphs of quantum computation and quantum information have come by combining existing ideas from computer science with novel ideas from quantum mechanics. For example, some of the fast algorithms for quantum computers are based upon the Fourier transform, a powerful tool utilized by many classical algorithms. Once it was realized that quantum computers could perform a type of Fourier transform much more quickly than classical computers this enabled the development of many important quantum algorithms.

Second, computer scientists have expended great effort understanding what resources are required to perform a given computational task on a classical computer. These results can be used as the basis for a comparison with quantum computation and quantum information. For example, much attention has been focused on the problem of finding the prime factors of a given number. On a classical computer this problem is believed to have no ‘efficient’ solution. What is interesting is that an efficient solution to this problem is known for quantum computers. The lesson is that, for this task of finding prime factors, there appears to be a gap between what is possible on a classical computer and what is possible on a quantum computer. This is both intrinsically interesting, and also interesting in the broader sense that it suggests such a gap may exist for a wider class of computational problems than merely the finding of prime factors. By studying this specific problem further, it may be possible to discern features of the problem which make it more tractable on a quantum computer than on a classical computer, and then act on these insights to find interesting quantum algorithms for the solution of other problems.

Third, and most important, there is learning to think like a computer scientist. Computer scientists think in a rather different style than does a physicist or other natural scientist. Anybody wanting a deep understanding of quantum computation and quantum information must learn to think like a computer scientist at least some of the time; they must instinctively know which techniques and, most importantly, which problems are of greatest interest to a computer scientist.

_

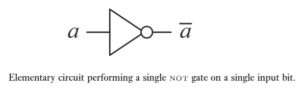

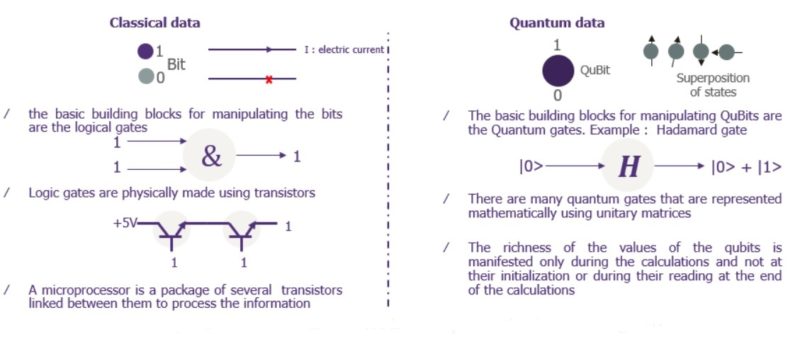

Conventional computers have two tricks that they do really well: they can store numbers in memory and they can process stored numbers with simple mathematical operations (like add and subtract). They can do more complex things by stringing together the simple operations into a series called an algorithm (multiplying can be done as a series of additions, for example). Both of a computer’s key tricks, storage and processing, are accomplished using switches called transistors, which are like microscopic versions of the switches you have on your wall for turning on and off the lights. A transistor can either be on or off, just as a light can either be lit or unlit. If it’s on, we can use a transistor to store a number one (1); if it’s off, it stores a number zero (0). Long strings of ones and zeros can be used to store any number, letter, or symbol using a code based on binary (so computers store an upper-case letter A as 01000001 and a lower-case one as 01100001). Each of the zeros or ones is called a binary digit (or bit) and, with a string of eight bits, you can store 256 different characters (such as A-Z, a-z, 0-9, and most common symbols). Computers calculate by using circuits called logic gates, which are made from a number of transistors connected together. Logic gates compare patterns of bits, stored in temporary memories called registers, and then turn them into new patterns of bits, and that’s the computer equivalent of what our human brains would call addition, subtraction, or multiplication. In physical terms, the algorithm that performs a particular calculation takes the form of an electronic circuit made from a number of logic gates, with the output from one gate feeding in as the input to the next.
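Two of the ideas in this paragraph are easy to try out: the eight-bit code for a character, and multiplication carried out as a series of additions. The helper names below are hypothetical, and the character code used is ASCII as exposed by Python’s built-in `ord` and `chr`.

```python
def char_to_bits(ch, width=8):
    """Return the binary code of a character as a string of bits."""
    return format(ord(ch), f"0{width}b")


def bits_to_char(bits):
    """Turn a string of bits back into the character it encodes."""
    return chr(int(bits, 2))


def multiply_by_addition(a, b):
    """Multiply a by a non-negative integer b using only repeated addition."""
    total = 0
    for _ in range(b):
        total += a
    return total


upper_a = char_to_bits("A")          # "01000001"
lower_a = char_to_bits("a")          # "01100001"
patterns_in_a_byte = 2 ** 8          # 256 distinct eight-bit patterns
product_6_7 = multiply_by_addition(6, 7)
```

The two codes differ in a single bit, which is how ASCII distinguishes upper-case from lower-case letters.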

_

The trouble with conventional computers is that they depend on conventional transistors. This might not sound like a problem if you go by the amazing progress made in electronics over the last few decades. When the transistor was invented, back in 1947, the switch it replaced (which was called the vacuum tube) was about as big as one of your thumbs. Now, a state-of-the-art microprocessor (single-chip computer) packs hundreds of millions (and up to 30 billion) transistors onto a chip of silicon the size of your fingernail! Chips like these, which are called integrated circuits, are an incredible feat of miniaturization. The high speed modern computer is fundamentally no different from its gargantuan 30 ton ancestors which were equipped with some 18000 vacuum tubes and 500 miles of wiring. Although computers have become more compact and considerably faster in performing their task, the task remains the same: to manipulate and interpret an encoding of binary bits into a useful computational result.

_

Classical computers, which we use on a daily basis, are built upon the concept of digital logic and bits. A bit is simply an idea, or an object, which can take on one of two distinct values, typically labelled 0 or 1. In computers this concept is usually embodied by transistors, which can be charged (1) or uncharged (0). A computer encodes information in a series of bits, and performs operations on them using circuits called logic gates. Logic gates simply apply a given rule to a bit. For example, an OR gate takes two bits as input, and outputs a single value. If either of the inputs is 1, the gate returns 1. Otherwise, it returns 0. Once the operations are finished, the information regarding the output can be decoded from the bits. Engineers can design circuits which perform addition and subtraction, multiplications… almost any operation that comes to mind, as long as the input and output information can be encoded in bits.
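As a sketch of how gates combine into arithmetic, the code below builds a full adder from AND, OR and XOR and chains several of them to add two binary numbers. The function names and the least-significant-bit-first ordering are choices made for this illustration, not something prescribed by the text.

```python
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def XOR(a, b):
    return a ^ b


def full_adder(a, b, carry_in):
    """Add three bits; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def add_bits(x_bits, y_bits):
    """Add two equal-length bit lists, least significant bit first."""
    carry = 0
    result = []
    for a, b in zip(x_bits, y_bits):
        sum_bit, carry = full_adder(a, b, carry)
        result.append(sum_bit)
    result.append(carry)            # final carry becomes the top bit
    return result


five = [1, 0, 1]                    # binary 101, least significant bit first
three = [1, 1, 0]                   # binary 011, least significant bit first
eight = add_bits(five, three)       # [0, 0, 0, 1], i.e. binary 1000
```

Every step is a fixed rule applied to bits; the addition emerges only from the way the gates are wired together.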

_

Classically, a compiler for a high-level programming language translates algebraic expressions into sequences of machine language instructions to evaluate the terms and operators in the expression.

The following instructions are supported on a classical computer:

- Add

- Subtract

- Multiply

- Divide

With these most basic operations, it becomes possible to evaluate classical math functions:

- Square root

- Exponentials

- Logarithms

- Trigonometric functions

- Statistical functions

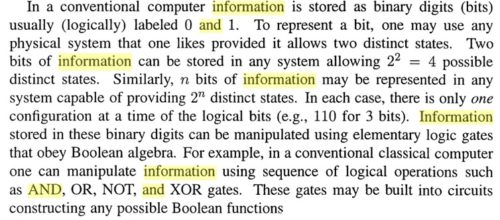

Classical computing is based in large part on modern mathematics and logic. We take modern digital computers and their ability to perform a multitude of different applications for granted. Our desktop PCs, laptops and smart phones can run spreadsheets, stream live video, allow us to chat with people on the other side of the world, and immerse us in realistic 3D environments. But at their core, all digital computers have something in common. They all perform simple arithmetic operations. Their power comes from the immense speed at which they are able to do this. Computers perform billions of operations per second. These operations are performed so quickly that they allow us to run very complex high level applications. Conventional digital computing can be summed up by the diagram shown in figure below.

Although there are many tasks that conventional computers are very good at, there are still some areas where calculations seem to be exceedingly difficult. Examples of these areas are: Image recognition, natural language (getting a computer to understand what we mean if we speak to it using our own language rather than a programming language), and tasks where a computer must learn from experience to become better at a particular task. Even though there has been much effort and research poured into this field over the past few decades, our progress in this area has been slow and the prototypes that we do have working usually require very large supercomputers to run them, consuming vast quantities of space and power.

_

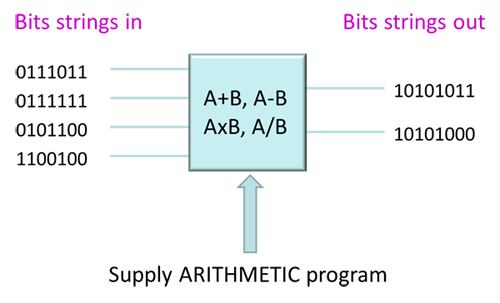

Classical circuit:

Circuits are networks composed of wires that carry bit values to gates that perform elementary operations on the bits. The circuits we consider will all be acyclic, meaning that the bits move through the circuit in a linear fashion, and the wires never feed back to a prior location in the circuit. A circuit Cn has n wires, and can be described by a circuit diagram such as the one shown in the figure below for n=4. The input bits are written onto the wires entering the circuit from the left side of the diagram. The output bits are read off the wires leaving the circuit at the right side of the diagram.

A circuit is an array or network of gates, which is the terminology often used in the quantum setting. The gates come from some finite family, and they take information from input wires and deliver information along some output wires.

An important notion is that of universality. It is convenient to show that a finite set of different gates is all we need to be able to construct a circuit for performing any computation we want.

_

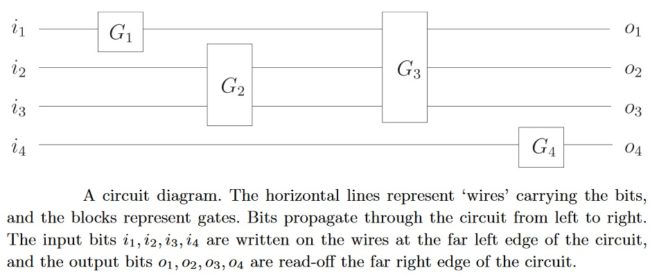

A circuit is made up of wires and gates, which carry information around, and perform simple computational tasks, respectively. For example, figure below shows a simple circuit which takes as input a single bit, a. This bit is passed through a gate, which flips the bit, taking 1 to 0 and 0 to 1. The wires before and after the gate serve merely to carry the bit to and from the gate; they can represent movement of the bit through space, or perhaps just through time.

More generally, a circuit may involve many input and output bits, many wires, and many logical gates. A logic gate is a function f:{0,1}^k→{0,1}^l from some fixed number k of input bits to some fixed number l of output bits. For example, the NOT gate is a gate with one input bit and one output bit which computes the function f(a)=1⊕a, where a is a single bit, and ⊕ is modulo 2 addition. It is also usual to make the convention that no loops are allowed in the circuit, to avoid possible instabilities. We say such a circuit is acyclic, and we adhere to the convention that circuits in the circuit model of computation be acyclic.

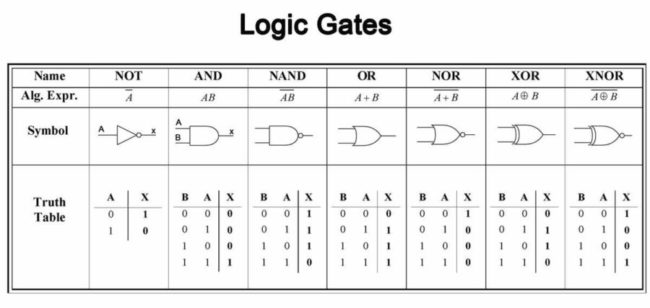

There are many other elementary logic gates which are useful for computation. A partial list includes the AND gate, the OR gate, the XOR gate, the NAND gate, and the NOR gate. Each of these gates takes two bits as input, and produces a single bit as output. The AND gate outputs 1 if and only if both of its inputs are 1. The OR gate outputs 1 if and only if at least one of its inputs is 1. The XOR gate outputs the sum, modulo 2, of its inputs. The NAND and NOR gates take the AND and OR, respectively, of their inputs, and then apply a NOT to whatever is output.

These simple circuit elements can be put together to perform an enormous variety of computations.
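As a rough illustration of the gates just described, the sketch below writes each one as a small Python function on the bits 0 and 1. The function names, the half adder, and the NAND-only constructions are our own illustrative choices rather than anything taken from the figures; the NAND constructions are one common way to see why a small family of gates can be enough to build the others.

```python
# Illustrative gate definitions on bits 0 and 1 (names are our own choice).
def NOT(a):
    return 1 ^ a            # f(a) = 1 XOR a, i.e. addition modulo 2

def AND(a, b):
    return a & b            # 1 only if both inputs are 1

def OR(a, b):
    return a | b            # 1 if at least one input is 1

def XOR(a, b):
    return a ^ b            # sum of the inputs modulo 2

def NAND(a, b):
    return NOT(AND(a, b))   # AND followed by NOT

def NOR(a, b):
    return NOT(OR(a, b))    # OR followed by NOT

def half_adder(a, b):
    """Add two bits; returns (sum bit, carry bit)."""
    return XOR(a, b), AND(a, b)

# Universality sketch: NOT, AND and OR rebuilt from NAND alone.
def not_from_nand(a):
    return NAND(a, a)

def and_from_nand(a, b):
    return not_from_nand(NAND(a, b))

def or_from_nand(a, b):
    return NAND(not_from_nand(a), not_from_nand(b))

# Print a combined truth table for all two-input gates.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b), NAND(a, b), NOR(a, b))
```

The half adder shows how two gates already combine into a tiny arithmetic circuit: the XOR output is the sum bit and the AND output is the carry.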

_

One of the most important characteristics of a computer is how fast it can perform calculations (the clock speed in our domestic PCs is a measure of this). This is determined by two main factors. One is the processor, which determines how many operations can be carried out on bits in a given time interval. The other is the nature of the calculation: how many operations on bits it takes to carry it out. This is why it is key to have optimized algorithms: you want to complete a given task in as few steps as possible. The problem is that even the most sophisticated known algorithms for some tasks, like integer factoring, require an enormous number of operations to be completed. These are the kind of tasks that could take billions of years to complete even on the best computers.
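To make the idea of counting operations concrete, here is a minimal sketch that factors an integer by trial division and reports how many trial divisions it needed. Trial division is a deliberately naive method chosen for clarity, not the best classical factoring algorithm, and the function name is hypothetical. For a number with two large prime factors, the step count grows roughly with the square root of the number, which means it grows exponentially with the number of digits.

```python
def trial_division_steps(n):
    """Factor n by trial division.

    Returns (list of prime factors, number of trial divisions performed).
    """
    factors = []
    steps = 0
    d = 2
    while d * d <= n:
        steps += 1              # one trial division
        if n % d == 0:
            factors.append(d)   # d divides n: record it and keep dividing
            n //= d
        else:
            d += 1              # d does not divide n: try the next candidate
    if n > 1:
        factors.append(n)       # whatever remains is itself prime
    return factors, steps

# The step count rises quickly as the inputs get longer.
for n in (15, 101, 10007 * 10009):
    factors, steps = trial_division_steps(n)
    print(n, factors, steps)
```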

_

What we refer to as the universal computing machine was conceived by the man considered the father of computer science, Alan Turing, in 1936. Years before there were actual computers in the world, Turing suggested building a read-write head that would move a tape, read the different state in each frame, and replicate it according to commands it received. It sounds simplistic, but there is no fundamental difference between the theoretical Turing machine and a laptop. The only difference is that a laptop reads and writes so many frames per second that it’s impossible to discern that it’s actually calculating. Classical computers perform these calculations by means of transistors. In 1947, William Shockley, Walter Brattain and John Bardeen built the first transistor – the word is an amalgam of “transfer” and “resistor.” The transistor is a kind of switch that sits within a slice of silicon and acts as the multi-state frame that Turing dreamed of. Turn on the switch and the electricity flows through the transistor; turn it off, and the electricity does not flow. Hence, the use of transistors in computers is binary: if the electricity flows through the transistor, the bit, or binary digit, is 1; and if the current does not flow, the bit is 0.

With transistors, the name of the game is miniaturization. The smaller the transistor, the more of them it is possible to compress into the silicon slice, and the more complex are the calculations one can perform. It took a whole decade to get from a single transistor to an integrated circuit of four transistors. Ten years later, in 1965, it had become possible to compress 64 transistors onto a chip. At this stage, Gordon Moore, who would go on to co-found Intel, predicted that the number of transistors per silicon slice would continue to grow exponentially. Moore’s Law states that every 2 years, like clockwork, engineers will succeed in miniaturizing and compressing double the number of transistors in an integrated circuit. Thus we got the golden age of computers: the Intel 286, with 134,000 transistors in 1982; the 386, with 275,000 transistors, in 1985; the 486, with 1,180,235 transistors, in 1989; and the Pentium, with 3.1 million transistors, in 1993. There was no reason to leave the house. Today, the human race is manufacturing tens of billions of transistors per second. Your smartphone has about 2 to 4 billion transistors. According to a calculation made by the semiconductor analyst Jim Handy, since the first transistor was created in 1947, 2,913,276,327,576,980,000,000 transistors – that’s 2.9 sextillion – have been manufactured, and within a few years there will be more transistors in the world than all the cells in all the human bodies on earth.
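The doubling rule can be checked against the chip figures quoted above with a few lines of arithmetic. The sketch below is only an illustration: it assumes a strict doubling every 2 years starting from the 286 in 1982 (the helper name `moore_projection` and the choice of starting point are ours) and prints the projected count next to the transistor counts given in the text.

```python
def moore_projection(start_year, start_count, year, doubling_years=2.0):
    """Project a transistor count assuming one doubling every `doubling_years`."""
    return start_count * 2 ** ((year - start_year) / doubling_years)

# Transistor counts as quoted in the text above.
chips = [
    ("286", 1982, 134_000),
    ("386", 1985, 275_000),
    ("486", 1989, 1_180_235),
    ("Pentium", 1993, 3_100_000),
]

for name, year, actual in chips:
    projected = moore_projection(1982, 134_000, year)
    print(f"{name:8s} {year}  actual={actual:>10,}  projected={projected:>12,.0f}")
```

The projection and the quoted counts stay within a small factor of each other over the decade, which is the sense in which the rule held "like clockwork" for these chips.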

_

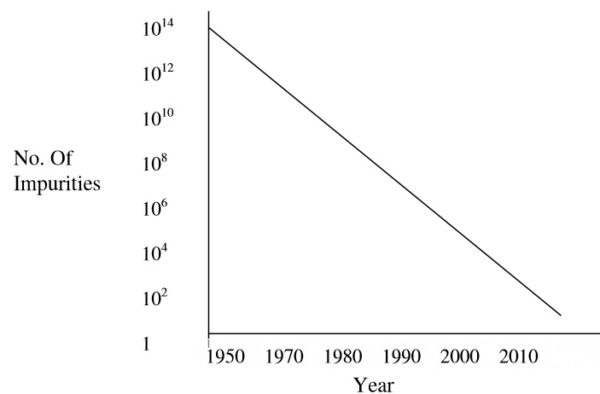

The number of transistors per square inch on an integrated circuit will double every 2 years, and this trend will continue for at least two decades. This prediction was formulated by Gordon Moore in 1965. Today, Moore’s Law still applies. If Moore’s Law is extrapolated naively to the future, it follows that sooner or later each bit of information should be encoded by a physical system of subatomic size. If we’d continued to miniaturize transistors at the rate of Moore’s Law, we would have reached the stage of a transistor the size of an atom, and we would have had to split the atom. As a matter of fact, this point is substantiated by the survey made by Keyes in 1988 as shown in figure below. This plot shows the number of electrons required to store a single bit of information. An extrapolation of the plot suggests that we might be within reach of atomic-scale computation within a decade or so.

_

The figure above shows the number of dopant impurities in bipolar logic transistors, plotted against year.

_

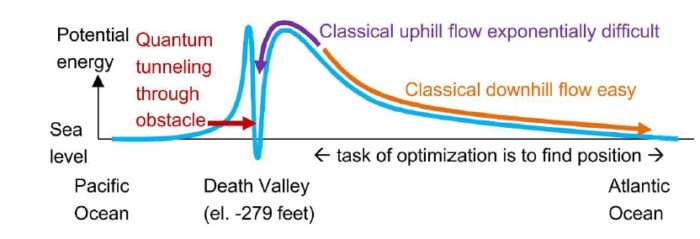

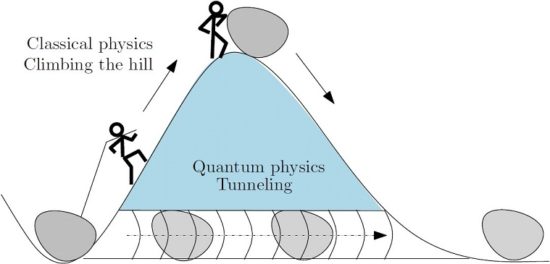

The problem will arise when new technologies allow chips to be manufactured at around 5 nm. Modern microprocessors work on 64-bit architectures integrating more than 700 million transistors, and they can operate at frequencies above 3 GHz. For instance, the third-generation Intel Core (2012) moved from a 32 nm process to 22 nm, thus allowing the number of transistors per unit of surface area to double. A dual-core mobile variant of the Intel Core i3/i5/i7 has around 1.75 billion transistors for a die size of 101.83 mm². This works out at a density of 17.185 million transistors per square millimetre. Moreover, a larger number of transistors means that a given computer system can do more tasks rapidly. But, following the arguments of Feynman, there is an “essential limit” to how far this can be done.

The limits of the so-called “Tunnel Effect”:

The fact is that increasingly smaller microchips are being manufactured, and the smaller the device, the faster the computation. However, we cannot shrink chips indefinitely. There is a limit at which they stop working correctly. When it comes down to the nanometer size, electrons escape from the channels where they circulate through the so-called “tunnel effect”, a typically quantum phenomenon. Electrons are quantum particles and they have wave-like behavior; hence, there is a possibility that some of these electrons can pass through the walls between which they are confined. Under these conditions the chip stops working properly. In this context traditional digital computing should not be far from its limits, since we have already reached sizes of only a few tens of nanometers. But… where is the real limit?

The answer may have much to do with the world of the very small. In this respect, the various existing methods to estimate the size of the atomic radius give values between 0.5 and 5 Å. The size of a current transistor is of the order of nanometers (nm), while the size of typical atoms is of the order of angstroms (Å). But 10 Å = 1 nm. We only have to go one order of magnitude further before we must design our computers with the restrictions imposed by quantum mechanics in mind! Tiny matter obeys the rules of quantum mechanics, which are quite different from the classical rules that determine the properties of conventional logic gates.

_

The process of miniaturization that has made current classical computers so powerful and cheap has already reached micro-levels where quantum effects occur. Chip-makers tend to go to great lengths to suppress those quantum effects, but instead one might also try to work with them, enabling further miniaturization. With the size of components in classical computers shrinking to where the behaviour of the components is dominated by quantum theory rather than classical theory, researchers have begun investigating the potential of these quantum behaviours for computation. Surprisingly, it seems that a computer whose components all function in a quantum way can be more powerful than any classical computer can be. It is the physical limitations of the classical computer, and the possibilities for the quantum computer to perform certain useful tasks more rapidly than any classical computer, which drive the study of quantum computing.

_

The figure below shows a memory chip from a USB flash memory stick:

This memory chip from a typical USB stick contains an integrated circuit that can store 512 megabytes of data. That’s roughly 500 million characters (536,870,912 to be exact), each of which needs eight binary digits, so we’re talking about 4 billion (4,000 million) transistors in all (4,294,967,296 if you’re being picky) packed into an area the size of a postage stamp! It sounds amazing. The more information you need to store, the more binary ones and zeros (and transistors) you need to do it. Since most conventional computers can only do one thing at a time, the more complex the problem you want them to solve, the more steps they’ll need to take and the longer they’ll need to do it. Some computing problems are so complex that they need more computing power and time than any modern machine could reasonably supply; computer scientists call those intractable problems. As Moore’s Law advances, so the number of intractable problems diminishes: computers get more powerful and we can do more with them. The trouble is, transistors are just about as small as we can make them: we’re getting to the point where the laws of physics seem likely to put a stop to Moore’s Law. As a consequence of the relentless, Moore’s law-driven miniaturization of silicon devices, it is now possible to make transistors that are only a few tens of atoms long. At this scale, however, quantum physics effects begin to prevent transistors from performing reliably, a phenomenon that limits prospects for future progress in conventional computing and points to an end to Moore’s law.

Unfortunately, there are still hugely difficult computing problems we can’t tackle because even the most powerful computers find them intractable. That’s one of the reasons why people are now getting interested in quantum computing.

_____

_____

Quantum mechanics:

Quantum mechanics is the science of the very small. It explains the behavior of matter and its interactions with energy on the scale of atoms and subatomic particles. By contrast, classical physics explains matter and energy only on a scale familiar to human experience, including the behavior of astronomical bodies such as the Moon. Classical physics is still used in much of modern science and technology. However, towards the end of the 19th century, scientists discovered phenomena in both the large (macro) and the small (micro) worlds that classical physics could not explain. The desire to resolve inconsistencies between observed phenomena and classical theory led to two major revolutions in physics that created a shift in the original scientific paradigm: the theory of relativity and the development of quantum mechanics.

_

Newtonian physics thought of the world as composed of distinct objects, much like tennis balls or stone blocks. In this model, the universe is a giant machine of interlocking parts in which every action produces an equal and opposite reaction. Unfortunately the Newtonian world breaks down at the subatomic level. In the quantum world, everything seems to be an ocean of interconnected possibilities. Every particle is just a wave function and could be anywhere at any time; it could even be at several places simultaneously. Swirling electrons occupy two positions at once, and possess dual natures: they can be both waves and particles simultaneously. In recent times, physicists have discovered a phenomenon called quantum entanglement. In an entangled system, two seemingly separate particles can behave as an inseparable whole. Theoretically, if one separates the two entangled particles, one would find that their velocity of spin would be identical but in opposite directions. They are quantum twins. Despite the seeming irrationality of these concepts, scientists over the last 120 years have demonstrated that this realm, known as quantum mechanics, is the foundation on which our physical existence is built. It is one of the most successful theories in modern science. Without it, we would not have such marvels as atomic clocks, computers, lasers, LEDs, global positioning systems and magnetic resonance imaging, among many other innovations.

_

Prior to the emergence of quantum mechanics, fundamental physics was marked by a peculiar dualism. On the one hand, we had electric and magnetic fields, governed by Maxwell’s equations. The fields filled all of space and were continuous. On the other hand, we had atoms, governed by Newtonian mechanics. The atoms were spatially limited — indeed, quite small — discrete objects. At the heart of this dualism was the contrast of light and substance, a theme that has fascinated not only scientists but artists and mystics for many centuries. One of the glories of quantum theory is that it has replaced that dualistic view of matter with a unified one. We learned to make fields from photons, and atoms from electrons (together with other elementary particles). Both photons and electrons are described using the same mathematical structure. They are particles, in the sense that they come in discrete units with definite, reproducible properties. But the new quantum-mechanical sort of “particle” cannot be associated with a definite location in space. Instead, the possible results of measuring its position are given by a probability distribution. And that distribution is given as the square of a space-filling field, its so-called wave function.

_

Quantum mechanics (also known as quantum physics, quantum theory, the wave mechanical model, or matrix mechanics), including quantum field theory, is a fundamental theory in physics which describes nature at the smallest – including atomic and subatomic – scales.

Quantum theory’s development began in 1900 with a presentation by Max Planck to the German Physical Society, in which he introduced the idea that energy exists in individual units (which he called “quanta”), as does matter. Further developments by a number of scientists over the following thirty years led to the modern understanding of quantum theory.

The Essential Elements of Quantum Theory:

- Energy, like matter, consists of discrete units, rather than existing solely as a continuous wave.

- Elementary particles of both energy and matter, depending on the conditions, may behave like either particles or waves.

- The movement of elementary particles is inherently random, and, thus, unpredictable.

- The simultaneous measurement of two complementary values, such as the position and momentum of an elementary particle, is inescapably flawed; the more precisely one value is measured, the more flawed will be the measurement of the other value.

Further Developments of Quantum Theory:

Niels Bohr proposed the Copenhagen interpretation of quantum theory, which asserts that a particle is whatever it is measured to be (for example, a wave or a particle) but that it cannot be assumed to have specific properties, or even to exist, until it is measured. In short, Bohr was saying that objective reality does not exist. This translates to a principle called superposition that claims that while we do not know what the state of any object is, it is actually in all possible states simultaneously, as long as we don’t look to check.

The second interpretation of quantum theory is the multiverse or many-worlds theory. It holds that as soon as a potential exists for any object to be in any state, the universe of that object transmutes into a series of parallel universes equal to the number of possible states in which the object can exist, with each universe containing a unique single possible state of that object. Furthermore, there is a mechanism for interaction between these universes that somehow permits all states to be accessible in some way and for all possible states to be affected in some manner. Stephen Hawking and the late Richard Feynman are among the scientists who have expressed a preference for the many-worlds theory.

_

Classical physics, the description of physics existing before the formulation of the theory of relativity and of quantum mechanics, describes nature at ordinary (macroscopic) scale. Most theories in classical physics can be derived from quantum mechanics as an approximation valid at large (macroscopic) scale. Quantum mechanics differs from classical physics in that energy, momentum, angular momentum, and other quantities of a bound system are restricted to discrete values (quantization), objects have characteristics of both particles and waves (wave-particle duality), and there are limits to how accurately the value of a physical quantity can be predicted prior to its measurement, given a complete set of initial conditions (the uncertainty principle).

_

Quantum mechanics gradually arose from theories to explain observations which could not be reconciled with classical physics, such as Max Planck’s solution in 1900 to the black-body radiation problem, and from the correspondence between energy and frequency in Albert Einstein’s 1905 paper which explained the photoelectric effect. Early quantum theory was profoundly re-conceived in the mid-1920s by Erwin Schrödinger, Werner Heisenberg, Max Born and others. The modern theory is formulated in various specially developed mathematical formalisms. In one of them, a mathematical function, the wave function, provides information about the probability amplitude of energy, momentum, and other physical properties of a particle.

_

Many aspects of quantum mechanics are counterintuitive and can seem paradoxical because they describe behavior quite different from that seen at larger scales.

For example, the uncertainty principle of quantum mechanics means that the more closely one pins down one measurement (such as the position of a particle), the less accurate another complementary measurement pertaining to the same particle (such as its speed) must become.

Another example is entanglement, in which a measurement of any two-valued state of a particle (such as light polarized up or down) made on either of two “entangled” particles that are very far apart causes a subsequent measurement on the other particle to always be the other of the two values (such as polarized in the opposite direction).

A final example is superfluidity, in which a container of liquid helium, cooled down to near absolute zero in temperature spontaneously flows (slowly) up and over the opening of its container, against the force of gravity.

_

The building blocks of quantum mechanics:

The birth of quantum mechanics took place over the first 27 years of the twentieth century, to overcome the severe limitations in the validity of classical physics, with the first inconsistency being Planck’s radiation law. Einstein, Debye, Bohr, de Broglie, Compton, Heisenberg, Schrödinger and Dirac, amongst others, were the pioneers in developing the theory of quantum mechanics as we know it today.

The fundamental building blocks of quantum mechanics are:

- Quantisation: energy, momentum, angular momentum and other physical quantities of a bound system are restricted to discrete values (quantised)

- Wave-particle duality: objects are both waves and particles

- Heisenberg principle: the more precise the position of some particle is determined, the less precise its momentum can be known, and vice versa. Thus there is a fundamental limit to the measurement precision of physical quantities of a particle

- Superposition: two quantum states can be added together, and the result is another valid quantum state

- Entanglement: when the quantum state of any particle belonging to a system cannot be described independently of the state of the other particles, even when separated by a large distance, the particles are entangled

- Fragility: by measuring a quantum system we destroy any previous information. From this follows the no-cloning theorem, which states that it is impossible to create an identical copy of an arbitrary unknown quantum state
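Two of the building blocks above, superposition and entanglement, can be illustrated with ordinary lists of amplitudes. The sketch below is a toy model under stated assumptions: states are plain Python lists, the helper names are hypothetical, and the entanglement check uses the standard fact that a two-particle, two-level pure state with amplitudes [a, b, c, d] can be written as a product of two single-particle states exactly when a·d − b·c = 0.

```python
import math

def normalize(state):
    """Rescale a list of amplitudes so the probabilities sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in state))
    return [a / norm for a in state]

def superpose(s1, s2, c1=1.0, c2=1.0):
    """Add two states with weights c1 and c2; the result is again a valid state."""
    return normalize([c1 * a + c2 * b for a, b in zip(s1, s2)])

def probabilities(state):
    """Probability of each outcome: squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def is_product_state(state, tol=1e-12):
    """True if a two-particle state [a, b, c, d] factorizes (is not entangled)."""
    a, b, c, d = state
    return abs(a * d - b * c) < tol

ZERO = [1.0, 0.0]                  # definite state "0"
ONE = [0.0, 1.0]                   # definite state "1"
plus = superpose(ZERO, ONE)        # equal superposition of 0 and 1
print(probabilities(plus))

# Two-particle states, amplitudes ordered as 00, 01, 10, 11.
bell = normalize([1.0, 0.0, 0.0, 1.0])      # (|00> + |11>) / sqrt(2)
product = normalize([1.0, 1.0, 0.0, 0.0])   # |0> combined with an equal superposition
print(is_product_state(bell), is_product_state(product))
```

The entangled state cannot be split into a description of "particle one" and a description of "particle two", which is the sense of the entanglement bullet above.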

_

To understand how things work in the real world, quantum mechanics must be combined with other elements of physics – principally, Albert Einstein’s special theory of relativity, which explains what happens when things move very fast – to create what are known as quantum field theories. Three different quantum field theories deal with three of the four fundamental forces by which matter interacts: electromagnetism, which explains how atoms hold together; the strong nuclear force, which explains the stability of the nucleus at the heart of the atom; and the weak nuclear force, which explains why some atoms undergo radioactive decay.

_

Copenhagen interpretation:

Bohr, Heisenberg, and others tried to explain what these experimental results and mathematical models really mean. Their description, known as the Copenhagen interpretation of quantum mechanics, aimed to describe the nature of reality that was being probed by the measurements and described by the mathematical formulations of quantum mechanics.

The main principles of the Copenhagen interpretation are:

- A system is completely described by a wave function, usually represented by the Greek letter ψ (“psi”). (Heisenberg)

- How ψ changes over time is given by the Schrödinger equation.

- The description of nature is essentially probabilistic. The probability of an event—for example, where on the screen a particle shows up in the double-slit experiment—is related to the square of the absolute value of the amplitude of its wave function. (Born rule, due to Max Born, which gives a physical meaning to the wave function in the Copenhagen interpretation: the probability amplitude)

- It is not possible to know the values of all of the properties of the system at the same time; those properties that are not known with precision must be described by probabilities. (Heisenberg’s uncertainty principle)

- Matter, like energy, exhibits a wave–particle duality. An experiment can demonstrate the particle-like properties of matter, or its wave-like properties; but not both at the same time. (Complementarity principle due to Bohr)

- Measuring devices are essentially classical devices, and measure classical properties such as position and momentum.

- The quantum mechanical description of large systems should closely approximate the classical description. (Correspondence principle of Bohr and Heisenberg)
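For readers who prefer symbols, the second and third principles in the list above are usually written as follows. This is standard textbook notation for a single particle moving in one dimension, not something taken from this article: Ĥ denotes the Hamiltonian (energy) operator, ħ is the reduced Planck constant, and P(x, t) dx is the probability of finding the particle between x and x + dx.

```latex
% Schrödinger equation: how the wave function changes over time
i\hbar \, \frac{\partial}{\partial t}\,\psi(x,t) = \hat{H}\,\psi(x,t)

% Born rule: probability density from the wave function
P(x,t)\,dx = \lvert \psi(x,t) \rvert^{2}\,dx,
\qquad
\int_{-\infty}^{\infty} \lvert \psi(x,t) \rvert^{2}\,dx = 1
```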

_

Uncertainty principle:

Suppose it is desired to measure the position and speed of an object—for example a car going through a radar speed trap. It can be assumed that the car has a definite position and speed at a particular moment in time. How accurately these values can be measured depends on the quality of the measuring equipment. If the precision of the measuring equipment is improved, it provides a result closer to the true value. It might be assumed that the speed of the car and its position could be operationally defined and measured simultaneously, as precisely as might be desired.

In 1927, Heisenberg proved that this last assumption is not correct. Quantum mechanics shows that certain pairs of physical properties, for example position and speed, cannot be simultaneously measured, nor defined in operational terms, to arbitrary precision: the more precisely one property is measured, or defined in operational terms, the less precisely can the other. This statement is known as the uncertainty principle. The uncertainty principle is not only a statement about the accuracy of our measuring equipment, but, more deeply, is about the conceptual nature of the measured quantities—the assumption that the car had simultaneously defined position and speed does not work in quantum mechanics. On a scale of cars and people, these uncertainties are negligible, but when dealing with atoms and electrons they become critical.

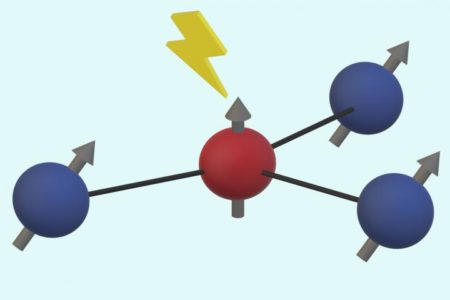

Heisenberg gave, as an illustration, the measurement of the position and momentum of an electron using a photon of light. In measuring the electron’s position, the higher the frequency of the photon, the more accurate is the measurement of the position of the impact of the photon with the electron, but the greater is the disturbance of the electron. This is because from the impact with the photon, the electron absorbs a random amount of energy, rendering the measurement obtained of its momentum increasingly uncertain (momentum is velocity multiplied by mass), for one is necessarily measuring its post-impact disturbed momentum from the collision products and not its original momentum. With a photon of lower frequency, the disturbance (and hence uncertainty) in the momentum is less, but so is the accuracy of the measurement of the position of the impact.

At the heart of the uncertainty principle is not a mystery, but the simple fact that for any mathematical analysis in the position and velocity domains (Fourier analysis), achieving a sharper (more precise) curve in the position domain can only be done at the expense of a more gradual (less precise) curve in the speed domain, and vice versa. More sharpness in the position domain requires contributions from more frequencies in the speed domain to create the narrower curve, and vice versa. It is a fundamental tradeoff inherent in any such related or complementary measurements, but is only really noticeable at the smallest scales, near the size of atoms and elementary particles.

The uncertainty principle shows mathematically that the product of the uncertainty in the position and momentum of a particle (momentum is velocity multiplied by mass) could never be less than a certain value, and that this value is related to Planck’s constant.
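In its usual modern form the bound reads Δx · Δp ≥ ħ/2, where ħ is Planck’s constant divided by 2π. The short sketch below plugs in numbers to show why the effect matters for electrons but not for cars. The constants are the standard SI values; the car’s mass (1000 kg) and the two position uncertainties (one ångström for the electron, one millimetre for the car) are illustrative assumptions of ours, and the function name is hypothetical.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, in joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # electron mass, in kilograms

def min_velocity_uncertainty(mass_kg, delta_x_m):
    """Smallest velocity uncertainty allowed by delta_x * delta_p >= hbar / 2.

    Uses momentum = mass * velocity, so delta_v >= hbar / (2 * m * delta_x).
    """
    return HBAR / (2.0 * mass_kg * delta_x_m)

# Electron localized to about the size of an atom (assumed 1 angstrom).
electron = min_velocity_uncertainty(ELECTRON_MASS, 1e-10)

# Car of assumed mass 1000 kg located to within 1 millimetre.
car = min_velocity_uncertainty(1000.0, 1e-3)

print(f"electron: at least {electron:.3e} m/s")
print(f"car:      at least {car:.3e} m/s")
```

The electron’s velocity is uncertain by hundreds of kilometres per second, while the car’s minimum uncertainty is dozens of orders of magnitude below anything a speed trap could detect.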

_

Wave function collapse:

Wave function collapse means that a measurement has forced or converted a quantum (probabilistic) state into a definite measured value. This phenomenon is seen only in quantum mechanics, not in classical mechanics. For example, before a photon actually “shows up” on a detection screen it can be described only with a set of probabilities for where it might show up. When it does appear, for instance in the CCD of an electronic camera, the time and the space where it interacted with the device are known within very tight limits. However, the photon has disappeared in the process of being captured (measured), and its quantum wave function has disappeared with it. In its place some macroscopic physical change in the detection screen has appeared, e.g., an exposed spot in a sheet of photographic film, or a change in electric potential in some cell of a CCD.
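A toy simulation can make the idea of collapse more tangible. The sketch below is a simplified model, not a description of a real detector: the state is a list of amplitudes over a handful of discrete screen positions, the function name `measure` is hypothetical, and the random choice stands in for the inherently probabilistic outcome. Each outcome is picked with probability equal to the squared magnitude of its amplitude, and afterwards the state is replaced by one that is certain to give the same result again.

```python
import random

def measure(amplitudes, rng=random):
    """Simulate one measurement of a discrete quantum state.

    Returns (index of the observed outcome, collapsed state).
    """
    # Born rule: weight of each outcome is the squared magnitude of its amplitude.
    weights = [abs(a) ** 2 for a in amplitudes]
    outcome = rng.choices(range(len(weights)), weights=weights)[0]
    # After the measurement the state is definite: all weight on the observed outcome.
    collapsed = [0.0] * len(amplitudes)
    collapsed[outcome] = 1.0
    return outcome, collapsed

# A photon spread over four screen positions with unequal amplitudes.
state = [0.5, 0.5, 0.5, 0.5]
rng = random.Random(2020)          # seeded only to make the run repeatable
position, state_after = measure(state, rng)
print("photon detected at position", position)

# Measuring the collapsed state again always gives the same position.
repeat, _ = measure(state_after, rng)
print("second measurement gives", repeat)
```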

_

Eigenstates and eigenvalues:

Because of the uncertainty principle, statements about both the position and momentum of particles can only assign a probability that the position or momentum will have some numerical value. The uncertainty principle also says that eliminating uncertainty about position maximizes uncertainty about momentum, and eliminating uncertainty about momentum maximizes uncertainty about position. A probability distribution assigns probabilities to all possible values of position and momentum. Schrödinger’s wave equation gives wavefunction solutions, the squares of which are probabilities of where the electron might be, just as Heisenberg’s probability distribution does.

In the everyday world, it is natural and intuitive to think of every object being in its own eigenstate. This is another way of saying that every object appears to have a definite position, a definite momentum, a definite measured value, and a definite time of occurrence. However, the uncertainty principle says that it is impossible to measure the exact value for the momentum of a particle like an electron, given that its position has been determined at a given instant. Likewise, it is impossible to determine the exact location of that particle once its momentum has been measured at a particular instant.

Therefore, it became necessary to formulate clearly the difference between the state of something that is uncertain in the way just described, such as an electron in a probability cloud, and the state of something having a definite value. When an object can definitely be “pinned down” in some respect, it is said to possess an eigenstate. As stated above, when the wavefunction collapses because the position of an electron has been determined, the electron’s state becomes an “eigenstate of position”, meaning that its position has a known value, an eigenvalue of the eigenstate of position.

The word “eigenstate” is derived from the German/Dutch word “eigen”, meaning “inherent” or “characteristic”. An eigenstate is the measured state of some object possessing quantifiable characteristics such as position, momentum, etc. The state being measured and described must be observable (i.e. something such as position or momentum that can be experimentally measured either directly or indirectly), and must have a definite value, called an eigenvalue.

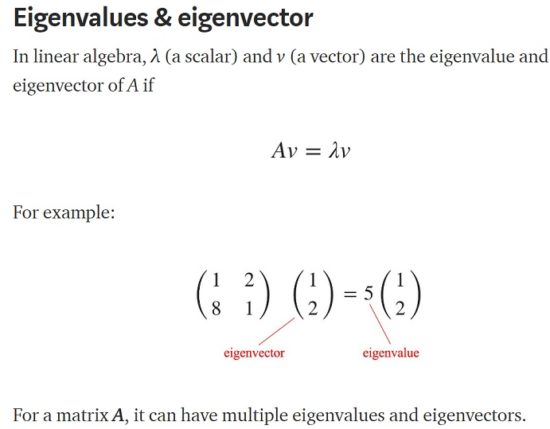

Eigenvalue also refers to a mathematical property of square matrices, a usage pioneered by the mathematician David Hilbert in 1904. Some such matrices are self-adjoint operators, and these represent observables in quantum mechanics, as seen in the accompanying figure.

_

The Pauli exclusion principle:

In 1924, Wolfgang Pauli proposed a new quantum degree of freedom (or quantum number), with two possible values, to resolve inconsistencies between observed molecular spectra and the predictions of quantum mechanics. In particular, the spectrum of atomic hydrogen had a doublet, or pair of lines differing by a small amount, where only one line was expected. Pauli formulated his exclusion principle, stating, “There cannot exist an atom in such a quantum state that two electrons within [it] have the same set of quantum numbers.” A year later, Uhlenbeck and Goudsmit identified Pauli’s new degree of freedom with the property called spin whose effects were observed in the Stern–Gerlach experiment.

_

Dirac wave equation:

In 1928, Paul Dirac extended the Pauli equation, which described spinning electrons, to account for special relativity. The result was a theory that dealt properly with events, such as the speed at which an electron orbits the nucleus, occurring at a substantial fraction of the speed of light. By using the simplest electromagnetic interaction, Dirac was able to predict the value of the magnetic moment associated with the electron’s spin, and found the experimentally observed value, which was too large to be that of a spinning charged sphere governed by classical physics. He was able to solve for the spectral lines of the hydrogen atom, and to reproduce from physical first principles Sommerfeld’s successful formula for the fine structure of the hydrogen spectrum. Dirac’s equations sometimes yielded a negative value for energy, for which he proposed a novel solution: he posited the existence of an antielectron and of a dynamical vacuum. This led to the many-particle quantum field theory.

_

Two-state quantum system:

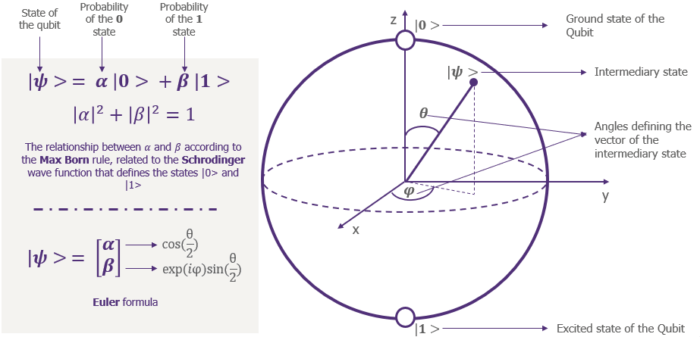

In quantum mechanics, a two-state system (also known as a two-level system) is a quantum system that can exist in any quantum superposition of two independent (physically distinguishable) quantum states. The Hilbert space describing such a system is two-dimensional. Therefore, a complete basis spanning the space will consist of two independent states. Any two-state system can also be seen as a qubit. In quantum computing, a qubit or quantum bit is the basic unit of quantum information—the quantum version of the classical binary bit physically realized with a two-state device. A qubit is a two-state (or two-level) quantum-mechanical system, one of the simplest quantum systems displaying the peculiarity of quantum mechanics. Examples include: the spin of the electron in which the two levels can be taken as spin up and spin down; or the polarization of a single photon in which the two states can be taken to be the vertical polarization and the horizontal polarization. In a classical system, a bit would have to be in one state or the other. However, quantum mechanics allows the qubit to be in a coherent superposition of both states simultaneously, a property which is fundamental to quantum mechanics and quantum computing.
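To make the idea of a qubit concrete, here is a minimal Python sketch. It assumes the qubit is written as a pair of complex amplitudes (alpha, beta) for the two basis states, and that the chance of each measurement result is the squared magnitude of its amplitude. The function names are illustrative only and do not come from any quantum computing library.

```python
import math
import random

def normalize(alpha, beta):
    """Return the amplitudes rescaled so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm

def probabilities(alpha, beta):
    """Probability of reading 0 or 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(alpha, beta, rng=random):
    """Simulate one measurement; the outcome is a single classical bit."""
    p0, _ = probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# Equal superposition of "spin up" (0) and "spin down" (1).
alpha, beta = normalize(1, 1j)
p0, p1 = probabilities(alpha, beta)
print(p0, p1)  # each is close to 0.5

# Repeating the measurement on freshly prepared qubits gives a mix of 0s and 1s.
rng = random.Random(0)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1
print(counts)
```

Each individual measurement returns only one classical bit. The superposition shows up only in the statistics gathered over many identically prepared qubits.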

Two-state systems are the simplest quantum systems that can exist, since the dynamics of a one-state system is trivial (i.e. there is no other state the system can exist in). The mathematical framework required for the analysis of two-state systems is that of linear differential equations and linear algebra of two-dimensional spaces. As a result, the dynamics of a two-state system can be solved analytically without any approximation. The generic behavior of the system is that the wavefunction’s amplitude oscillates between the two states.
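The oscillation between the two states can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two states are coupled by a Hamiltonian H = (omega/2)·sigma_x, with units chosen so that hbar = 1, and the value of omega is arbitrary. For this choice the time evolution has an exact closed form, so no numerical approximation is needed.

```python
import math

def evolve(state, omega, t):
    """Apply U(t) = exp(-i H t) for H = (omega/2) * sigma_x, with hbar = 1.

    state is a pair (c0, c1) of complex amplitudes for the two basis states.
    """
    c0, c1 = state
    c = math.cos(omega * t / 2)
    s = math.sin(omega * t / 2)
    # U = cos(omega t / 2) * I - i sin(omega t / 2) * sigma_x
    return (c * c0 - 1j * s * c1, -1j * s * c0 + c * c1)

omega = 2 * math.pi      # assumed coupling: one full oscillation per unit time
start = (1 + 0j, 0j)     # the system starts entirely in state 0

for step in range(5):
    t = step / 4
    c0, c1 = evolve(start, omega, t)
    print(f"t={t:.2f}  P(0)={abs(c0) ** 2:.3f}  P(1)={abs(c1) ** 2:.3f}")
```

With these assumed values the probability moves completely from state 0 to state 1 after half a time unit and back again after a full one, while the two probabilities always add up to 1.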

_

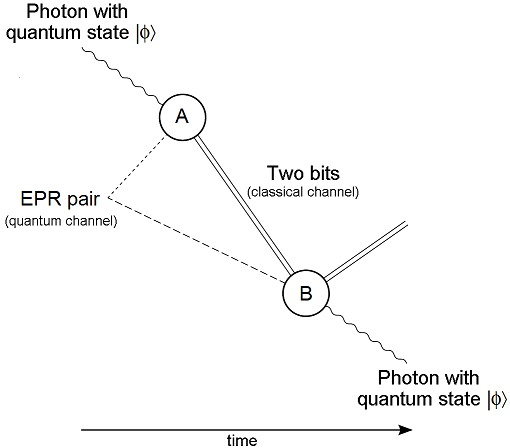

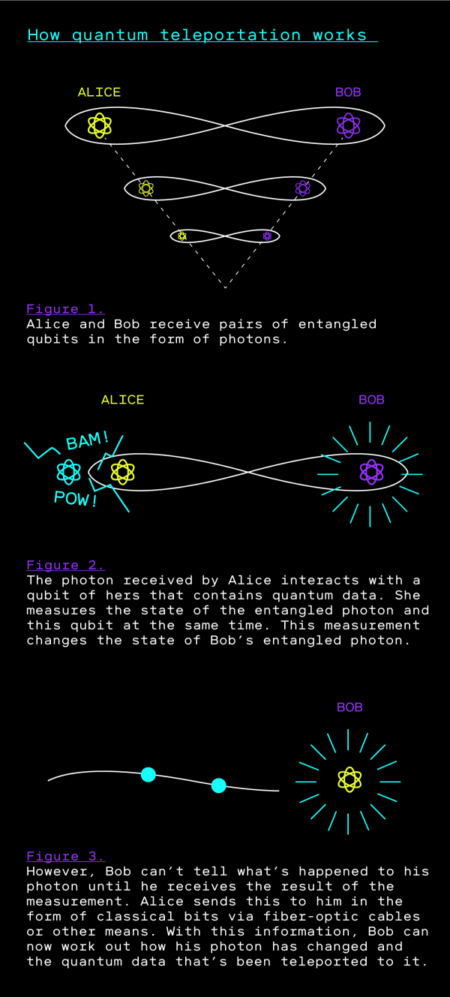

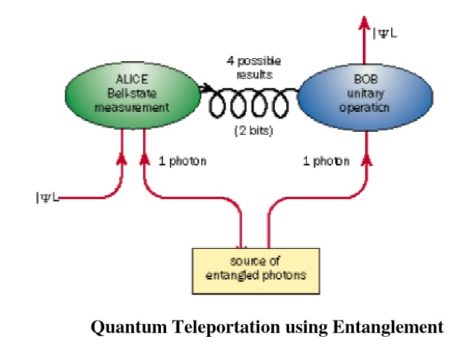

Quantum entanglement:

The Pauli exclusion principle says that two electrons in one system cannot be in the same state. Nature leaves open the possibility, however, that two electrons can have both states “superimposed” over each of them. Nothing is certain until the superimposed waveforms “collapse”. At that instant an electron shows up somewhere in accordance with the probability that is the square of the absolute value of the sum of the complex-valued amplitudes of the two superimposed waveforms. The situation there is already very abstract. A concrete way of thinking about entangled photons, photons in which two contrary states are superimposed on each of them in the same event, is as follows:

Imagine that we have two color-coded states of photons: one state labeled blue and another state labeled red. Let the combination of the red and the blue state appear (in imagination) as a purple state. We consider a case in which two photons are produced as the result of one single atomic event. Perhaps they are produced by the excitation of a crystal that characteristically absorbs a photon of a certain frequency and emits two photons of half the original frequency. In this case, the photons are connected with each other via their shared origin in a single atomic event. This setup results in combined states of the photons. So the two photons come out purple. If the experimenter now performs some experiment that determines whether one of the photons is either blue or red, then that experiment changes the photon involved from one having a combination of blue and red characteristics to a photon that has only one of those characteristics. The problem that Einstein had with such an imagined situation was that if one of these photons had been kept bouncing between mirrors in a laboratory on earth, and the other one had traveled halfway to the nearest star, when its twin was made to reveal itself as either blue or red, that meant that the distant photon now had to lose its purple status too. So whenever it might be investigated after its twin had been measured, it would necessarily show up in the opposite state to whatever its twin had revealed.
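The red/blue thought experiment can be imitated with a short Python sketch. It assumes the photon pair is in an equal superposition of (red, blue) and (blue, red), and it samples joint outcomes with probability equal to the squared amplitude. The colour labels and function name are illustrative. Note that perfect anti-correlation of this kind could also be produced by a classical recipe; the sketch only shows what the statistics of the measured pairs look like.

```python
import math
import random

# Joint state of the two photons: an equal superposition of
# (red, blue) and (blue, red). The labels follow the thought experiment.
amplitudes = {
    ("red", "blue"): 1 / math.sqrt(2),
    ("blue", "red"): 1 / math.sqrt(2),
    ("red", "red"): 0.0,
    ("blue", "blue"): 0.0,
}

def measure_pair(rng):
    """Sample one joint outcome with probability |amplitude|^2."""
    outcomes = list(amplitudes)
    weights = [abs(a) ** 2 for a in amplitudes.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(1)
results = [measure_pair(rng) for _ in range(1000)]
first_red = sum(1 for a, _ in results if a == "red")
always_opposite = all(a != b for a, b in results)
print(first_red, always_opposite)
```

Roughly half the runs show the first photon as red, yet in every run the second photon shows the opposite colour.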

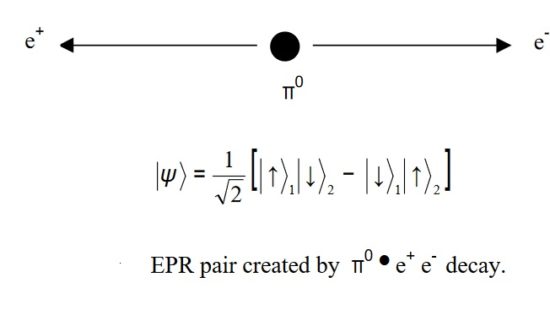

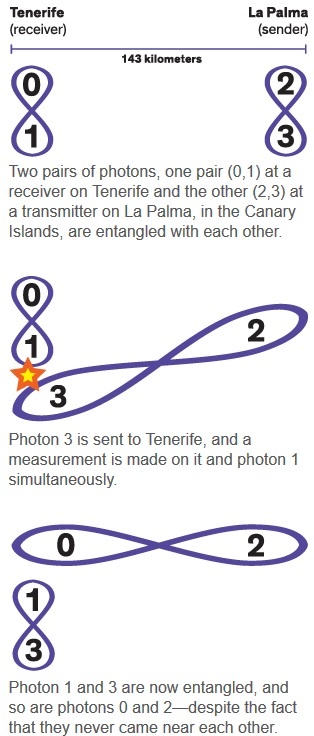

In trying to show that quantum mechanics was not a complete theory, Einstein started with the theory’s prediction that two or more particles that have interacted in the past can appear strongly correlated when their various properties are later measured. He sought to explain this seeming interaction in a classical way, through their common past, and preferably not by some “spooky action at a distance”. The argument is worked out in a famous paper, Einstein, Podolsky, and Rosen (1935; abbreviated EPR), setting out what is now called the EPR paradox. Assuming what is now usually called local realism, EPR attempted to show from quantum theory that a particle has both position and momentum simultaneously, while according to the Copenhagen interpretation, only one of those two properties actually exists and only at the moment that it is being measured. EPR concluded that quantum theory is incomplete in that it refuses to consider physical properties that objectively exist in nature. (Einstein, Podolsky, & Rosen 1935 is currently Einstein’s most cited publication in physics journals.) In the same year, Erwin Schrödinger used the word “entanglement” and declared: “I would not call that one but rather the characteristic trait of quantum mechanics.” In 1964 the Irish physicist John Stewart Bell showed theoretically that any local “hidden variables” theory of the kind favored by Einstein, Podolsky, and Rosen must obey certain inequalities that quantum mechanics violates. Since later experiments found those inequalities violated, in agreement with quantum mechanics, most physicists have accepted entanglement as a real phenomenon. However, there is some minority dispute. The Bell inequalities remain the most powerful challenge to Einstein’s claims.
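A Bell inequality can be checked numerically with a small Python sketch. The version used here is the CHSH form, which is one commonly used Bell inequality and is not named in the text above: any local hidden-variable model must give |S| ≤ 2. The sketch assumes two spin-1/2 particles in the singlet state and one standard choice of detector angles; both are illustrative assumptions.

```python
import math

def observable(theta):
    """Spin measurement along an axis at angle theta in the x-z plane:
    cos(theta) * sigma_z + sin(theta) * sigma_x, with outcomes +1 and -1."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]

def kron(a, b):
    """Kronecker (tensor) product of two 2x2 matrices, giving a 4x4 matrix."""
    return [[a[i][j] * b[k][l] for j in range(2) for l in range(2)]
            for i in range(2) for k in range(2)]

def expectation(matrix, state):
    """<state| matrix |state> for a real-valued state vector."""
    applied = [sum(row[j] * state[j] for j in range(4)) for row in matrix]
    return sum(state[i] * applied[i] for i in range(4))

# Singlet state (|01> - |10>) / sqrt(2) in the basis |00>, |01>, |10>, |11>.
singlet = [0.0, 1 / math.sqrt(2), -1 / math.sqrt(2), 0.0]

def correlation(a, b):
    """Average product of the two outcomes for detector angles a and b."""
    return expectation(kron(observable(a), observable(b)), singlet)

# Assumed detector settings: a standard choice that maximizes the violation.
a1, a2 = 0.0, math.pi / 2
b1, b2 = math.pi / 4, 3 * math.pi / 4
S = (correlation(a1, b1) - correlation(a1, b2)
     + correlation(a2, b1) + correlation(a2, b2))
print(abs(S), 2 * math.sqrt(2))
```

The quantum prediction for |S| comes out at 2√2, about 2.83, which exceeds the limit of 2 that any local hidden-variable account would have to respect.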

_

It is in the domain of information technology, however, that we might end up owing quantum mechanics our greatest debt. Researchers hope to use quantum principles to create an ultra-powerful computer that would solve problems that conventional computers cannot — from improving cybersecurity and modeling chemical reactions to formulating new drugs and making supply chains more efficient. This goal could revolutionize certain aspects of computing and open up a new world of technological possibilities. Thanks to advances at universities and industry research centers, a handful of companies have now rolled out prototype quantum computers, but the field is still wide open on fundamental questions about the hardware, software and connections necessary for quantum technologies to fulfil their potential.

______

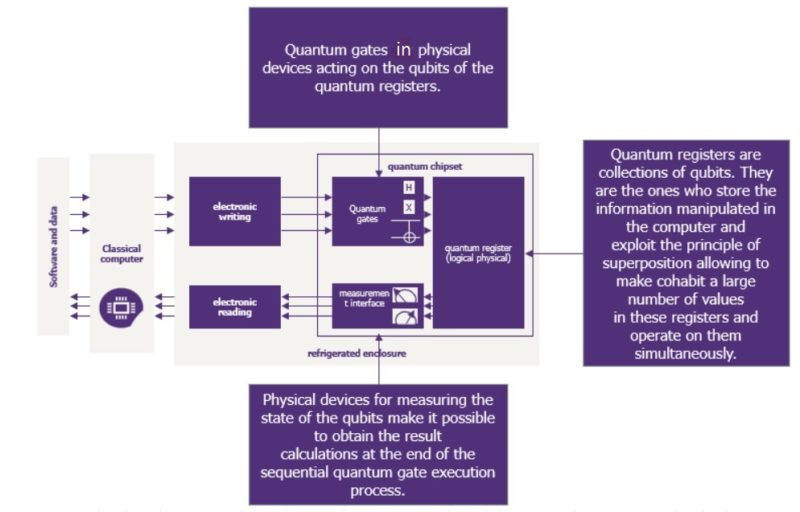

Classical to Quantum Computation:

Computing has revolutionized information processing and management. As far back as Charles Babbage, it was recognized that information could be processed with physical systems, and more recently (e.g. the work of Rolf Landauer) that information must be represented in physical form and is thus subject to physical laws. The physical laws relevant to the information processing system are important to understanding the limitations of computation. Traditional computing devices adhere to the laws of classical mechanics and are thus referred to as “classical computers.” Proposed “quantum computers” are governed by the laws of quantum mechanics, leading to a dramatic difference in the computational capacity.

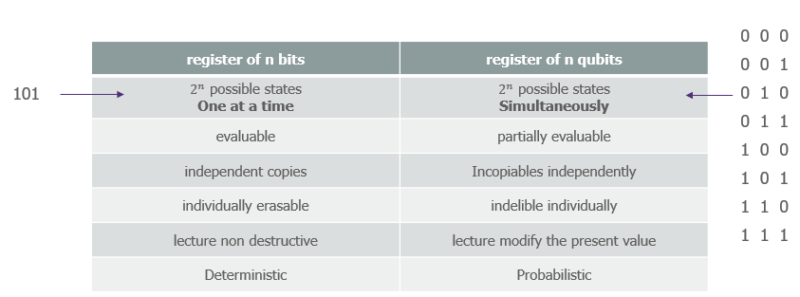

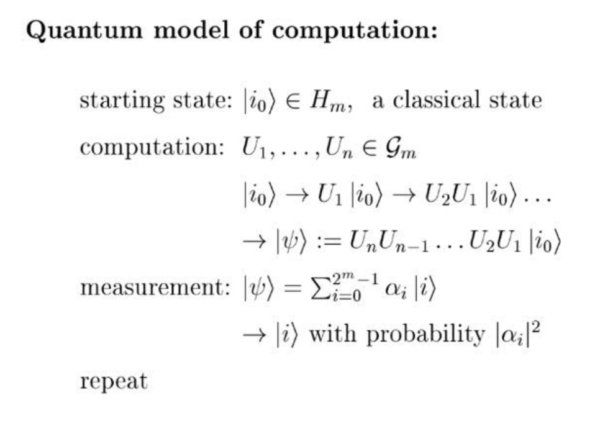

Fundamentally, quantum mechanics adds features that are absent in classical mechanics. To begin, certain physical quantities are “quantized,” i.e. they come in discrete units that cannot be subdivided. For example, light is quantized: light of a given frequency carries its energy in indivisible units called photons, and a detector registers either a whole photon or nothing. Quantum mechanics further requires physical states to evolve in such a way that cloning an arbitrary, unknown state into an independent copy is not possible. This is exploited in quantum cryptography to prevent information from being copied undetected. Furthermore, quantum mechanics describes systems in terms of superpositions that allow multiple distinguishable inputs to be processed simultaneously, though only one can be observed at the end of processing, and the outcome is generally probabilistic in nature. Finally, quantum mechanics allows for correlations that are not possible to obtain in classical physics. Such correlations include what is called entanglement.
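The impossibility of cloning can be illustrated, though not proved, with one candidate “copying machine”. The Python sketch below assumes the copier is a CNOT gate acting on the unknown qubit and a blank qubit set to 0. It compares the gate’s output with the state a true copier would have to produce. The function names are illustrative.

```python
import math

def cnot_copy(alpha, beta):
    """Apply CNOT to |psi>|0>, where |psi> = alpha|0> + beta|1>.

    Returns amplitudes in the order |00>, |01>, |10>, |11>.
    """
    # CNOT flips the second qubit only when the first is 1,
    # so |00> stays |00> and |10> becomes |11>.
    return [alpha, 0.0, 0.0, beta]

def ideal_clone(alpha, beta):
    """Amplitudes of |psi>|psi>, the state a true copier would output."""
    return [alpha * alpha, alpha * beta, beta * alpha, beta * beta]

def overlap(u, v):
    """Squared magnitude of the inner product of two state vectors."""
    return abs(sum(complex(x).conjugate() * y for x, y in zip(u, v))) ** 2

s = 1 / math.sqrt(2)
inputs = {"|0>": (1.0, 0.0), "|1>": (0.0, 1.0), "|+>": (s, s)}
for name, (alpha, beta) in inputs.items():
    f = overlap(ideal_clone(alpha, beta), cnot_copy(alpha, beta))
    print(name, round(f, 3))
```

The gate copies the two basis states perfectly, but for the equal superposition the overlap with a true copy drops to one half: instead of two independent copies, it produces an entangled pair. The no-cloning theorem says that every other candidate copier fails in some similar way.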

_

Classical machines cannot necessarily carry out every computation efficiently. Let’s say you wanted to understand something like the chemical behavior of a molecule. This behavior depends on the behavior of the electrons in the molecule, which exist in a superposition of many classical states. Making things messier, the quantum state of each electron depends on the states of all the others, owing to the quantum-mechanical phenomenon known as entanglement. Classically calculating these entangled states in even very simple molecules can become a nightmare of exponentially increasing complexity.

In order to describe a molecule with 300 atoms, even if each atom is crudely treated as having only two possible states, we would need to keep track of 2 to the 300th power (about 2 × 10^90) classical configurations, which is more than the estimated number of atoms in the observable universe. And that is only to describe the molecule at a particular moment. To run it in a simulation would require us to build another few universes to supply all the material needed.

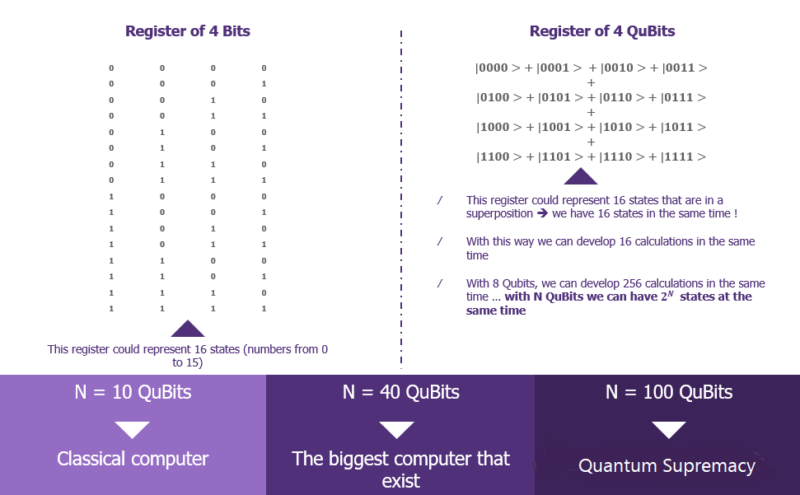

A quantum computer, by contrast, can deal with the intertwined fates of the electrons under study by superposing and entangling its own quantum bits. This enables the computer to process extraordinary amounts of information. Each single qubit you add doubles the number of states the system can simultaneously store: two qubits can store four states, three qubits can store eight states, and so on. Thus, you might need just 50 entangled qubits to model quantum states that would require exponentially many classical bits to encode, on the order of 2 to the 50th power, or about 1.126 quadrillion. A quantum machine could therefore make the classically intractable problem of simulating large quantum-mechanical systems tractable, or so it appeared. “Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical,” the physicist Richard Feynman famously quipped in 1981. “And by golly it’s a wonderful problem, because it doesn’t look so easy.”
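The doubling rule is easy to check with a few lines of Python. The sketch below counts the complex amplitudes in a general n-qubit state and estimates the memory a classical computer would need to hold them. The figure of 16 bytes per amplitude (two 64-bit floating-point numbers) and the figure of 10^80 atoms in the observable universe are assumptions, the latter being a commonly quoted rough estimate.

```python
def amplitudes_needed(n_qubits):
    """Number of complex amplitudes in a general n-qubit state vector."""
    return 2 ** n_qubits

def memory_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a full state vector, assuming two 64-bit floats per amplitude."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude

for n in (2, 3, 50):
    print(n, amplitudes_needed(n))

# 2**50 bytes is one pebibyte (PiB).
print(memory_bytes(50) / 2 ** 50, "PiB for 50 qubits")

# Compare 2 to the 300th power with a rough estimate of atoms in the universe.
print(amplitudes_needed(300) > 10 ** 80)
```

Under these assumptions, storing the full state of 50 qubits already takes 16 pebibytes, and every additional qubit doubles that requirement.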

_

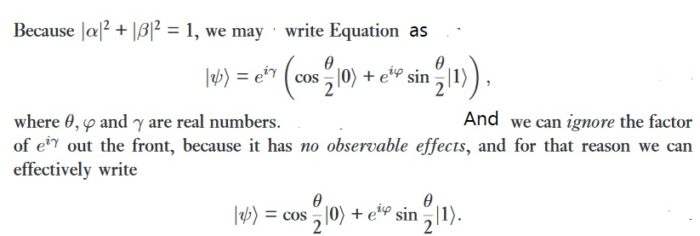

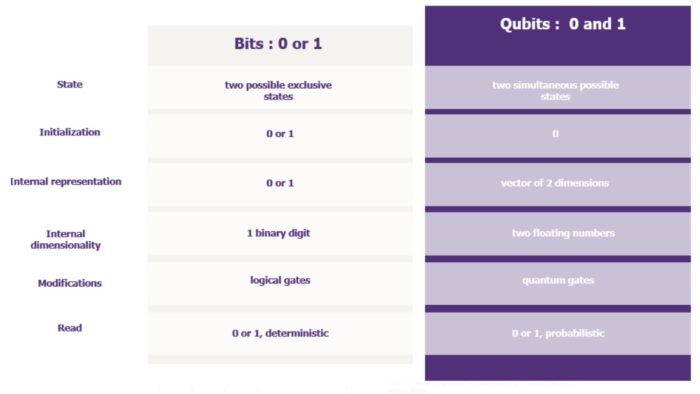

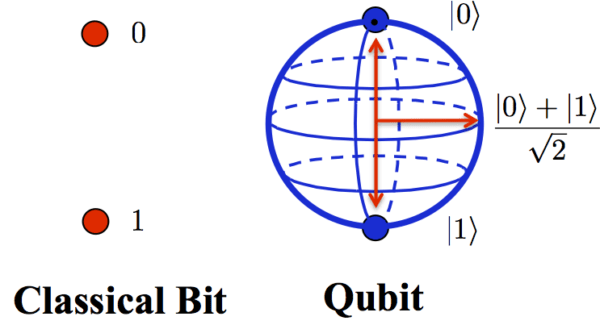

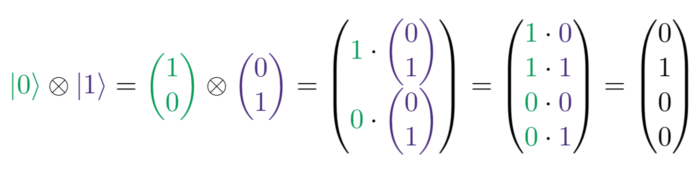

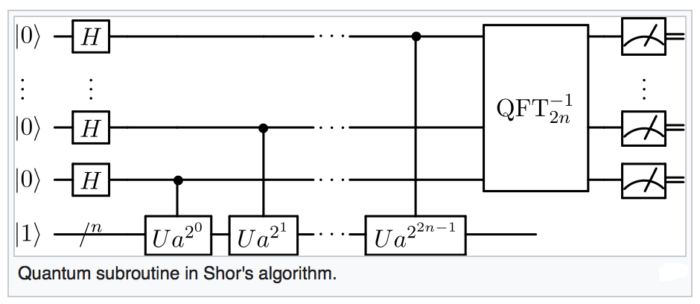

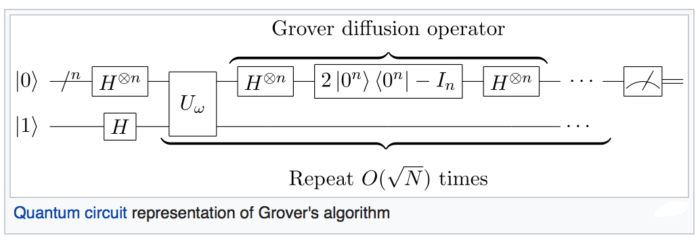

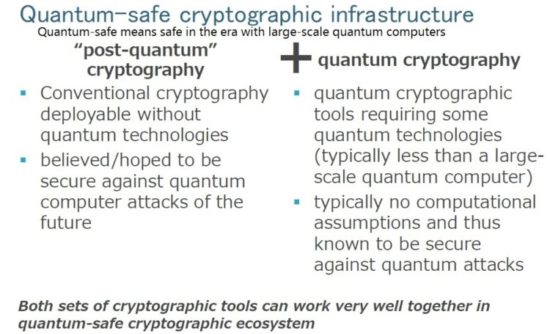

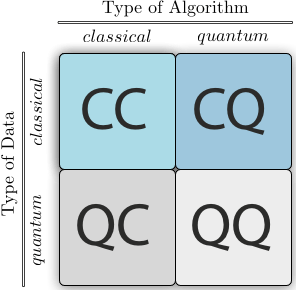

Classical computing relies, at its most fundamental level, on principles expressed by Boolean algebra. It usually operates with seven basic logic gates (AND, OR, NOT, NAND, NOR, XOR and XNOR), though it is possible to get by with only three operations (AND, NOT, and COPY). Data must be processed in an exclusive binary state at any point in time: either 0 (off / false) or 1 (on / true). These values are binary digits, or bits. Each of the millions or billions of transistors at the heart of a computer can be in only one state at any point. While the time that each transistor needs to be in either 0 or 1 before switching states is now measurable in billionths of a second, there is still a limit as to how quickly these devices can be made to switch state. As we progress to smaller and faster circuits, we begin to reach the physical limits of materials and the threshold for classical laws of physics to apply. Beyond this, the quantum world takes over, which opens a potential as great as the challenges that are presented.
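The claim that AND, NOT and COPY are enough can be tried out in Python. This sketch models each bit as the integer 0 or 1 and treats COPY as fan-out, meaning one wire feeding two inputs. The other gates are then built only from these three. The function names are illustrative.

```python
def AND(a, b):
    return a & b

def NOT(a):
    return 1 - a

def COPY(a):
    """Fan-out: one input wire becomes two identical output wires."""
    return a, a

def OR(a, b):
    # De Morgan: a OR b = NOT(NOT a AND NOT b)
    return NOT(AND(NOT(a), NOT(b)))

def NAND(a, b):
    return NOT(AND(a, b))

def XOR(a, b):
    # Each input is used twice, so it is copied first.
    a1, a2 = COPY(a)
    b1, b2 = COPY(b)
    return AND(OR(a1, b1), NAND(a2, b2))

def half_adder(a, b):
    """Add two bits; returns (sum, carry)."""
    a1, a2 = COPY(a)
    b1, b2 = COPY(b)
    return XOR(a1, b1), AND(a2, b2)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, OR(a, b), XOR(a, b), half_adder(a, b))
```

The half adder at the end is the first building block of binary arithmetic, which gives a feel for how a whole classical processor can grow out of a handful of Boolean operations.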