Dr Rajiv Desai

An Educational Blog

NET NEUTRALITY

_

_

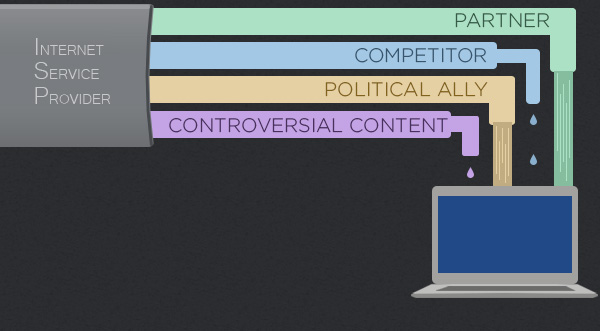

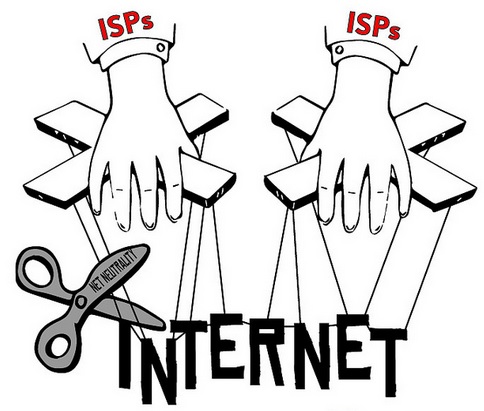

I am reminded of Abraham Lincoln’s remark: “The world has never had a good definition of the word liberty. We all declare for liberty, but in using the same word we do not mean the same thing.” Substitute ‘net neutrality’ for ‘liberty’, and that’s where we are today. The Internet has unleashed innovation, enabled growth, and inspired freedom more rapidly and extensively than any other technological advance in human history. Its independence is its power. Net neutrality means that internet service providers (ISPs) should treat all data on the internet equally. ISPs have the structural capacity to determine how information is transmitted over the internet and the speed at which it is delivered. And today’s network operators, principally large telephone and cable companies, have an economic incentive to extend their control over the physical infrastructure of the internet into control over internet content. If they went about it in the wrong way, these companies could institute changes that limit the free flow of information over the internet in a number of troubling ways. Network operators could prioritize the transmission of some content (their own, for example) over material produced by competitors. If this were allowed, web companies would lose revenues that they could otherwise devote to improving old products and developing new ones. Worse yet, smaller content providers who can now capitalize on the two-way nature of the internet, whether online stores or forums for democratic discourse, might be unable to secure quality service online. An entrepreneur’s fledgling company should have the same chance to succeed as established corporations, and access to a high school student’s blog shouldn’t be unfairly slowed down to make way for advertisers with more money. 
At the core of the principle of net neutrality is thus the idea that all content on the internet should be accessible in a fully equitable way, and that once an internet user has accessed that content, he should be able to engage with it in the same way that he would engage with any other content on the internet. Allowing broadband carriers to control what people see and do online would fundamentally undermine the principles that have made the internet such a success. On the other hand, to be honest, there is no absolute neutrality. The world is neither neutral nor equal. Umpires in a game of cricket were perceived to be biased, and so we now have neutral umpires from countries not playing in the match. Humans have always been subjective. They have their own positions, opinions and priorities. So net neutrality cannot be seen in isolation from the entire gamut of human behaviour; it has to be approached by combining different views and opinions.

________

Internet terminology, abbreviations and synonyms:

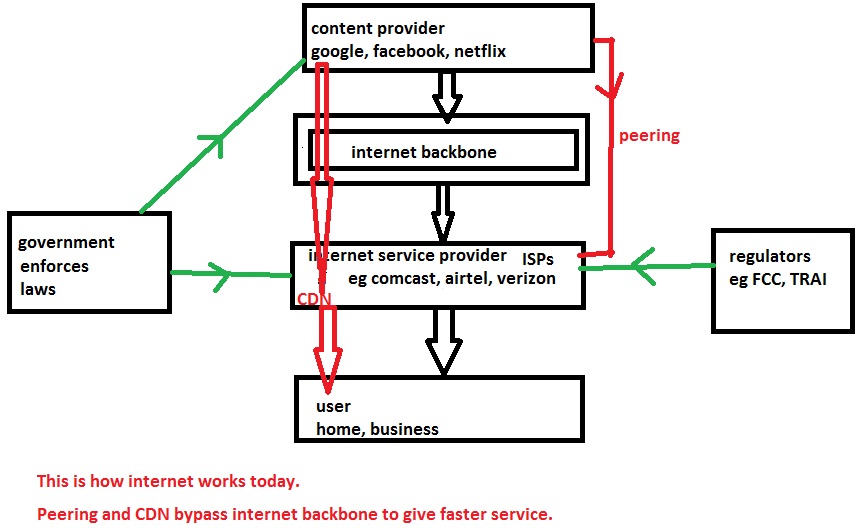

Internet Backbone:

The collection of cables and data centers that make up the core of the internet. This is operated not by a single operator but by many independent companies spread across the globe.

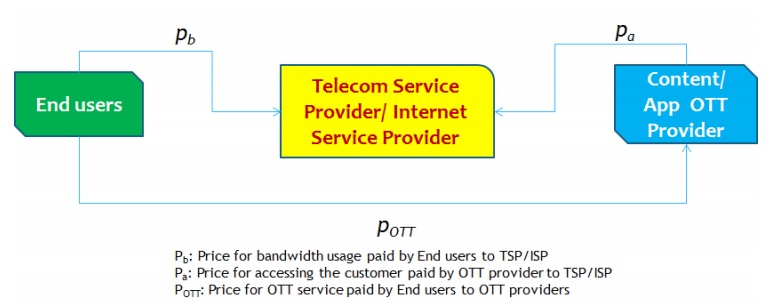

Internet Service Provider (ISP):

A company, such as Comcast, Verizon, Airtel or Tata Docomo, that plugs into the backbone and then provides internet connections to homes and businesses. An ISP is also known as a TSP (telecom service provider), telco, broadband carrier, network operator, internet access provider or platform operator. An ISP provides internet services to users via cable or wireless connections.

Access ISP = last mile ISP = eyeball ISP = ISP that provides internet access to user.

Content Provider:

Companies such as Google, Facebook, and Netflix that provide the webpages, videos, and other content that moves across the internet. My website www.drrajivdesaimd.com is also a content provider. A content provider is anyone who has a website that delivers content to internet users. Content and service providers (CSPs) offer a wide range of applications and content to the mass of potential consumers.

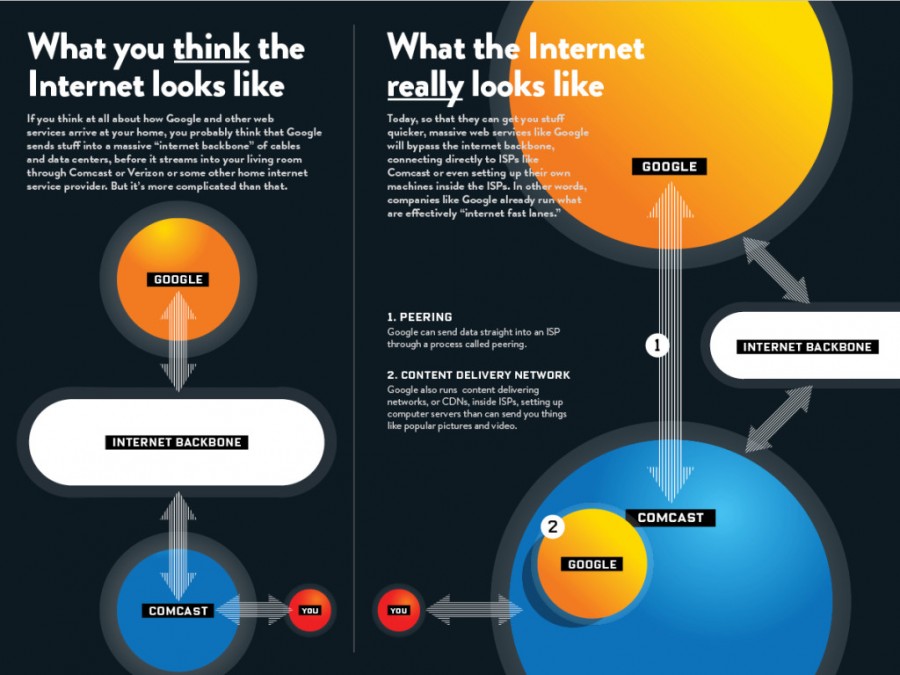

Peering:

Where one internet operation connects directly to another so that they can trade traffic. This could be a connection between an ISP such as Comcast and an internet backbone provider such as Level 3. But it could also be a direct connection between an ISP and a content provider such as Google.

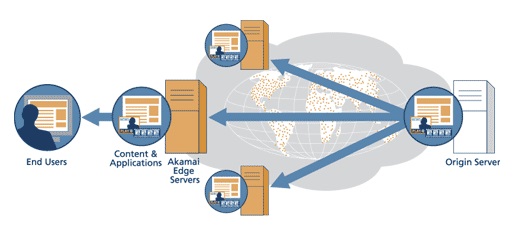

Content Delivery Network (CDN):

A network of computer servers set up inside an ISP that delivers popular photos, videos, and other content. These servers can deliver this content faster to home users because they’re closer to home users. Companies such as Akamai and Cloudflare run CDNs that anyone can use. But content providers such as Google and Netflix now run their own, private CDNs as well.

Regulator:

The FCC (Federal Communications Commission of the U.S.) and TRAI (Telecom Regulatory Authority of India) are examples of regulators that regulate ISPs.

___

ISP = internet service provider

CSP = content & service provider

IU = internet user = user = consumer

NN = net neutrality

IP = internet protocol

TCP = transmission control protocol

VoIP = voice over internet protocol

Kbps = kilobits per second

Mbps = megabits per second = 1000 Kbps

Gbps = gigabits per second = 1000 Mbps

QoS = quality of service

CDN = content delivery network

P2P = peer-to-peer file sharing

SMS = short message service

MMS = multimedia messaging service

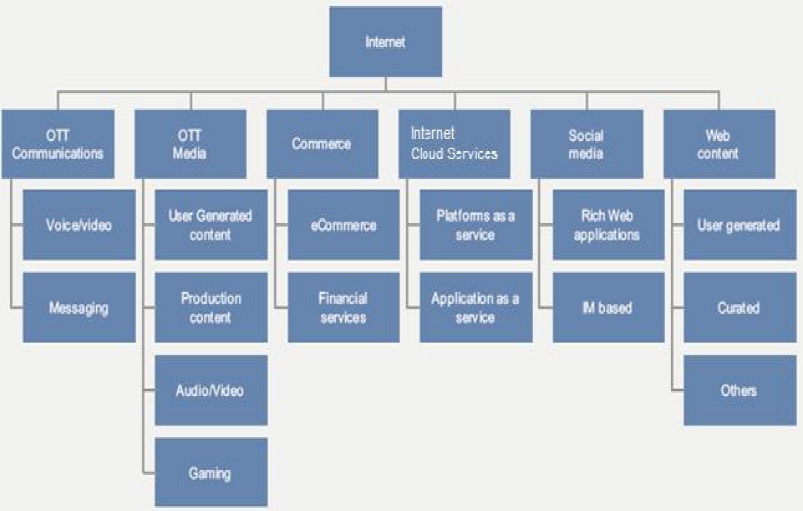

OTT = over the top services

BE = best effort

LAN = local area network

WAN = wide area network

WLAN = wireless local area network

DSL = digital subscriber line

Packets = datagrams

IPTV = internet protocol television

_____

Who’s an Internet user?

A user is a pretty broad term to describe someone who uses the Internet, so let’s take a closer look at what “user” means. A user can be a person, a small business, a local, state or national government agency, or a large organization such as the U.S. Government, AT&T, Google, Microsoft, or Facebook. As this wide range of Internet users shows, an organization that makes laws, sets tariffs, owns portions of the cables that make up the Internet, or has the money to buy faster speeds and pay for larger amounts of data could obtain an advantage over a smaller organization or user. In addition to size, governments of certain countries restrict both who is allowed to use the Internet and what users can do when using it. Some countries have tightly controlled Internets within their borders, and net neutrality is sometimes used more broadly to include the freedom to send and receive data without government restrictions.

_____

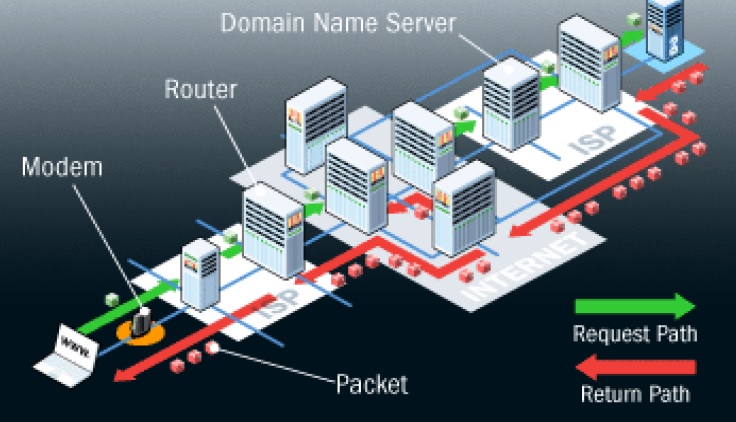

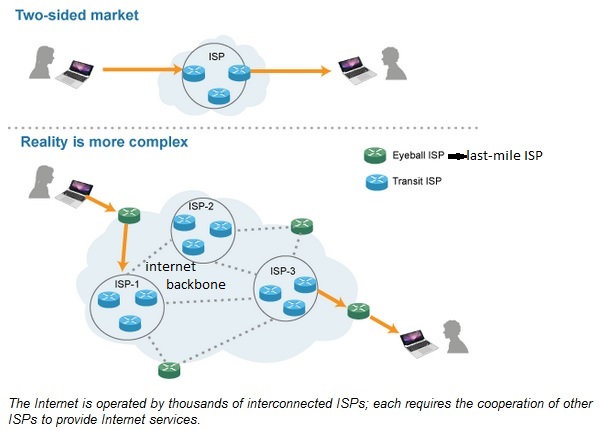

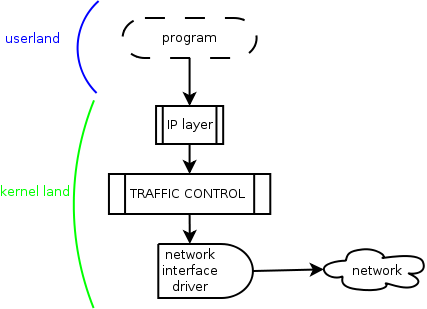

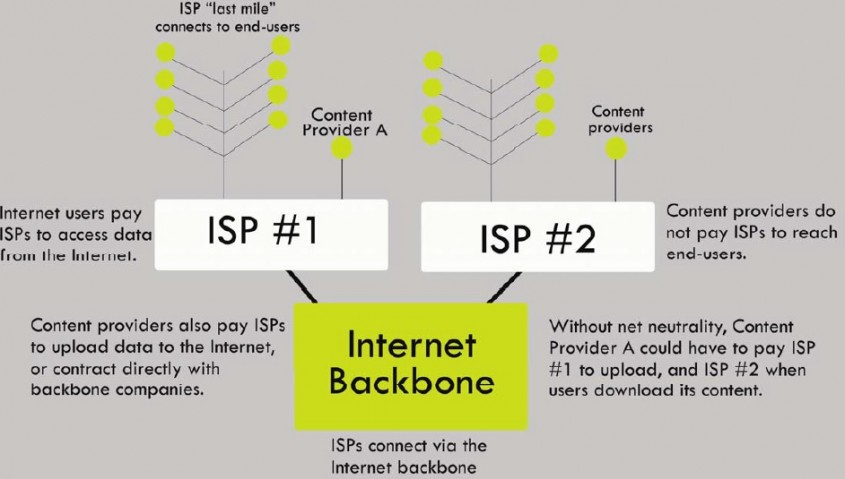

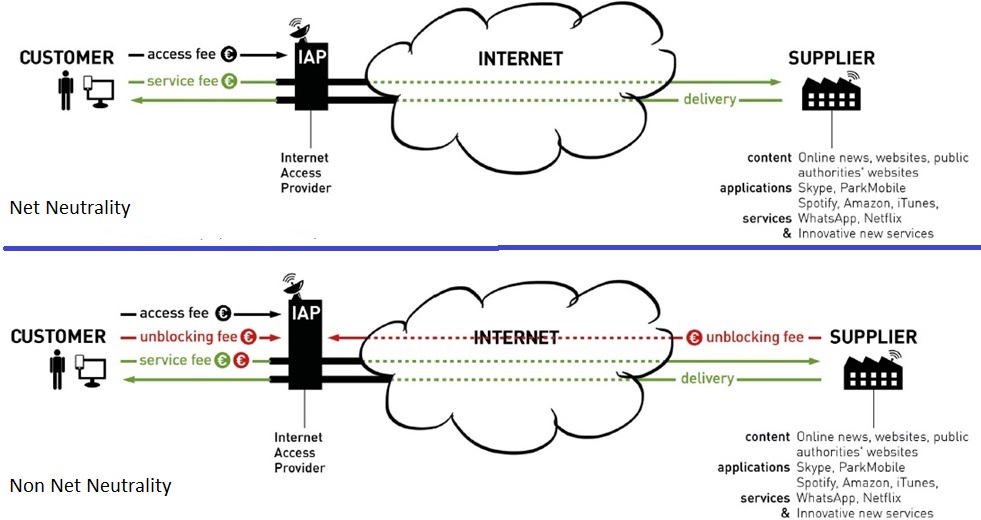

The figure below depicts how internet works today. To understand net neutrality and how ISPs interfere to circumvent net neutrality, this figure must be memorised:

___

There are a lot of emotional terms used to describe various aspects of what makes the melting pot of the neutrality debate. For example, censorship or black-holing (where route filtering, fire-walling and port blocking might say what is happening in less insightful way); free-riding is often bandied about to describe the business of making money on the net (rather than overlay service provision); monopolistic tendencies, instead of the natural inclination of an organisation that owns a lot of kit that they’ve sunk capital into, to want to make revenue from it!

______

Growth of internet:

As the flood of data across the internet continues to increase, there are those who say that sometime soon it is going to collapse under its own weight. Back in the early 90s, those of us who were online were just sending text e-mails of a few bytes each. Traffic across the main US data lines was estimated at a few terabytes a month, steadily doubling every year. But the mid 90s saw the arrival of picture-rich websites and the invention of the MP3. Suddenly each net user wanted megabytes of pictures and music, and the monthly traffic figure exploded. For the next few years we saw steadier growth, with traffic again roughly doubling every year. But since 2003, we have seen another change in the way we use the net. The YouTube generation wants to stream video and download gigabytes of data in one go. In one day, YouTube sends data equivalent to 75 billion e-mails, so it’s clearly very different. The network is growing up and is starting to get more capacity than it ever had, but it is a challenge. Video is real-time; it cannot tolerate mistakes or errors. E-mail can be a little slow. You wouldn’t notice if it took 11 seconds rather than 10, but you would notice that delay on a video.

_______

_______

Introduction to net neutrality:

The Internet owes much of its success to the fact that it is open and easily accessible, provided that the user has an Internet connection. Any content provider can put its content on the internet and test its ideas and their relative value in the marketplace. The required investment, such as buying a domain name, renting space on a server and implementing an application or software, has been relatively low. As a result, new services have been made available to consumers: browsing, mailing, Peer-to-Peer (P2P), instant messaging, Internet telephony (Voice over Internet Protocol ‘VoIP’), videoconferencing, online gaming, video streaming, etc. This development has taken place mainly on a commercial basis without any regulatory intervention.

_

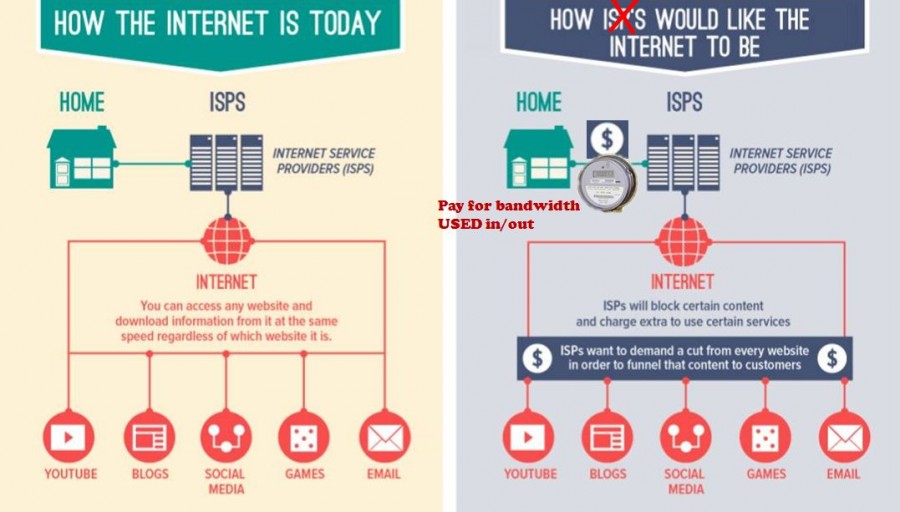

Net neutrality is the principle that all data on the internet is equal, and must be treated equally with no discrimination on the basis of content, user or design by governments and Internet Service Providers (ISPs). It is also the principle that all data on the internet is transported on a best-effort basis, without discrimination by origin or service. Under this principle, consumers can make their own choices about what applications and services to use and are free to decide what lawful content they want to access, create, or share with others… Once you’re online, you don’t have to ask permission or pay tolls to broadband providers to reach others on the network. If you develop an innovative new website, you don’t have to get permission to share it with the world. For example, Times is a widely popular online newspaper, while Mirror has comparatively fewer visitors to its website. Right now, if Mirror wanted to boost its page views, it would have to write more engaging stories and find ways to share its content so that more people read it. It is not allowed to make deals with ISPs to charge customers less money if they visit the Mirror website. Net neutrality means that ISPs should bill you for the amount of bandwidth you have consumed, and not for which website you visited. Put another way, all packets of data over the internet should be transmitted equally, without discrimination. So, for example, net neutrality ensures that my blog can be accessed just as quickly as, say, the BBC website. Essentially, it prevents ISPs from discriminating between sites and organisations, whereby those with the deepest pockets can pay to get in the fast lane whilst the rest have to contend with the slow lane. Instead, every website is treated equally, preventing the big names from delivering their data faster than a small independent online service. 
This ensures that no one organisation can deliver its data any quicker than anyone else, enabling a fair and open playing field that encourages innovation and diversity in the range of information online. The principles of net neutrality are effectively the reason why we have a (reasonably) diverse online space that enables anyone to create a website and reach a large number of people. Network neutrality is the idea that Internet service providers must allow customers equal access to content and applications regardless of the source or nature of the content. Presently the Internet is indeed neutral: all Internet traffic is treated equally on a first-come, first-served basis by Internet backbone owners. The Internet is neutral because it was built on phone lines, which are subject to ‘common carriage’ laws. These laws require phone companies to treat all calls and customers equally. They cannot offer extra benefits to customers willing to pay higher premiums for faster or clearer calls, a model known as tiered service.

_

Net neutrality is not a new concept relative to the age of the Internet; its roots go back to the Internet’s founders. Net neutrality refers to a guiding principle that preserves the free and open Internet with no discrimination. It means that an Internet Service Provider (ISP) cannot discriminate in the speed of the connection, or the lack of one, to one content provider versus another (Eudes 2008). When the Internet was first invented, its founders wanted to be sure that it would provide a safe haven for the transportation of information without any biases. They wanted to ensure that all people had a consistent way to use the Internet, regardless of their connection and social status (Margulius, 2003). Net neutrality has two polarized factions: those who are in favor, and those who are not. On this topic there is no middle ground. Those in favor of net neutrality include organizations like Microsoft, Google, and other content providers. Those against net neutrality are generally telecommunication network organizations and/or ISPs (Owen 2007). Network neutrality, or open inter-working, means that in accessing the World Wide Web, one is in full control over how to go online, where to go and what to do, as long as these are lawful. So firms that provide Internet services should treat all lawful Internet content in a neutral manner. It also requires such companies not to charge differentially by user, content, platform, site, application or mode of communication. These are also the founding principles of the Internet and what has made it the largest and most diverse platform for expression in recent history.

_

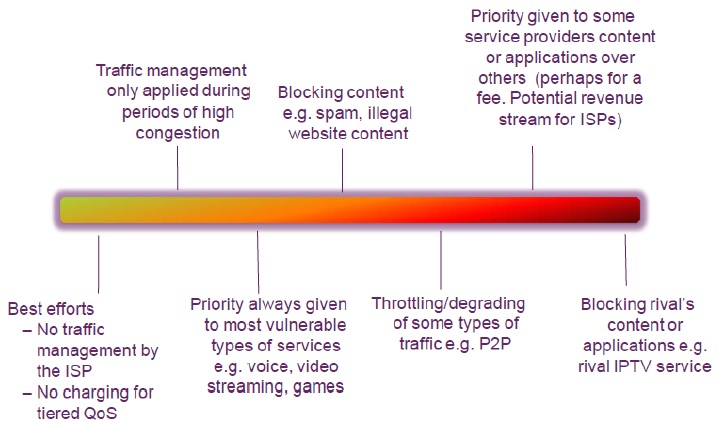

Net neutrality is when an ISP treats all content on the internet neutrally, and does not prioritize one over the other. ISPs want to charge content companies because the money makes their shareholders happy, and also because they believe they have the right to do so when a certain content provider (e.g. Netflix) takes up the majority of the bandwidth from their data centers. Companies are concerned because this would give ISPs free rein to slow down any content they please and demand money to bring it back to normal speed. Whether you’re accessing How-To Geek, Google, or a tiny website running on shared hosting somewhere, your Internet service provider treats these connections equally and forwards the data along without prioritizing any one party. Without net neutrality, your Internet service provider could prioritize data from Google, charging them for the privilege. They could throttle Netflix while providing you with unlimited bandwidth to stream videos from their own video-streaming service. They could restrict the bandwidth available to VoIP applications and encourage you to keep paying for a phone line. They could throttle connections to websites run by startups and other individuals that haven’t signed a contract with the Internet service provider to pay for priority access. These actions would all be violations of net neutrality. However, by and large, Internet service providers don’t violate net neutrality in this way. They just forward packets along; that’s the way the Internet has worked, and it has given us the Internet we have today.

_

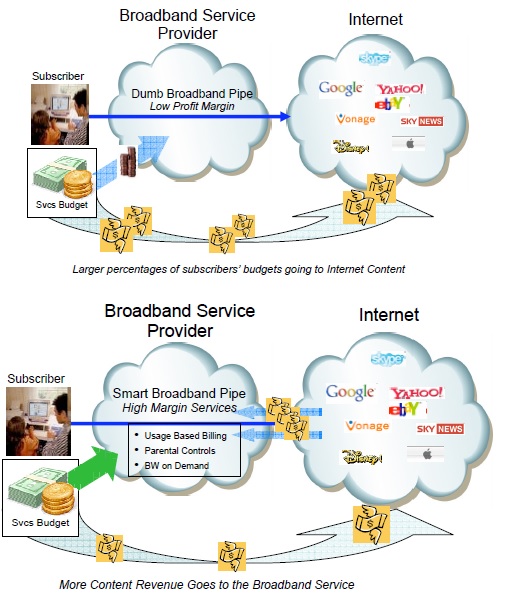

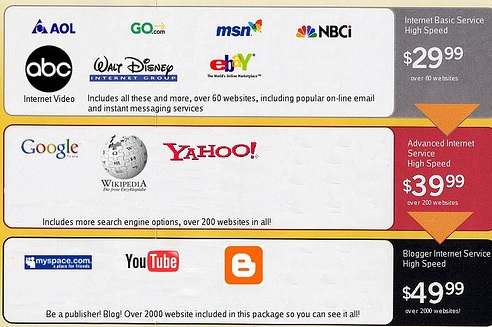

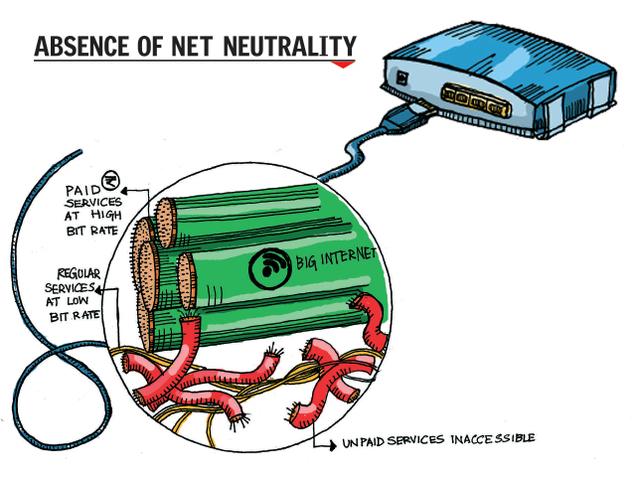

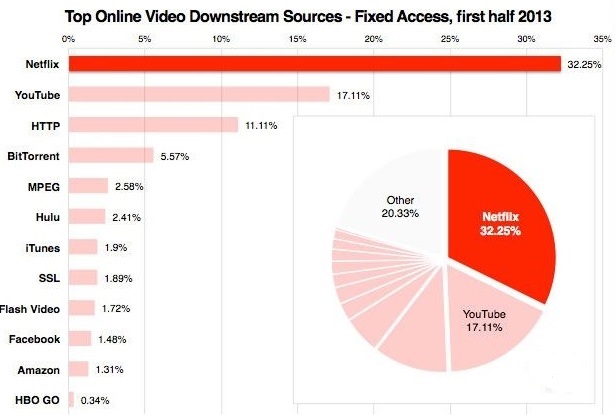

The figure below shows how ISPs would like the internet to be without net neutrality:

_

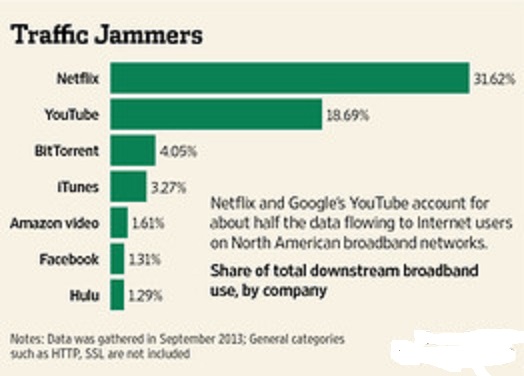

One percent of the world’s population controls almost 50 percent of the world’s wealth, according to the poverty eradication nonprofit Oxfam. Advocates of net neutrality worry that loosening the rules for ISPs will result in a one-percent version of the Internet. Here’s how it could happen. In 2004, Internet traffic was more or less equally distributed across thousands of Web companies. Just 10 years later, half of all Internet traffic originated from only 30 companies. The top three websites by daily unique visitors and page views are Google, Facebook and YouTube. In terms of data, Netflix and YouTube hog more than half of all downstream traffic in North America. That means one out of every two bytes of data traveling across the Internet is streaming video from Netflix or YouTube. If the distribution of Internet traffic is so out of whack now, imagine what it would be like if ISPs were given the green light to give further preferential treatment to the biggest players. Would there be any bandwidth left for the 99 percent — independent video producers, upstart social media sites, bloggers and podcasters? This is a really important reason why you should care about net neutrality. The Internet, as it exists today, is an open forum for free speech and freedom of expression. Websites publishing both popular and unpopular viewpoints are treated equally in terms of how their data gets from servers to screens. If the FCC allows Internet service providers (ISPs) to charge extra money for access to Internet last-mile fast lanes, the playing field of free speech is no longer equal. Those with the money to pay for special treatment could broadcast their opinions more quickly and more smoothly than their opponents. Those without as many resources — activists, artists and political outsiders — could be relegated to the Internet slow lane.

_

If you’re lucky enough to live in a country that doesn’t regulate the information you access online, you probably take net neutrality for granted. You search the Web unrestricted by government censors, free to choose what information to believe or discard, and what websites and online services to patronize. In mainland China, citizens of the highly restrictive communist regime enjoy no such freedoms. This is what a heavily censored and closely monitored Internet looks like:

1. Chinese internet service providers (ISPs) block access to a long list of sites banned by the government.

2. Specific search terms are red flagged; type them into Google and you’ll be blocked from the search engine for 90 seconds.

3. Chinese ISPs are given lists of problematic keywords and ordered to take down pages that include those words.

4. The government and private companies employ 100,000 people to police the Internet and snitch on dissenters.

5. The government also pays people to post pro-government messages on social networks, blogs and message boards.

_

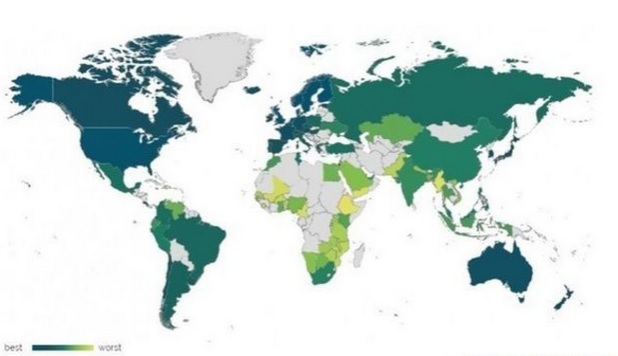

The unequal Web:

The figure above shows that richer countries rank highest for net access, freedom and openness. The web is becoming less free and more unequal, according to a report from the World Wide Web Foundation. Its annual web index suggests web users are at increasing risk of government surveillance, with laws preventing mass snooping weak or non-existent in over 84% of countries. It also indicates that online censorship is on the rise. The report led web inventor Sir Tim Berners-Lee to call for net access to be recognised as a human right. That means guaranteeing affordable access for all, ensuring internet packets are delivered without commercial or political discrimination, and protecting the privacy and freedom of web users regardless of where they live.

_

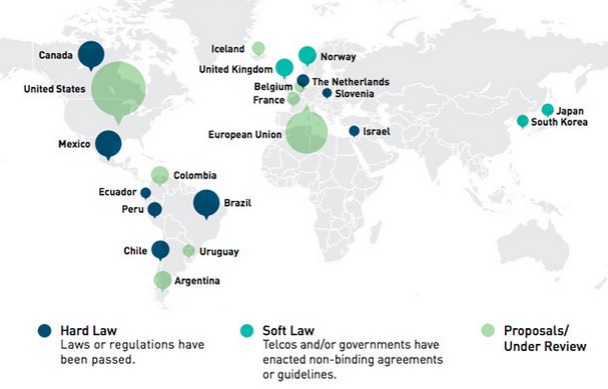

Net neutrality worldwide:

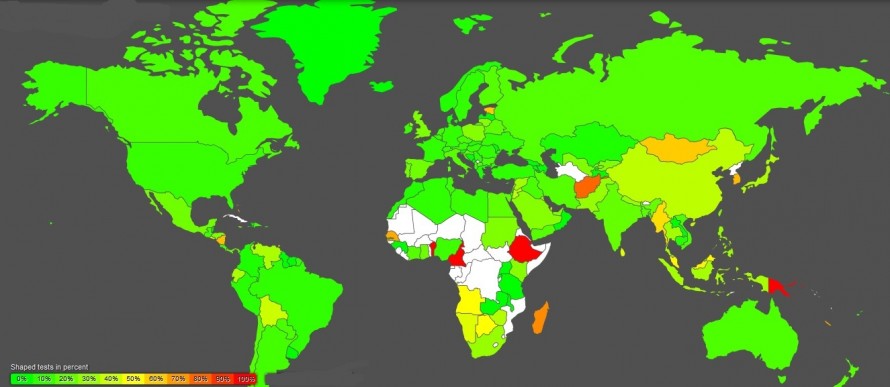

This map shows data from Glasnost, one of the Measurement Lab tools for examining your internet connection. The authors map the percentage of tests in which violations of net neutrality were discovered worldwide. The data covers the period from 2012-12-26 00:02:11 to 2013-12-22 23:59:19.

____________

____________

Outline of computer, internet, bits, bytes, speed, packets and internet protocol:

Computer is defined as a programmable machine that computes (stores, processes and retrieves) information (data) according to a set of instructions (program). Computer processes data in numerical form and its digital electronic circuits perform mathematical operations using Binary System. Binary system means using only two digits for arithmetic processing, namely, 0 and 1 known as bits (binary digits).

0 means absence of current/voltage in electronic circuit = off

1 means presence of current/voltage in electronic circuit = on

A series of 8 consecutive bits is known as a byte which permits 256 different on/off combinations.

_

Computers see everything in terms of binary. In binary systems, everything is described using two values or states: on or off, true or false, yes or no, 1 or 0. A light switch could be regarded as a binary system, since it is always either on or off. As complex as they may seem, on a conceptual level computers are nothing more than boxes full of millions of “light switches.” Each of the switches in a computer is called a bit, short for binary digit. A computer can turn each bit either on or off. Your computer likes to describe on as 1 and off as 0. By itself, a single bit is kind of useless, as it can only represent one of two things. By arranging bits in groups, the computer is able to describe more complex ideas than just on or off. The most common arrangement of bits in a group is called a byte, which is a group of eight bits.
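The "light switch" picture above can be tried out in a few lines of Python. This is only a small illustrative sketch; the function names `combinations` and `byte_as_switches` are made up for this example and are not part of any standard library.

```python
def combinations(n_bits: int) -> int:
    """Number of distinct on/off patterns that n_bits switches can form."""
    return 2 ** n_bits


def byte_as_switches(value: int) -> str:
    """Show one byte as a row of eight switches (1 = on, 0 = off)."""
    if not 0 <= value <= 255:
        raise ValueError("a byte holds values from 0 to 255")
    return format(value, "08b")


if __name__ == "__main__":
    print(combinations(1))             # a single bit: 2 states
    print(combinations(8))             # one byte: 256 states
    # In ASCII the letter "A" is stored as the number 65.
    print(byte_as_switches(ord("A")))  # 01000001
```

Eight switches give 2 × 2 × 2 × 2 × 2 × 2 × 2 × 2 = 256 patterns, which is why a byte can hold any value from 0 to 255.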

_

The Internet is defined as a global communication system of data connectivity between computers using transmission control protocol (TCP) and internet protocol (IP) to serve billions of users in the world. The Internet is the greatest invention in communication, breaking barriers of age/distance/language/religion/race/region and making the world a better place to live in. If you do not have internet access in the 21st century, you are illiterate. The Internet scores over other media due to its openness and neutrality. Every school must teach the basics of computers and the internet to students.

Data transfer rate (speed) of internet is usually in bits per second.

1000 bits per second = 1 kilobit per second (Kbps)

1000000 bits per second = 1 megabit per second (Mbps) = 1000 Kbps

Broadband means download internet speed of more than 4 Mbps and upload internet speed of more than 1 Mbps. Newer technology with fiber-optic cables can give internet speed of 100 Mbps.

Data sent over a wireless link (through the air) travels at the speed of radio waves, that is, the speed of light, which is about 300,000 kilometers per second. Data sent over a wired network travels as an electrical or optical signal, which also moves at close to the speed of light. Please do not confuse the speed at which data travels (near the speed of light) with internet speed, i.e. the data transfer rate in Kbps or Mbps. The data transfer rate is the number of bits that can be put onto the link each second once the digital data has been converted into radio waves or electrical signals; it is not the speed at which those signals move through the air or wires. Data transfer rate and data travel speed are different. The term latency describes the amount of time taken by packets to travel from source to destination. Since the speed of light is constant and cannot be exceeded, latency over a given distance depends mainly on the time packets spend passing through routers (queuing) and other hardware/software.
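To make the difference between data transfer rate and data travel speed concrete, here is a rough back-of-the-envelope sketch in Python. The function names and the example figures (a 5 MB file, a 3,000 km path) are assumptions chosen purely for illustration, and the calculation ignores queuing in routers and protocol overhead.

```python
def transmission_time_s(size_bytes: int, rate_mbps: float) -> float:
    """Seconds needed to push a file onto a link of the given rate.

    Uses 1 byte = 8 bits and 1 Mbps = 1,000,000 bits per second.
    """
    bits = size_bytes * 8
    return bits / (rate_mbps * 1_000_000)


def propagation_delay_ms(distance_km: float,
                         speed_km_per_s: float = 300_000) -> float:
    """Milliseconds a signal needs to cover the distance.

    The default speed is the speed of light; signals in real cables
    are somewhat slower, so treat the result as a lower bound.
    """
    return distance_km / speed_km_per_s * 1000


if __name__ == "__main__":
    file_size = 5_000_000  # a hypothetical 5 MB file
    print(transmission_time_s(file_size, 4))    # 10.0 seconds at 4 Mbps
    print(transmission_time_s(file_size, 100))  # 0.4 seconds at 100 Mbps
    print(propagation_delay_ms(3000))           # about 10 ms over 3,000 km
```

A faster connection shortens the first number (how long it takes to send all the bits) but does nothing to the second (how long each bit needs to cover the distance).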

_

IP address:

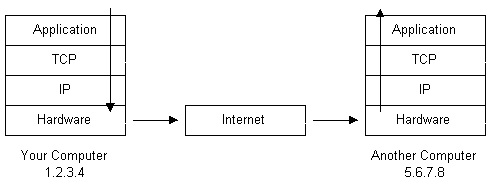

The picture below illustrates two computers connected to the Internet; your computer with IP address 1.2.3.4 and another computer with IP address 5.6.7.8. The Internet is represented as an abstract object in-between.

_

An Internet Protocol address (IP address) is a numerical label assigned to each device (e.g., computer, printer) participating in a computer network that uses the Internet Protocol for communication. An IP address serves two principal functions: host or network interface identification and location addressing. A name indicates what we seek. An address indicates where it is. A route indicates how to get there. The designers of the Internet Protocol defined an IP address as a 32-bit number and this system, known as Internet Protocol Version 4 (IPv4), is still in use today. However, because of the growth of the Internet and the predicted depletion of available addresses, a new version of IP (IPv6), using 128 bits for the address, was developed in 1995. IP addresses are usually written and displayed in human-readable notations, such as 172.16.254.1 (IPv4), and 2001:db8:0:1234:0:567:8:1 (IPv6). Each version defines an IP address differently. Because of its prevalence, the generic term IP address typically still refers to the addresses defined by IPv4. IPv4 addresses are canonically represented in dot-decimal notation, which consists of four decimal numbers, each ranging from 0 to 255, separated by dots, e.g., 172.16.254.1. Each part represents a group of 8 bits (octet) of the address. In some cases of technical writing, IPv4 addresses may be presented in various hexadecimal, octal, or binary representations. A 32-bit address allows about 4.3 billion IPv4 addresses. The class-based, legacy addressing scheme places heavy restrictions on the distribution of these addresses. TCP/IP networks are inherently router-based, and it takes much less overhead to keep track of a few networks than millions of them. The rapid exhaustion of IPv4 address space, despite conservation techniques, prompted the Internet Engineering Task Force (IETF) to explore new technologies to expand the addressing capability in the Internet. 
The permanent solution was deemed to be a redesign of the Internet Protocol itself. This new generation of the Internet Protocol, intended to replace IPv4 on the Internet, was eventually named Internet Protocol Version 6 (IPv6) in 1995. The address size was increased from 32 to 128 bits or 16 octets. This, even with a generous assignment of network blocks, is deemed sufficient for the foreseeable future. Mathematically, the new address space provides the potential for a maximum of 2^128, or about 3.403×10^38, addresses. The Domain Name System (DNS) converts domain names to IP addresses so that users only need to specify a domain name to access a computer on the Internet instead of typing the numeric IP address. DNS servers maintain a database containing domain names mapped to their corresponding IP addresses.
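The dot-decimal notation and the address-space sizes described above can be checked with Python's standard `ipaddress` module. The sketch below is illustrative only; the helper names `ipv4_to_int` and `ipv4_to_bits` and the constants are made up for this example.

```python
import ipaddress


def ipv4_to_int(dotted: str) -> int:
    """Return the single 32-bit number behind a dot-decimal IPv4 address."""
    return int(ipaddress.IPv4Address(dotted))


def ipv4_to_bits(dotted: str) -> str:
    """Show each of the four octets as 8 binary digits."""
    return ".".join(format(int(octet), "08b") for octet in dotted.split("."))


IPV4_SPACE = 2 ** 32    # number of possible 32-bit addresses
IPV6_SPACE = 2 ** 128   # number of possible 128-bit addresses

if __name__ == "__main__":
    print(ipv4_to_int("172.16.254.1"))   # 2886794753
    print(ipv4_to_bits("172.16.254.1"))  # 10101100.00010000.11111110.00000001
    print(IPV4_SPACE)                    # 4294967296, about 4.3 billion
    print(f"{IPV6_SPACE:.3e}")           # 3.403e+38
```

The example address 172.16.254.1 is the one used in the text; any valid IPv4 address can be substituted.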

_

IP address assignment:

Internet Protocol addresses are assigned to a host either anew at the time of booting, or permanently by fixed configuration of its hardware or software. Persistent configuration is also known as using a static IP address. In contrast, in situations when the computer’s IP address is assigned newly each time, this is known as using a dynamic IP address. An Internet Service Provider (ISP) will generally assign either a static IP address (always the same) or a dynamic address (changes every time one logs on). If you connect to the Internet from a local area network (LAN) your computer might have a permanent IP address or it might obtain a temporary one from a DHCP (Dynamic Host Configuration Protocol) server. In any case, if you are connected to the Internet, your computer has a unique IP address.

_

Packets and protocols:

When a file is sent from one computer to another, it is broken into small pieces called packets. A typical packet contains perhaps 1,000 or 1,500 bytes. It turns out that everything you do on the Internet involves packets. For example, every Web page that you receive comes as a series of packets, and every e-mail you send leaves as a series of packets. The packets are labelled individually with origin, destination and place in the original file. The packets are sent sequentially over the network. Each packet carries the information that will help it get to its destination: the sender’s IP address, the intended receiver’s IP address, something that tells the network how many packets this e-mail message has been broken into, and the number of this particular packet. When a packet arrives at a router, the router looks at the packet to see where it needs to go. Routers determine where to send information travelling from one computer to another. They are specialized computers that send your messages and those of every other Internet user speeding to their destinations along thousands of pathways. The packets carry the data in the protocols that the Internet uses: Transmission Control Protocol/Internet Protocol (TCP/IP). Using “pure” IP, a computer first breaks down the message to be sent into small packets, each labelled with the address of the destination machine; the computer then passes those packets along to the next connected Internet machine (router), which looks at the destination address and then passes them along to the next connected internet machine, which looks at the destination address and passes them along, and so forth, until the packets (we hope) reach the destination machine. IP is thus a “best efforts” communication service, meaning that it does its best to deliver the sender’s packets to the intended destination, but it cannot make any guarantees. 
If, for some reason, one of the intermediate computers “drops” (i.e., deletes) some of the packets, the dropped packets will not reach the destination and the sending computer will not know whether or why they were dropped. By itself, IP can’t ensure that the packets arrived in the correct order, or even that they arrived at all. That’s the job of another protocol: TCP (Transmission Control Protocol). TCP sits “on top” of IP and ensures that all the packets sent from one machine to another are received and assembled in the correct order. Should any of the packets get dropped during transmission, the destination machine uses TCP to request that the sending machine resend the lost packets, and to acknowledge them when they arrive. TCP’s job is to make sure that transmissions get received in full, and to notify the sender that everything arrived OK. Each packet is sent off to its destination by the best available route — a route that might be taken by all the other packets in the message or by none of the other packets in the message. This makes the network more efficient. First, the network can balance the load across various pieces of equipment on a millisecond-by-millisecond basis. Second, if there is a problem with one piece of equipment in the network while a message is being transferred, packets can be routed around the problem, ensuring the delivery of the entire message. Packets don’t necessarily all take the same path — they’ll generally travel the path of least resistance. That’s an important feature. Because packets can travel multiple paths to get to their destination, it’s possible for information to route around congested areas on the Internet. In fact, as long as some connections remain, entire sections of the Internet could go down and information could still travel from one section to another — though it might take longer than normal. When the packets get to you, your device arranges them according to the rules of the protocols. 
It’s kind of like putting together a jigsaw puzzle. When you send an e-mail, it gets broken into packets before zooming across the Internet. Phone calls over the Internet also convert conversations into packets using the Voice over Internet protocol (VoIP).
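The jigsaw-puzzle idea can be imitated with a toy Python sketch. This is not how real TCP/IP is implemented; it only mimics the steps described above: split a message into numbered packets, let them arrive in any order, and put them back together. The dictionary fields (`src`, `dst`, `seq`, `total`, `payload`) and the function names are assumptions made up for this example.

```python
import random


def packetize(message: bytes, src: str, dst: str,
              payload_size: int = 1000) -> list[dict]:
    """Split a message into labelled packets of at most payload_size bytes."""
    chunks = [message[i:i + payload_size]
              for i in range(0, len(message), payload_size)]
    return [
        {"src": src, "dst": dst, "seq": n, "total": len(chunks), "payload": chunk}
        for n, chunk in enumerate(chunks)
    ]


def reassemble(packets: list[dict]) -> bytes:
    """Rebuild the message from packets that may arrive in any order."""
    if not packets:
        return b""
    total = packets[0]["total"]
    by_seq = {p["seq"]: p["payload"] for p in packets}
    missing = [n for n in range(total) if n not in by_seq]
    if missing:
        # A TCP-like layer would ask the sender to resend these packets.
        raise ValueError(f"missing packets: {missing}")
    return b"".join(by_seq[n] for n in range(total))


if __name__ == "__main__":
    message = b"Hello, Internet! " * 200           # 3,400 bytes
    packets = packetize(message, "1.2.3.4", "5.6.7.8")
    random.shuffle(packets)                        # packets take different routes
    print(len(packets))                            # 4
    print(reassemble(packets) == message)          # True
```

The addresses 1.2.3.4 and 5.6.7.8 are the two example computers used earlier in this post.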

_

Many things can happen to packets as they travel from origin to destination, resulting in the following problems as seen from the point of view of the sender and receiver:

Low throughput:

Due to varying load from disparate users sharing the same network resources, the bit rate (the maximum throughput) that can be provided to a certain data stream may be too low for realtime multimedia services if all data streams get the same scheduling priority.

Dropped packets:

The routers might fail to deliver (drop) some packets if their payloads are corrupted, or if the packets arrive when the router buffers are already full. The receiving application may ask for this information to be retransmitted, possibly causing severe delays in the overall transmission.

Errors:

Sometimes packets are corrupted due to bit errors caused by noise and interference, especially in wireless communications and long copper wires. The receiver has to detect this and, just as if the packet was dropped, may ask for this information to be retransmitted.

Latency:

Latency is defined as the time it takes for a source to send a packet of data to a receiver. Latency is typically measured in milliseconds. The lower the latency (the fewer the milliseconds), the better the network performance. It might take a long time for each packet to reach its destination, because it gets held up in long queues, or it takes a less direct route to avoid congestion. This is different from throughput, as the delay can build up over time, even if the throughput is almost normal. In some cases, excessive latency can render an application such as VoIP or online gaming unusable. Ideally latency is as close to zero as possible.

Jitter:

Packets from the source will reach the destination with different delays. A packet’s delay varies with its position in the queues of the routers along the path between source and destination and this position can vary unpredictably. This variation in delay is known as jitter and can seriously affect the quality of streaming audio and/or video.

Out-of-order delivery:

When a collection of related packets is routed through a network, different packets may take different routes, each resulting in a different delay. The result is that the packets arrive in a different order than they were sent. This problem requires special additional protocols responsible for rearranging out-of-order packets to an isochronous state once they reach their destination. This is especially important for video and VoIP streams where quality is dramatically affected by both latency and lack of sequence.
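The problems listed above can all be measured from the same raw data: when each packet was sent and when (or whether) it arrived. The sketch below is one simple way to do it, assuming timestamps in milliseconds and defining jitter as the average change in delay between consecutive arrivals; real measurement tools use more refined definitions, and the name `stream_metrics` is made up for this example.

```python
from statistics import mean

def stream_metrics(sent: dict[int, float], received: list[tuple[int, float]]) -> dict:
    """sent maps sequence number -> send time (ms);
    received lists (sequence number, arrival time in ms) in arrival order."""
    delays = [arrival - sent[seq] for seq, arrival in received]
    # Jitter (simplified): mean absolute change in delay between consecutive arrivals.
    changes = [abs(b - a) for a, b in zip(delays, delays[1:])]
    order = [seq for seq, _ in received]
    return {
        "latency_ms": mean(delays),
        "jitter_ms": mean(changes) if changes else 0.0,
        "loss_ratio": 1 - len(received) / len(sent),
        # Count arrivals whose sequence number is lower than the previous arrival's.
        "out_of_order": sum(1 for a, b in zip(order, order[1:]) if b < a),
    }

# Five packets sent 20 ms apart; packet 4 is lost and packet 2 arrives after packet 3.
sent = {0: 0, 1: 20, 2: 40, 3: 60, 4: 80}
received = [(0, 50), (1, 75), (3, 105), (2, 120)]
metrics = stream_metrics(sent, received)
```

In this made-up trace the individual delays are 50, 55, 45 and 80 ms, so the average latency is 57.5 ms, one packet in five is lost, and one arrival is out of order.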

_

Protocols:

At their most basic level, protocols establish the rules for how information passes through the Internet. Protocols are to computers what language is to humans. Since this article is in English, to understand it you must be able to read English. Similarly, for two devices on a network to successfully communicate, they must both understand the same protocols. Without these rules, you would need direct connections to other computers to access the information they hold. You’d also need both your computer and the target computer to understand a common language. When you want to send a message or retrieve information from another computer, the TCP/IP protocols are what make the transmission possible. You’ve probably heard of several protocols on the Internet. For example, hypertext transfer protocol (HTTP) is what we use to view Web sites through a browser — that’s what the http at the front of any Web address stands for. If you’ve ever used an FTP server, you relied on the file transfer protocol. Protocols like these and dozens more create the framework within which all devices must operate to be part of the Internet.

_

Protocol Stacks:

So your computer is connected to the Internet and has a unique address. How does it ‘talk’ to other computers connected to the Internet? An example should serve here: Let’s say your IP address is 1.2.3.4 and you want to send a message to the computer 5.6.7.8. The message you want to send is “Hello computer 5.6.7.8!” Obviously, the message must be transmitted over whatever kind of wire connects your computer to the Internet. Let’s say you’ve dialled into your ISP from home and the message must be transmitted over the phone line. Therefore the message must be translated from alphabetic text into electronic signals, transmitted over the Internet, then translated back into alphabetic text. How is this accomplished? Through the use of a protocol stack. Every computer needs one to communicate on the Internet and it is usually built into the computer’s operating system (e.g. Windows, Unix, etc.). The protocol stack used on the Internet is referred to as the TCP/IP protocol stack because of the two major communication protocols used. The TCP/IP stack looks like this:

| Protocol Layer | Comments |
| --- | --- |
| Application Protocols Layer | Protocols specific to applications such as WWW, e-mail, FTP, etc. |
| Transmission Control Protocol Layer | TCP directs packets to a specific application on a computer using a port number. |
| Internet Protocol Layer | IP directs packets to a specific computer using an IP address. |
| Hardware Layer | Converts binary packet data to network signals and back. (E.g. Ethernet network card, modem for phone lines, etc.) |
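The layering in the table can be sketched in a few lines: each layer wraps what it receives from the layer above with its own header, and the receiving side unwraps in reverse order. This is only a toy model. Real TCP and IP headers are compact binary structures, not JSON, and the helper names (`wrap`, `unwrap`, `send`, `receive`) and the port number 80 are assumptions made for the example.

```python
import json

def wrap(header: dict, payload: str) -> str:
    """Put a header in front of a payload (toy stand-in for a real binary header)."""
    return json.dumps({"header": header, "payload": payload})

def unwrap(unit: str) -> tuple[dict, str]:
    """Split a wrapped unit back into its header and payload."""
    data = json.loads(unit)
    return data["header"], data["payload"]

def send(message: str, src_ip: str, dst_ip: str, dst_port: int) -> str:
    segment = wrap({"layer": "TCP", "dst_port": dst_port}, message)      # TCP layer: port number
    packet = wrap({"layer": "IP", "src": src_ip, "dst": dst_ip}, segment)  # IP layer: addresses
    # Hardware layer: turn the packet into a stream of 0s and 1s.
    return "".join(f"{byte:08b}" for byte in packet.encode("utf-8"))

def receive(bits: str) -> tuple[dict, dict, str]:
    # Hardware layer: turn the signal back into bytes.
    raw = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    ip_header, segment = unwrap(raw.decode("utf-8"))   # IP layer removes its header
    tcp_header, message = unwrap(segment)              # TCP layer removes its header
    return ip_header, tcp_header, message

bits = send("Hello computer 5.6.7.8!", "1.2.3.4", "5.6.7.8", dst_port=80)
ip_header, tcp_header, message = receive(bits)
```

Note that the IP layer never looks inside the segment it carries, and the hardware layer sees nothing but bits.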

_

If we were to follow the path that the message “Hello computer 5.6.7.8!” took from our computer to the computer with IP address 5.6.7.8, it would happen something like this:

_

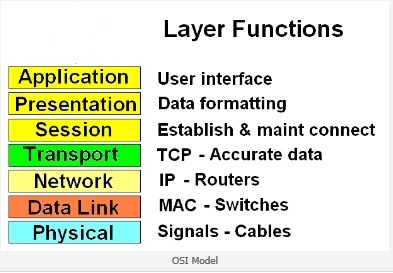

Internet layers/protocol layers:

The internet layer is a group of internetworking methods, protocols, and specifications in the Internet protocol suite that are used to transport datagrams (packets) from the originating host across network boundaries to the destination host specified by a network address (IP address) which is defined for this purpose by the Internet Protocol (IP). A common design aspect in the internet layer is the robustness principle: ‘Be liberal in what you accept, and conservative in what you send’ as a misbehaving host can deny Internet service to many other users. The internet layer of the TCP/IP model is often compared directly with the network layer (layer 3) in the Open Systems Interconnection (OSI) protocol stack. OSI’s network layer is a catch-all layer for all protocols that facilitate network functionality. The internet layer, on the other hand, is specifically a suite of protocols that facilitate internetworking using the Internet Protocol. Protocol layers exist to reduce design complexity and improve portability and support for change. Networks are organised as series of layers or levels each built on the one below. The purpose of each layer is to offer services required by higher levels and to shield higher layers from the implementation details of lower layers.

_

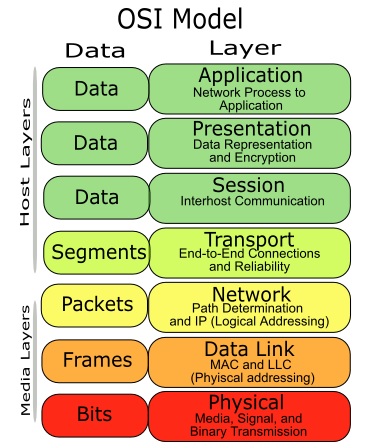

OSI Reference Model:

_

OSI consists of 7 layers of protocols, i.e., of 7 different areas in which the protocols operate. In principle, the areas are distinct and of increasing generality; in practice, the boundaries between the layers are not always sharp. The model draws a clear distinction between a service, something that an application program or a higher-level protocol uses, and the protocols themselves, which are sets of rules for providing services.

_

_

The OSI Model was developed to help provide a better understanding of how a network operates. The better you understand the model, the better you will understand networking. It is composed of seven layers. Each layer is unique and supports the creation and control of data packets. The layers start with the Physical layer and end with the Application layer. The first three layers relate to network equipment. For example, switches are layer 2 devices and routers are layer 3 devices.

1. The first layer is the Physical layer and is where the data is either put onto the media or taken off the media. The media could be the network cable or wireless. The PDU (protocol data unit) at this layer is the Bit. These bits can be voltage levels that represent binary numbers of 1 or 0. They could also be light pulses traveling on a fiber optic cable or radio wave pulses for a wireless network.

2. The second layer is the Data Link layer and is where framing of the data takes place. The Frame is the PDU name at this layer. The MAC (media access control) physical address is added or removed depending on which direction the data is traveling. The MAC address is used by switches to switch the data to the appropriate computer or node that it is intended for in a LAN (local area network).

3. The third layer is the Network layer and is where the IP (internet protocol) address is added or removed and the PDU at this layer is called a Packet. Routers operate at this level and use the IP (logical address) to route the data to the appropriate network. Network locations are found by the routers using routing tables to locate the appropriate networks.

4. The fourth layer is the Transport layer and is where the data is segmented (broken into pieces) and handled by TCP to ensure that data is transferred accurately and reliably. The data segments are numbered so that proper sequencing can be determined on the receiving side in order to rebuild accurate files. The PDU at this layer is called a Segment.

5. The fifth layer is the Session layer and is where the session is created, maintained, and torn down when finished.

6. The sixth layer is the Presentation layer and is where the data is formatted or decrypted into files that the user can understand.

7. The seventh layer is the Application layer and is the user interface to the network where that data is either being generated or received.
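The seven layers and their data units can be kept in a small lookup table, which is handy when reasoning about what a given device actually "sees". The sketch below records only what the list above states; the list does not name a PDU for layers 5 to 7, so those are left as `None` here rather than guessed. The names `Layer`, `OSI`, `DEVICE_LAYER` and `pdu_seen_by` are made up for this example.

```python
from typing import NamedTuple, Optional

class Layer(NamedTuple):
    number: int
    name: str
    pdu: Optional[str]   # protocol data unit, None where the text above names none

OSI = [
    Layer(1, "Physical", "Bits"),
    Layer(2, "Data Link", "Frame"),
    Layer(3, "Network", "Packet"),
    Layer(4, "Transport", "Segment"),
    Layer(5, "Session", None),
    Layer(6, "Presentation", None),
    Layer(7, "Application", None),
]

# From the text: switches are layer 2 devices and routers are layer 3 devices.
DEVICE_LAYER = {"switch": 2, "router": 3}

def pdu_seen_by(device: str) -> Optional[str]:
    """Return the data unit a device works with, based on the layer it operates at."""
    return OSI[DEVICE_LAYER[device] - 1].pdu

switch_unit = pdu_seen_by("switch")   # "Frame"
router_unit = pdu_seen_by("router")   # "Packet"
```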

_

_

The Internet, like any other computer network, is defined in terms of layers; these are the often-referenced “OSI Layers”. This division into layers is a logical (rather than physical) one; the data traversing the network is eventually one long series of bits: 0’s and 1’s. Such “layers” are how we address the representation of those many bits and their grouping into clusters of bits that have meaning. The different network layers are different levels of interpretation of this large set of bits moving along the wire. Understanding the same raw traffic at different layers allows us to bridge the semantic gap between a bunch of 0’s and 1’s and an e-mail being sent or a web site being browsed. After all, all emails and browsing sessions end up as 0’s and 1’s on a wire. Processing those sequences of bits at different layers of abstraction is what makes the network as versatile as it is and technically manageable. In a nutshell, Internet traffic is interpreted at seven layers, where each layer introduces meaningful data objects and uses the underlying layer to transfer these objects. Each of the many components of the Internet (applications sending and receiving data, routers, modems, and wires) knows how to process data at its own layer and need not be aware of what the data represents at higher layers or of how data is processed by the lower layers.

_

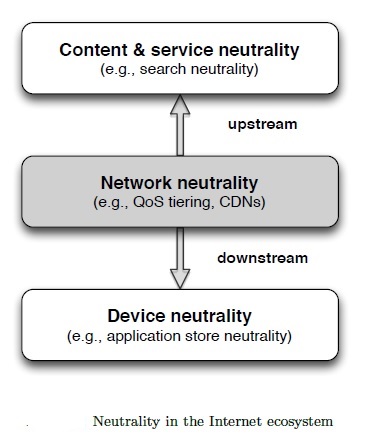

Understanding the layered architecture of the Internet allows us to define net neutrality:

Network neutrality is the adherence to the paradigm that operation at a certain layer, by a network component (or provider) that is chartered for operating at that layer, is not influenced by interpretation of the processed data at higher layers. So network neutrality is an intended feature of the Internet. A component operating at a certain layer is not required to understand the data it processes at higher layers. The network card operating at Layer 2 does not need to know that it is sending an e-mail message (Layer 7). It only needs to know that it is sending a frame (Layer 2) with a certain opaque payload. Net neutrality is thus built into the Internet. When expanding the notion of net neutrality from the purely technical domain to the service domain, we can define network neutrality as the adherence to the paradigm that operation of a service at a certain layer is not influenced by any data other than the data interpreted at that layer, and in accordance with the protocol specification for that layer. Therefore, a service provider is said to operate in net neutrality if it provides the service in a way that is strictly “by the book”, where “the book” is the specification of the network protocol it implements as its service. Its operation is network-neutral if it is not impacted by any logic other than that of implementing the network layer protocol that it is chartered to implement.
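This layered definition can be illustrated with a toy Layer 2 forwarder. A neutral forwarder decides using only the Layer 2 header and treats the payload as opaque; a non-neutral one peeks into the payload and changes its behaviour. Everything here (the `Frame` class, the two-entry `PORTS` table, the check for the word "video") is a hypothetical illustration and not a description of any real equipment.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Frame:
    dst_mac: str     # Layer 2 header field
    payload: bytes   # higher-layer data, opaque to Layer 2

# Hypothetical forwarding table: destination MAC address -> output port.
PORTS = {"aa:aa": 1, "bb:bb": 2}

def neutral_forward(frame: Frame) -> int:
    """Decide using only the Layer 2 header; the payload is never inspected."""
    return PORTS[frame.dst_mac]

def non_neutral_forward(frame: Frame) -> Optional[int]:
    """Inspect higher-layer content and treat some of it differently (here: drop it)."""
    if b"video" in frame.payload:
        return None
    return PORTS[frame.dst_mac]

mail = Frame("aa:aa", b"e-mail: see you at noon")
video = Frame("aa:aa", b"video: chunk 17")

neutral_result = (neutral_forward(mail), neutral_forward(video))
non_neutral_result = (non_neutral_forward(mail), non_neutral_forward(video))
```

Both frames have the same destination, so the neutral forwarder sends both out of port 1. The non-neutral forwarder gives a different answer for the second frame purely because of what it is carrying.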

_

Router:

So how do packets find their way across the Internet? Does every computer connected to the Internet know where the other computers are? Do packets simply get ‘broadcast’ to every computer on the Internet? The answer to both the preceding questions is ‘no’. No computer knows where any of the other computers are, and packets do not get sent to every computer. The information used to get packets to their destinations is contained in routing tables kept by each router connected to the Internet. Routers are packet switches. A router is usually connected between networks to route packets between them. Each router knows about its sub-networks and which IP addresses they use. The router usually doesn’t know what IP addresses are ‘above’ it. When a packet arrives at a router, the router examines the IP address put there by the IP protocol layer on the originating computer. The router checks its routing table. If the network containing the IP address is found, the packet is sent to that network. If the network containing the IP address is not found, then the router sends the packet on a default route, usually up the backbone hierarchy to the next router. Hopefully the next router will know where to send the packet. If it does not, again the packet is routed upwards until it reaches an NSP (network service provider) backbone. The routers connected to the NSP backbones hold the largest routing tables and here the packet will be routed to the correct backbone, where it will begin its journey ‘downward’ through smaller and smaller networks until it finds its destination.
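The lookup-then-default behaviour described above can be sketched with Python's standard `ipaddress` module. The router names, network prefixes and next-hop labels are invented for the example, and picking the most specific matching network (longest prefix) is an assumption that reflects common practice rather than something stated in the text.

```python
import ipaddress
from typing import Optional

class Router:
    def __init__(self, name: str, routes: dict[str, str], default: Optional[str] = None):
        self.name = name
        # Routing table: known network -> where to send packets for that network.
        self.routes = [(ipaddress.ip_network(net), hop) for net, hop in routes.items()]
        self.default = default   # where to send everything else ("upwards")

    def next_hop(self, destination: str) -> Optional[str]:
        address = ipaddress.ip_address(destination)
        matches = [(net.prefixlen, hop) for net, hop in self.routes if address in net]
        if matches:
            # Assumption: prefer the most specific (longest-prefix) matching network.
            return max(matches, key=lambda match: match[0])[1]
        return self.default

# Hypothetical two-level hierarchy: a home router below an ISP router.
home = Router("home", {"1.2.3.0/24": "local-lan"}, default="isp-router")
isp = Router("isp", {"1.2.0.0/16": "customer-side", "5.6.0.0/16": "backbone-link"},
             default="nsp-backbone")

hop_from_home = home.next_hop("5.6.7.8")   # not in the home table, so it goes up
hop_from_isp = isp.next_hop("5.6.7.8")     # the ISP router knows this network
hop_local = home.next_hop("1.2.3.99")      # stays on the local network
```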

_

Modem vs. router:

A router is a device that forwards data packets along networks. A router is connected to at least two networks, commonly two LANs or WANs or a LAN and its ISP’s network. Routers are located at gateways, the places where two or more networks connect. While connecting to a router provides access to a local area network (LAN), it does not necessarily provide access to the Internet. In order for devices on the network to connect to the Internet, the router must be connected to a modem. While the router and modem are usually separate entities, in some cases, the modem and router may be combined into a single device. This type of hybrid device is sometimes offered by ISPs to simplify the setup process.

_

Modem:

A modem (modulator-demodulator) is a device that modulates signals to encode digital information and demodulates signals to decode the transmitted information. The goal is to produce a signal that can be transmitted easily and decoded to reproduce the original digital data. A modem is a device that provides access to the Internet. The modem connects to your ISP. Modems can be used with any means of transmitting analog signals, from light emitting diodes to radio. A common type of modem is one that turns the digital data of a computer into a modulated electrical signal for transmission over telephone lines, which is then demodulated by another modem at the receiver side to recover the digital data. Modems which use a mobile telephone system (GPRS, UMTS, HSPA, EVDO, WiMax, etc.) are known as mobile broadband modems (sometimes also called wireless modems). Wireless modems can be embedded inside a laptop or appliance, or be external to it. External wireless modems include connect cards, USB modems for mobile broadband and cellular routers. A connect card is a PC Card or ExpressCard which slides into a PCMCIA/PC card/ExpressCard slot on a computer. USB wireless modems use a USB port on the laptop instead of a PC card or ExpressCard slot. A USB modem used for mobile broadband Internet is also sometimes referred to as a dongle. A cellular router may have an external datacard (AirCard) that slides into it. Most cellular routers do allow such datacards or USB modems. Cellular routers may not be modems by definition, but they contain modems or allow modems to be slid into them. The difference between a cellular router and a wireless modem is that a cellular router normally allows multiple people to connect to it (since it can route data or support multipoint to multipoint connections), while a modem is designed for one connection.
By connecting your modem to your router (instead of directly to a computer), all devices connected to the router can access the modem, and therefore, the Internet. The router provides a local IP address to each connected device, but they will all have the same external IP address, which is assigned by your ISP.
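How can several devices share one external IP address? The usual answer is that the router keeps a translation table and rewrites addresses as traffic passes through. The sketch below is a simplified model of that idea, with invented names (`HomeRouter`, `join`, `outbound`, `inbound`), an address range commonly used on home networks, and a placeholder external address; it is not the implementation used by any particular router.

```python
from typing import Optional

class HomeRouter:
    def __init__(self, external_ip: str, lan_prefix: str = "192.168.1."):
        self.external_ip = external_ip    # the single address assigned by the ISP
        self.lan_prefix = lan_prefix
        self.leases: dict[str, str] = {}  # device name -> local IP address
        self.table: dict[int, tuple[str, int]] = {}  # external port -> (local IP, local port)
        self._next_host = 2
        self._next_port = 40000

    def join(self, device: str) -> str:
        """Give a newly connected device its own local IP address."""
        local_ip = f"{self.lan_prefix}{self._next_host}"
        self._next_host += 1
        self.leases[device] = local_ip
        return local_ip

    def outbound(self, local_ip: str, local_port: int) -> tuple[str, int]:
        """Rewrite an outgoing connection so it appears to come from the external IP."""
        external_port = self._next_port
        self._next_port += 1
        self.table[external_port] = (local_ip, local_port)
        return self.external_ip, external_port

    def inbound(self, external_port: int) -> Optional[tuple[str, int]]:
        """Find which local device a reply arriving on this external port belongs to."""
        return self.table.get(external_port)

router = HomeRouter(external_ip="203.0.113.7")   # placeholder address for illustration
laptop_ip = router.join("laptop")
phone_ip = router.join("phone")

laptop_seen_as = router.outbound(laptop_ip, 51000)
phone_seen_as = router.outbound(phone_ip, 51000)
reply_goes_to = router.inbound(phone_seen_as[1])
```

From the outside, both devices appear under the same IP address and differ only in the port number the router picked for them.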

_

The figure below shows request path and return path of internet utilizing modem, router and DNS server:

_

In order to retrieve this article, your computer had to connect with the Web server containing the article’s file. We’ll use that as an example of how data travels across the Internet. First, you open your Web browser and connect to our Web site. When you do this, your computer sends an electronic request over your Internet connection to your Internet service provider (ISP). The ISP routes the request to a server further up the chain on the Internet. Eventually, the request will hit a Domain Name System (DNS) server. This server will look for a match for the domain name you’ve typed in (www.drrajivdesaimd.com). If it finds a match, it will return the IP address of the proper server so that your request can be directed there. If it doesn’t find a match, it will send the request further up the chain to a server that has more information. The request will eventually come to our Web server. Our server will respond by sending the requested file in a series of packets.
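The "look for a match, otherwise ask further up the chain" step can be sketched as a chain of name servers. This is a toy model: the `NameServer` class is invented for the example, the IP address is a placeholder (not the site's real address), and remembering answers that came from upstream is an added assumption that mirrors the caching real resolvers do.

```python
from typing import Optional

class NameServer:
    def __init__(self, records: dict[str, str], upstream: Optional["NameServer"] = None):
        self.records = dict(records)   # domain name -> IP address
        self.upstream = upstream       # a server "further up the chain", if any

    def resolve(self, name: str) -> Optional[str]:
        if name in self.records:
            return self.records[name]
        if self.upstream is None:
            return None                # nobody in the chain knows this name
        answer = self.upstream.resolve(name)
        if answer is not None:
            self.records[name] = answer   # assumption: remember the answer for next time
        return answer

# Placeholder record: 203.0.113.10 is a documentation address, not the real one.
top = NameServer({"www.drrajivdesaimd.com": "203.0.113.10"})
isp_dns = NameServer({}, upstream=top)

first_answer = isp_dns.resolve("www.drrajivdesaimd.com")   # answered from upstream
cached = "www.drrajivdesaimd.com" in isp_dns.records       # now known locally
unknown = isp_dns.resolve("no-such-site.example")
```

On a real machine the operating system does this for you; in Python the standard library call `socket.getaddrinfo` triggers an actual lookup.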

_

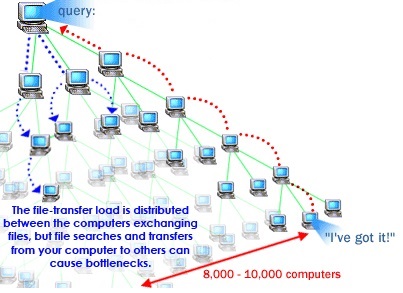

Peer to Peer file sharing:

_

_

Peer-to-peer file sharing is different from traditional file downloading. In peer-to-peer sharing, you use a software program (rather than your Web browser) to locate computers that have the file you want. Because these are ordinary computers like yours, as opposed to servers, they are called peers. The process works like this:

• You run peer-to-peer file-sharing software (for example, a Gnutella program) on your computer and send out a request for the file you want to download.

• To locate the file, the software queries other computers that are connected to the Internet and running the file-sharing software.

• When the software finds a computer that has the file you want on its hard drive, the download begins.

• Others using the file-sharing software can obtain files they want from your computer’s hard drive.

The file-transfer load is distributed between the computers exchanging files, but file searches and transfers from your computer to others can cause bottlenecks. Some people download files and immediately disconnect without allowing others to obtain files from their system, which is called leeching. This limits the number of computers the software can search for the requested file. As Peer-to-Peer (P2P) file exchange applications gain popularity, Internet service providers are faced with new challenges and opportunities to sustain and increase profitability from the broadband IP network. Unlike other P2P download methods, BitTorrent maximizes transfer speed by gathering pieces of the file you want and downloading these pieces simultaneously from people who already have them. This process makes popular and very large files, such as videos and television programs, download much faster than is possible with other protocols. Due to the unique and aggressive usage of network resources by Peer-to-Peer technologies, network usage patterns are changing and provisioned capacity is no longer sufficient. Extensive use of Peer-to-Peer file exchange causes network congestion and performance deterioration, and ultimately leads to customer dissatisfaction and churn.
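The idea of gathering pieces of a file from several people can be sketched as follows. The file is cut into fixed-size pieces, each piece has a known SHA-1 hash, and the downloader accepts a piece from whichever peer can supply a copy that matches the hash. This is a simplified illustration: the tiny piece size, the peer names and the `download` function are made up, and the loop fetches pieces one after another whereas a real client requests many at once.

```python
import hashlib

PIECE_SIZE = 4   # unrealistically small, just for illustration

def split_pieces(data: bytes, size: int = PIECE_SIZE) -> list[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]

def download(piece_hashes: list[str], peers: dict[str, dict[int, bytes]]):
    """peers maps a peer name to the pieces it holds: {piece index: piece bytes}."""
    pieces: list = [None] * len(piece_hashes)
    sources: dict[int, str] = {}
    for index, expected in enumerate(piece_hashes):
        for name, held in peers.items():
            piece = held.get(index)
            # Accept the piece only if its hash matches the published one.
            if piece is not None and hashlib.sha1(piece).hexdigest() == expected:
                pieces[index] = piece
                sources[index] = name
                break
    if any(piece is None for piece in pieces):
        raise LookupError("some pieces are not available from any peer")
    return b"".join(pieces), sources

original = b"popular video file"
all_pieces = split_pieces(original)
hashes = [hashlib.sha1(piece).hexdigest() for piece in all_pieces]

peers = {
    "alice": {i: p for i, p in enumerate(all_pieces) if i % 2 == 0},  # even pieces
    "bob": {i: p for i, p in enumerate(all_pieces) if i % 2 == 1},    # odd pieces
    "mallory": {0: b"junk"},                                          # corrupted copy
}
data, sources = download(hashes, peers)
```

No single peer holds the whole file here, yet the download completes, and the corrupted copy is rejected because its hash does not match.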

_

Note:

Please do not confuse peering with peer-to-peer file transfer. Peering is a direct connection between an ISP and a content provider (e.g. Google) that bypasses the internet backbone, while peer-to-peer is the sharing of files between client computers rather than downloading a file from a content provider. With peering, you get a file from the content provider at a faster speed, while with P2P, you get a file from another user’s computer at a faster speed. Peering is a violation of net neutrality by the ISP, while P2P is a violation of net neutrality by consumers.

_

End-to-end principle:

The principle states that, whenever possible, communications protocol operations should be defined to occur at the end-points of a communications system, or as close as possible to the resource being controlled. This leads to the model of a minimal dumb network with smart terminals, a completely different model from the previous paradigm of the smart network with dumb terminals. All of the intelligence is held by producers and users, not the networks that connect them. End-to-end design of the network entails that the intelligence would be exclusively located at the edges of the Internet (i.e. with end users), and not at the core (i.e. with networks). If the hosts need a mechanism to provide some functionality, then the network should not interfere or participate in that mechanism unless it absolutely has to. Or, more simply put, the network should mind its own business. If a network function can be implemented correctly and completely using the functionalities available on the end-hosts, that function should be implemented on the end-hosts without delegating any task to the network (i.e., intermediary nodes in between the end-hosts). Because the end-to-end principle is one of the central design principles of the Internet, and because the practical means for implementing data discrimination violate the end-to-end principle, the principle often enters discussions about net neutrality (NN). The end-to-end principle is closely related, and sometimes seen as a direct precursor to the principle of net neutrality.
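A small simulation shows what "dumb network, smart terminals" means in practice. The network below does nothing except deliver a packet or lose it; all the reliability logic (numbering, noticing a loss, sending again) lives in the end-host code. The class and function names, the 30 percent loss rate and the retry limit are assumptions chosen for the example.

```python
import random
from typing import Optional

class DumbNetwork:
    """Delivers a packet or silently loses it; knows nothing about the contents."""
    def __init__(self, loss_rate: float, seed: int):
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)

    def deliver(self, packet: tuple[int, str]) -> Optional[tuple[int, str]]:
        return None if self.rng.random() < self.loss_rate else packet

def reliable_send(network: DumbNetwork, chunks: list[str], max_tries: int = 50):
    """End-host logic: number each chunk and resend it until it gets through."""
    received: dict[int, str] = {}
    attempts = 0
    for seq, chunk in enumerate(chunks):
        for _ in range(max_tries):
            attempts += 1
            arrived = network.deliver((seq, chunk))
            if arrived is not None:          # stands in for the receiver's acknowledgement
                received[arrived[0]] = arrived[1]
                break
        else:
            raise TimeoutError(f"chunk {seq} never got through")
    return [received[i] for i in range(len(chunks))], attempts

chunks = ["the", "network", "should", "mind", "its", "own", "business"]
network = DumbNetwork(loss_rate=0.3, seed=1)
delivered, attempts = reliable_send(network, chunks)
```

The network never learns that retransmission is happening; it simply carries whatever the end-hosts hand it.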

___________

The Internet is a global, interconnected and decentralised autonomous computer network. We can access the Internet via connections provided by Internet access providers (ISPs). These access providers transmit the information that we send over the Internet in so-called data “packets”. The way in which data is sent and received on the Internet can be compared to sending the pages of a book by post in lots of different envelopes. The post office can send the pages by different routes and, when they are received, the envelopes can be removed and the pages put back together in the right order. When we connect to the Internet, each one of us becomes an endpoint in this global network, with the freedom to connect to any other endpoint, whether this is another person’s computer (“peer-to-peer”), a website, an e-mail system, a video stream or whatever.

The success of the Internet is based on two simple but crucial components of its architecture:

1. Every connected device can connect to every other connected device.

2. All services use the “Internet Protocol,” which is sufficiently flexible and simple to carry all types of content (video, e-mail, messaging etc.) unlike networks that are designed for just one purpose, such as the voice telephony system.

_

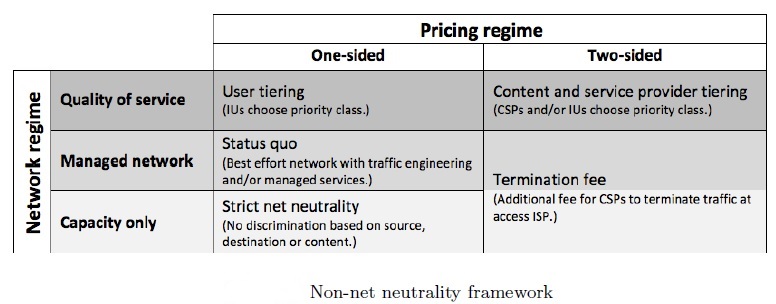

Technical internet:

Internet is the abbreviation of the term internetwork, which describes the connection between computer networks all around the world on the basis of the same set of communication protocols. At its start in the 1960s, the Internet was a closed research network between just a few universities, intended to transmit text messages. The architectural design of the Internet was guided by two fundamental design principles: messages are fragmented into data packets that are routed through the network autonomously (end-to-end principle) and as fast as possible (best-effort principle [BE]). This entails that intermediate nodes, so-called routers, do not differentiate packets based on their content or source. Rather, routers maintain routing tables in which they store the next node that lies on the supposedly shortest path to the packet’s destination address. However, as each router acts autonomously when deciding the path along which it sends a packet, no router has end-to-end control over the path along which the packet is sent from sender to receiver. Moreover, it is possible, even likely, that packets from the same message flow may take different routes through the network. Packets are stored in a router’s queue if they arrive at a faster rate than the rate at which the router can send out packets. If the router’s queue is full, the packet is deleted (dropped) and must be resent by the source node. Full router queues are the main reason for congestion on the Internet. However, no matter how important a data packet may be, routers would always process their queue according to the first-in-first-out principle. These fundamental principles always were (and remain in the context of the NN debate) key elements of the open Internet spirit. Essentially, they establish that all data packets sent to the network are treated equally and that no intermediate node can exercise control over the network as a whole.
This historic and romantic view of the Internet neglects that Quality of Service (QoS) has always been an issue for the network of networks. Over and beyond the sending of mere text messages, there is a desire for reliable transmission of information that is time critical (low latency), or for which it is desired that data packets are received at a steady rate and in a particular order (low jitter). Voice communication, for example, requires both low latency and low jitter. This desire for QoS was manifested in the architecture of the Internet as early as January 1, 1983, when the Internet was switched over to the Transmission Control Protocol / Internet Protocol (TCP/IP). In particular, the Internet protocol version 4 (IPv4), which has constituted the nuts and bolts of the Internet since then, already contains a type of service (TOS) field in its header by which routers could prioritize packets in their queues and thereby establish QoS. However, a general agreement on how to handle data with different TOS entries was never reached and thus the TOS field was not used accordingly. Consequently, in telecommunications engineering, research on new protocols and mechanisms to enable QoS in the Internet has flourished ever since, long before the NN debate came to life. In addition, data packets can even be differentiated solely based on what type of data they are carrying, without the need for an explicit marking in the protocol header. This is possible by means of so-called Deep Packet Inspection (DPI). All of these features are currently deployed in the Internet as we know it, and many of them have been deployed for decades. The NN debate, however, sometimes questions the existence and use of QoS mechanisms in the Internet and argues that the success of the Internet was only possible due to the BE principle. While the vision of an Internet that is based purely on the BE principle is certainly not accurate, some of these claims nevertheless deserve credit.
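The difference between the pure best-effort picture and a QoS-aware router can be seen by feeding the same burst of packets into two queues. The first is a plain first-in-first-out queue that drops new arrivals when full; the second sends packets with a higher TOS value first. This is a sketch under stated assumptions: treating a larger TOS number as higher priority is a convention chosen for the example (as noted above, no general agreement on handling TOS was ever reached), and the class names, packet names and capacity are invented.

```python
import heapq
from collections import deque
from itertools import count

class FifoQueue:
    """Best effort: first in, first out; new arrivals are dropped when the queue is full."""
    def __init__(self, capacity: int):
        self.capacity, self.items, self.dropped = capacity, deque(), []

    def enqueue(self, packet: dict) -> None:
        if len(self.items) >= self.capacity:
            self.dropped.append(packet["id"])
        else:
            self.items.append(packet)

    def send_next(self):
        return self.items.popleft() if self.items else None

class TosPriorityQueue:
    """QoS sketch: higher TOS is sent first; ties keep arrival order."""
    def __init__(self, capacity: int):
        self.capacity, self.heap, self.dropped = capacity, [], []
        self.arrival = count()

    def enqueue(self, packet: dict) -> None:
        if len(self.heap) >= self.capacity:
            self.dropped.append(packet["id"])
        else:
            # Negative TOS so that the largest value comes out of the min-heap first.
            heapq.heappush(self.heap, (-packet["tos"], next(self.arrival), packet))

    def send_next(self):
        return heapq.heappop(self.heap)[2] if self.heap else None

def drain(queue) -> list[str]:
    order = []
    while (packet := queue.send_next()) is not None:
        order.append(packet["id"])
    return order

burst = [{"id": "mail-1", "tos": 0}, {"id": "voip-1", "tos": 5},
         {"id": "mail-2", "tos": 0}, {"id": "voip-2", "tos": 5},
         {"id": "mail-3", "tos": 0}]

fifo, prio = FifoQueue(capacity=4), TosPriorityQueue(capacity=4)
for packet in burst:
    fifo.enqueue(packet)
    prio.enqueue(packet)

fifo_order, prio_order = drain(fifo), drain(prio)
```

Both queues drop the fifth packet because they are full, but the priority queue lets the two voice packets jump ahead of the mail that arrived before them.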

_

Commercial internet:

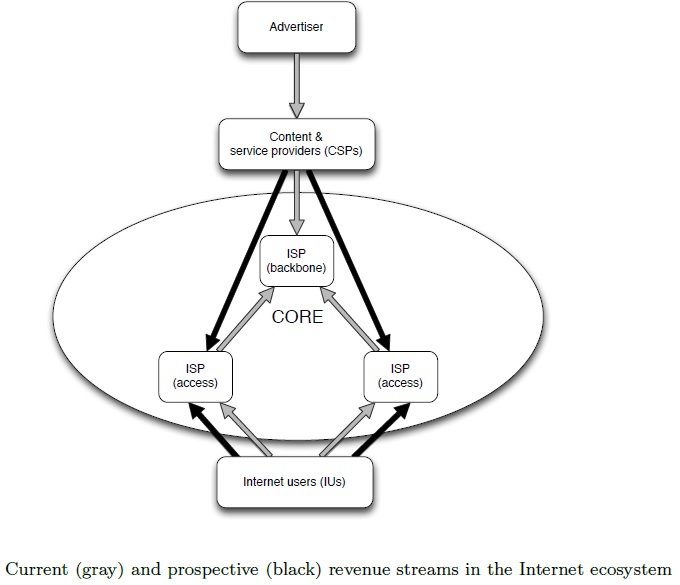

Another far-reaching event was the steady commercialization of the Internet in the 1990s. At about the same time, the disruptive innovation of content visualization and linkage via the Hyper Text Markup Language (HTML), the so-called World Wide Web (WWW), made the Internet a global success. Private firms began to invest heavily in backbone infrastructure and commercial ISPs provided access to the Internet, at first predominantly by dial-up connections. The average data traffic per household increased sharply with the availability of broadband and rich media content (Bauer et al., 2009). According to the Minnesota Internet Traffic Studies (Odlyzko et al., 2012), Internet traffic in the US is growing annually by about 50 percent. The increase in network traffic is the consequence of the ongoing transition of the Internet to a fundamental universal access technology. Media consumption using traditional platforms such as broadcasting and cable is declining and content is instead consumed via the Internet. Today the commercial Internet ecosystem consists of several players. Internet users (IUs) are connected to the network by their local access provider (ISP), while content and service providers (CSPs) offer a wide range of applications and content to the mass of potential consumers. All of these actors are spread around the world and interconnect with each other over the Internet’s backbone, which is under the control of an oligopoly of big network providers (Economides, 2005). The Internet has become a trillion dollar industry (Pélissié du Rausas et al., 2011) and has emerged from a mere network of networks to the market of markets. Much of the NN debate is devoted to the question whether the market for Internet access should be a free market, or whether it should be regulated in the sense that some feasible revenue flows are to be prohibited.

_

The principal Internet services:

• E-mail: person-to-person messaging; document sharing.

• Newsgroups: discussion groups on electronic bulletin boards.

• Chatting and instant messaging: interactive conversations.

• Telnet: logging on to one computer system and doing work on another.

• File Transfer Protocol (FTP): transferring files from computer to computer.

• World Wide Web: retrieving, formatting, and displaying information (including text, audio, graphics, and video) using hypertext links.

_

The modern Internet was invented to be a free and open network that allows anyone with a Web connection to communicate directly with any individual or computer on that network. Over the past 25 years, the Internet has transformed the way we do just about everything. Think about the conveniences and services that wouldn’t exist without the Internet:

• instant access to information about everything

• email

• online shopping

• online social networks

• independent global news sources

• streaming movies, TV shows and music

• online banking

• video calls and videoconferencing

The Internet has evolved so quickly and works so well precisely because the technology behind the Internet is neutral. In other words, the physical cables, routers, switches, servers and software that run the Internet treat every byte of data equally. A streaming movie from Netflix shares the same crowded fiber optic cable as the pictures from your niece’s birthday. The Internet doesn’t pick favourites. That, at its core, is what net neutrality means. And that’s one of the most important reasons why you should care about it: to keep the Internet as free, open and fair as possible, just as it was designed to be.

_

Networking:

Networking allows one computer to send information to and receive information from another. We may not always be aware of the numerous times we access information on computer networks. Certainly the Internet is the most conspicuous example of computer networking, linking millions of computers around the world, but smaller networks play a role in information access on a daily basis. We can classify network technologies as belonging to one of two basic groups. Local area network (LAN) technologies connect many devices that are relatively close to each other. Wide area network (WAN) technologies connect a smaller number of devices that can be many kilometers apart. Ethernet is a wired LAN technology while Wi-Fi is a wireless LAN technology. A WAN is a computer networking technology used to transmit data over long distances, and between different LANs and other localised computer networking architectures. Network nodes can be connected using any given technology, from circuit switched telephone lines (DSL) through radio waves (wireless broadband/mobile broadband) through optic fibre.

_

Broadband network:

The ideal telecommunication network has the following characteristics: broadband, multi-media, multi-point, multi-rate and economical implementation for a diversity of services (multi-services). The Broadband Integrated Services Digital Network (B-ISDN) was intended to provide these characteristics. Asynchronous Transfer Mode (ATM) was promoted as a target technology for meeting these requirements.

_

Multi-media:

A multi-media call may communicate audio, data, still images, or full-motion video, or any combination of these media. Each medium has different demands for communication quality, such as:

1. bandwidth requirement,

2. signal latency within the network, and

3. signal fidelity upon delivery by the network.

The information content of each medium may affect the information generated by other media. For example, voice could be transcribed into data via voice recognition, and data commands may control the way voice and video are presented. These interactions most often occur at the communication terminals, but may also occur within the network.

_______

Internet access:

Internet access connects individual computer terminals, computers, mobile devices, and computer networks to the Internet, enabling users to access Internet services, such as email and the World Wide Web. Internet service providers (ISPs) offer Internet access through various technologies that offer a wide range of data signalling rates (speeds). Consumer use of the Internet first became popular through dial-up Internet access in the 1990s. By the first decade of the 21st century, many consumers in developed nations used faster, broadband Internet access technologies. As of 2014, broadband was ubiquitous around the world, with a global average connection speed exceeding 4 Mbit/s.

_

Choosing an Internet service:

It all depends on where you live and how much speed you need. Internet service providers (ISPs) usually offer different levels of speed based on your needs. If you’re mainly using the Internet for email and social networking, a slower connection might be all you need. However, if you want to download a lot of music or watch streaming movies, you’ll want a faster connection. You’ll need to do some research to find out what the options are in your area. Here are some common types of Internet service.

Dial-up:

Dial-up is generally the slowest type of Internet connection, and you should probably avoid it unless it is the only service available in your area. Like a phone call, a dial-up modem will connect you to the Internet by dialling a number, and it will disconnect when you are done surfing the Web. Unless you have multiple phone lines, you will not be able to use your land line and the Internet at the same time with a dial-up connection.

DSL (digital subscriber line):

DSL service uses a broadband connection, which makes it much faster than dial-up. Like cable Internet, it is a high-speed service: DSL provides high-speed networking over ordinary phone lines using broadband modem technology. DSL technology allows Internet and telephone service to work over the same phone line without requiring customers to disconnect either their voice or Internet connections. DSL technology theoretically supports data rates of 8.448 Mbps, although typical rates are 1.544 Mbps or lower. DSL Internet services are used primarily in homes and small businesses. DSL only works over a limited physical distance and remains unavailable in many areas where the local telephone infrastructure does not support it, so you’ll need to contact your local ISP for information about your area. DSL connects to the Internet via a phone line but does not require you to have a landline telephone service at home. Unlike dial-up, it will always be on once it’s set up, and you’ll be able to use the Internet and your phone line simultaneously.
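As a rough illustration of what these rates mean in practice, the sketch below estimates how long a file takes to download at the two DSL rates quoted above. The 5 MB file size is an assumption chosen for illustration, and the calculation ignores protocol overhead and congestion, so real transfers will be slower.

```python
def download_seconds(size_megabytes, rate_mbps):
    """Ideal transfer time: size in bits divided by the line rate in bits/s."""
    size_bits = size_megabytes * 8_000_000  # 1 MB taken as 10^6 bytes
    return size_bits / (rate_mbps * 1_000_000)

SONG_MB = 5  # assumed size of one music file

for label, rate in [("DSL theoretical", 8.448), ("DSL typical", 1.544)]:
    print(f"{label}: {download_seconds(SONG_MB, rate):.1f} s")
```

Under these assumptions the file takes roughly 4.7 seconds at the theoretical rate and roughly 25.9 seconds at the typical rate.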

Cable:

Cable service connects to the Internet via cable TV, although you do not necessarily need to have cable TV in order to get it. It uses a broadband connection and can be faster than both dial-up and DSL service; however, it is only available in places where cable TV is available.

Satellite:

A satellite connection uses broadband but does not require cable or phone lines; it connects to the Internet through satellites orbiting the Earth. As a result, it can be used almost anywhere in the world, but the connection may be affected by weather. A satellite connection also relays data with a delay, so it is not the best option for people who use real-time applications, like gaming or video conferencing.
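The delay can be estimated from distance alone. The sketch below assumes a geostationary satellite at an altitude of about 35,786 km directly overhead (the text does not say which orbit is meant, and satellites in lower orbits have much shorter paths) and ignores processing time on the ground.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
GEO_ALTITUDE_KM = 35_786  # assumed geostationary orbit, satellite overhead

def one_way_delay_s(altitude_km=GEO_ALTITUDE_KM):
    """User -> satellite -> ground station: two legs of the altitude."""
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S

def round_trip_delay_s(altitude_km=GEO_ALTITUDE_KM):
    """Request out and reply back: four legs in total."""
    return 2 * one_way_delay_s(altitude_km)

print(f"one way: {one_way_delay_s() * 1000:.0f} ms")
print(f"round trip: {round_trip_delay_s() * 1000:.0f} ms")
```

Under these assumptions the round trip comes to nearly half a second before any processing, which is why real-time applications suffer.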

3G and 4G:

3G and 4G service is most commonly used with mobile phones and tablet computers, and it connects wirelessly through your ISP’s network. If you have a device that’s 3G or 4G enabled, you’ll be able to use it to access the Internet away from home, even when there is no Wi-Fi connection. However, you may have to pay per device to use a 3G or 4G connection, and it may not be as fast as DSL or cable.

Wireless hotspots:

If you’re out and about with an internet device like a laptop, tablet or smartphone, you might want to connect at a wireless hotspot. Wireless ‘hotspots’ are places like libraries and cafés, which offer you free access to their broadband connection (Wi-Fi). You may need to be a member of the library or a customer at a café to get the password for the wireless connection.

_

Wired vs. wireless internet access:

A wired network connects devices to the Internet or other network using cables. The most common wired networks use cables connected to Ethernet ports on the network router on one end and to a computer or other device on the cable’s opposite end. A wireless local-area network (LAN) uses radio waves to connect devices such as laptops to the Internet and to your business network and its applications. When you connect a laptop to a Wi-Fi hotspot at a cafe, hotel, airport lounge, or other public place, you’re connecting to that business’s wireless network. Almost all of the discussion surrounding net neutrality in the U.S. has been confined to wired (that is, cable, DSL and fiber) broadband, while in India most internet access is wireless mobile broadband. India has an abnormally high mobile to fixed broadband ratio of 4:1 and only 15.2 million wired broadband connections in a country of 1.25 billion. India has a fixed broadband penetration ratio of 1.2 per 100 as against the world average of 9.4 per 100. The Open Internet Order by the FCC adopted definitions for “fixed” and “mobile” Internet access service. It defined “fixed broadband Internet access service” to expressly include “broadband Internet access service that serves end users primarily at fixed endpoints using stationary equipment … fixed wireless services (including fixed unlicensed wireless services), and fixed satellite services.” It defined “mobile broadband Internet access service” as “a broadband Internet access service that serves end users primarily using mobile stations.” So fixed internet access includes both wired and wireless technology, while mobile internet access is always wireless. The transparency rule applied equally to both fixed and mobile broadband Internet access service. The no-blocking rule applied a different standard to mobile broadband Internet access services, and mobile Internet access service was excluded from the unreasonable discrimination rule.
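The penetration figure can be checked with simple arithmetic. The sketch below uses only the numbers quoted above; the function and variable names are illustrative.

```python
def per_100_inhabitants(connections, population):
    """Connections per 100 people."""
    return connections / population * 100

WORLD_AVERAGE_PER_100 = 9.4  # world average quoted in the text

india_fixed = per_100_inhabitants(15.2e6, 1.25e9)
gap = WORLD_AVERAGE_PER_100 / india_fixed

print(f"India fixed broadband: {india_fixed:.1f} per 100")
print(f"World average is about {gap:.1f} times higher")
```

With 15.2 million connections among 1.25 billion people the result rounds to 1.2 per 100, matching the figure in the text.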

_

| Wired network | Wireless network |
| --- | --- |
| Consumers use cable (cable TV), copper wire (DSL) or fiber-optic lines to connect to the internet | Consumers use radio waves to connect to the internet via a 3G/4G data card containing a modem (mobile broadband) or through Wi-Fi on a LAN |
| Large data transmission capacity; volume uncapped | Requires spectrum, which is a scarce public resource; limited data transmission capacity; restrictive volume caps |
| Multiple simultaneous users do not significantly affect speed | Multiple simultaneous users significantly reduce speed |
| Majority of the American population uses wired networks | Majority of the Indian population uses wireless networks |
| Net neutrality debate mainly involves wired transmission in America | Net neutrality debate mainly involves wireless transmission in India |
| Wired connection speed is near maximum throughput | Wireless connection speed is less than the maximum throughput due to various factors reducing signal strength |
| Wired connections generally have faster internet speed | Wireless connections generally have slower internet speed |
| You have to access the internet at a fixed point | You can move around with a device within the network coverage area for internet access |
| Voice and video quality not significantly affected by network congestion | Voice and video quality significantly affected by network congestion |

_____

What is spectrum?

Spectrum in wireless telephone/internet transmission is the radio frequency spectrum that ranges from very low frequency radio waves at around 10 kHz (30 kilometres wavelength) up to 100 GHz (3 millimetres wavelength). The radio spectrum is divided into frequency bands reserved for a single use or a range of compatible uses. Within each band, individual transmitters often use separate frequencies, or channels, so they do not interfere with each other. Because there are so many competing uses for wireless communication, strict rules are necessary to prevent one type of transmission from interfering with the next. And because spectrum is limited (there are only so many frequency bands), governments must oversee appropriate licensing of this valuable resource to facilitate use in all bands. Governments spend a considerable amount of time allocating particular frequencies for particular services, so that one service does not interfere with another. These allocations are agreed internationally, so that interference across borders, as well as between services, is minimised. Not all radio frequencies are equal. In general, lower frequencies can reach further beyond the visible horizon and are better at penetrating physical obstacles such as rain or buildings. Higher frequencies have greater data-carrying capacity, but less range and ability to pass through obstacles. For example, mobile broadband uses the spectrum of 225 MHz to 3700 MHz while Wi-Fi uses the 2.4 GHz and 5 GHz bands. Capacity is also dependent on the amount of spectrum a service uses, that is, the channel bandwidth. For many wireless applications, the best trade-off of these factors occurs in the frequency range of roughly 400 MHz to 4 GHz, and there is great demand for this portion of the radio spectrum.
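The wavelengths quoted for the ends of the radio spectrum follow from the relation wavelength = speed of light / frequency. The short sketch below reproduces them; the function name is illustrative and the speed of light in vacuum is used as an approximation for propagation in air.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second, in vacuum

def wavelength_m(frequency_hz):
    """Wavelength = speed of light / frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz

for label, freq in [("10 kHz", 10e3), ("2.4 GHz Wi-Fi", 2.4e9), ("100 GHz", 100e9)]:
    print(f"{label}: {wavelength_m(freq):.4g} m")
```

The results are about 30 km at 10 kHz and about 3 mm at 100 GHz, in line with the figures in the text, with Wi-Fi at 2.4 GHz falling at roughly 12.5 cm.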

_

All communication devices that use digital radio transmissions operate in a similar way. A transmitter generates a signal that contains encoded voice, video or data at a specific radio frequency, and this is radiated into the environment by an antenna (also known as an aerial). This signal spreads out in the environment, of which a very small portion is captured by the antenna of the receiving device, which then decodes the information. The received signal is incredibly weak — often only one part in a trillion of what was transmitted. In the case of a mobile phone call, a caller’s voice is converted by the handset into digital data, transmitted via radio to the network operator’s nearest tower or base station, transferred to another base station serving the recipient’s location, and then transmitted again to the recipient’s phone, which converts the signal back into audio through the earpiece. There are a number of standards for mobile phones and base stations, such as GSM, WCDMA and LTE, which use different methods for coding and decoding, and ensure that users can only receive voice calls and data that are intended for them.

_

The bandwidth of a radio signal is the difference between the upper and lower frequencies of the signal. For example, in the case of a voice signal having a minimum frequency of 200 hertz (Hz) and a maximum frequency of 3,000 Hz, the bandwidth is 2,800 Hz (roughly 3 kHz). The amount of bandwidth needed for 3G services could be as much as 15-20 MHz, whereas for 2G services a bandwidth of 30-200 kHz is used. Hence, 3G requires far more bandwidth. Please do not confuse the bandwidth of 2G/3G spectrum with the bandwidth of internet transmission, i.e. internet speed.
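The voice example is a simple subtraction, sketched below with an illustrative function name. The final line compares the largest 3G and 2G figures quoted above, purely to show the scale of the difference.

```python
def bandwidth_hz(lower_hz, upper_hz):
    """Bandwidth is the upper frequency minus the lower frequency."""
    if upper_hz < lower_hz:
        raise ValueError("upper frequency must not be below lower frequency")
    return upper_hz - lower_hz

voice = bandwidth_hz(200, 3_000)
print(f"voice channel: {voice} Hz")

# Widest 3G figure in the text (20 MHz) against the widest 2G figure (200 kHz).
ratio_3g_to_2g = 20e6 / 200e3
print(f"3G / 2G: {ratio_3g_to_2g:.0f}x")
```

The voice channel comes out at 2,800 Hz, and the widest 3G allocation is 100 times the widest 2G one.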

_

What is Broadband?

Broadband is a technology that transmits data at high speed along cables, ISDN / DSLs (Digital Subscriber Lines) and mobile phone networks. The most common type of broadband is ADSL (carried along phone lines), though cable (using new fibre-optic cables) and mobile broadband (using 3G and 4G mobile reception) are hot contenders to topple ADSL’s dominance. ADSL broadband comes from your local telephone exchange, through a Fixed Line Access Network made out of copper wires. These are the telephone lines that you see in the street. The lines in the street connect to the wiring inside your house and provide you with an internet and phone connection through the socket on the wall. Unlike the copper wires of an ADSL connection, cables are partially made of fibre-optic material, which allows for much faster broadband speeds and increased reliability. The other advantage of cable is that it also allows for the transmission of audio and visual signals, which means you can get both landline and digital TV services from your cable broadband provider. Mobile broadband uses 3G and 4G mobile phone technology. High-speed 3G data is made possible by two complementary technologies, HSDPA and HSUPA (High-Speed Downlink Packet Access and High-Speed Uplink Packet Access, respectively).

_

Broadband provides improved access to Internet services such as:

1. Faster World Wide Web browsing

2. Faster downloading of documents, photographs, videos, and other large files

3. Telephony, radio, television, and videoconferencing

4. Virtual private networks and remote system administration

5. Online gaming, especially massively multiplayer online role-playing games which are interaction-intensive

_

Broadband technologies supply considerably higher bit rates than dial-up, generally without disrupting regular telephone use. Various minimum data rates and maximum latencies have been used in definitions of broadband, ranging from 64 kbit/s up to 4.0 Mbit/s. In 1988 the CCITT standards body defined “broadband service” as requiring transmission channels capable of supporting bit rates greater than the primary rate which ranged from about 1.5 to 2 Mbit/s. A 2006 Organization for Economic Co-operation and Development (OECD) report defined broadband as having download data transfer rates equal to or faster than 256 kbit/s. And in 2015 the U.S. Federal Communications Commission (FCC) defined “Basic Broadband” as data transmission speeds of at least 25 Mbit/s downstream (from the Internet to the user’s computer) and 3 Mbit/s upstream (from the user’s computer to the Internet). The trend is to raise the threshold of the broadband definition as higher data rate services become available.
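To see how the rising threshold changes what counts as broadband, the sketch below checks a connection against two of the definitions quoted above. The dictionary layout and function name are illustrative assumptions, and the OECD entry has no upstream requirement because the text gives only a download rate for it.

```python
# Thresholds quoted in the text, in kbit/s: (downstream, upstream).
DEFINITIONS = {
    "OECD 2006": (256, 0),
    "FCC 2015": (25_000, 3_000),
}

def meets(download_kbps, upload_kbps=0):
    """Return the names of the definitions that a connection satisfies."""
    return [
        name
        for name, (min_down, min_up) in DEFINITIONS.items()
        if download_kbps >= min_down and upload_kbps >= min_up
    ]

print(meets(1_544))           # typical DSL rate mentioned earlier
print(meets(30_000, 3_000))   # hypothetical faster connection
```

A typical 1.544 Mbit/s DSL line qualifies under the 2006 OECD definition but falls well short of the 2015 FCC one.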

_

Broadband infrastructure: