Dr Rajiv Desai

An Educational Blog

Digital Twin

Digital Twin:

Above is an image from the digital twin (DT) of BMW’s factory in Regensburg, Bavaria, created in NVIDIA’s Omniverse. There are two versions of a BMW factory in the medieval town of Regensburg, Germany. One is a physical plant that cranks out thousands of cars a year. The other is a virtual 3-D replica, accessed by screen or VR headset, in which every surface and every bit of machinery looks exactly the same as in real life. Soon, whatever is happening in the physical factory will be reflected inside the virtual one in real time: frames being dipped in paint; doors being sealed onto hinges; avatars of workers carrying machinery to its next destination. The latter factory is an example of a “digital twin”: an exact digital re-creation of an object or environment. The concept might at first seem like sci-fi babble or even a frivolous experiment: Why would you spend time and resources to create a digital version of something that already exists in the real world?

_____

_____

Section-1

Prologue:

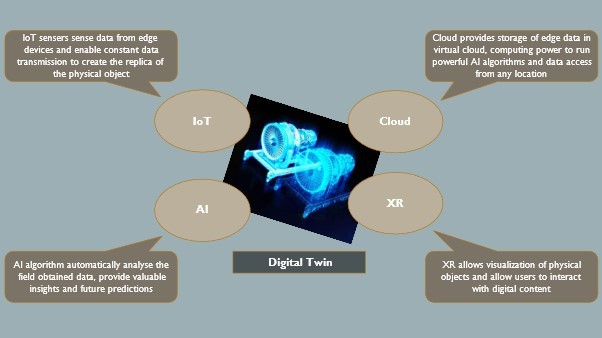

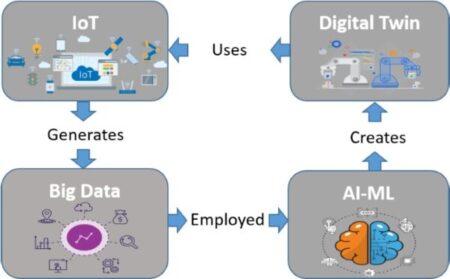

For centuries, people have used pictures and models to help them tackle complex problems. Great buildings first took shape on the architect’s drawing board. Classic cars were shaped in wood and clay. Over time, our modelling capabilities have become more sophisticated. Computers have replaced pencils. 3D computer models have replaced 2D drawings. Advanced modelling systems can simulate the operation and behavior of a product as well as its geometry. Until recently, however, there remained an unbridged divide between model and reality. No two manufactured objects are ever truly identical, even if they have been built from the same set of drawings. Computer models of machines don’t evolve as parts wear out and are replaced, as fatigue accumulates in structures, or as owners make modifications to suit their changing needs. That gap is now starting to close. Fueled by developments in the internet of things (IoT), big data, artificial intelligence, cloud computing, and digital reality technologies, the recent arrival of digital twins (DTs) heralds a tipping point where the physical and digital worlds can be managed as one, and we can interact with the digital counterpart of physical things much like we would interact with physical things themselves in 3D space around us. Led by the engineering, manufacturing, automotive, and energy industries in particular, digital twins are already creating new value. They are helping companies to design, visualize, monitor, manage, and maintain their assets more effectively. And they are unlocking new business opportunities like the provision of advanced services and the generation of valuable insight from operational data.

_

A Digital Twin is a virtual world that matches the real world in its complexity, scale, and accuracy. It is an exact digital re-creation of an object or environment, such as a road network or underground water infrastructure, and there are many things you can do in it. The ‘measure twice, cut once’ proverb in carpentry teaches that measurements should be double-checked before cutting the wood. That is, before taking any action, we should plan carefully so that we do not waste time, energy, or resources correcting mistakes. With a Digital Twin, city planners can see what would happen if they modified a city’s layout, planned a road, or changed the traffic systems. They can compute not just one possible future but many possible futures. And if a plan doesn’t work in the Digital Twin, it is unlikely to work in the real world. Testing it first helps us avoid bad decisions, and the more ‘what-if’ situations we test, the more creative and effective the solution can be. It’s how we measure twice and cut once. Digital twins play the same role for complex machines and processes as food tasters for monarchs or stunt doubles for movie stars: they prevent harm that could otherwise be done to precious assets. By moving trials into the virtual world, these duplicates save time, money, and effort for numerous businesses while protecting the health and safety of high-value resources.

_

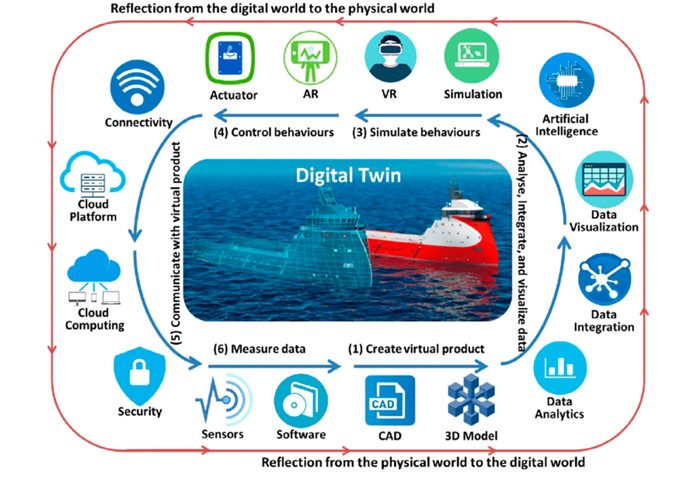

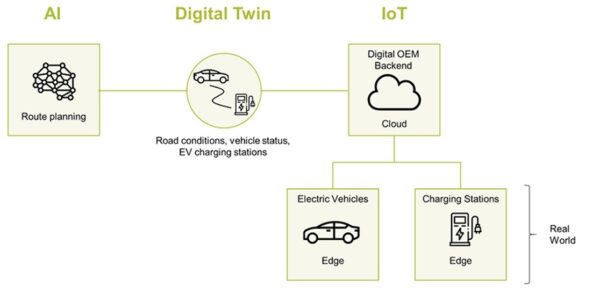

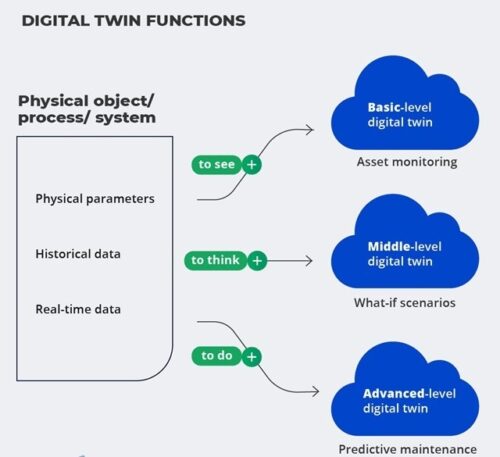

Marshall McLuhan once famously observed, “First we build the tools, then they build us.” Building on artificial intelligence (AI), the Internet of Things (IoT), and 5G communications, advanced software systems are remaking the nature and complexity of human engineering. In particular, digital twin technology can give companies better insights to inform decision-making. A Digital Twin can be defined as a software representation of a physical asset, system, or process designed to detect, prevent, predict, and optimize through real-time analytics to deliver business value. There are many definitions of a digital twin, but there is broad consensus around this one: “a virtual representation of an object or system that spans its lifecycle, is updated from real-time data and uses simulation, machine learning, and reasoning to help decision-making.” In other words, a digital twin is a virtual instance of a physical system (twin) that is continually updated with the latter’s performance, maintenance, and health status data throughout the physical system’s life cycle. A digital twin can draw on artificial intelligence, the Internet of Things, big data, blockchain, virtual reality technologies, collaborative platforms, APIs, and open standards. Because they combine constantly updated data from a wide range of areas with the computing power of a virtual environment, digital twins can give a clearer picture and address more issues from more vantage points than a standard simulation can, with greater potential to improve products and processes.
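To make the definition above concrete, here is a minimal Python sketch of its core loop: a virtual object that is continually updated from real-time sensor data and uses a (very crude) prediction to support a decision. Everything in it is hypothetical and chosen only for illustration: the `PumpTwin` class, the vibration limit, and the linear extrapolation that stands in for the simulation and machine learning a real twin would use.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PumpTwin:
    """Hypothetical digital twin of a pump, kept in sync with its vibration sensor."""
    asset_id: str
    vibration_limit: float  # assumed alarm threshold in mm/s (illustrative only)
    window: int = 3         # number of recent readings used to estimate the trend
    history: List[float] = field(default_factory=list)

    def update(self, vibration: float) -> None:
        # Mirror the latest real-time reading from the physical pump.
        self.history.append(vibration)

    def predicted_next(self) -> float:
        # Crude stand-in for simulation/ML: extrapolate the recent linear trend.
        if not self.history:
            raise ValueError("no sensor data received yet")
        recent = self.history[-self.window:]
        if len(recent) < 2:
            return recent[-1]
        slope = (recent[-1] - recent[0]) / (len(recent) - 1)
        return recent[-1] + slope

    def recommend(self) -> str:
        # Decision support: act before the predicted value reaches the limit.
        if self.predicted_next() >= self.vibration_limit:
            return "schedule maintenance"
        return "ok"


twin = PumpTwin("pump-7", vibration_limit=7.0)
for reading in [4.0, 5.0, 6.0]:
    twin.update(reading)
print(twin.predicted_next(), twin.recommend())  # 7.0 schedule maintenance
```

The point of the sketch is the shape of the loop (update from data, predict, advise), not the prediction method, which a real twin would replace with physics-based simulation or a trained model.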

_

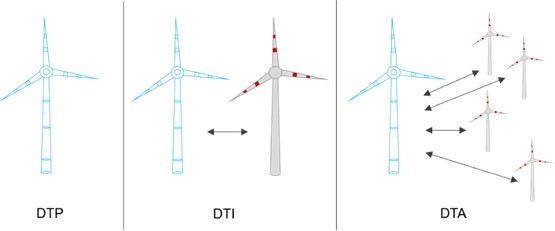

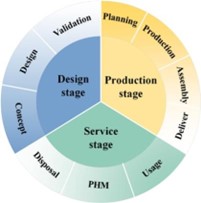

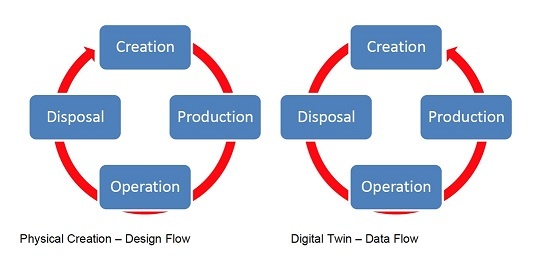

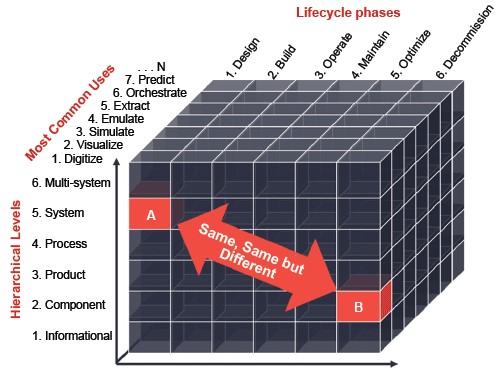

It is a common misconception that Digital Twins only come into use once the physical system has been created; in fact, Digital Twins are digital replicas of real and potential physical assets (i.e., “Physical Twins”). The ability to develop and test new products using virtual models (digital twins) before producing them physically has saved companies valuable time and money. Digital twins are used throughout the programme lifecycle and have reduced engineers’ reliance on physical prototypes. A digital twin can be one of three types: 1) digital twin prototype (DTP); 2) digital twin instance (DTI); and 3) digital twin aggregate (DTA). A DTP is a digital model of an object that has not yet been created in the physical world, e.g., a 3D model of a component. The primary purpose of a DTP is to build an ideal product that covers all the important requirements of the physical world. A DTI, on the other hand, is a virtual twin of an already existing object, focusing on only one of its aspects. Finally, a DTA is an aggregate of multiple DTIs that may be an exact digital copy of the physical twin; for example, the digital twins of a spacecraft structure. The digital twin concept is a clear example of the convergence of the physical and virtual worlds. Essentially, a digital twin uses real-world data to create a computer simulation that predicts how a product or process will perform. These programs can be integrated with the Internet of Things (IoT), artificial intelligence (AI), and software analytics, all in a bid to enhance output.
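One way to picture the three types described above is as data structures: a DTP holds design intent, each DTI is linked to a specific physical object, and a DTA pools many DTIs so that questions can be asked across the whole population. The sketch below is a hypothetical illustration; the class names, fields, and the `strain` measurement are assumptions, not a standard schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DigitalTwinPrototype:
    """DTP: a model of a product that does not yet exist physically."""
    design_name: str
    requirements: Dict[str, float]  # design targets the physical product must meet


@dataclass
class DigitalTwinInstance:
    """DTI: a twin of one specific, already existing physical object."""
    prototype: DigitalTwinPrototype  # the design this object was built from
    serial_number: str
    measurements: Dict[str, float] = field(default_factory=dict)


@dataclass
class DigitalTwinAggregate:
    """DTA: a collection of DTIs that can be queried as a population."""
    instances: List[DigitalTwinInstance] = field(default_factory=list)

    def add(self, dti: DigitalTwinInstance) -> None:
        self.instances.append(dti)

    def average(self, key: str) -> float:
        # Fleet-level view built from every instance that reports `key`.
        values = [i.measurements[key] for i in self.instances if key in i.measurements]
        if not values:
            raise ValueError(f"no instance reports {key!r}")
        return sum(values) / len(values)


design = DigitalTwinPrototype("bracket-A", {"max_load_kN": 12.0})
fleet = DigitalTwinAggregate()
fleet.add(DigitalTwinInstance(design, "SN-001", {"strain": 0.25}))
fleet.add(DigitalTwinInstance(design, "SN-002", {"strain": 0.75}))
print(fleet.average("strain"))  # 0.5
```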

_

Some examples of digital twins:

-1. Twin earth – NVIDIA Earth 2:

NVIDIA’s recently launched Earth-2 initiative aims to build digital twins of the Earth to address one of the most pressing challenges of our time, climate change. Earth-2 aims to improve our predictions of extreme weather and projections of climate change, and to accelerate the development of effective mitigation and adaptation strategies, all using the most advanced and scientifically principled machine learning methods at unprecedented scale. By combining accelerated computing with physics-informed machine learning at scale on today’s largest supercomputing systems, Earth-2 is intended to provide actionable weather and climate information at regional scales.

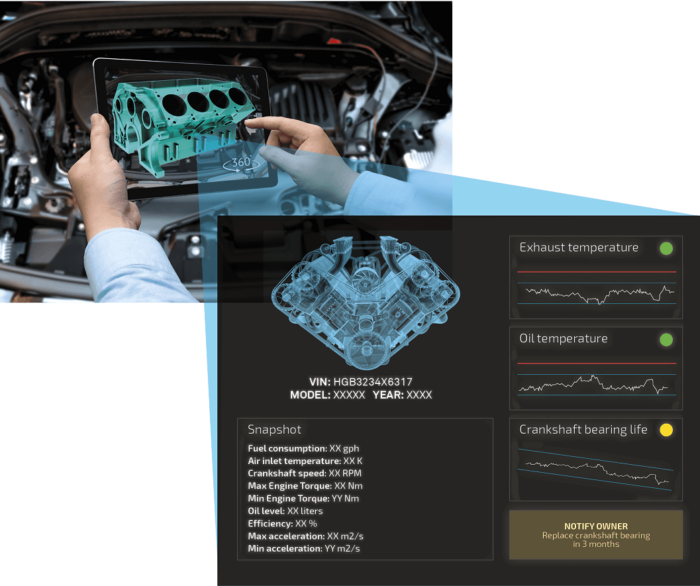

-2. Tesla – a digital twin for every car made:

Tesla creates a digital simulation of every one of its cars, using data collected from sensors on the vehicles and uploaded to the cloud. These simulations allow the company’s AI algorithms to determine where faults and breakdowns are most likely to occur, minimizing the need for owners to take their cars to service stations for repairs and maintenance. This reduces the company’s cost of servicing cars under warranty and improves the user experience, leading to more satisfied customers and a higher chance of winning repeat business.

-3. Digital twin of a city:

Did you know that the densely populated city of Shanghai has its own fully deployed digital twin? It was created by mapping every physical device to a new virtual world and applying artificial intelligence, machine learning, and IoT technologies to that map. The “digital twin” technique has been used in pandemic control and prevention following the COVID-19 outbreak. Similarly, Singapore is preparing for a full deployment of its own digital twin.

_

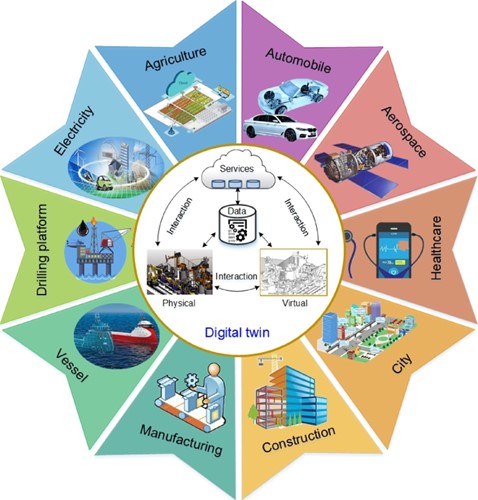

Digital twins are now proving invaluable across multiple industries, especially those that involve costly or scarce physical objects. Created by feeding video, images, blueprints, or other data into advanced 3-D mapping software, digital twins are being used in medicine to replicate and study internal organs. They have helped engineers devise car and plane prototypes, including Air Force fighter jets, more quickly. They allow architects and urban planners to envision and then build skyscrapers and city blocks with clarity and precision. And in 2021, digital twins began to break into the mainstream of manufacturing and research. Chipmaker Nvidia launched a version of its Omniverse 3-D simulation engine that allows businesses to build 3-D renderings of their own, including digital twins. Amazon Web Services announced a competing service, AWS IoT TwinMaker. Digital twins could have huge implications for training workers and for formulating complicated technical plans without wasting physical resources, and even for improving infrastructure and combatting climate change. Health care, construction, education, taking city kids on safari: it’s hard to imagine where digital twins won’t have an impact. In the future, everything in the physical world could be replicated in digital space through digital twin technology.

In a lighter vein, my digital twin will keep writing articles for you after my death.

______

______

Abbreviations and synonyms:

DT = digital twin

MDT = mobile digital twin

CDT = cognitive digital twin

CAD = computer aided design

CAE = computer aided engineering

CAM = computer aided manufacturing

AI = artificial intelligence

ML = machine learning

IoT = internet of things

VR = virtual reality

AR = augmented reality

XR = extended reality

MR = mixed reality

CPS = cyber-physical systems

PLM = product lifecycle management

NPP = nuclear power plant

NRC = Nuclear Regulatory Commission

O&M = operating and maintenance

PRA = probabilistic risk assessment

MBSE = model-based systems engineering

FEA = finite element analysis

PDM = product data management

ERP = enterprise resource planning

MRP = material requirements planning

CAFM = computer aided facility management

RUL = remaining useful life

BOM = bill of materials

ROI = return on investment

CVE = common vulnerabilities and exposures

BIM = building information modelling

OT = operational technology

DTO = digital twin of an organization

OEM = original equipment manufacturer

_____

_____

Section-2

Digital engineering, Industry 4.0 and digital twins:

_

Digital Engineering and digital twins:

Digital Engineering is an umbrella term referring to the synergistic application of electronic and software technologies to facilitate the architecture, analysis, design, simulation, building, and testing of complex software-intensive systems-of-systems. The ultimate goal of Digital Engineering is to produce Digital Twins: digital replicas of real and potential physical assets (i.e., “Physical Twins”), which may be inanimate or animate physical entities. Digital Twins have broad uses, and for Systems Integration & Testing purposes a Digital Twin should be largely indistinguishable from its Physical Twin.

_

The increasing reliance of our information-age economies and governments on software-intensive Systems-of-Systems makes us progressively more vulnerable to their failures. Due to advances in Artificial Intelligence (AI) and Machine Learning (ML), these Systems-of-Systems are growing rapidly in size and complexity, and traditional Model-Based Systems Engineering (MBSE) technologies are insufficient to manage them. The recent problems of the Lockheed Martin F-35 program and the Boeing 737 MAX MCAS are cases in point. Consequently, we must seek more robust and scalable solutions to the System-of-System Sprawl (cf. System Ball-of-Mud) anti-pattern, which is a fractal problem. Fractal problems require recursive solutions. Digital Engineering’s recursive “Simulation-of-Simulations” approach to the System-of-Systems fractal problem represents a major improvement over traditional MBSE approaches.

_

Digital engineering and digital twins are closely related, as digital engineering techniques and tools are often used to develop and maintain digital twins. Digital engineering is an interdisciplinary field that uses a variety of techniques and tools, such as modelling, simulation, and analytics, to design, develop, and analyze complex systems and Systems-of-Systems. Digital twins are digital representations of physical systems or processes, and can be used to simulate, monitor, and optimize the performance and behavior of these systems in real time. In other words, digital engineering provides the tools and techniques used to create, manage, and analyze digital twins, while digital twins provide a means of applying these techniques and tools to specific systems and applications. The two are often used together in a variety of applications, such as engineering design, manufacturing, supply chain management, and maintenance. Overall, digital engineering and digital twins are distinct yet interrelated, and they often intersect and inform each other.

_

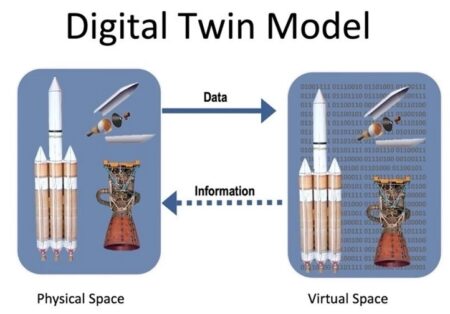

A Digital Twin is a real-time virtual replica of a real-world physical system or process (i.e., a “Physical Twin”) that serves as its indistinguishable digital counterpart for practical purposes, such as systems simulation, integration, testing, and maintenance. In order to pragmatically achieve indistinguishability between Digital Twins and their Physical Twin counterparts, massive coarse- and fine-grained Modelling & Simulation (M&S) is typically required. From a pragmatic Systems Engineering perspective, Physical Twins represent “Systems-of-Systems” and Digital Twins represent “Simulations-of-Simulations” of “Systems-of-Systems”.

Digital Twins are produced by systematically applying Digital Engineering technologies, including both dynamic (behavioral) and mathematical (parametric) M&S, in a synergistic manner that blurs the usual distinctions between real physical systems and virtual logical systems. From a Systems Integration & Testing perspective, a Digital Twin should be indistinguishable from its Physical Twin counterpart. Consequently, Digital Twins can be Verified & Validated (V&V) by the following pragmatic test:

A reasonable litmus test for a “Digital Twin” is analogous to the Turing Test for AI. Suppose you have a System Tester (ST), a Physical System (PS), and a Digital Twin (DT) for PS. If the ST can run a robust system Verification & Validation (V&V) Test Suite on both PS and DT, but cannot distinguish between them with a probability greater than 80%, then DT can be considered a bona fide Digital Twin of PS.
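The litmus test above can be made operational in code. The sketch below is one possible reading of it, under assumptions not found in the source: each system is modelled as a function from a test input to a scalar output, the tester is a function that sees the two outputs without labels and guesses which one came from the physical system, and each input is shown in both orders (counterbalancing) so that position carries no information. The function names are hypothetical; only the 80% threshold comes from the text.

```python
from typing import Callable, Sequence

System = Callable[[float], float]
# A tester sees an input and two unlabeled outputs and returns 0 or 1:
# its guess for which position holds the Physical System's output.
Tester = Callable[[float, float, float], int]


def distinguish_rate(physical: System, twin: System, tester: Tester,
                     test_inputs: Sequence[float]) -> float:
    """Fraction of trials in which the tester correctly picks the physical system."""
    correct = trials = 0
    for x in test_inputs:
        ps_out, dt_out = physical(x), twin(x)
        # Present each pair in both orders so position gives nothing away.
        for (a, b), ps_position in (((ps_out, dt_out), 0), ((dt_out, ps_out), 1)):
            if tester(x, a, b) == ps_position:
                correct += 1
            trials += 1
    return correct / trials


def is_bona_fide_twin(rate: float, threshold: float = 0.8) -> bool:
    # The twin passes if the tester cannot distinguish it with probability > threshold.
    return rate <= threshold


def physical(x: float) -> float:
    return 2.0 * x


def biased_twin(x: float) -> float:
    return 2.0 * x + 1.0  # systematic offset a tester can learn to spot


def guess_smaller(x: float, a: float, b: float) -> int:
    return 0 if a <= b else 1  # assumes the physical system reads lower


inputs = [0.0, 1.0, 2.5]
print(distinguish_rate(physical, physical, guess_smaller, inputs))     # 0.5
print(distinguish_rate(physical, biased_twin, guess_smaller, inputs))  # 1.0
```

A perfect replica leaves the tester at chance (0.5), while the biased twin is caught every time. Note that passing against one tester is weak evidence; a stricter version of the test would try many testers and take the best distinguishing rate.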

_

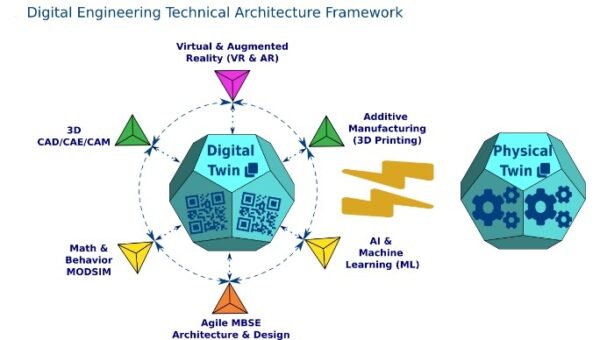

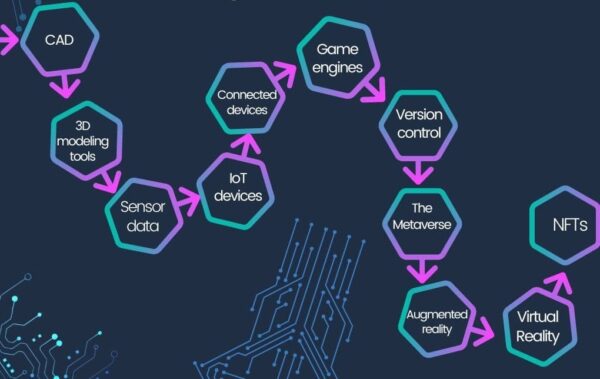

Digital Engineering Technical Architecture Framework™ (DETAF™) is a reference Enterprise Architecture Framework (EAF) for architecting, designing, building, testing, and integrating Digital Twins (virtual Simulations-of-Simulations) that are indistinguishable from their Physical Twin (physical Systems-of-Systems) counterparts. The architecture infrastructure of DETAF is the 6D System M-Model™ Enterprise Architecture Framework for Agile MBSE™, which has been customized to support the following core Digital Engineering technologies (figure below), which are listed as related technology pairs: Massive Mathematical & Dynamic M&S; Artificial Intelligence (AI) & Machine Learning (ML); 3D CAD & 3D Printing; and Virtual Reality (VR) & Augmented Reality (AR). The DETAF is designed to be highly scalable, simulatable, and customizable.

_

Digital Engineering and its associated Digital Twins promise to revolutionize how we architect future Systems-of-Systems. The following interrelated Engineering sub-disciplines will likely play key roles in the Digital Twin technology revolution:

- Agile MBSE technologies provide the architecture infrastructure for recursively scaling and simulating Digital Twins. Agile MBSE technologies are the distillation of a quarter century of lessons learned from Model-Driven Development (MDD), Model-Based Systems Engineering (MBSE), and Agile/Lean Development technologies, and can provide the recursive architecture and design patterns needed to address the fractal Digital Twin System-of-System challenge.

- Massive Mathematical & Dynamic M&S technologies provide the large-scale Modelling & Simulation (M&S) infrastructure necessary for recursively simulating Physical Twins as Digital Twins. It is essential that mathematical (parametric) and dynamic (behavioral) simulations for Digital Twins are fully integrated and applied on a massive scale, much as massive data sets and big data fuel Machine Learning (ML) technology. Stated otherwise, if a Physical Twin is a “System-of-Systems”, then a Digital Twin is a “Simulation-of-Simulations” for a “System-of-Systems”.

- Artificial Intelligence (AI) & Machine Learning (ML) technologies provide the software infrastructure needed for constructing intelligent (“smart”) Physical Twins, and by extension their Digital Twin counterparts. Since AI & ML technologies are rapidly transforming how software-intensive Systems-of-Systems interact with humans, these complementary technologies will play a critical role in the evolution of Digital Twin technology.

- 3D CAD & 3D Printing (a.k.a. Additive Manufacturing) technologies provide the electro-mechanical infrastructure needed for the physical construction of Physical Twins, and by extension their Digital Twin counterparts. Since the ability to turn a 3D CAD design directly into a 3D-printed product (design = implementation) is rapidly transforming how we design and manufacture Systems-of-Systems, these complementary technologies will also play a vital role in the evolution of Digital Twin technology.

- Virtual Reality (VR) & Augmented Reality (AR) technologies provide the simulation infrastructure for the massive simulations (Simulation-of-Simulations) required by Digital Twins in order to be indistinguishable from their Physical Twin counterparts. Since VR/AR technologies are rapidly transforming how we interact with Systems-of-Systems, they will additionally play a critical role in the evolution of Digital Twin technology.

______

______

Industrial Revolutions:

First Industrial Revolution:

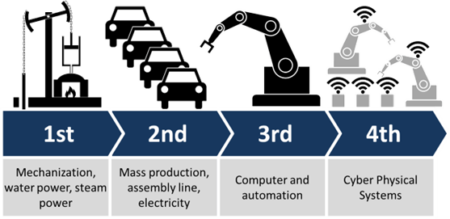

The First Industrial Revolution was marked by a transition from hand production methods to machines through the use of steam power and water power. Because the new technologies took a long time to spread, the period is usually dated from about 1760 to between 1820 and 1840 in Europe and the United States. It affected textile manufacturing, which was the first to adopt such changes, as well as the iron industry, agriculture, and mining, and it also had societal effects, including an ever stronger middle class.

Second Industrial Revolution:

The Second Industrial Revolution, also known as the Technological Revolution, is the period between 1871 and 1914. It resulted from the installation of extensive railroad and telegraph networks, which allowed faster transfer of people and ideas, and from the spread of electricity. Increasing electrification allowed factories to develop the modern production line. It was a period of great economic growth and rising productivity, which also caused a surge in unemployment as many factory workers were replaced by machines.

Third Industrial Revolution:

The Third Industrial Revolution, also known as the Digital Revolution, occurred in the late 20th century, after the end of the two world wars, following a slowdown of industrialization and technological advancement compared to previous periods. Its roots go back to Konrad Zuse’s Z1 computer (built 1936 to 1938), which used binary floating-point numbers and Boolean logic and marked the beginning of more advanced digital developments. A later significant development was the supercomputer; with extensive use of computer and communication technologies in the production process, machinery began to reduce the need for human power.

Fourth Industrial Revolution:

The term Industry 4.0 is used interchangeably with the Fourth Industrial Revolution and represents a new stage in the organization and control of the industrial value chain. Cyber-physical systems (e.g., ‘smart machines’) form the basis of Industry 4.0. In essence, the Fourth Industrial Revolution is the trend towards automation and data exchange in manufacturing technologies and processes, which include cyber-physical systems (CPS), IoT, the industrial internet of things, cloud computing, cognitive computing, and artificial intelligence. CPSs are defined as systems that use various sensors to understand physical components, automatically transfer the captured data to cyber components, analyze the data, and convert it via cyber processes into the information required to make decisions and take actions. Intelligent building systems are one example of CPSs. Machines cannot replace deep human expertise, but they tend to be more efficient than humans at repetitive functions, and the combination of machine learning and computational power allows machines to carry out highly complicated tasks.
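The CPS cycle described above (sense the physical component, transfer the data to the cyber side, analyze it into information, then decide and act) can be sketched for the intelligent-building example. This is a minimal illustration under assumed details: the `Room` class, the simulated sensor offsets, the setpoint, and the hysteresis band are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Room:
    """Stand-in for the physical component: a room with a heater."""
    temperature: float
    heater_on: bool = False


def sense(room: Room) -> List[float]:
    # Hypothetical sensors: three readings scattered around the true value.
    return [room.temperature, room.temperature + 0.2, room.temperature - 0.2]


def analyze(readings: List[float]) -> float:
    # Cyber side: fuse raw sensor data into usable information.
    return mean(readings)


def decide(estimate: float, heater_on: bool, setpoint: float, band: float = 0.5) -> bool:
    # Hysteresis control: switch only when outside the band around the setpoint.
    if estimate < setpoint - band:
        return True
    if estimate > setpoint + band:
        return False
    return heater_on  # inside the band: keep the current state


def act(room: Room, heater_on: bool) -> None:
    # Actuation: the decision flows back to the physical component.
    room.heater_on = heater_on


def control_cycle(room: Room, setpoint: float) -> float:
    estimate = analyze(sense(room))
    act(room, decide(estimate, room.heater_on, setpoint))
    return estimate


cold = Room(temperature=18.0)
control_cycle(cold, setpoint=21.0)
print(cold.heater_on)  # True
```

The hysteresis band is a common design choice in building control: it stops the heater from rapidly toggling when the temperature hovers around the setpoint.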

_

Industry 4.0 is a concept that refers to the current trend of automation and data exchange, which includes cyber-physical systems (CPSs), the Internet of things (IoT), cloud computing, cognitive computing, and the development of smart businesses. The Fourth Industrial Revolution, 4IR, or Industry 4.0, conceptualizes rapid change to technology, industries, and societal patterns and processes in the 21st century due to increasing interconnectivity and smart automation. The term has been used widely in scientific literature and was popularized in 2015 by Klaus Schwab, founder and executive chairman of the World Economic Forum. Schwab asserts that the changes seen are more than just improvements to efficiency and represent a significant shift in industrial capitalism.

The technological basis of Industry 4.0 is rooted in the Internet of Things (IoT), which proposed embedding electronics, software, sensors, and network connectivity into devices (i.e., “things”) to allow the collection and exchange of data through the internet. IoT can therefore be exploited at the industrial level: devices can be sensed and controlled remotely across network infrastructures, allowing more direct integration between the physical world and virtual systems and resulting in higher efficiency, accuracy, and economic benefits. Although Industry 4.0 is a recent trend, it has been widely discussed and its key technologies have been identified; among them, Cyber-Physical Systems (CPS) have been proposed as smart embedded and networked systems within production systems. CPSs operate at both virtual and physical levels, interacting with and controlling physical devices, sensing and acting on the real world. According to the scientific literature, fully exploiting the potential of CPS and IoT requires proper data models, such as ontologies, which are explicit, semantic, and formal conceptualizations of the concepts in a domain. Ontologies are the core semantic technology providing the intelligence embedded in a smart CPS, and they can help integrate and share large amounts of sensed data. Big Data analytics tools then make it possible to access this sensed data for rapid decision-making and improved productivity.
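To illustrate how an ontology can help integrate sensed data, here is a toy, in-memory example: facts are stored as (subject, predicate, object) triples, with a single inference rule (subclass transitivity), so a query for all `Device` or `Sensor` entities also finds temperature and vibration sensors that were never explicitly labelled that way. This is a deliberately simplified, hypothetical sketch; real deployments would typically use a standard ontology language such as OWL with an RDF store and a reasoner, and the class and device names here are made up.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical plant ontology: class hierarchy plus facts about two devices.
TRIPLES: Set[Triple] = {
    ("TemperatureSensor", "subClassOf", "Sensor"),
    ("VibrationSensor", "subClassOf", "Sensor"),
    ("Sensor", "subClassOf", "Device"),
    ("ts-01", "type", "TemperatureSensor"),
    ("vs-07", "type", "VibrationSensor"),
    ("ts-01", "locatedIn", "Line-3"),
    ("vs-07", "locatedIn", "Line-3"),
}


def superclasses(cls: str, triples: Set[Triple]) -> Set[str]:
    """Transitive closure of subClassOf, including the class itself."""
    result, frontier = {cls}, [cls]
    while frontier:
        current = frontier.pop()
        for s, p, o in triples:
            if s == current and p == "subClassOf" and o not in result:
                result.add(o)
                frontier.append(o)
    return result


def instances_of(cls: str, triples: Set[Triple]) -> Set[str]:
    """Individuals whose asserted type is cls or any subclass of it."""
    return {s for s, p, o in triples if p == "type" and cls in superclasses(o, triples)}


print(sorted(instances_of("Device", TRIPLES)))  # ['ts-01', 'vs-07']
```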

_

The Importance of Digital Twins in Industry 4.0:

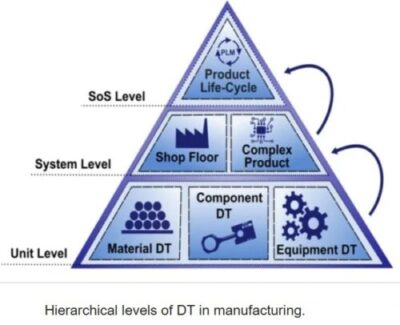

The fourth industrial revolution, or Industry 4.0, which embraces automation, data exchange, and manufacturing technologies, is a major talking point in the business world. Digital Twins are at the core of this new industrial revolution, opening up a wide range of possibilities. The traditional approach of building something and then tweaking it in new versions and releases is becoming obsolete. With a virtually based design system, the best possible efficiency level of a product, process, or system can be identified and created by understanding its specific features, its performance abilities, and the potential issues that may arise.

Digital twins make the complex process of product development much simpler. From the design phase to deployment, organizations can create a digital footprint of their creation. Each aspect of these digital creations is interconnected and able to generate data in real time. This helps businesses better analyze and predict possible challenges in implementation, right from the initial design stage. Problems can be corrected in advance, or the twin can give early warnings to prevent downtime.

This process also opens up possibilities to create new and improved products more cost-effectively, as a result of simulating real-world applications. The end result will be a better customer experience. Digital twin processes incorporate big data, artificial intelligence, machine learning, and the Internet of Things, and represent the future of the engineering and manufacturing spaces. In the Industry 4.0 era, Digital Twins (DTs), virtual copies of a system that can interact with their physical counterparts bi-directionally, appear to be promising enablers for replicating production systems in real time and analysing them. A DT should be capable of guaranteeing well-defined services that support activities such as monitoring, maintenance, management, optimization, and safety.

______

______

Section-3

Origin and history of Digital Twin:

_

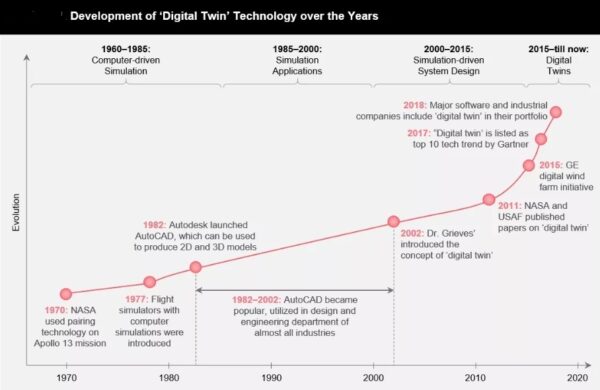

Digital twins were anticipated by David Gelernter’s 1991 book Mirror Worlds. The concept and model of the digital twin was first publicly introduced in 2002 by Michael Grieves, at a Society of Manufacturing Engineers conference in Troy, Michigan. Grieves proposed the digital twin as the conceptual model underlying product lifecycle management (PLM).

The figure above shows the early digital twin concept by Grieves and Vickers.

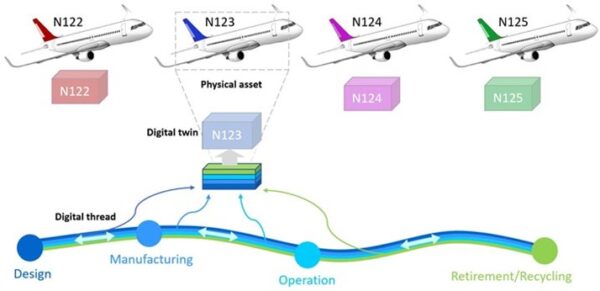

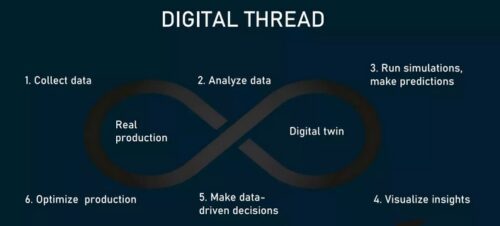

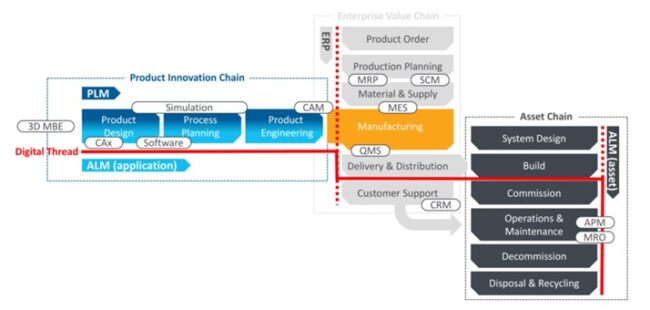

The digital twin concept, which has been known by different names (e.g., virtual twin), was subsequently called the “digital twin” by John Vickers of NASA in a 2010 Roadmap Report. The digital twin concept consists of three distinct parts: the physical object or process and its physical environment, the digital representation of the object or process, and the communication channel between the physical and virtual representations. The connections between the physical and digital versions include information flows and data, such as physical sensor flows, between the physical and virtual objects and environments. This communication connection is referred to as the digital thread.
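The three-part structure above (physical object, digital representation, and the communication channel, or digital thread, between them) can be sketched as three small Python classes. This is a hypothetical illustration rather than any platform's API: the motor, its speed limit, and the queue-based channel are assumptions chosen to show data flowing from physical to digital and insight flowing back from digital to physical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Optional


@dataclass
class PhysicalAsset:
    """Part 1: the physical object (here reduced to a motor's speed)."""
    speed_rpm: float

    def read_sensors(self) -> Dict[str, float]:
        return {"speed_rpm": self.speed_rpm}

    def apply(self, command: Dict[str, float]) -> None:
        self.speed_rpm = command["set_speed_rpm"]


@dataclass
class DigitalRepresentation:
    """Part 2: the virtual model, kept in sync with the asset."""
    max_speed_rpm: float = 3000.0  # hypothetical operating limit
    state: Dict[str, float] = field(default_factory=dict)

    def ingest(self, data: Dict[str, float]) -> None:
        self.state.update(data)

    def advise(self) -> Optional[Dict[str, float]]:
        # Insight sent back to the physical side: cap the speed at the limit.
        if self.state.get("speed_rpm", 0.0) > self.max_speed_rpm:
            return {"set_speed_rpm": self.max_speed_rpm}
        return None


@dataclass
class DigitalThread:
    """Part 3: the two-way communication channel between the twins."""
    to_digital: Deque[Dict[str, float]] = field(default_factory=deque)
    to_physical: Deque[Dict[str, float]] = field(default_factory=deque)

    def sync(self, asset: PhysicalAsset, model: DigitalRepresentation) -> None:
        self.to_digital.append(asset.read_sensors())  # physical -> digital
        while self.to_digital:
            model.ingest(self.to_digital.popleft())
        command = model.advise()
        if command is not None:
            self.to_physical.append(command)          # digital -> physical
        while self.to_physical:
            asset.apply(self.to_physical.popleft())


motor = PhysicalAsset(speed_rpm=3500.0)
model = DigitalRepresentation()
thread = DigitalThread()
thread.sync(motor, model)
print(model.state["speed_rpm"], motor.speed_rpm)  # 3500.0 3000.0
```

After one sync the model has seen the over-limit reading and the motor has been brought back to the limit; the next sync would mirror the corrected speed into the model.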

_

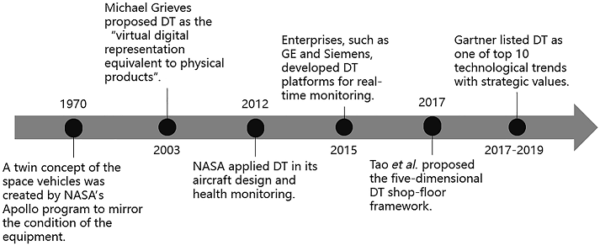

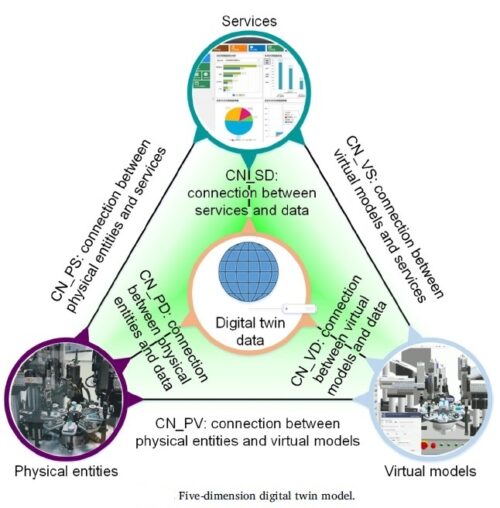

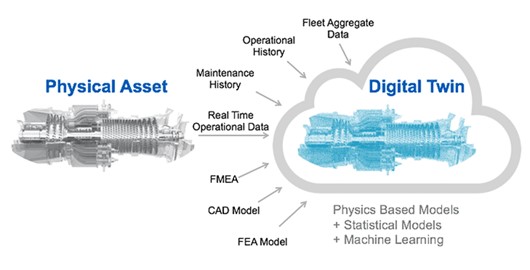

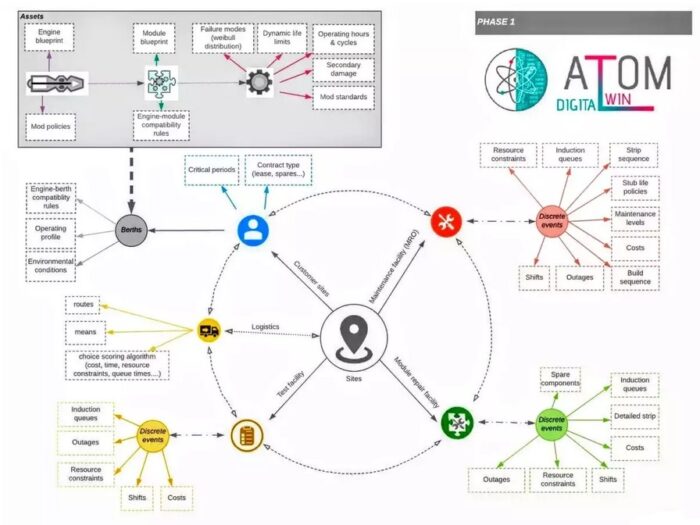

The concept of the “twin” dates to the National Aeronautics and Space Administration (NASA) Apollo program in the 1970s, when a replica of the space vehicles was built on Earth to mirror the condition of the equipment during the mission (Rosen et al., 2015; Miskinis, 2019). This replica was the first application of the “twin” concept. In 2003, DT was proposed by Michael Grieves in his product lifecycle management (PLM) course as a “virtual digital representation equivalent to physical products” (Grieves, 2014). In 2012, NASA applied DT to integrate ultra-high-fidelity simulation with a vehicle’s on-board integrated vehicle health management system, maintenance history, and all available historical and fleet data, to mirror the life of its flying twin and enable unprecedented levels of safety and reliability (Glaessgen and Stargel, 2012; Tuegel et al., 2011a). The advent of IoT boosted the development of DT technology in the manufacturing industry. Enterprises such as Siemens and GE developed DT platforms for real-time monitoring, inspection, and maintenance (Eliane Fourgeau, 2016). Recently, Tao and Zhang (2017) proposed a five-dimensional DTS framework, which provides theoretical guidance for the digitalization and intellectualization of the manufacturing industry. From 2017 to 2019, Gartner selected DT each year as one of the top 10 strategic technology trends (Panetta, 2016, 2017, 2018). The history of the DT is briefly summarized in the figure below.

_

Digital manufacturing has brought considerable value to the entire industry over the last decades. By virtually representing factories, resources, workforces and their skills, etc., digital manufacturing builds models and simulates product and process development. Progress in information and communication technologies (ICTs) has greatly promoted the development of manufacturing. Computer-aided technologies, including CAD, CAE, CAM, FEA, and PDM, are developing quickly and playing an increasingly critical role in industry. Big data, the Internet of things (IoT), artificial intelligence, cloud computing, edge computing, the fifth-generation cellular network (5G), wireless sensor networks, and similar technologies are developing rapidly and show great potential across industry. All these technologies provide opportunities for integrating the physical and digital worlds, which is an inevitable trend for addressing the growing complexity and high demands of the market. However, the strategic advantage of this integration has not yet been fully exploited. The integration still has a long way to go, and the newest developments focus on the digital twin.

_

NASA’s Apollo space program was the first program to use the ‘twin’ concept. The program built two identical space vehicles so that the vehicle on Earth could mirror, simulate, and predict the conditions of the other one in space. The vehicle that remained on Earth was the twin of the vehicle that executed the mission in space. The Apollo 13 mission had to be aborted when an oxygen tank failed and exploded about two days into the flight. The ground-based twin contributed to the success of the rescue mission, as engineers were able to assess and test out all possible mission outcomes, troubleshooting steps, and solutions. The first use of the “digital twin” terminology appeared in Hernández and Hernández’s work, where a digital twin was used for iterative modifications in the design of urban road networks. However, it is widely acknowledged that the terminology was first introduced as a “digital equivalent to a physical product” by Michael Grieves at the University of Michigan in 2003. The concept of the “product avatar”, which is similar to the digital twin, was introduced by Hribernik et al. in 2006. The product avatar concept aimed to build an information management architecture that supports bidirectional information flow from a product-centric perspective. Research on product avatars can be found up to 2015; since then, the concept appears to have been replaced by the digital twin.

_

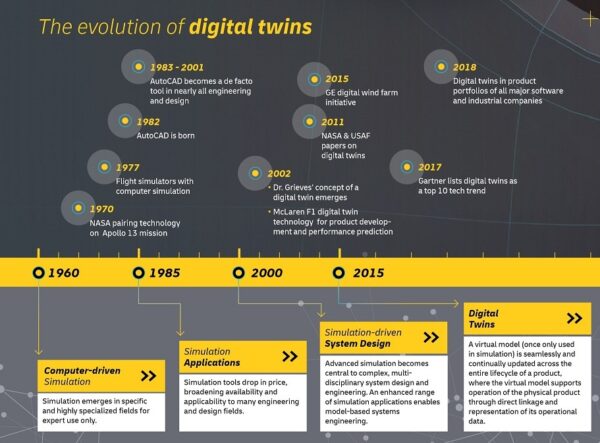

For many years, scientists and engineers have created mathematical models of real-world objects, and over time these models have become increasingly sophisticated. Today, advances in sensors and network technologies enable us to link previously offline physical assets to digital models. In this way, changes experienced by the physical object are reflected in the digital model, and insights derived from the model allow decisions to be made about the physical object, which can also be controlled with unprecedented precision. At first, the complexity and cost involved in building digital twins limited their use to the aerospace and defense sectors (see the timeline in the figure below), as the physical objects were high-value, mission-critical assets operating in challenging environments that could benefit from simulation. Relatively few other applications combined high-value assets and inaccessible operating conditions in a way that justified the investment. That situation is changing rapidly. Today, as part of their normal business processes, companies already generate much of the data required to build a digital twin of their own products; computer-aided design (CAD) and simulation tools, for example, are commonly used in product development. Many products, including consumer electronics, automobiles, and even household appliances, now include sensors and data communication capabilities as standard features.

While the digital twin concept has existed since the start of the 21st century, the approach is now reaching a tipping point where widespread adoption is likely in the near future. That’s because a number of key enabling technologies have reached the maturity needed to support digital twins in enterprise applications. Those technologies include low-cost data storage and computing power, robust high-speed wired and wireless networks, and cheap, reliable sensors. As corporate interest in digital twins grows, so too does the number of technology providers supplying this demand. Industry researchers expect the digital twin market to grow at an annual rate of more than 38 percent over the next few years, passing USD 26 billion by 2025.
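To make a growth figure like this concrete, the sketch below shows the compound-growth arithmetic behind a constant annual rate. The five-year horizon ending in 2025 and the implied starting size it produces are assumptions for illustration only, not figures from the market research quoted above.

```python
def compound(value: float, rate: float, years: int) -> float:
    """Grow `value` at a constant annual `rate` (0.38 means 38%) for `years` years."""
    return value * (1.0 + rate) ** years


def implied_start(target: float, rate: float, years: int) -> float:
    """Back out the starting value that reaches `target` after `years` of constant growth."""
    return target / (1.0 + rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# Assumption for illustration only: the 38% rate holds for the five years up to 2025.
multiplier = compound(1.0, 0.38, 5)
start_bn = implied_start(26.0, 0.38, 5)
print(f"growth multiple over 5 years: {multiplier:.2f}x")  # 5.00x
print(f"implied starting size: USD {start_bn:.2f} billion")  # USD 5.19 billion
```

In other words, a market growing at 38 percent a year roughly quintuples in five years, which is why such forecasts are very sensitive to the assumed rate.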

_____

_____

Section-4

Digital Twin versus other closely related technologies:

_

3D model versus digital twin:

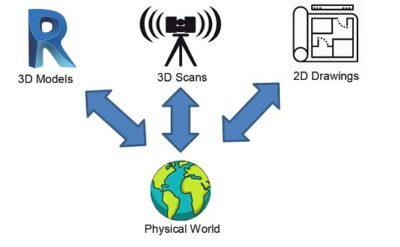

Digital twins started as basic CAD documentation of the physical world in the late 20th century, but with the growth of BIM workflows the digital twin has become a representation much closer to reality. The ability to assign parametric data to objects allowed representations to move beyond a purely physical description to a functional one as well. More recently, the growth of IoT technologies has made it possible to live-stream data from objects and systems in the physical world to a remote location, analyze the data, and react by modifying the conditions of the physical object in its actual location. This moves the CAD object from being just a 2D/3D representation arbitrarily positioned in space to being a representation of the physical object that captures not only its form but also its behavior. Digital twins can be made from 3D models, 3D scans, and even 2D documentation, as seen in the figure below. What qualifies something as a digital twin is the link between the physical and virtual worlds, with data transmitted bidirectionally between the two.

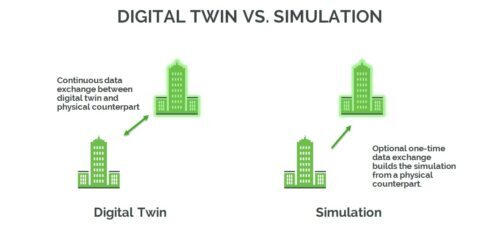

CAD (3D models) is used in both simulation technology and digital twin technology, and both simulations and digital twins use digital models to replicate a system’s various processes. The difference lies in the data link: a simulation is built from the 3D model with, at most, an optional one-time data exchange with its physical counterpart, whereas a digital twin is built from the 3D model with continuous, bidirectional data exchange with its physical counterpart.

_

Nowadays many players in the market can create accurate 3D models of real-world structures, a process commonly called “reality capture”. Laser scanners or photogrammetry are used to create detailed three-dimensional geometric representations of the captured objects, and the result is often referred to as a “digital twin”. But such a three-dimensional model is by no means a digital twin. It certainly represents what is physically present and all the associated visible information. However, any building or technical installation also carries a large amount of information and data that is not visible: for example, asset information stored in an ERP system, process data used by the computer-aided facility management (CAFM) system, and consumption and meter data from the building management system. The energy management system or other control systems, as well as the multitude of sensors in modern “smart” buildings or factories, yield further information. Only when the 3D geometry is combined with all this additional information in a seamlessly integrated, easy-to-use and easy-to-understand photorealistic model do we have a real digital twin.
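The combination step described above can be pictured as a join: each captured geometry segment is linked to the records that other systems hold about the same asset. The sketch below assumes, purely for illustration, that every system already uses the same asset ID; `TwinElement`, `build_twin` and the example data are hypothetical names, not part of any product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TwinElement:
    """One asset in the twin: captured geometry plus the data you cannot see."""
    asset_id: str
    geometry_ref: str  # pointer to a mesh or point-cloud segment from reality capture
    attributes: dict = field(default_factory=dict)


def build_twin(geometry: dict[str, str], *sources: dict[str, dict]) -> dict[str, TwinElement]:
    """Attach records from each non-visual source (ERP, CAFM, BMS...) to the matching geometry."""
    twin = {aid: TwinElement(aid, ref) for aid, ref in geometry.items()}
    for source in sources:
        for aid, record in source.items():
            if aid in twin:  # records without captured geometry are skipped here
                twin[aid].attributes.update(record)
    return twin


# Hypothetical example data keyed by a shared asset ID.
geometry = {"PUMP-101": "scan/segment-17", "AHU-02": "scan/segment-42"}
erp = {"PUMP-101": {"installed": "2019-04-02", "supplier": "Vendor A"}}
bms = {"PUMP-101": {"power_kw": 7.5}, "AHU-02": {"supply_air_c": 16.0}}

twin = build_twin(geometry, erp, bms)
print(twin["PUMP-101"].attributes)
```

In real projects the hard part is usually mapping differing identifiers between systems, which this sketch deliberately assumes away.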

_

3D models and digital twins are easily confused because they look similar at first glance. In both cases, what you see on the screen is a detailed visualisation of your physical asset in three dimensions. The difference, and it’s a big one, is the data that appears on the 3D model. A barebones 3D model without data has some use as a point of reference on a sprawling gas plant or oil rig. It basically does the same job as a traditional paper plan by providing orientation on the asset. With more advanced 3D visualisations, you might get some additional static data attached to the model. Static here means that the data cannot be updated easily. An example would be a PDF document containing an equipment spec sheet. In theory, static data ought to help people make better decisions and complete tasks more efficiently. For example, you might be able to locate a piece of equipment on the 3D model and see its ID number on the attached PDF. Armed with this identifier, you can then chase up the back office to find out when it was installed, who installed it, and when it needs to be replaced. This is clearly more useful than a dog-eared paper map.

But is it going to transform efficiency and safety on the asset?

The answer is no.

3D models populated by static data simply aren’t that useful, particularly in operations. Quite often the data is out of date because busy staff and contractors have other priorities and don’t really see the point in spending time and energy on curating the model. In their eyes it’s just another digital initiative that adds to their workload. Because the data isn’t always updated, it isn’t 100% trusted. Because it isn’t trusted, people don’t bother to consult the 3D model. The net result is that people in operations quietly revert to their old ‘tried-and-tested’ processes.

Dynamic data:

The (huge) difference between the 3D model and a digital twin can be expressed in two words: dynamic data.

Unlike static data, dynamic data changes and updates in real-time on the 3D model as soon as new information becomes available. One example would be a sensor on a pipe that shows real-time temperature or pressure measurements on the 3D model, and is able to trigger an alert. From the point of view of someone on site with a maintenance task, this is extremely useful information that makes their job safer and more efficient. If that alert can be actioned promptly before a small problem becomes a big one, big savings can be achieved. This is the sort of thing digital workflows are for. They carry real time data insights from the central data repository to where they’re needed in operations, and carry back updates once a task has been completed. In this way the 3D model is constantly being updated with the best and most reliable information that everyone else on the asset can see.
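The difference between static and dynamic data can be illustrated with a minimal sketch, assuming a single pipe segment with a pressure limit: the twin’s state changes the moment a reading is ingested, and an alert is raised when the limit is exceeded. The class name, tag and threshold are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PipeSegmentTwin:
    """Twin of one pipe segment whose state changes as soon as a reading arrives."""
    tag: str
    max_pressure_bar: float  # hypothetical alarm threshold
    latest: dict = field(default_factory=dict)  # quantity -> (value, timestamp)
    alerts: list = field(default_factory=list)

    def ingest(self, quantity: str, value: float, ts: datetime) -> None:
        """Update the twin with a new sensor value and raise an alert if a limit is exceeded."""
        self.latest[quantity] = (value, ts)
        if quantity == "pressure_bar" and value > self.max_pressure_bar:
            self.alerts.append(
                f"{ts.isoformat()} {self.tag}: pressure {value} bar > {self.max_pressure_bar} bar"
            )


twin = PipeSegmentTwin(tag="PIPE-7", max_pressure_bar=15.0)
now = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
twin.ingest("temperature_c", 64.2, now)
twin.ingest("pressure_bar", 12.4, now)
twin.ingest("pressure_bar", 16.1, now)  # this reading triggers an alert
print(twin.latest["pressure_bar"][0], twin.alerts)
```

A static PDF attached to the same pipe would still show whatever was typed into it at handover; here the latest value and the alert list are always current.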

With dynamic data running through its workflows, the digital twin suddenly comes to life. The lights come on and people start using it, because it is making everybody’s lives easier, safer and more productive. With reliable data at their fingertips in one place, people can ‘connect the dots’ to solve problems that previously eluded them.

It doesn’t stop there. As confidence in the digital twin grows, the culture of the organisation becomes ripe for improvement and change. Enlightened operators grab the opportunity to re-organise staff into multi-disciplinary teams and experiment with new approaches.

The truth is that 3D models incorrectly labelled as digital twins have often disappointed operators, which has unfairly damaged the reputation of this transformative technology. But has the money spent on 3D models been entirely wasted? Fortunately not. The good news is that your existing 3D model can be upgraded to channel dynamic data by following a few logical steps.

First, you need to establish a central data repository that cleans and harmonises the data so that it can be accessed. Second, you need to install digital workflows. These act as the ‘wiring’ that carry data insights to where they’re needed in operations. Third, you need to give your workforce the chance to understand how useful a digital twin can be in their daily lives. This usually happens by allowing them to experiment with the technology in a safe space.
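The first step, a central repository that cleans and harmonises data, can be sketched for a single kind of measurement. The assumptions here are illustrative: pressure readings arrive from several systems with inconsistent tag spellings and units, records with unknown units are dropped, and duplicates are collapsed onto one key. The tags, units table and function names are hypothetical.

```python
from __future__ import annotations


def normalise_tag(raw: str) -> str:
    """Bring tag spellings such as ' pt_101 ' and 'PT 101' to one canonical form."""
    return raw.strip().upper().replace("_", "-").replace(" ", "-")


# Conversion factors to bar; units not listed here are treated as unusable.
TO_BAR = {"bar": 1.0, "kPa": 0.01, "psi": 0.0689476}


def harmonise(records: list[dict]) -> dict[tuple[str, str], float]:
    """Clean raw pressure records into a central store keyed by (tag, timestamp)."""
    repo: dict[tuple[str, str], float] = {}
    for rec in records:
        factor = TO_BAR.get(rec.get("unit"))
        if factor is None or rec.get("value") is None:
            continue  # drop records that cannot be interpreted
        key = (normalise_tag(rec["tag"]), rec["ts"])
        repo[key] = round(rec["value"] * factor, 4)  # duplicates collapse onto one key
    return repo


raw = [
    {"tag": " pt_101 ", "value": 1200, "unit": "kPa", "ts": "2024-01-01T08:00"},
    {"tag": "PT 101", "value": 12.0, "unit": "bar", "ts": "2024-01-01T08:00"},  # same reading, other system
    {"tag": "PT-102", "value": 175.0, "unit": "psi", "ts": "2024-01-01T08:00"},
    {"tag": "PT-103", "value": 3.1, "unit": "unknown", "ts": "2024-01-01T08:00"},  # dropped
]
repo = harmonise(raw)
print(repo)
```

The digital workflows of the second step would then read from a store like `repo` rather than from each source system separately.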

_

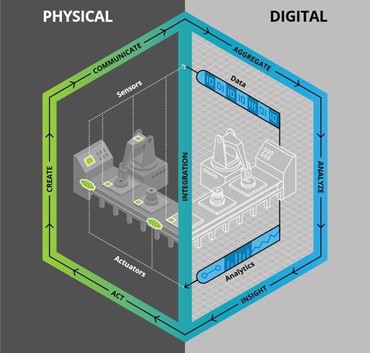

It is clear from the above discussion that a DT is different from computer models (CAD/CAE) and simulation. Even though many organizations use the term ‘Digital Twin’ synonymously with 3D model, a 3D model is only a part of a DT. A DT uses data to reflect the real world at any given point in time and can thus be used for observing and understanding the performance of the system and for its predictive maintenance. Computer models, like DTs, are also used for the generic understanding of a system or for making generalized predictions, but they are rarely used to represent the status of a system accurately in real time. A lack of real-time data makes these models or simulations static, which means that they do not change or make new predictions unless new information is fed to them. However, having real-time data is not enough for a DT to operate: the data also need to be loaded into the DT automatically, and the flow between physical and digital should be bidirectional, as seen in the figure below:

The digital twin configuration above represents a journey from the physical world to the digital world and back to the physical world. This physical-digital-physical journey communicates, analyses, and uses information to drive further intelligent action back in the physical world.
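The physical-digital-physical journey can be sketched as a loop. In this minimal example the “physical” asset is itself simulated (a hypothetical room with an on/off heater and crude first-order dynamics), so the sketch only shows the shape of the loop: readings are loaded into the twin automatically, and the twin’s decision is sent back as an actuator command.

```python
from __future__ import annotations


class PhysicalHeater:
    """Hypothetical stand-in for the real asset: a room warmed by an on/off heater."""

    def __init__(self, temp_c: float = 15.0, ambient_c: float = 10.0):
        self.temp_c = temp_c
        self.ambient_c = ambient_c
        self.heater_on = False

    def step(self) -> float:
        # Crude first-order dynamics: +1 degC per step when heating, loss towards ambient.
        gain = 1.0 if self.heater_on else 0.0
        self.temp_c += gain - 0.05 * (self.temp_c - self.ambient_c)
        return self.temp_c  # sensor reading sent to the twin

    def apply(self, heater_on: bool) -> None:
        self.heater_on = heater_on  # actuator command received from the twin


class HeaterTwin:
    """Digital side: mirrors the readings and decides what the asset should do next."""

    def __init__(self, setpoint_c: float):
        self.setpoint_c = setpoint_c
        self.history: list[float] = []

    def update(self, reading: float) -> None:
        self.history.append(reading)  # physical -> digital, loaded automatically

    def decide(self) -> bool:
        return self.history[-1] < self.setpoint_c  # digital -> physical


def run(steps: int = 60) -> list[float]:
    asset, twin = PhysicalHeater(), HeaterTwin(setpoint_c=20.0)
    for _ in range(steps):
        twin.update(asset.step())
        asset.apply(twin.decide())
    return twin.history


history = run()
print(f"last reading: {history[-1]:.2f} degC")
```

The on/off rule is the simplest possible “analysis”; in a real twin the `decide` step is where models, simulations or analytics would sit.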

_

A digital twin fuses design CAD, simulation and sensor data to create actionable data (data that can be used to predict outcomes under given scenarios) and to help businesses make productive decisions. It works by taking the 3D geometry of a part or system and connecting it with sensor data from operation, so that we can see a 3D digital replica of the behavior of the part or system in service. With this it is possible to run 2D and 3D simulations to predict behavior. 2D uses machine learning on historical data to make predictions, which are used for better process control and predictive maintenance. 3D uses physics to run innovative virtual scenarios to find new ways of improving operational efficiency and reducing cost. Combining 2D and 3D gives users complete remote control of their physical asset. Digital twins have traditionally been very expensive to operate and were only used in the defense and aerospace industries, where there is fierce competition, there are few players, and these few players have relatively large resources.

_

Traditionally, engineering design teams use computer-aided tools to design assets. A bicycle manufacturer, for example, might use Computer Aided Design (CAD), Computer Aided Engineering (CAE) and Computer Aided Manufacturing (CAM) across the entire product life cycle. These representations of assets are not new. Early applications of CAE date back to the late 1960s, most notably by Dr. Swanson, who developed the first version of ANSYS, a CAE tool, in 1970. The design of complex machines such as gas turbines and jet engines, internal combustion engines, locomotives and automobiles relies on CAx technologies to limit costly experimentation and testing, so that designers can be confident in how a part will behave in the field even before building a single one. Such computer-aided representations, together with insights gleaned from controlled experiments, allow us to evaluate how the asset will perform in the field and to optimize how it is manufactured, thereby serving as an important digital twin representation of the asset. These form the ‘as-designed’ and ‘as-built’ digital representations of the asset.

3D CAD (3D Computer Aided Design), 3D CAE (Computer Aided Engineering) and 3D CAM (Computer Aided Manufacturing) technologies are used to specify precise 3D models of mechanical, electrical, and electro-mechanical products for manufacturing. 3D Printing (a.k.a. Additive Manufacturing) technologies can take 3D CAD specifications and automatically construct (“print”) them directly, without human intervention or a separate, traditional, labor-intensive manufacturing process (a.k.a. Subtractive Manufacturing). 3D CAD and 3D Printing technologies provide the electro-mechanical infrastructure for the physical construction of Physical Twins and, by extension, their Digital Twin counterparts. Since the “[3D CAD] Design = Implementation [3D Printed Product]” principle is rapidly transforming how we design and manufacture Systems-of-Systems, these complementary technologies will play a vital role in the evolution of Digital Twin technology.

_

3D Maps vs. 3D Models:

Many believe that a Digital Twin is built on two parts: 3D models and process models integrated with plant data. But some believe a successful Digital Twin should consist of 3D maps and digital P&IDs, integrated with the plant’s data silos. Why? Fundamentally, 3D models are a flawed approach to creating a successful Digital Twin of a plant. Admittedly, the vision often pitched with model-based digital twins seems incredible: a 3D model provides a fully interactive, simulated virtual plant. We’ve seen countless flashy demos and proofs of concept built around augmented 3D process models. These demos often show 3D models of the plant in which operators and engineers wear augmented reality glasses, tweak process conditions, change pressures and temperatures, or see the impact of opening a valve or resizing a pump, all within the 3D model. The aim is to give engineers and operators an interactive 3D plant in which to experiment and find ways to drive more efficiency out of operations: basic process optimization. But does all of that flashy 3D simulation actually produce results? If you ask most process engineers to debottleneck a process, they’ll pull up the two most common process models in industry: the Process Flow Diagram (PFD) and the Piping and Instrumentation Diagram (P&ID). These two diagrams are ubiquitous, foundational elements of the process engineer’s toolkit, and they give the easiest-to-understand view of how a complicated process unit operates. All engineers and operators understand the PFD and the P&ID. Only the PFD can summarize all of the plant’s complicated flows on a single sheet of paper, and only the P&ID can show all of the tens of thousands of connections, pieces of equipment, and metadata in an easy-to-read format that an engineer can use to debottleneck the plant.

In a 3D model, the user’s field of view is just too narrow: you can’t get an overall picture of how the unit operates. A successful Digital Twin solution is one that is integrated with digital P&IDs and PFDs and is fundamentally based on 3D mapping technology (not 3D modelling). For most users, 3D maps are easier to understand, navigate, and use than 3D models, and they can be updated for a fraction of the cost of a 3D model. 3D maps are the easiest-to-use, visually rich representation of the real 3D world of the plant.

_

A digital twin is different from the traditional Computer Aided Design/Computer Aided Engineering (CAD/CAE) model in the following important ways:

(a) It is a specific instance that reflects the structure, performance, health status, and mission-specific characteristics such as miles flown, malfunctions experienced, and maintenance and repair history of the physical twin;

(b) It helps determine when to schedule preventive maintenance based on knowledge of the system’s maintenance history and observed system behavior;

(c) It helps in understanding how the physical twin is performing in the real world, and how it can be expected to perform with timely maintenance in the future;

(d) It allows developers to observe system performance to understand, for example, how modifications are performing, and to get a better understanding of the operational environment;

(e) It promotes traceability between life cycle phases through connectivity provided by the digital thread;

(f) It facilitates refinement of assumptions with predictive analytics: data collected from the physical system and incorporated in the digital twin can be analyzed along with other information sources to make predictions about future system performance;

(g) It enables maintainers to troubleshoot malfunctioning remote equipment and perform remote maintenance;

(h) It combines data from the IoT with data from the physical system to, for example, optimize service and manufacturing processes and identify needed design improvements (e.g., improved logistics support, improved mission performance);

(i) It reflects the age of the physical system by incorporating operational and maintenance data from the physical system into its models and simulations.
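Points (a), (b) and (i) can be illustrated together: a twin is tied to one specific airframe and accumulates its hours, malfunctions and repairs, and that history drives when the next preventive maintenance falls due. The maintenance rule below (each malfunction since the last service brings the next one forward by 10 percent, never below half the nominal interval) is a hypothetical placeholder, not an airworthiness rule.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AircraftTwin:
    """Instance-specific twin: one tail number, its own hours, faults and repairs."""
    tail_number: str
    service_interval_h: float = 500.0  # hypothetical nominal interval
    flight_hours: float = 0.0
    hours_at_last_service: float = 0.0
    malfunctions: list[tuple[float, str]] = field(default_factory=list)
    maintenance_log: list[tuple[float, str]] = field(default_factory=list)

    def record_flight(self, hours: float, malfunction: str | None = None) -> None:
        self.flight_hours += hours
        if malfunction:
            self.malfunctions.append((self.flight_hours, malfunction))

    def record_service(self, note: str) -> None:
        self.maintenance_log.append((self.flight_hours, note))
        self.hours_at_last_service = self.flight_hours

    def hours_until_service(self) -> float:
        """Hypothetical rule: each fault since the last service brings the next one 10% earlier."""
        recent = [m for m in self.malfunctions if m[0] > self.hours_at_last_service]
        interval = self.service_interval_h * max(0.5, 1.0 - 0.1 * len(recent))
        return interval - (self.flight_hours - self.hours_at_last_service)


twin = AircraftTwin("N-HYPO1")  # hypothetical tail number
twin.record_flight(200.0)
twin.record_flight(100.0, malfunction="bleed valve fault")
print(twin.hours_until_service())
twin.record_service("valve replaced")
print(twin.hours_until_service())
```

Two airframes of the same type built from the same CAD model would end up with different twins, which is exactly the distinction from a generic CAD/CAE model drawn in (a).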

_____

_____

Digital twin versus simulation:

_

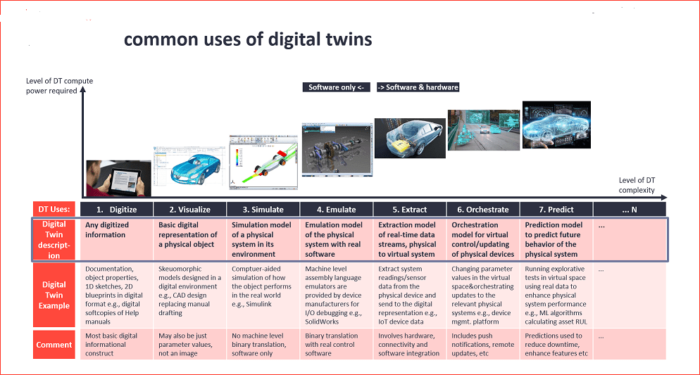

A simulation is the imitation of the operation of a real-world process or system over time. Simulations require the use of models: the model represents the key characteristics or behaviors of the selected system or process, whereas the simulation represents the evolution of the model over time. Computers are used to execute the simulation. A simulation mimics the operation of an existing or proposed system, providing evidence for decision-making by testing different scenarios or process changes. The terms simulation and digital twin are often used interchangeably, but they are different things. A simulation is designed with a CAD system or similar platform and can be put through its simulated paces, but it may not have a one-to-one analog with a real physical object. A digital twin, by contrast, is built from the input of IoT sensors on real equipment, which means it replicates a real-world system and changes with that system over time. Simulations tend to be used during the design phase of a product’s lifecycle to forecast how a future product will work, whereas a digital twin gives every part of the business insight into how a product or system it is already using is working now.

_

Although simulation technology and digital twins share the ability to execute virtual simulations, they are not the same. While the traditional simulation capabilities found in computer-aided design and engineering (CAD-CAE) applications are powerful product design tools, a digital twin can do much more. In both cases the simulation runs on a virtual model, but the model becomes a digital twin once the product is produced. When a digital twin is powered by an Industrial Internet of Things (IIoT) platform, it can receive and process real-world data quickly, enabling the designer to virtually “see” how the real product is operating. Indeed, when powered by an IIoT platform, the model becomes an integrated, closed-loop digital twin that, once fully deployed and connected via the digital thread, is a business simulation tool that can drive strategy at every stage of the business.

_

Because of the similarities between simulation technology and digital twins, too many business and technology leaders fail to understand the profound differences between the two. Although they sound alike, the table below summarises the finer differences:

| Features/Attributes | Digital Twin | Simulation |
|---|---|---|
| Scale | From smaller business systems to massive manufacturing plants. | Usually, businesses use simulation for specific needs on a small scale, for example flying airplanes or simulating a cyberattack. |
| Live Data | Real-time data collected from the environment where the digitized object performs is at the core of digital twin technology. | A simulation does not require real-time data inputs. |
| Scope of Study | You can study a whole business or a minute task. | The simulation focuses on one task at a time. |
| Insight Sharing | A digital twin automatically shares output insights with the physical object or process. | A simulation does not share insights automatically; you need to implement the results manually. |

_

CAD simulations are theoretical, static and limited by the imagination of their designers, while digital twin simulations are active and use actual dynamic data. Simulation is an important aspect of the digital twin: digital twin simulation enables the virtual model to interact with the physical entity bidirectionally in real time. This interaction means information can flow in either direction to create a cyber-physical system, where a change in one affects the other. Multi-physics, multi-scale simulation is one of the most important visions of the digital twin; you can’t have a digital twin without simulation. The advantages of a digital twin over a more basic, non-integrated CAD-based simulation are evident when monitoring valuable products such as wind turbines. However, digital twins can be costly, requiring sensors to be fitted and integrated with analytical software and a user interface. For this reason, digital twins are usually employed only for more critical assets or procedures, where the cost is justifiable to a business.
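The contrast between a theoretical simulation and one fed by actual data can be shown with a small example, assuming an object cooling according to Newton’s law of cooling. The “simulation” keeps the cooling coefficient assumed at design time; the “twin” re-estimates it from measurements by least squares and then predicts. All numbers are hypothetical, and the measurements are generated from a known coefficient only so the example is self-checking.

```python
from __future__ import annotations

import math

AMBIENT_C = 20.0


def cooling_model(t: float, t0: float, k: float) -> float:
    """Newton's law of cooling: T(t) = Ta + (T0 - Ta) * exp(-k t)."""
    return AMBIENT_C + (t0 - AMBIENT_C) * math.exp(-k * t)


def calibrate_k(times: list[float], temps: list[float], t0: float) -> float:
    """Fit k from measurements: ln((T - Ta) / (T0 - Ta)) = -k t, least squares through the origin."""
    num = sum(t * math.log((T - AMBIENT_C) / (t0 - AMBIENT_C)) for t, T in zip(times, temps))
    den = sum(t * t for t in times)
    return -num / den


T0 = 90.0
K_DESIGN = 0.10  # simulation: coefficient assumed at design time (hypothetical)
times = [1.0, 2.0, 3.0, 4.0, 5.0]
measured = [cooling_model(t, T0, 0.14) for t in times]  # stand-in for live sensor data

k_twin = calibrate_k(times, measured, T0)
actual_10 = cooling_model(10.0, T0, 0.14)  # what the sensor will read at t = 10
sim_error = abs(cooling_model(10.0, T0, K_DESIGN) - actual_10)
twin_error = abs(cooling_model(10.0, T0, k_twin) - actual_10)
print(f"k_twin={k_twin:.3f}  simulation error={sim_error:.2f}  twin error={twin_error:.2e}")
```

Real measurements are noisy, so a practical twin would recalibrate continuously and report uncertainty rather than a single exact coefficient.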

_

Case Studies:

To better understand the difference between simulation and digital twin it is useful to look at some real life case studies.

For example, while an advanced simulation can analyse thousands of variables, a digital twin can be used to assess an entire lifecycle. This was demonstrated by Boeing, who integrated digital twins into design and production, allowing them to assess how materials would perform throughout an aircraft’s lifecycle. As a result, they were able to improve the quality of some parts by 40%.

Tesla also uses digital twins in its vehicles to capture data that can be used to optimise designs, advance efforts to create autonomous vehicles, provide predictive analytics and deliver information for maintenance purposes. This actual, rather than theoretical, two-way flow of data could lead to a future in which a vehicle delivers data directly to a garage ahead of a service, detailing performance statistics, parts that have been replaced, service records, and potential problems picked up by the sensors. This would save time and cost, as a mechanic could home in on any problems based on the data rather than having to fully inspect each vehicle.

_____

_____

Digital Twins versus BIM:

The architecture, engineering and construction (AEC) sector is notorious for resource planning, risk management and logistics issues, which frequently lead to design flaws, project delays, cost overruns and contractual conflicts. Over the last several decades, building information modelling (BIM) has been used to increase the efficiency of the construction process, eliminate waste during construction and enhance the quality of AEC projects. BIM enables the early identification and correction of possible issues before they reach the construction site. BIM provides a way of accessing detailed information about buildings and infrastructure assets, enabling the creation of 3D models that include data associated with the functional and physical characteristics of an asset. BIM is beneficial but insufficient, and the AEC sector needs something more substantial (Bakhshi et al., 2022; Rahimian et al., 2021). The existing constraints have prompted research into the use of powerful machine learning (ML) techniques, such as deep learning (DL), to diagnose and predict causes and preventive measures. Digital twins have quickly established themselves as the go-to method for developing reliable data models of all components of a building or city at various phases of its lifecycle. The AEC industry has likely struggled to distinguish between these two critical technologies. The misconception stems primarily from BIM software’s emphasis on digitally represented physical space and the digital twin’s early categorization as a digital replica of a physical object or location. The main distinction lies in how each technology is implemented: building maintenance and operations are best served by digital twins, whereas construction and design are better addressed by BIM.

_

BIM stands for Building Information Modelling and as the name implies, the core of BIM is INFORMATION. BIM is an intelligent model-based process/methodology for creating 3D building design models that incorporate all of the information required for a building’s construction. It allows for easier coordination and collaboration among the project’s many teams. AEC experts can design, construct, and operate buildings and infrastructures more effectively.

Building Information Modeling (BIM) is the integrated process, built on coordinated, reliable information, used to create coordinated digital design information and documentation; to predict performance, appearance and cost; and to deliver projects faster, more economically, and with reduced environmental impact.

The cost-saving benefits of having a central point of building reference in a 3D digital model are now being explained by leading BIM software providers to the AEC (architects, engineers, and contractors) industry. BIM allows collaboration and much easier design recalibration in progressing projects.

_

It’s helpful to think about BIM as a layered view of a tower structure. The first layer might include design information about the tower structure, the next layer might be overlaid with an electrical diagram, and then information about manufacturer equipment, and so forth until every system is part of a complete model. BIM offers an up-to-date view of how a tower is designed, allowing personnel to peel back the layers to see how systems interact with one another. When a design change affects multiple tower systems, BIM provides a view of how the modification affects each layer and can simultaneously update information across layers after the change is complete.
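The layered view can be sketched as a small data structure, assuming each layer maps element IDs to properties. “Peeling back the layers” becomes a lookup of which layers reference an element, and a design change is applied to every such layer at once. Layer names, element IDs and properties are hypothetical; real BIM tools use much richer schemas such as IFC.

```python
from __future__ import annotations

# Hypothetical layered model of a tower: layer name -> element ID -> properties.
model: dict[str, dict[str, dict]] = {
    "structure": {"BEAM-12": {"level": 2, "section": "steel I-beam"}},
    "electrical": {"BEAM-12": {"level": 2, "cable_tray": "CT-3"}, "PANEL-1": {"level": 1}},
    "equipment": {"PANEL-1": {"level": 1, "manufacturer": "Vendor B"}},
}


def affected_layers(model: dict, element_id: str) -> list[str]:
    """Peel back the layers: which systems reference this element?"""
    return [name for name, layer in model.items() if element_id in layer]


def apply_change(model: dict, element_id: str, prop: str, value) -> list[str]:
    """Apply one design change to every layer that references the element."""
    changed = affected_layers(model, element_id)
    for name in changed:
        model[name][element_id][prop] = value
    return changed


print(affected_layers(model, "PANEL-1"))          # ['electrical', 'equipment']
print(apply_change(model, "BEAM-12", "level", 3))  # ['structure', 'electrical']
```

Note that nothing in this structure changes unless someone edits it, which is the static character of BIM discussed below.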

_

BIM is a fundamental part of building a digital twin, but they should not be confused, because they are different. In many ways, digital twins are an evolution of BIM, enabling users to evolve their outputs and deepen the use of collaborative data. Over the years, BIM has proved a valuable tool for predictive maintenance, asset tracking, and facilities management. The highly-detailed 3D models help to improve process visibility, establish a clear project vision, and enable contractors, engineers and stakeholders to collaborate and gain richer insights. For these reasons, BIM and digital twins are built on common principles, and both enable teams to look at assets as ongoing projects. But, to improve and adapt projects for greater value, real-time insights are vital. And this is where digital twins come into play.

_

Often used solely as a 3D-modelling tool for design and construction phases, BIM builds static models which do not include the dynamics of a live digital twin. In comparison, digital twins harness live data to evolve and replicate the real world. In construction, a digital twin not only looks like the real building, but also acts like it – providing greater value through the asset lifecycle. At its core, a digital twin can be an output of a BIM process and is essentially a ‘living’ version of the project or asset view that BIM processes exist to create – able to evolve and transform using real-time data once the asset is in use.

The fundamental difference between digital twins and BIM is that the latter is a static digital model, while the former is a dynamic digital representation. In other words, BIM lacks the spatial and temporal context that characterizes digital twins. Digital twins provide a realistic environment in which the model resides, instead of the model alone, and they are kept up to date based on real-time data, context, and algorithmic reasoning. BIM, in contrast, offers a fixed model in isolation, independent of its surroundings and the people who use it. So, BIM is not a Digital Twin. BIM is a small subset of a Digital Twin, frozen in time, typically during the design and construction phase. BIM is a finely tuned tool for the more accurate design, collaboration, visualization, costing and construction sequencing phases of a building’s life. BIM is not designed for the operation and maintenance phases of a building’s life, nor is it designed to ingest or act on the vast amounts of live IoT data generated by a ‘breathing’ and ‘thinking’ Smart Building. Its primary purpose is to design and construct a building; post-construction, it serves as a digital record of the constructed asset. BIM is only focused on buildings – not people or processes. However, BIM is a small but very useful input into a Digital Twin, as it provides an accurate digital asset register and location data, and it is a great starting point for both a Smart Building and a Digital Twin. Digital twin technology, combined with the Internet of Things (IoT) and Industrial IoT (IIoT), may eventually render BIM as we know it obsolete. For now, however, BIM isn’t going anywhere: its market size is projected to grow at a compound annual growth rate (CAGR) of 15.2% between 2020 and 2027.

_____

_____

Types of digital twin: physics-based vs data-driven vs hybrid models:

Generally, there are two types of DTs, physics-based twins and data-based twins, which can also be combined into hybrid twins.

-1. Physics-based twins (also called virtual twins by some authors):

Physics-based twins rely on physical laws and expert knowledge. They can be built from CAD files and used to simulate the behavior of comparatively simple objects with predictable behavior, like a piece of machinery on the production line. A virtual twin is an ideal model of the product based on simulation. Here, physics-based simulation uses analytical and numerical methods to model the behavior of products and systems. Finite Element Analysis (FEA) for structural simulation is one example.

The key downside is that updating such twins takes hours rather than minutes or seconds. So, the approach makes sense in areas where you don’t need to make immediate decisions. There are limits to physics-based simulation. It requires computer resources proportional to the size and complexity of the problem. Large systems (or a system of systems) cannot be practically simulated. This is because the simulations take too long and the required IT infrastructure costs too much. Furthermore, the simulation setup may not exactly match the operating conditions of the real world, introducing a source of divergence between the simulation and the real, operating product. Recent advances in numerical techniques, cloud computing, and GPU processing have helped address this issue to some extent. But limitations still exist. Solving large systems (or a system of systems) is still impractical for many physics-based simulations.
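To show what a physics-based model looks like in its simplest form, here is a minimal finite element sketch: a straight bar fixed at one end and pulled by a point load at the other, discretised into linear two-node elements. The element matrices are assembled into a global stiffness matrix and K u = F is solved with a small dense solver written out so the example needs no external libraries. The dimensions and load are hypothetical; industrial FEA uses 3D elements and sparse solvers, which is where the computational cost discussed above comes from.

```python
from __future__ import annotations


def solve(M: list[list[float]], b: list[float]) -> list[float]:
    """Dense Gaussian elimination with partial pivoting (fine for small systems)."""
    n = len(b)
    A = [row[:] + [b[i]] for i, row in enumerate(M)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            for cc in range(c, n + 1):
                A[r][cc] -= f * A[c][cc]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (A[r][n] - sum(A[r][cc] * x[cc] for cc in range(r + 1, n))) / A[r][r]
    return x


def bar_fea(length: float, E: float, area: float, P: float, n_elems: int) -> list[float]:
    """Nodal displacements of an axial bar fixed at x = 0 with point load P at the free end."""
    n_nodes = n_elems + 1
    k = E * area / (length / n_elems)  # stiffness of one linear element
    K = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e in range(n_elems):  # assemble the element matrix k * [[1, -1], [-1, 1]]
        i, j = e, e + 1
        K[i][i] += k
        K[j][j] += k
        K[i][j] -= k
        K[j][i] -= k
    F = [0.0] * n_nodes
    F[-1] = P
    # Boundary condition u(0) = 0: drop node 0's row and column, then solve K u = F.
    u = solve([row[1:] for row in K[1:]], F[1:])
    return [0.0] + u


u = bar_fea(length=2.0, E=200e9, area=1e-4, P=10e3, n_elems=4)  # steel bar, SI units
exact_tip = 10e3 * 2.0 / (200e9 * 1e-4)  # analytical P L / (E A) = 1 mm
print(u[-1], exact_tip)
```

For this simple load case linear elements reproduce the analytical answer exactly, which makes it a convenient check; for real geometries the mesh, material data and boundary conditions all become sources of divergence from the operating product.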

_

-2. Data-based twins (machine learning based twin):

In contrast to the physics-based type, data-based twins don’t require deep engineering expertise. Instead of modelling the physical principles behind the system, they use machine learning algorithms (typically neural networks) to find hidden relationships between inputs and outputs. For example, a digital twin of an operational aircraft engine could sit in the Pratt and Whitney data center. Here, the engine is the “product.” Sensors in the real engine send data to the digital twin, where it is stored and processed to create a digital replica of the physical system. The sheer volume of transmitted data can be overwhelming.

The data-based method can deliver faster and, for complex systems, often more accurate results, and it suits products or processes with complex interactions and a large number of influencing factors. On the other hand, to produce valid results it needs a vast amount of information, not limited to live sensor streams.

Algorithms have to be trained on historical data generated by the asset itself, accumulated from enterprise systems like ERP, and extracted from CAD drawings, bills of material, Excel files, and other documents.
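The core idea of a data-based twin, learning an input-to-output mapping from historical records without any physics, can be sketched in a few lines of Python. The text says neural networks are typical; this sketch swaps in the simplest possible learner, a one-variable least-squares line, so the mechanics stay visible. The throttle/temperature records and the `DataDrivenTwin` class are hypothetical.

```python
def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("inputs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


class DataDrivenTwin:
    """Learns an input -> output mapping from historical data only; no physics."""

    def __init__(self):
        self.slope = None
        self.intercept = None

    def train(self, history):
        xs, ys = zip(*history)
        self.slope, self.intercept = fit_linear(list(xs), list(ys))

    def predict(self, x):
        if self.slope is None:
            raise RuntimeError("twin has not been trained")
        return self.slope * x + self.intercept


# Hypothetical historical records: (throttle setting in %, exhaust temperature in deg C).
history = [(40, 520.0), (50, 560.0), (60, 600.0), (70, 640.0)]
twin = DataDrivenTwin()
twin.train(history)
print(twin.predict(65))
```

A real data-based twin would replace `fit_linear` with a far more flexible model and many more input features, which is exactly why it needs the large volumes of historical data described above.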

Today, various combinations of the two methods, so-called hybrid twins, are often used to take advantage of both worlds.

_

-3. Hybrid twin: Marrying physics-based and data-based twins:

At the ESI Live 2020 event, ESI Group Scientific Director Francisco Chinesta introduced a new concept: the hybrid twin. The hybrid twin is a simulation model working at the intersection of virtual and digital twins.

It’s a clever idea. Physics-based simulation (which Chinesta also called the “virtual twin”) is often used to study the behavior of products and systems. But simulation has its limits as an emulator of reality: it represents an idealized version of an item. This is where the data-based digital twin comes into play.

Sensor data from smart, connected products is gathered and analyzed for anomalies, offering up-to-date insight into behaviors. But can simulation learn from this real-world data to move closer to reality? Possibly, and the answer lies in the hybrid twin.

A virtual twin has limitations, deviating from reality with margins of error that can be significant. When you create a hybrid twin by using the data from a digital twin as an input to a virtual twin, you can drastically reduce or even eliminate these errors. According to Chinesta, even a small sample of the digital twin’s data can reduce errors significantly.
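One simple way to picture “using the data from a digital twin as an input to a virtual twin” is residual correction: keep the physics model, and learn a data-driven correction from the gap between its predictions and field measurements. The Python sketch below assumes a hypothetical physics model (deflection of 0.8 mm per kN) and hypothetical field data; it is an illustration of the idea, not ESI’s or Chinesta’s actual method. The discrepancy here is deliberately linear, so two samples remove it almost entirely; real discrepancies are rarely this tidy.

```python
def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def physics_model(load_kn):
    """Idealised (hypothetical) virtual twin: deflection in mm for a load in kN."""
    return 0.8 * load_kn


class HybridTwin:
    """Physics prediction plus a data-driven correction learned from residuals."""

    def __init__(self, physics):
        self.physics = physics
        self.a, self.b = 0.0, 0.0  # correction = a * x + b, starts at zero

    def calibrate(self, measurements):
        xs = [x for x, _ in measurements]
        residuals = [y - self.physics(x) for x, y in measurements]
        self.a, self.b = fit_linear(xs, residuals)

    def predict(self, x):
        return self.physics(x) + self.a * x + self.b


def mean_abs_error(model, data):
    return sum(abs(model(x) - y) for x, y in data) / len(data)


# Hypothetical field data: the real asset deflects 0.9 mm/kN plus a 0.5 mm offset.
field = [(x, 0.9 * x + 0.5) for x in (1.0, 2.0, 4.0, 8.0)]
twin = HybridTwin(physics_model)
before = mean_abs_error(physics_model, field)
twin.calibrate(field[:2])  # only a small sample of field data
after = mean_abs_error(twin.predict, field)
print(before, after)
```

The physics model still supplies the overall structure; the learned term only absorbs what the idealised model misses.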

A hybrid twin can increase the accuracy of simulations, reducing errors to near zero according to Chinesta. As a result, it makes it possible to study large systems or a system of systems, which would be completely impractical using physics-based simulation models alone.

A hybrid twin model is a powerful design and product development asset. You can further improve and expand it by integrating artificial intelligence and machine learning algorithms. Such an approach will facilitate the development of improved, reliable, and resilient products of the future.

But who actually benefits from hybrid twins?

First, companies building complex and connected products stand to benefit. Many of today’s products are now embedded with sensors, which collect and transmit all kinds of data in real time. Unlike a digital twin alone, a hybrid twin can make real use of this data to improve a company’s simulation models and move them closer to reality.

Second, the hybrid twin is a boon to companies adopting simulation-driven product development. They can now use their products in the field to improve their simulation models. They can use more accurate simulations to make more informed decisions, boosting innovation. They can also verify product and system behaviors more precisely, reducing the reliance on prototyping and testing.

Lastly, companies building large systems or a system of systems can now use simulation to study those systems. This helps them better understand their systems and stress-test them, virtually.

______

______

Thin Digital Twin:

In information technology, “thin” is commonly associated with data storage components that consume little or no physical space until data is actually written to them (as in thin provisioning). A thin digital twin provides an exact replica of your IT infrastructure, applications, and data without the overhead or complexity of traditional simulation and modelling solutions. To make the twins thin, the automation engine needs very deep integration with the advanced data-cloning technology in modern storage systems, and the ability to map these zero-footprint clones to hypervisors, software-defined networking, and container platforms.
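The “zero footprint” idea behind thin clones is essentially copy-on-write: the clone shares the original’s data and stores only the blocks that change. The Python sketch below models this with hypothetical `Volume` and `ThinClone` classes; it illustrates the concept only and does not represent any storage vendor’s cloning API.

```python
class Volume:
    """A hypothetical storage volume: block number -> bytes."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)

    def read(self, n):
        return self.blocks[n]


class ThinClone:
    """Copy-on-write clone: shares the parent's blocks, stores only its own writes."""

    def __init__(self, parent):
        self.parent = parent
        self.delta = {}  # blocks written to the clone only

    def read(self, n):
        # Serve changed blocks from the clone, everything else from the parent.
        return self.delta[n] if n in self.delta else self.parent.read(n)

    def write(self, n, data):
        self.delta[n] = data  # the parent volume is never modified

    def footprint(self):
        return len(self.delta)  # extra blocks consumed by the clone


prod = Volume({0: b"config", 1: b"orders", 2: b"customers"})
twin = ThinClone(prod)
print(twin.footprint())  # a fresh clone consumes no extra blocks
twin.write(1, b"orders-test")
print(twin.read(1), prod.read(1), twin.footprint())
```

This is why a thin twin of production data can be created almost instantly: nothing is copied until the twin starts to diverge from the original.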

_____

Digital Twin versus virtual commissioning:

Virtual commissioning is the process of creating and analyzing a digital prototype of a physical system to predict its performance in the real world; in other words, you test the system’s behaviour before physical commissioning. The line between Digital Twins and Virtual Commissioning is blurry, and many definitions and interpretations exist. One thing they have in common is that both use simulation technology. Virtual commissioning is the simulation of a production system to develop and test the behavior of that system before it is physically commissioned. A Digital Twin captures sensor data from a production system and feeds that information into the simulation in real time to mirror the operation of the system in a virtual world. However, it is a common misconception that Digital Twins only come into use once the physical system has been built: Digital Twins are digital replicas of both real and potential physical assets (i.e., “Physical Twins”). A digital twin prototype (DTP) is a digital model of an object that has not yet been created in the physical world. So virtual commissioning may be considered a type of digital twin.
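A toy version of virtual commissioning in Python: control logic is run against a simulated plant and must pass an acceptance test before it would ever touch real equipment. Everything here is hypothetical: the tank model, the flow rates, the on/off level controller, and the safe range used as the acceptance criterion.

```python
def level_controller(level, pump_on, low=2.0, high=8.0):
    """Control logic under test: on/off (hysteresis) control of a fill pump."""
    if level <= low:
        return True
    if level >= high:
        return False
    return pump_on  # inside the band: keep the current state


def simulate_tank(controller, steps, level=5.0, inflow=0.5, outflow=0.3):
    """Simulated plant: each step the level changes by pump inflow minus draw-off."""
    pump_on = False
    history = []
    for _ in range(steps):
        pump_on = controller(level, pump_on)
        level += (inflow if pump_on else 0.0) - outflow
        history.append(level)
    return history


def acceptance_test(history, min_level=1.5, max_level=8.5):
    """Commissioning criterion: the level never leaves the safe range."""
    return all(min_level <= h <= max_level for h in history)


history = simulate_tank(level_controller, steps=500)
print(acceptance_test(history), round(min(history), 2), round(max(history), 2))

# A faulty controller (pump always on) is caught in simulation, not on site.
print(acceptance_test(simulate_tank(lambda level, pump_on: True, steps=500)))
```

In a digital twin, the same simulated plant would additionally be kept in step with live sensor data once the real system is running.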

____

Digital twins versus digital twinning:

The terms “digital twin” and “digital twinning” are often used interchangeably. But in reality, they’re very different concepts, and it’s important to recognise the difference.

Digital twinning is the process of digitising the physical environment. It’s a continuous journey that requires collecting and analysing large volumes of data to improve the physical environment around us. It’s a strategic transformation, and a process managed at the highest levels of a business or organisation.

Digital twins on the other hand are the outputs of digital twinning. Each digital twin is a digital reflection of an individual asset, process or system generated through the digital twinning process. By pursuing a strategy of digital twinning, you’ll eventually build up a vast ecosystem of digital twins.

_____

How DT differs from existing technologies:

| Technology | How the technology differs from DT |
|---|---|
| Simulation | No real-time twinning |
| Machine Learning | No twinning |
| Digital Prototype | No IoT components necessarily |
| Optimisation | No simulation and real-time tests |
| Autonomous Systems | No self-learning (learning from its past outcomes) necessarily |
| Agent-based modelling | No real-time twinning |

____

____

Digital Twin: Common Misconceptions:

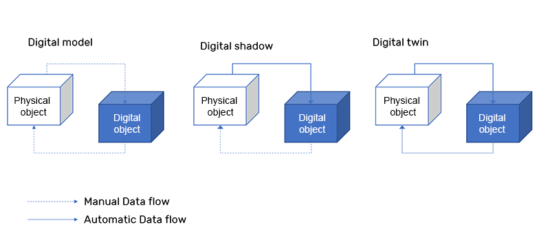

Almost half of the world’s population has no access to the internet, and only a few countries are exploiting the frontier of technological advancement. A digital divide exists between developed and developing countries and regions, and across income levels, genders and ages, and such exclusion further exacerbates existing inequalities, especially for digitally disadvantaged groups. Despite continuous efforts to bridge the digital divide, rapid technological advances such as the digital twin may have further increased these groups’ vulnerability as technology evolves. A lack of understanding of, knowledge about, and access to frontier developments increases the chances of misconceptions, false or inflated public expectations, adverse reputational outcomes and misunderstandings, as well as greater risks of the most digitally vulnerable groups being exploited. This section therefore aims to clarify common misconceptions about the digital twin, summarised in the table below. In brief, these misconceptions arise from technologies closely related to the digital twin, such as 2D/3D modelling, system simulation, validation computation and digital prototyping. Without a comprehensive understanding of the digital twin and its related technologies, people commonly confuse the digital twin with one of its root technologies, or mistake an element or step of a digital twin for the digital twin itself. The digital twin’s dynamic, real-time, bidirectional data connection is the key feature that distinguishes it, but it is also the most common source of misconception.

_

Common misconceptions of the digital twin:

| Term | Reasons and Differences |
|---|---|
| Digital shadow | A digital shadow comprises a physically existing product and its virtual twin, but with only a unidirectional data connection from the physical entity to its virtual representation, meaning the virtual twin only digitally reflects the physical product. |
| Digital modelling | Modelling is an essential aspect of a digital twin but is not an alternative term for the digital twin as a whole. There are bidirectional data connections between the physical product and its virtual twin; however, the data is exchanged manually, so the virtual twin represents a particular state of the physical product through a manually controlled process of synthesis. |
| Digital thread | The digital thread is the continuous, traceable lifetime digital record of a physical product, from its innovation and design stage to the end of its lifespan. It plays an important role in the digitalisation process and acts as an enabler of interdisciplinary information exchange. |
| Simulation | Simulation refers to the imitating functionality of digital twin technology from the virtual twin’s perspective, and the term covers a broader range of models. It is an essential aspect of the digital twin rather than an alternative term for it, as it does not involve real-time data exchange with the physically existing object. |
| Fidelity model/simulation | Fidelity refers to how closely a simulation model imitates the physical product it reproduces. Terms such as high-, low-, core- or multi-fidelity model/simulation describe different fidelity levels or considerations when building the simulation model. Researchers also frequently use “high fidelity” or even “ultrahigh fidelity” to describe the digital twin, given its real-time, dynamic data exchange between the physical object and the virtual twin. |
| Cyber twin | Some researchers use “cyber twin” and “digital twin” interchangeably, treating “cyber” as an alternative term for “digital”. Terms like cyber digital twin, cyber twin simulation and cyber-physical system are also common. The key aspect that the cyber twin or cyber-physical system addresses is the network (internet architecture), closely related to the advancement and implementation of the IoE (Internet of Everything). The cyber twin or cyber-physical system network architecture is also commonly confused with the digital thread. |
| Device shadow | Research on device shadows is common in the areas of cloud computing platforms and the Internet of Things (IoT). A device shadow is a virtual representation of a physically existing object; in brief, it is a service that maintains a copy of information extracted from a physical object connected to the IoT. |
| Product Avatar | A distributed and decentralised approach to product information management with no feedback concept; it may capture information about only parts of the product. |
| Product Lifecycle Management (PLM) | PLM focuses more on ‘managing’ a company’s components, products and systems across their lifecycles, whereas a DT can be a set of models for real-time data monitoring and processing. |

_____

_____

Data integration in Digital Model, Digital Shadow and Digital Twin:

| | Data flow from physical object to digital object | Data flow from digital object to physical object |
|---|---|---|
| Digital Model | Manual | Manual |
| Digital Shadow | Automatic | Manual |
| Digital Twin | Automatic | Automatic |
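The three levels of data integration in the table above amount to a lookup on the two flow directions. A minimal Python sketch of that classification follows; the `Flow` enum and `integration_level` function are hypothetical names. The table does not cover manual physical-to-digital flow combined with automatic digital-to-physical flow, so the sketch labels that case as not covered rather than guessing a category.

```python
from enum import Enum


class Flow(Enum):
    MANUAL = "manual"
    AUTOMATIC = "automatic"


def integration_level(physical_to_digital, digital_to_physical):
    """Classify a digital representation by how data flows in each direction."""
    table = {
        (Flow.MANUAL, Flow.MANUAL): "Digital Model",
        (Flow.AUTOMATIC, Flow.MANUAL): "Digital Shadow",
        (Flow.AUTOMATIC, Flow.AUTOMATIC): "Digital Twin",
    }
    # Manual in / automatic out is not part of the scheme in the table.
    return table.get((physical_to_digital, digital_to_physical),
                     "Not covered by this scheme")


print(integration_level(Flow.AUTOMATIC, Flow.MANUAL))
```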

Digital Model:

A digital model has the lowest level of data integration. The term denotes a digital representation of an existing physical object, characterised by the absence of automated data flow between the physical and digital objects: data flow from the physical object to the digital object, and vice versa, is handled manually. Consequently, a change in the physical object does not affect the digital object, and likewise a modification of the digital object does not affect the physical object. In the construction sector, a digital model can range from a single building component to an entire building. In this case, it is used to represent and describe a concept digitally and to compare different options without applying them physically. The term also covers simulation models of planned factories and mathematical models of new products.

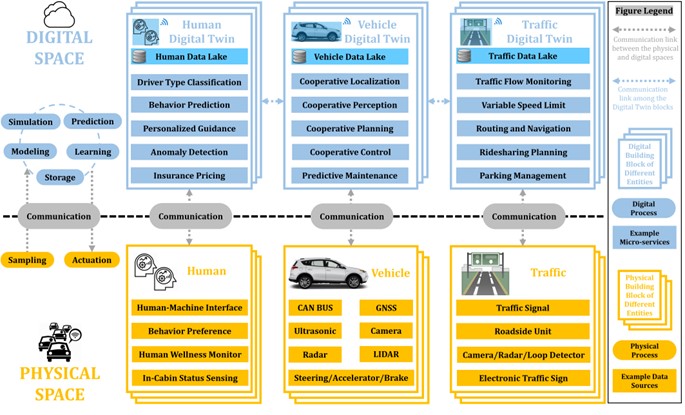

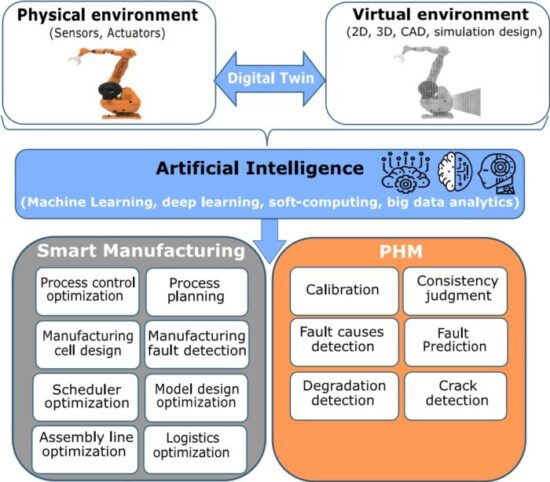

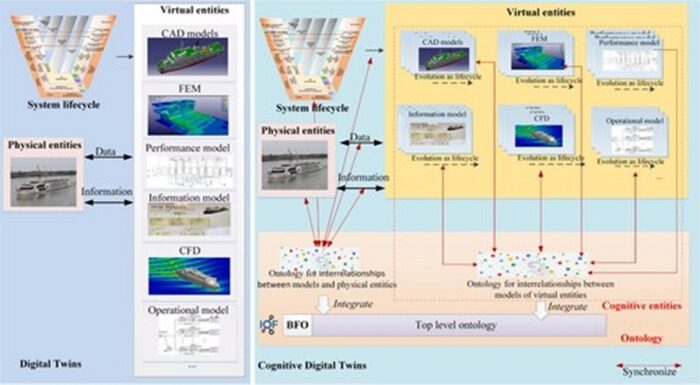

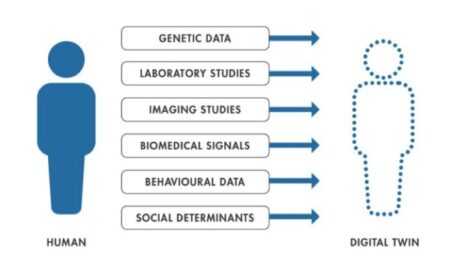

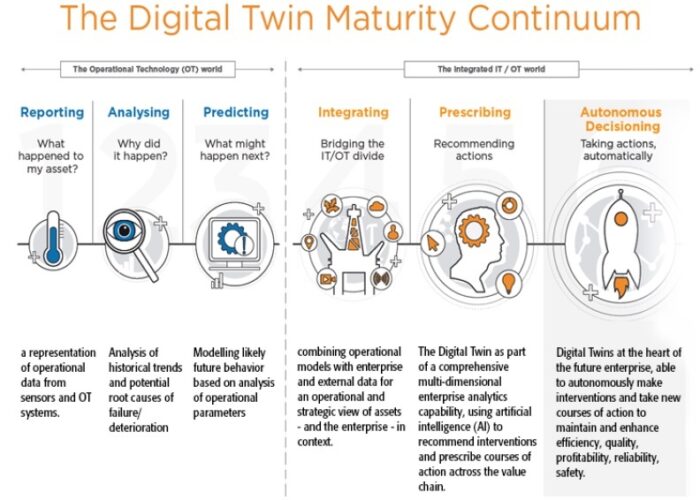

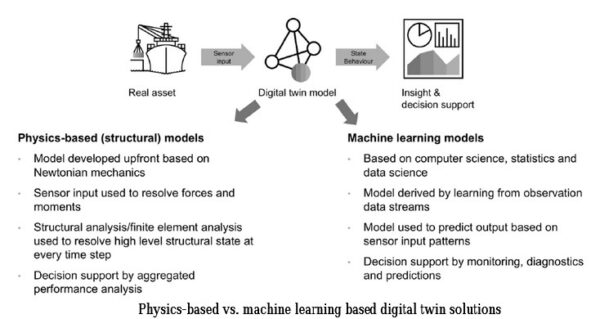

Digital Shadow: