Dr Rajiv Desai

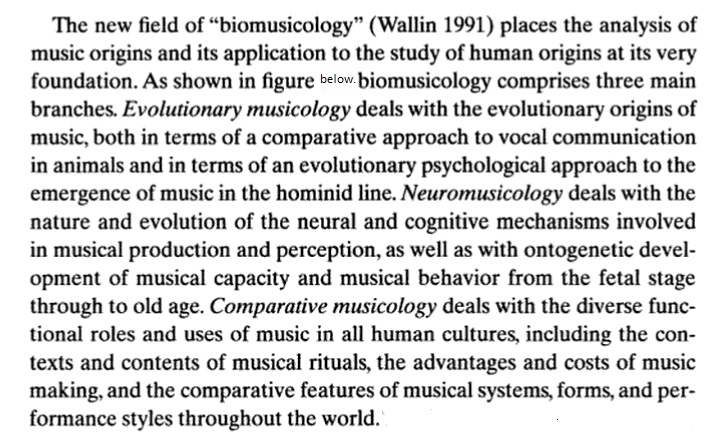

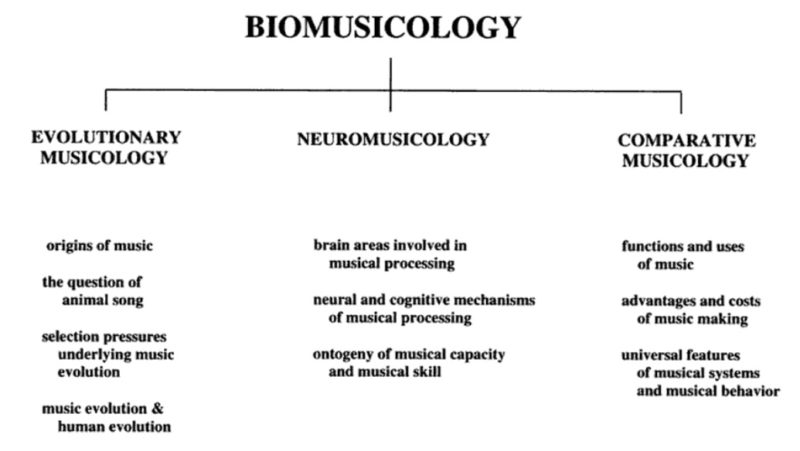

An Educational Blog

SCIENCE OF MUSIC

Science of Music:

_____

“Happy Birthday, Mr. President” is a song sung by actress and singer Marilyn Monroe on May 19, 1962, for President John F. Kennedy at a celebration of his 45th birthday, 10 days before the actual date (May 29). The event marked one of Monroe’s final public appearances; she was found dead in August 1962 at the age of 36, and JFK was assassinated the following year.

_____

Prologue:

The fascinating thing about music is that technically, in a very literal way, it doesn’t exist. A painting, a sculpture or a photograph can physically exist, while music is just air hitting the eardrum in a slightly different way than it would randomly. Somehow that air, which has almost no substance whatsoever, when vibrated and made to hit the eardrum in tiny, subtle ways, can make people dance, love, cry, enjoy, move across the country, go to war and more. It’s amazing that something so subtle can elicit such profound emotional reactions in people.

Is music a science or an art? The answer naturally depends on the meanings we ascribe to the words music, science and art. Science is essentially related to intellect and mind, and art to emotion and intuition. Music helps us express and experience emotion, and it is said to be the highest of the fine arts. Although music is essentially an art, it uses the methods of science for its own purposes. Music is based on sound, so a knowledge of sound from a scientific standpoint is an advantage. Mathematics and music went hand in hand in ancient Greece. Plato insisted on a knowledge of music and mathematics from anyone who sought admission to his school. Similarly, Pythagoras laid down the condition that a would-be pupil should know geometry and music. Both music and science use mathematical principles and logic, blended with creative thinking and inspiration, to arrive at conclusions that are both enlightening and inspirational. Music composition is basically a mathematical exercise: from a basic source of sounds, rhythms and tempos, an infinite variety of musical expressions and emotions can be produced. It is the interaction of sounds, tempo and pitch that creates music, just as the interaction of known facts and knowledge, coupled with imagination, conjecture and inspiration, produces new scientific discoveries.

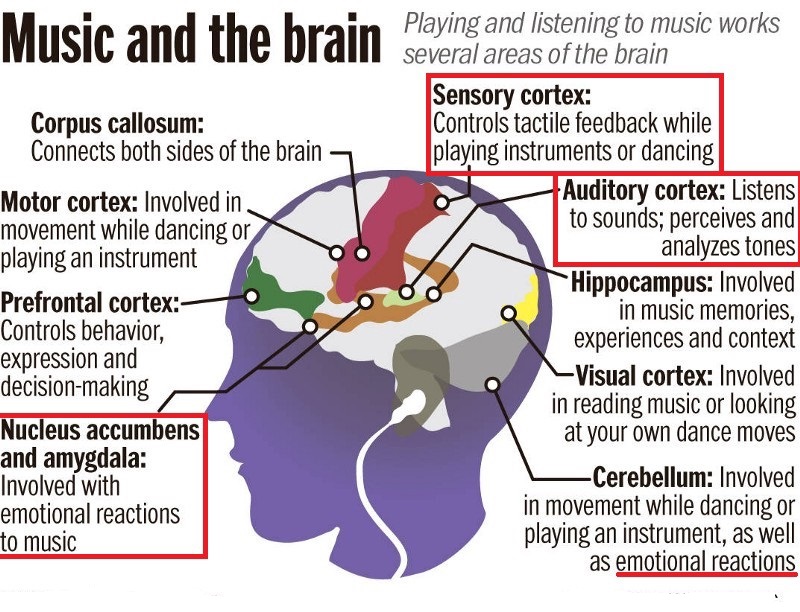

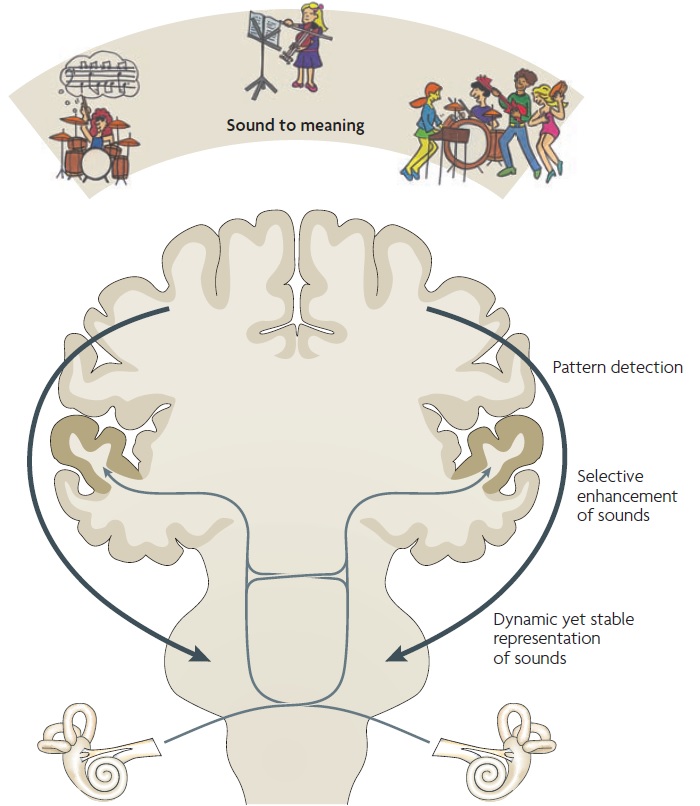

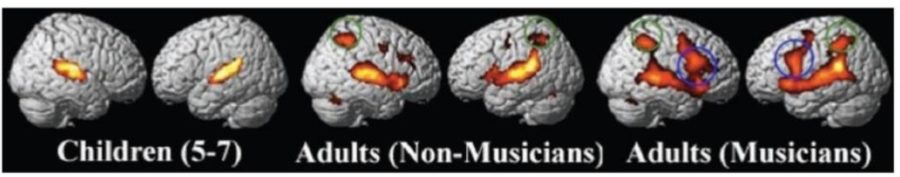

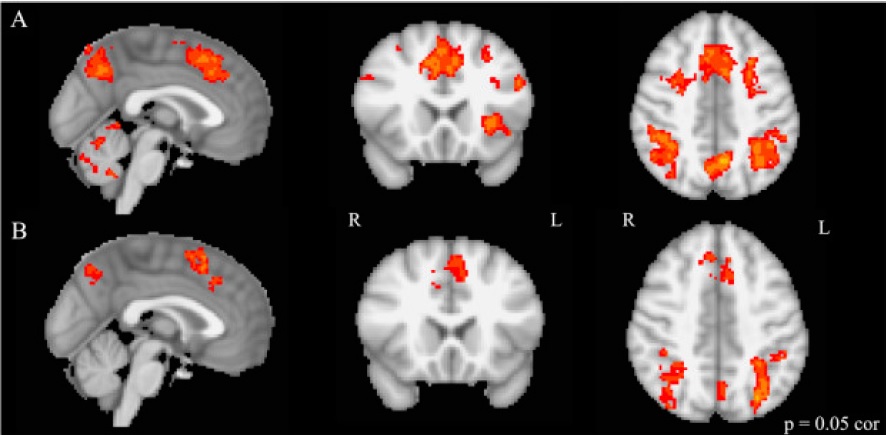

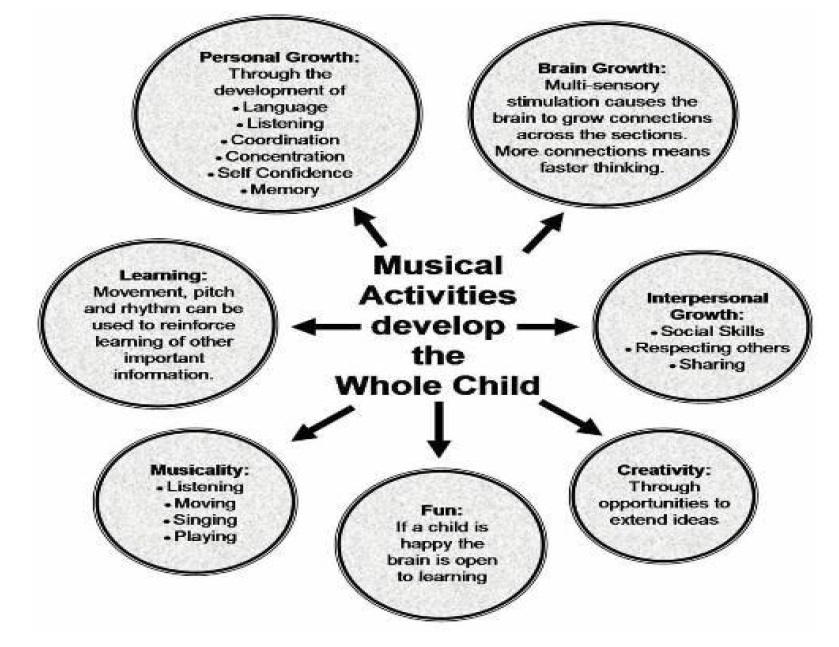

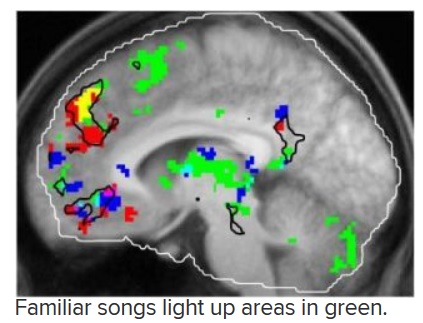

I quote myself from my article ‘Science of language’ posted on this website on May 12, 2014. “Language and music are among the most cognitively complex uses of sound by humans; language and music share common auditory pathways, common vocal tracts and common syntactic structure in mirror neurons; therefore musical training does help language processing and learning tonal languages (Mandarin, Cantonese and Vietnamese) makes you more musical. However, language and music are not identical as they are processed by different neural networks. Language is correlated with cognition while music is correlated with emotions”. Cognition and emotions are not unconnected, however: neuroscientists have discovered that listening to and producing music involves a tantalizing mix of practically every human cognitive function. Thus, music has emerged as an invaluable tool to study various aspects of the human brain such as auditory processing, motor control, learning, attention, memory and emotion.

Throughout history, man has created and listened to music for many purposes. Music is the common human denominator. Music varies from culture to culture. All cultures have it. All cultures share it. Music is a force that can unite humans even as they are separated by distance and culture. Music has been perceived to have transcendental qualities, and has thus been used pervasively within forms of religious worship. Today it is used in many hospitals to help patients relax and ease pain, stress and anxiety.

As the biological son of a Hollywood singer and artist, I feel it is my biological duty to discuss the finest art of all time, ‘Music’. And since my orientation is scientific, the article is named “Science of Music”.

______

______

Musical Lexicon:

Tone

A single musical sound of specific pitch.

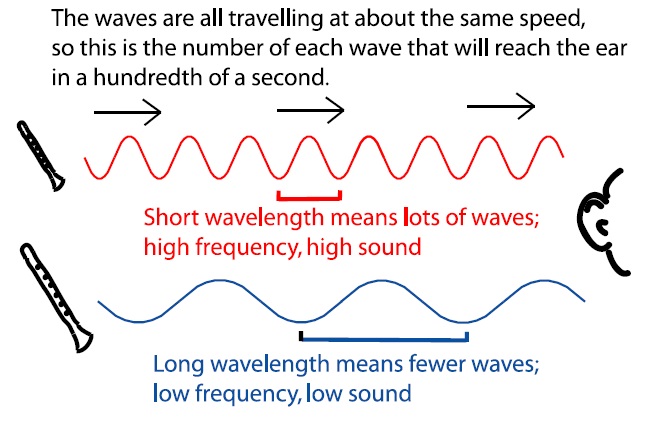

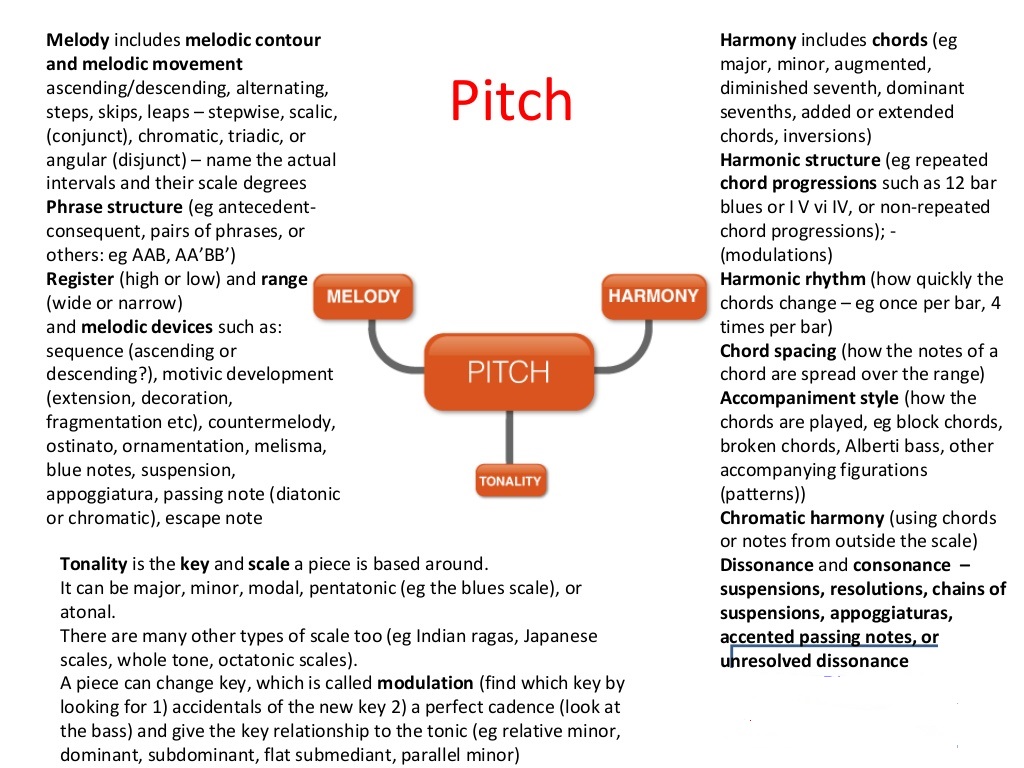

Pitch

The musical quality of a tone, sounding higher or lower based on the frequency of its sound waves.
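Because pitch follows frequency, a frequency can be mapped to the nearest named note. The Python sketch below assumes 12-tone equal temperament, the common A4 = 440 Hz reference and MIDI-style note numbers (A4 = 69). `nearest_note` is a hypothetical helper written for illustration, not a library function.

```python
import math

# Pitch-class names in 12-tone equal temperament (sharps only, for simplicity).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz: float, a4_hz: float = 440.0) -> str:
    """Return the equal-tempered note name closest to freq_hz, e.g. 'A4'."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # One semitone multiplies frequency by 2**(1/12), so semitones = 12 * log2(ratio).
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))
    octave = midi // 12 - 1  # MIDI note 60 is C4 (middle C)
    return f"{NOTE_NAMES[midi % 12]}{octave}"


for f in (110.0, 261.63, 440.0, 880.0):
    print(f, "Hz ->", nearest_note(f))
```

Each doubling of frequency moves the result up one octave. That is why 110, 440 and 880 Hz all come out as some A.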

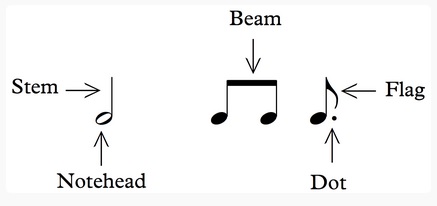

Note

The term “note” in music describes the pitch and the duration of a musical sound. A note has many component waves in it whereas a tone is represented by a single wave form.
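Synthesis can illustrate the article's distinction between a tone (one waveform) and a note (several component waves). The following is a minimal Python sketch. It assumes an 8 kHz sample rate and arbitrary harmonic amplitudes. `pure_tone` and `note_with_harmonics` are hypothetical helper names, not part of any library.

```python
import math

SAMPLE_RATE = 8000  # samples per second (an arbitrary choice for this sketch)


def pure_tone(freq_hz, duration_s):
    """A 'tone' in the article's sense: one sine wave at a single frequency."""
    n = round(SAMPLE_RATE * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def note_with_harmonics(freq_hz, duration_s, harmonic_amps=(1.0, 0.5, 0.25)):
    """A 'note': a fundamental plus harmonics at integer multiples of freq_hz.

    The default amplitudes are illustrative assumptions, not measurements.
    """
    n = round(SAMPLE_RATE * duration_s)
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        samples.append(sum(a * math.sin(2 * math.pi * k * freq_hz * t)
                           for k, a in enumerate(harmonic_amps, start=1)))
    return samples


tone = pure_tone(200.0, 0.01)
note = note_with_harmonics(200.0, 0.01)
print(len(tone), len(note))
```

Both signals repeat every 1/200 s (40 samples at 8 kHz), so they share the same pitch. Only the note contains the extra component waves.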

Interval

An interval is the difference in pitch between two sounds, that is, the distance between one note and another, whether sounded successively (melodic interval) or simultaneously (harmonic interval).
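Perceived pitch distance depends on the ratio of two frequencies rather than on their difference in hertz. For this reason, interval size is often measured in cents, with 1200 cents to the octave. Below is a minimal Python sketch; the function names are illustrative assumptions.

```python
import math


def interval_cents(f1_hz: float, f2_hz: float) -> float:
    """Size of the interval from f1 to f2 in cents (1200 cents = one octave)."""
    return 1200 * math.log2(f2_hz / f1_hz)


def interval_semitones(f1_hz: float, f2_hz: float) -> float:
    # In 12-tone equal temperament, one semitone is exactly 100 cents.
    return interval_cents(f1_hz, f2_hz) / 100


print(interval_cents(220, 440))      # 1200.0 (an octave)
print(interval_cents(440, 660))      # just perfect fifth (3:2), roughly 702 cents
print(interval_semitones(440, 880))  # 12.0
```

For example, 220 to 440 Hz and 440 to 880 Hz are both octaves (1200 cents), even though the second spans twice as many hertz.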

Scale

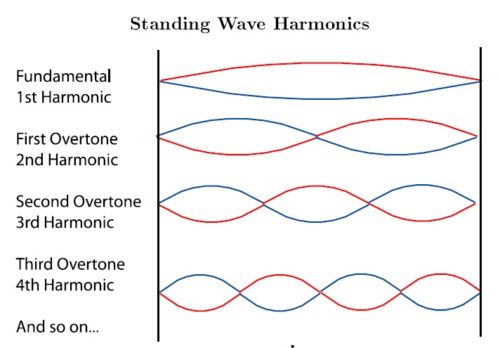

Notes are the building blocks for creating scales, which are just a collection of notes in order of pitch. A scale ordered by increasing pitch is an ascending scale, and a scale ordered by decreasing pitch is a descending scale.

Resonance

Amplification of a musical tone by interaction of sound vibrations with a surface or enclosed space.

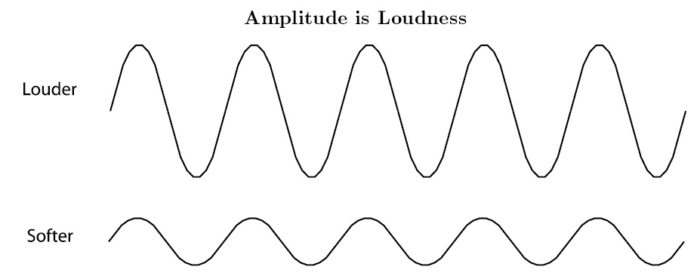

Volume

The pressure of sound vibrations, heard in terms of the loudness of music.
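Physically, volume is usually quantified as sound pressure level (SPL), a decibel scale referenced to 20 µPa, roughly the threshold of hearing. Perceived loudness also depends on frequency, so this Python sketch covers only the pressure side. `spl_db` is a hypothetical helper.

```python
import math

P_REF = 20e-6  # reference RMS pressure in pascals (20 µPa), conventional for air


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB for an RMS sound pressure given in pascals."""
    return 20 * math.log10(pressure_pa / P_REF)


print(round(spl_db(20e-6), 1))               # 0.0 dB: the reference pressure itself
print(round(spl_db(1.0), 1))                 # about 94 dB for 1 Pa
print(round(spl_db(2.0) - spl_db(1.0), 2))   # doubling the pressure adds about 6 dB
```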

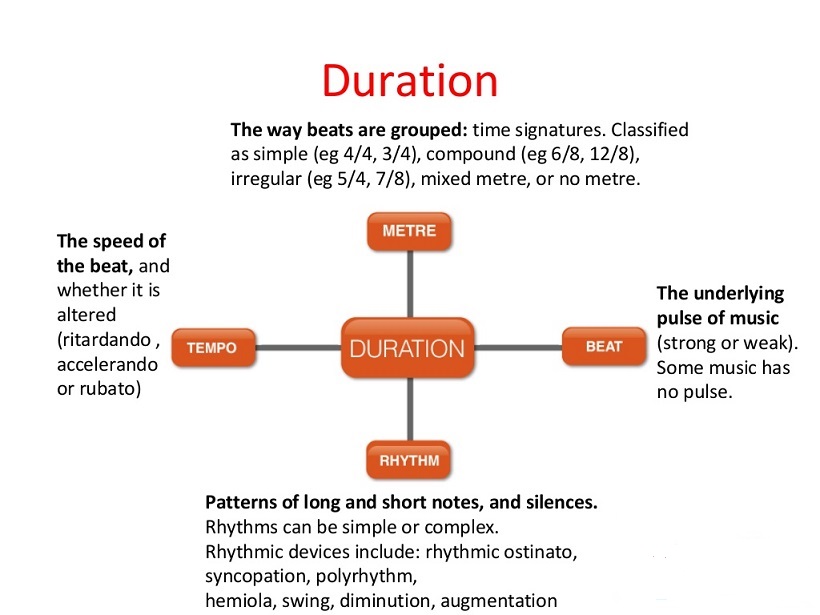

Tempo

The pace of a composition’s performance, not tied to individual notes. Often measured in beats per minute.

Beat

The beat is the basic unit of time, the pulse (regularly repeating event) of the mensural level (or beat level).
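Tempo and beat are linked by simple arithmetic: at a tempo of B beats per minute, each beat lasts 60/B seconds. This short Python sketch computes beat length and the onset times of a regular pulse. The helper names are illustrative.

```python
def beat_duration_s(bpm: float) -> float:
    """Length of one beat in seconds at a tempo given in beats per minute."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    return 60.0 / bpm


def beat_times(bpm: float, n_beats: int) -> list[float]:
    """Onset times (in seconds) of the first n_beats pulses of a steady beat."""
    step = beat_duration_s(bpm)
    return [i * step for i in range(n_beats)]


print(beat_duration_s(120))  # 0.5
print(beat_times(60, 4))     # [0.0, 1.0, 2.0, 3.0]
```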

Rhythm

An arrangement of tones of different durations and stresses. Rhythm is described as the systematic patterning of sound in terms of timing and grouping leading to multiple levels of periodicity.

Melody (Tune)

A succession of individual notes arranged to musical effect, aka a tune. A melody is a linear succession of musical notes that the listener perceives as a single entity.

Chord

Three or more notes sounded simultaneously. Chords are the ingredients of harmony. Intervals are the building blocks of chords.
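Since intervals are the building blocks of chords, a triad can be built by stacking intervals on a root. This Python sketch assumes equal temperament. It uses the standard semitone recipes for major triads (root, major third, perfect fifth) and minor triads (root, minor third, perfect fifth). `triad_frequencies` is a hypothetical helper.

```python
# Intervals above the root, counted in equal-tempered semitones.
TRIADS = {"major": (0, 4, 7), "minor": (0, 3, 7)}


def triad_frequencies(root_hz: float, quality: str = "major") -> list[float]:
    """Frequencies of a three-note chord built by stacking intervals on a root."""
    return [root_hz * 2 ** (semitones / 12) for semitones in TRIADS[quality]]


# A-major triad on A3 = 220 Hz: A3, C#4 and E4.
print([round(f, 1) for f in triad_frequencies(220.0)])  # [220.0, 277.2, 329.6]
```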

Harmony

The musical arrangement of chords. Harmony is the organization of notes played simultaneously. In Western classical music, harmony is the simultaneous occurrence of multiple frequencies and pitches (tones or chords).

Vibrato

A small, rapid modulation in the pitch of a tone. The technique may express emotion.
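Vibrato can be modelled as a sine tone whose instantaneous frequency oscillates slightly around a centre pitch. The Python sketch below is a minimal version of that model. The sample rate, the 5.5 Hz rate and the 6 Hz depth are illustrative assumptions rather than values from the article, and `vibrato_tone` is a hypothetical helper.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this sketch)


def vibrato_tone(f0_hz, duration_s, rate_hz=5.5, depth_hz=6.0):
    """Sine tone whose pitch wobbles around f0_hz: a simple model of vibrato.

    rate_hz sets how fast the pitch wobbles; depth_hz sets how far it moves.
    """
    n = round(SAMPLE_RATE * duration_s)
    samples, phase = [], 0.0
    for i in range(n):
        t = i / SAMPLE_RATE
        # Instantaneous frequency: a small periodic modulation of the pitch.
        inst_freq = f0_hz + depth_hz * math.sin(2 * math.pi * rate_hz * t)
        samples.append(math.sin(phase))
        # Accumulate phase so the waveform stays continuous as the frequency changes.
        phase += 2 * math.pi * inst_freq / SAMPLE_RATE
    return samples


wavy = vibrato_tone(440.0, 0.5)
print(len(wavy))
```

Setting `depth_hz=0.0` turns the model back into a steady pure tone. This makes it easy to compare the two by ear once the samples are written to an audio file.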

Counterpoint

Counterpoint is the art of combining different melodic lines in a musical composition and is a characteristic element of Western classical musical practice.

Key

In music a key is the major or minor scale around which a piece of music revolves.
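Major and minor scales follow fixed patterns of whole steps (2 semitones) and half steps (1 semitone). A key's scale can therefore be generated from its tonic, and reversing it gives the descending form. The Python sketch uses sharp names only, which is a simplifying assumption; `scale` is a hypothetical helper.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Whole (2) and half (1) steps between successive scale degrees.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]
NATURAL_MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2]


def scale(tonic: str, steps: list[int]) -> list[str]:
    """Ascending scale from the tonic back to the tonic an octave higher.

    Sharp names only, so F major shows A# where B-flat would be conventional.
    """
    index = NOTE_NAMES.index(tonic)
    notes = [tonic]
    for step in steps:
        index = (index + step) % 12
        notes.append(NOTE_NAMES[index])
    return notes


ascending = scale("C", MAJOR_STEPS)
descending = list(reversed(ascending))
print(ascending)                        # ['C', 'D', 'E', 'F', 'G', 'A', 'B', 'C']
print(scale("A", NATURAL_MINOR_STEPS))  # ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'A']
```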

Modulation

Modulation is the change from one key to another; also, the process by which this change is brought about.

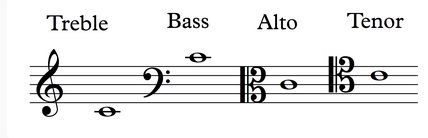

Treble

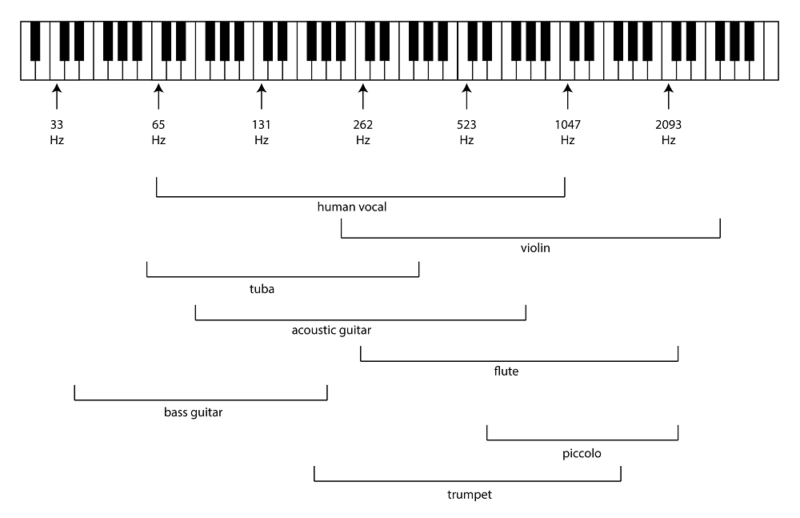

Treble refers to tones whose frequency or range is at the higher end of human hearing. In music this corresponds to “high notes”. The treble clef is often used to notate such notes. Examples of treble sounds are soprano voices, flute tones, piccolos, etc., having frequencies from 2048–16384 Hz.

Bass

Bass is the quality of sound whose frequency, range and pitch lie in the zone of 16-256 Hz. In simpler terms, a deep or low sound is described as having a bass quality. Bass instruments, therefore, are instruments that produce music, or pitches, in the low range.
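The two ranges given above are powers of two: bass runs from 2^4 to 2^8 Hz and treble from 2^11 to 2^14 Hz. This means the bass zone spans four octaves and the treble zone three. The Python sketch below labels a frequency using exactly those ranges. The label "middle" for the gap between them is an assumption of this sketch, since the article does not name it. `register` and `octaves_spanned` are hypothetical helpers.

```python
import math

# Frequency zones as given in this article's lexicon (in Hz).
BASS_RANGE = (16.0, 256.0)
TREBLE_RANGE = (2048.0, 16384.0)


def register(freq_hz: float) -> str:
    """Label a frequency as bass, treble or (assumed) middle using the ranges above."""
    if BASS_RANGE[0] <= freq_hz <= BASS_RANGE[1]:
        return "bass"
    if TREBLE_RANGE[0] <= freq_hz <= TREBLE_RANGE[1]:
        return "treble"
    if BASS_RANGE[1] < freq_hz < TREBLE_RANGE[0]:
        return "middle"
    return "outside the listed ranges"


def octaves_spanned(low_hz: float, high_hz: float) -> float:
    # Each octave doubles the frequency, so octaves = log2(high / low).
    return math.log2(high_hz / low_hz)


print(register(55.0), register(440.0), register(4000.0))              # bass middle treble
print(octaves_spanned(*BASS_RANGE), octaves_spanned(*TREBLE_RANGE))   # 4.0 3.0
```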

MP3

MP3 (MPEG Audio Layer III, where MPEG stands for Moving Picture Experts Group) is a sound file format that compresses a sound sequence into a very small file to enable digital storage and transmission. MP2 and MP3 are audio files, while MP4 files usually contain both video and audio (for example movies, video clips, etc.).

Vocal folds:

Actually, “vocal cords” is not really a good name, because they are not strings at all but rather flexible, stretchy material, more like a stretched piece of balloon; in fact, a membrane. The correct name is vocal folds. So vocal folds are the same as vocal cords.

_____

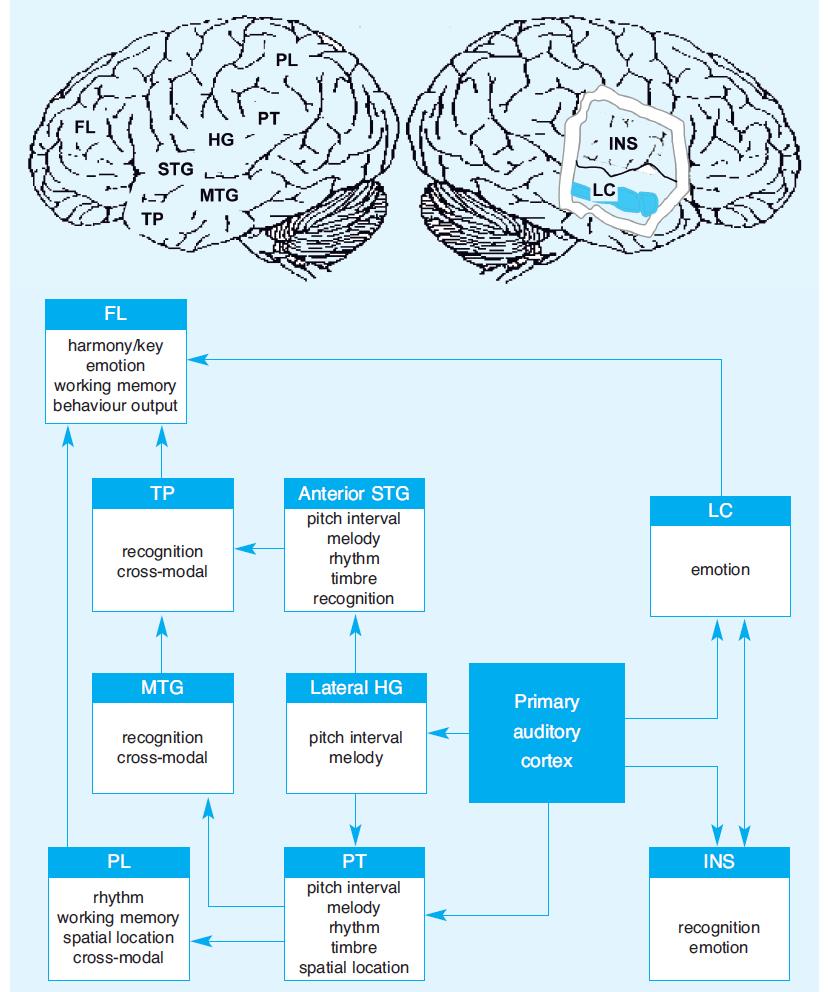

Abbreviations of brain areas used in this article:

FL = frontal lobe

HG = Heschl’s gyrus (site of primary auditory cortex)

INS = insula

LC = limbic circuit

MTG = middle temporal gyrus

PL = parietal lobe

PT = planum temporale

STG = superior temporal gyrus

TP = temporal pole

IPS = intraparietal sulcus

STS = superior temporal sulcus

SFG = superior frontal gyrus

IFG = inferior frontal gyrus

PMC = pre-motor cortex

OFC = orbitofrontal cortex

SMA = supplementary motor area

_____

_____

Introduction to music:

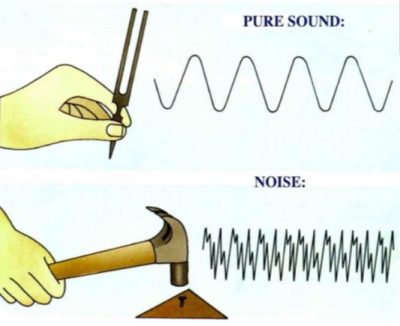

Music is a form of art. When different kinds of sounds are put together or mixed to form a new sound which is pleasing to human beings, it is called music. The word music is derived from the Greek mousike, which means the art of the Muses. In Greek mythology, the nine Muses were the goddesses who inspired literature, science and the arts, and who were the source of the knowledge embodied in the poetry, song lyrics and myths of Greek culture. Music is sound that has been organized by using rhythm, melody or harmony. If someone bangs saucepans while cooking, it makes noise; if a person bangs saucepans or pots in a rhythmic way, they are making a simple type of music. Music includes two things: people singing and sound from musical instruments. The person who makes music is called a musician. Not every sound can be categorized as music; a sound may be noise or music. Music is sound which is pleasing to the human ear, but noise is not. It is also possible that a sound which is music for one person may be noise for another. For example, loud rock music or hip-hop is music for teenagers and the younger generation, but it may be noise for the elderly.

_

Music is a fundamental part of our evolution; we probably sang before we spoke in syntactically guided sentences. Song is represented across the animal world: birds and whales produce sounds that are not always melodic to our ears but are still rich in semantically communicative functions. Not surprisingly, song is tied to a vast array of semiotics that pervade nature: calling attention to oneself, expanding oneself, selling oneself, deceiving others, reaching out to others and calling on others. The creative capability so inherent in music is a unique human trait. Music is strongly linked to motivation and to human social contact. Only a portion of people play music, but all can, and do, at least sing or hum a tune. Music is a core human experience and a generative process that reflects cognitive capabilities. It is intertwined with many basic human needs and is the result of thousands of years of neurobiological development. Music, as it has evolved in humankind, allows for unique expressions of social ties and the strengthening of relational connectedness.

_

Underlying the behavior of what we might call a basic proclivity to sing and to express music are appetitive urges, consummatory expression, drive and satisfaction (Dewey). Music, like food ingestion, is rooted in biology. Appetitive expression is the buildup of need, and consummatory experiences are its release and reward. Appetitive and consummatory musical experiences are embedded in culturally rich symbols of meaning. Music is linked to learning, and humans have a strong pedagogical predilection. Learning not only takes place in the development of direct musical skills, but in the connections between music and emotional experiences.

_

Different types of sounds can be heard in our surroundings. Some sounds are soothing, while others may be irritating or even hazardous. Music is sound organized in varying rhythmic, melodic and dynamic patterns performed on different instruments. Much research has been conducted on the effects of music on learning and on its therapeutic role during rehabilitation. Some studies have demonstrated that music affects not only humans but also animals, plants and bacterial growth.

Each type of sound has its own range of frequencies and power, affecting listeners in positive or negative ways. Organized sound in Western classical music is generally soothing to the human ear. On the other hand, very loud sounds or noise at levels above 85 dB may cause permanent hearing loss in people who are exposed to them continuously for more than eight hours. Unwanted sounds are unpleasant to humans and may cause stress and hypertension, as well as affecting the cognitive function of children.
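The 85 dB / eight-hour figure is usually applied with an "exchange rate": every fixed increase in level halves the safe exposure time. The Python sketch below assumes the NIOSH-style rule of 85 dB(A) for 8 hours with a 3 dB exchange rate. The article does not state which rule it follows; other standards, such as OSHA's 90 dB(A) with a 5 dB exchange rate, give different numbers. `permissible_hours` is a hypothetical helper.

```python
def permissible_hours(level_db: float, criterion_db: float = 85.0,
                      exchange_db: float = 3.0) -> float:
    """Daily exposure time (hours) allowed at level_db under an exchange-rate rule.

    Defaults assume 8 hours at 85 dB(A), halved for every 3 dB increase.
    """
    return 8.0 / 2 ** ((level_db - criterion_db) / exchange_db)


for level in (85, 88, 94, 100):
    print(level, "dB ->", permissible_hours(level), "hours")
```

Under these assumptions, 85 dB allows 8 hours, 88 dB allows 4 hours and 100 dB allows only 15 minutes.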

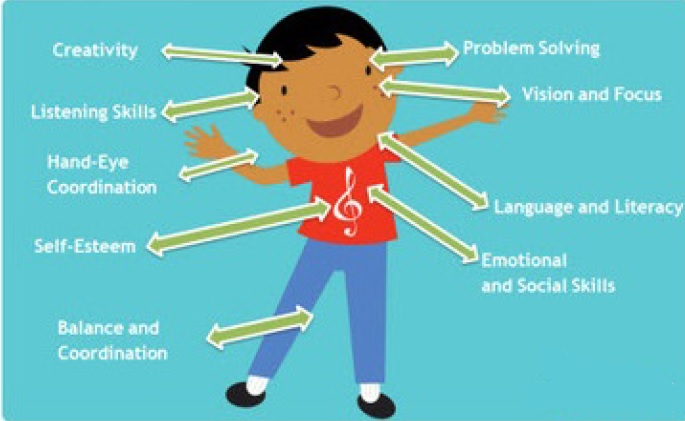

Sound in the form of music has been used positively in enhancing brain plasticity. Research has shown that listening to particular types of music can aid learning and encourage creativity in humans. Music has been used in medical treatment such as improving mental illness, reducing anxiety and stress during medical treatments, enhancing spatial-temporal reasoning, generating higher brain functional skills in reading, literacy, mathematical abilities and enhancing emotional intelligence. Sound stimulation has even been used in the sterilization process and the growth of cells and bacteria.

_

There are four things which music has most of the time:

- Music often has pitch. This means high and low notes. Tunes are made of notes that go up or down or stay on the same pitch.

- Music often has rhythm. Rhythm is the way the musical sounds and silences are put together in a sequence. Every tune has a rhythm that can be tapped. Music usually has a regular beat.

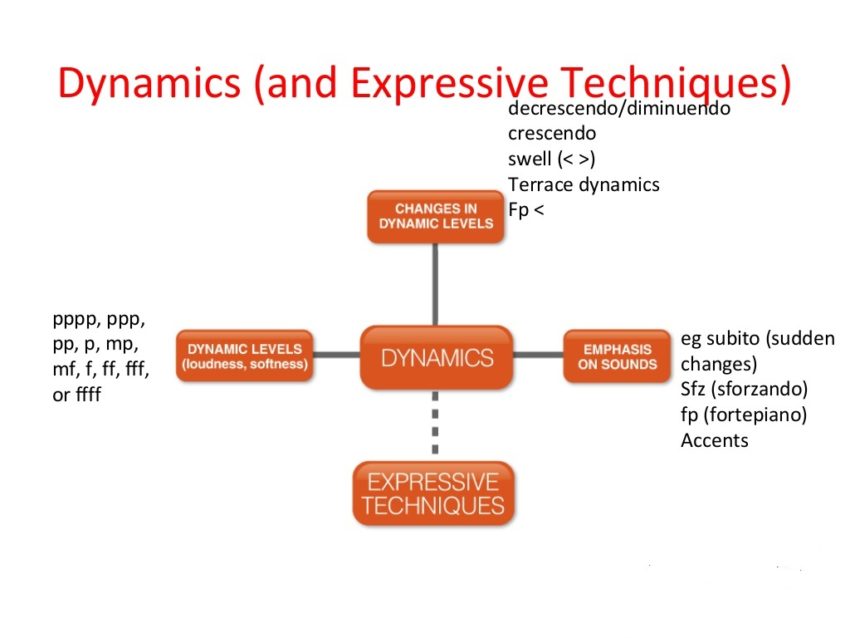

- Music often has dynamics. This means whether it is quiet or loud or somewhere in between.

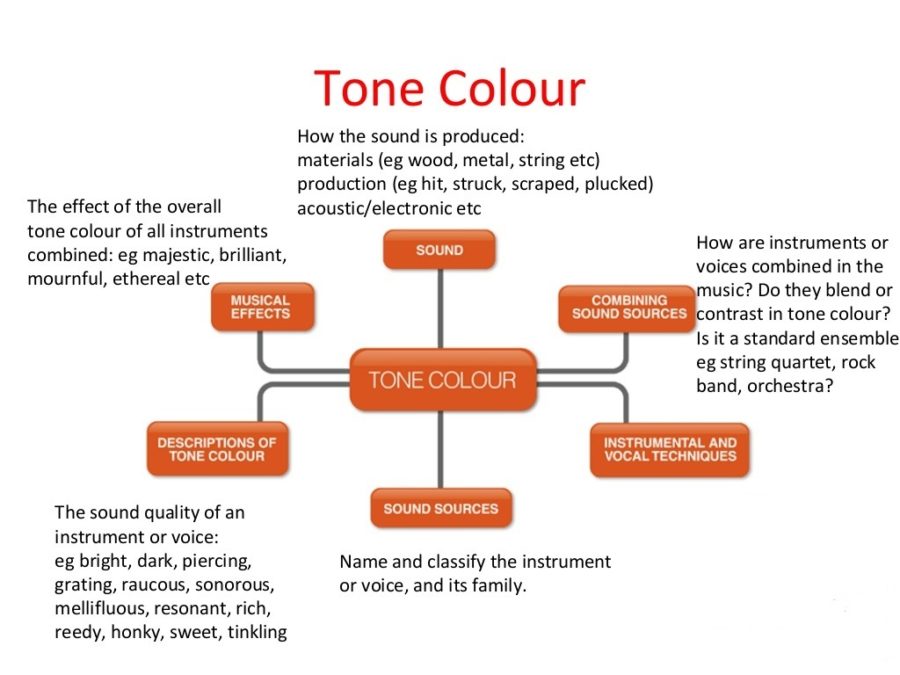

- Music often has timbre. The “timbre” of a sound is its characteristic tone colour or quality. The sound might be harsh, gentle, dry, warm, or something else. Timbre is what makes a clarinet sound different from an oboe playing the same note, and what makes one person’s voice sound different from another person’s.
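One major contributor to timbre is a sound's harmonic content, alongside its attack and envelope. Two sounds with the same fundamental can carry very different amounts of energy in each harmonic. The Python sketch below synthesizes two hypothetical harmonic recipes at 200 Hz. It then recovers each harmonic's amplitude by correlating the signal with a sine and cosine at that harmonic, which amounts to a single-frequency DFT. The sample rate and recipes are assumptions; the "bright" recipe is only an idealized odd-harmonic emphasis, not a measured instrument.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed)


def synth(f0, amps, n):
    """Sum of harmonics k*f0 with the given amplitudes (amps[0] is the fundamental)."""
    return [sum(a * math.sin(2 * math.pi * k * f0 * i / SAMPLE_RATE)
                for k, a in enumerate(amps, start=1)) for i in range(n)]


def harmonic_profile(samples, f0, n_harmonics):
    """Estimate each harmonic's amplitude with a single-frequency DFT at k*f0.

    Exact (up to rounding) when the window holds a whole number of periods.
    """
    n = len(samples)
    profile = []
    for k in range(1, n_harmonics + 1):
        w = 2 * math.pi * k * f0 / SAMPLE_RATE
        re = sum(x * math.cos(w * i) for i, x in enumerate(samples))
        im = sum(x * math.sin(w * i) for i, x in enumerate(samples))
        profile.append(2 * math.hypot(re, im) / n)
    return profile


n_samples = 400  # 0.05 s: exactly 10 periods of 200 Hz at 8 kHz
mellow = synth(200, [1.0, 0.2, 0.05], n_samples)
bright = synth(200, [1.0, 0.0, 0.6], n_samples)
print([round(a, 2) for a in harmonic_profile(mellow, 200, 3)])  # [1.0, 0.2, 0.05]
print([round(a, 2) for a in harmonic_profile(bright, 200, 3)])  # [1.0, 0.0, 0.6]
```

Both signals have the same pitch, but their harmonic profiles differ. That difference is what the ear hears as a difference in tone colour.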

_

Music denotes a particular type of human sound production, and those sounds are associated with the human voice or with human movement. These sounds have functions involving particular aspects of communication in particular social and cultural situations. Music is an art form and cultural activity whose medium is sound organized in time. General definitions of music include common elements such as pitch (which governs melody and harmony), rhythm (and its associated concepts tempo, meter and articulation), dynamics (loudness and softness), and the sonic qualities of timbre and texture (which are sometimes termed the “color” of a musical sound). Different styles or types of music may emphasize, de-emphasize or omit some of these elements. Music is performed with a vast range of instruments and vocal techniques ranging from singing to rapping; there are solely instrumental pieces, solely vocal pieces (such as songs without instrumental accompaniment) and pieces that combine singing and instruments. In its most general form, the activities describing music as an art form or cultural activity include the creation of works of music (songs, tunes, symphonies and so on), the criticism of music, the study of the history of music, and the aesthetic examination of music. Ancient Greek and Indian philosophers defined music as tones ordered horizontally as melodies and vertically as harmonies. Common sayings such as “the harmony of the spheres” and “it is music to my ears” point to the notion that music is often ordered and pleasant to listen to. However, 20th-century composer John Cage thought that any sound can be music, saying, for example, “There is no noise, only sound.”

_

Music is an art concerned with combining vocal or instrumental sounds for beauty of form or emotional expression, usually according to cultural standards of rhythm, melody and, in most Western music, harmony. Both the simple folk song and the complex electronic composition belong to the same activity, music. Both are humanly engineered; both are conceptual and auditory, and these factors have been present in music of all styles and in all periods of history, throughout the world. The creation, performance, significance, and even the definition of music vary according to culture and social context. Indeed, throughout history, some new forms or styles of music have been criticized as “not being music”, including Beethoven’s Grosse Fuge string quartet in 1825, early jazz in the beginning of the 1900s and hardcore punk in the 1980s. There are many types of music, including popular music, traditional music, art music, music written for religious ceremonies and work songs such as chanteys. Music ranges from strictly organized compositions, such as Classical symphonies from the 1700s and 1800s, through spontaneously played improvisational music such as jazz, to avant-garde styles of chance-based contemporary music from the 20th and 21st centuries.

_

In many cultures, music is an important part of people’s way of life, as it plays a key role in religious rituals, rite of passage ceremonies (e.g., graduation and marriage), social activities (e.g., dancing) and cultural activities ranging from amateur karaoke singing to playing in an amateur funk band or singing in a community choir. People may make music as a hobby, like a teen playing cello in a youth orchestra, or work as a professional musician or singer. The music industry includes the individuals who create new songs and musical pieces (such as songwriters and composers), individuals who perform music (which include orchestra, jazz band and rock band musicians, singers and conductors), individuals who record music (music producers and sound engineers), individuals who organize concert tours, and individuals who sell recordings, sheet music, and scores to customers.

_____

_____

Definitions of music:

There is no simple definition of music which covers all cases. It is an art form, and opinions come into play. Music is whatever people think is music. A different approach is to list the qualities music must have, such as sound which has rhythm, melody, pitch, timbre, etc. These and other attempts either fail to capture all aspects of music or leave out examples which definitely are music. Confucius treated music as a department of ethics; he was concerned with adjusting specific notes for their presumed effect on human beings. Plato observed the association between the personality of a man and the music he played. For Schopenhauer, “like other arts music is not copy of idea, it is idea itself, complete in itself”. Other forms of art articulate imitations, but music is powerful, infallible and piercing. It is connected to human feelings and can “restore to us all the emotions of our inmost nature, but entirely without reality and far removed from their pain.”

_

Various definitions of music:

- an art of sound in time that expresses ideas and emotions in significant forms through the elements of rhythm, melody, harmony, and color.

- an art form consisting of sequences of sounds in time, esp tones of definite pitch organized melodically, harmonically, rhythmically and according to tone colour

- the science or art of ordering tones or sounds in succession, in combination, and in temporal relationships to produce a composition having unity and continuity

- vocal, instrumental, or mechanical sounds having rhythm, melody, or harmony

_

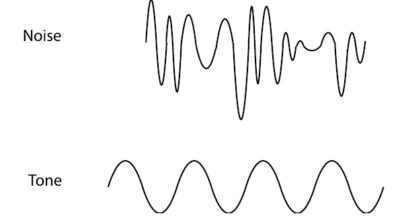

Organized sound:

An often-cited definition of music is that it is “organized sound”, a term originally coined by modernist composer Edgard Varèse in reference to his own musical aesthetic. Varèse’s concept of music as “organized sound” fits into his vision of “sound as living matter” and of “musical space as open rather than bounded”. He conceived the elements of his music in terms of “sound-masses”, likening their organization to the natural phenomenon of crystallization. Varèse thought that “to stubbornly conditioned ears, anything new in music has always been called noise”, and he posed the question, “what is music but organized noises?”

_

The fifteenth edition of the Encyclopedia Britannica states that “while there are no sounds that can be described as inherently unmusical, musicians in each culture have tended to restrict the range of sounds they will admit.” A human organizing element is often felt to be implicit in music (sounds produced by non-human agents, such as waterfalls or birds, are often described as “musical”, but perhaps less often as “music”). The composer R. Murray Schafer (1996, 284) states that the sound of classical music “has decays; it is granular; it has attacks; it fluctuates, swollen with impurities—and all this creates a musicality that comes before any ‘cultural’ musicality.” However, in the view of semiologist Jean-Jacques Nattiez, “just as music is whatever people choose to recognize as such, noise is whatever is recognized as disturbing, unpleasant, or both” (Nattiez 1990, 47–48).

_

The Concise Oxford Dictionary defines music as “the art of combining vocal or instrumental sounds (or both) to produce beauty of form, harmony, and expression of emotion” (Concise Oxford Dictionary 1992). However, the music genres known as noise music and musique concrète, for instance, challenge these ideas about what constitutes music’s essential attributes by using sounds not widely considered as musical, like randomly produced electronic distortion, feedback, static, cacophony, and compositional processes using indeterminacy. Webster’s definition of music is another typical example: “the science or art of ordering tones or sounds in succession, in combination, and in temporal relationships to produce a composition having unity and continuity” (Webster’s Collegiate Dictionary, online edition).

_

Ben Watson points out that Ludwig van Beethoven’s Grosse Fuge (1825) “sounded like noise” to his audience at the time. Indeed, Beethoven’s publishers persuaded him to remove it from its original setting as the last movement of a string quartet. He did so, replacing it with a sparkling Allegro. They subsequently published it separately. Musicologist Jean-Jacques Nattiez considers the difference between noise and music nebulous, explaining that “The border between music and noise is always culturally defined—which implies that, even within a single society, this border does not always pass through the same place; in short, there is rarely a consensus … By all accounts there is no single and intercultural universal concept defining what music might be” (Nattiez 1990, 48, 55). “Music, often an art/entertainment, is a total social fact whose definitions vary according to era and culture,” according to Jean Molino. It is often contrasted with noise.

_

Subjective experience:

This approach to the definition focuses not on the construction but on the experience of music. An extreme statement of the position has been articulated by the Italian composer Luciano Berio: “Music is everything that one listens to with the intention of listening to music”. This approach permits the boundary between music and noise to change over time as the conventions of musical interpretation evolve within a culture, to be different in different cultures at any given moment, and to vary from person to person according to their experience and proclivities. It is further consistent with the subjective reality that even what would commonly be considered music is experienced as nonmusic if the mind is concentrating on other matters and thus not perceiving the sound’s essence as music.

_

In his 1983 book, Music as Heard, which sets out from the phenomenological position of Husserl, Merleau-Ponty, and Ricœur, Thomas Clifton defines music as “an ordered arrangement of sounds and silences whose meaning is presentative rather than denotative. . . . This definition distinguishes music, as an end in itself, from compositional technique, and from sounds as purely physical objects.” More precisely, “music is the actualization of the possibility of any sound whatever to present to some human being a meaning which he experiences with his body—that is to say, with his mind, his feelings, his senses, his will, and his metabolism”. It is therefore “a certain reciprocal relation established between a person, his behavior, and a sounding object”. Clifton accordingly differentiates music from non-music on the basis of the human behavior involved, rather than on either the nature of compositional technique or of sounds as purely physical objects. Consequently, the distinction becomes a question of what is meant by musical behavior: “a musically behaving person is one whose very being is absorbed in the significance of the sounds being experienced.” However, “It is not altogether accurate to say that this person is listening to the sounds. First, the person is doing more than listening: he is perceiving, interpreting, judging, and feeling. Second, the preposition ‘to’ puts too much stress on the sounds as such. Thus, the musically behaving person experiences musical significance by means of, or through, the sounds”.

In this framework, Clifton finds that there are two things that separate music from non-music: (1) musical meaning is presentative, and (2) music and non-music are distinguished in the idea of personal involvement. “It is the notion of personal involvement which lends significance to the word ordered in this definition of music”. This is not to be understood, however, as a sanctification of extreme relativism, since “it is precisely the ‘subjective’ aspect of experience which lured many writers earlier in this century down the path of sheer opinion-mongering. Later on this trend was reversed by a renewed interest in ‘objective,’ scientific, or otherwise non-introspective musical analysis. But we have good reason to believe that a musical experience is not a purely private thing, like seeing pink elephants, and that reporting about such an experience need not be subjective in the sense of it being a mere matter of opinion”. Clifton’s task, then, is to describe musical experience and the objects of this experience which, together, are called “phenomena,” and the activity of describing phenomena is called “phenomenology”. It is important to stress that this definition of music says nothing about aesthetic standards.

______

To arrive at a working definition of music, a few axioms are needed.

1. Music does not exist unless it is heard by someone, whether out loud or inside someone’s head. Sounds which no-one hears, even a recording of music played out of human earshot, are only potentially, not really, music.

2. Although the original source of musical sound does not have to be human, music is always the result of some kind of human mediation, intention or organisation through production practices such as composition, arrangement, performance or presentation. In other words, to become music, one or more humans have to organise sounds (that may or may not be considered musical in themselves) into sequentially, and sometimes synchronically, ordered patterns. For example, the sound of a smoke alarm is unlikely to be regarded in itself as music, but sampled and repeated over a drum track, or combined with sounds of screams and conflagration edited in at certain points, it can become music.

3. If points 1 and 2 are valid, then music is a matter of interhuman communication.

4. Like speech, music is mediated as sound but, unlike speech, music’s sounds do not need to include words, even though one of the most common forms of musical expression around the world entails the singing, chanting or reciting of words. Another way of understanding the distinction is to remember that while the prosodic, or ‘musical’, aspects of speech (tonal, durational and metric elements such as inflexion, intonation, accentuation, rhythm and periodicity) are important to the communication of the spoken word, a wordless utterance consisting only of prosodic elements ceases by definition to be speech (it has no words) and is more likely to be understood as ‘music’.

5. Although music is closely related to human gesture and movement (for example, dancing, marching, caressing, jumping), human gesture and movement can exist without music, even if music cannot be produced without some sort of human gesture or movement.

6. If points 4 and 5 are valid, music ‘is’ no more gesture or movement than it ‘is’ speech, even though it is intimately associated with all three.

7. If music involves the human organisation and perception of non-verbal sound (points 1-6, above), and if it is closely associated with gesture and movement, it is close to preverbal modes of sensory perception and, consequently, to the mediation of somatic (corporeal) and affective (emotional) aspects of human cognition.

8. Although music is a universal human phenomenon, and even though there may be a few general bio-acoustic universals of musical expression, the same sounds or combinations of sounds are not necessarily intended, heard, understood or used in the same way in different musical cultures.

On the basis of the eight points just presented, some authors have posited a working definition of music:

Music is that form of interhuman communication in which humanly organised, non-verbal sound is perceived as vehiculating primarily affective (emotional) and/or gestural (corporeal) patterns of cognition.

_____

Basic tenets of music:

- Concerted simultaneity and collective identity

Musical communication can take place between:

- an individual and himself/herself;

- two individuals;

- an individual and a group;

- a group and an individual;

- individuals within the same group;

- members of one group and those of another.

Particularly musical (and choreographic) states of communication are those involving a concerted simultaneity of sound events or movements, that is, between a group and its members, between a group and an individual or between two groups. While you can sing, play, dance, talk, paint, sculpt and write to or for yourself and for others, it is very rare for several people to simultaneously talk, write, paint or sculpt in time with each other. In fact, as soon as speech is subordinated to temporal organisation of its prosodic elements (rhythm, accentuation, relative pitch, etc.), it becomes intrinsically musical, as is evident from the choral character of rhythmically chanted slogans in street demonstrations or from the role of the choir in Ancient Greek drama. Thanks to this factor of concerted simultaneity, music and dance are particularly suited to expressing collective messages of affective and corporeal identity of individuals in relation to themselves, each other, and their social, as well as physical, surroundings.

- Intra- and extrageneric

Direct imitations of, or reference to, sound outside the framework of musical discourse are relatively uncommon elements in most European and North American music. In fact, musical structures often seem to be objectively related to either: [a] nothing outside themselves; or [b] their occurrence in similar guise in other music; or [c] their own context within the piece of music in which they (already) occur. At the same time, it would be silly to treat music as a self-contained system of sound combinations because changes in musical style are found in conjunction with (accompanying, preceding, following) change in the society and culture of which the music is part.

The contradiction between ‘music only refers to music’ (the intrageneric notion) and ‘music is related to society’ (the extrageneric notion) is non-antagonistic. A recurrent symptom observed when studying how musics vary inside society and from one society to another in time or place is the way in which new means of musical expression are incorporated into the main body of any given musical tradition from outside the framework of its own discourse. These ‘intonation crises’ (Assafyev 1976: 100-101) work in a number of different ways. They can:

-‘refer’ to other musical codes, by acting as social connotors of what sort of people use those ‘other’ sounds in which situations;

-reflect changes in sound technology, acoustic conditions, or the soundscape and changes in collective self-perception accompanying these developments, for example, from clavichord to grand piano, from bagpipe to accordion, from rural to urban blues, from rock music to technopop.

-reflect changes in class structure or other notable demographic change, such as reggae influences on British rock, or the shift in dominance of US popular music (1930s – 1960s) from Broadway shows to the more rock, blues and country-based styles from the US South and West.

-act as a combination of any of the three processes just mentioned.

- Musical ‘universals’

Cross-cultural universals of musical code are bioacoustic. While such relationships between musical sound and the human body are at the basis of all music, the majority of musical communication is nevertheless culturally specific. The basic bioacoustic universals of musical code can be summarised as the following relationships:

-between [a] musical tempo (pulse) and [b] heartbeat (pulse) or the speed of breathing, walking, running and other bodily movement. This means that no-one can musically sleep in a hurry, stand still while running, etc.

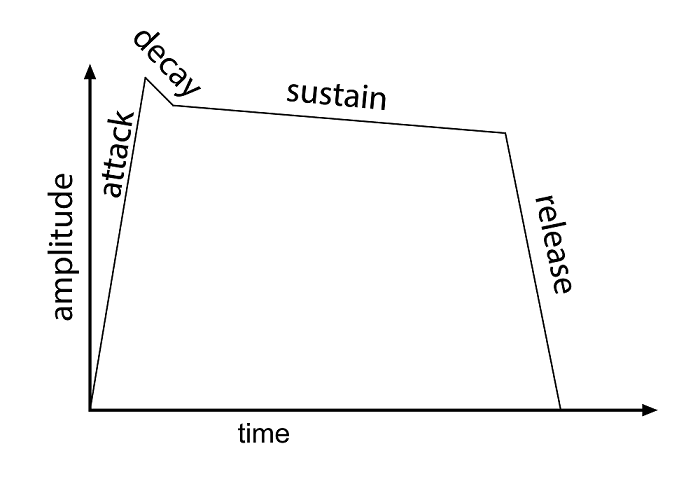

-between [a] musical loudness and timbre (attack, envelope, decay, transients) and [b] certain types of physical activity. This means no-one can make gentle or ‘caressing’ kinds of musical statement by striking hard objects sharply, that it is counterproductive to yell jerky lullabies at breakneck speed and that no-one uses legato phrasing or soft, rounded timbres for hunting or war situations.

-between [a] speed and loudness of tone beats and [b] the acoustic setting. This means that quick, quiet tone beats are indiscernible if there is a lot of reverberation and that slow, long, loud ones are difficult to produce and sustain acoustically if there is little or no reverberation. This is why a dance or pub rock band is well advised to take its acoustic space around with it in the form of echo effects to overcome all the carpets and clothes that would otherwise damp the sounds the band produces.

-between [a] musical phrase lengths and [b] the capacity of the human lung. This means that few people can sing or blow and breathe in at the same time. It also implies that musical phrases tend to last between two and ten seconds.

The general areas of connotation just mentioned (acoustic situation, movement, speed, energy and non-musical sound) are all in a bioacoustic relationship to the musical parameters cited (pulse, volume, phrase duration and timbre). These relationships may well be cross-cultural, but that does not mean that emotional attitudes towards such phenomena as large spaces (cold and lonely versus free and open), hunting (exhilarating versus cruel) or hurrying (pleasant versus unpleasant) will be the same even inside one and the same culture, let alone between cultures. One reason for such discrepancy is that the musical parameters in the list of ‘universals’ (pulse, volume, general phrase duration and certain aspects of timbre and pitch) do not include the way in which rhythmic, metric, timbral, tonal, melodic, instrumentational or harmonic parameters are organised in relation to each other inside the musical discourse. Such musical organisation presupposes some sort of social organisation and cultural context before it can be created, understood or otherwise invested with meaning. In other words, only extremely general bioacoustic types of connotation can be considered cross-cultural universals of music. Therefore, even if musical and linguistic cultural boundaries do not necessarily coincide, some authors argue that it is fallacious to regard music as a universal language.
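The phrase-length relationship in the list of bioacoustic universals (phrases of roughly two to ten seconds) can be checked with simple arithmetic: a phrase of B beats at a tempo of T beats per minute lasts B × 60 / T seconds. The Python sketch below is only an illustration; the bar counts, the tempos and the use of the 2-10 second window as a test are assumptions built on the rough figure above, not a rule from the source.

```python
def phrase_seconds(bars: int, beats_per_bar: int, bpm: float) -> float:
    """Duration in seconds of a phrase of `bars` bars at `bpm` beats per minute."""
    beats = bars * beats_per_bar
    return beats * 60.0 / bpm


def fits_one_breath(seconds: float, low: float = 2.0, high: float = 10.0) -> bool:
    """True if the duration falls inside the rough 2-10 second window (an assumption)."""
    return low <= seconds <= high


# Hypothetical phrases in 4/4 time: (number of bars, tempo in BPM)
for bars, bpm in [(2, 60), (4, 120), (4, 60), (1, 180)]:
    d = phrase_seconds(bars, 4, bpm)
    print(f"{bars} bar(s) of 4/4 at {bpm} BPM: {d:.1f} s, within 2-10 s: {fits_one_breath(d)}")
```

Two bars at 60 BPM and four bars at 120 BPM both last 8 seconds, inside the cited range; four bars at 60 BPM last 16 seconds, outside it.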

_____

Music as communication:

Music is a form of communication, although it does not employ linguistic symbols or signs. It is considered a closed system because it does not refer to objects or concepts outside the realm of music. This sets music apart from other art forms and sciences. Mathematics is another closed system, but falls short of music in that it communicates only intellectual meanings, whereas music also conveys emotional and aesthetic meanings. These meanings, however, are not universal, as comparative musicologists have discovered (Meyer, 1956). Although musical meanings do not seem to be shared across cultures, elements of music such as pitch and rhythm are treated across cultures as abstract, enigmatic symbols, which are then invested with meaning according to the musical style and experience that a person or culture has acquired (Lefevre, 2004).

Music is a true form of communication. A 1990 study found that 80% of adults surveyed described experiencing physical responses to music, such as laughter, tears, and thrills. A 1995 study also revealed that 70% of young adults said they enjoyed music because of the emotions it evoked (Panksepp & Bernatzky, 2002). A further study, performed at Cornell University in 1997, measured the physiological responses of subjects listening to several pieces of music that were generally thought to convey particular emotions (Krumhansl, 1997). Each subject's physiological responses were consistent with the emotion the music was expected to convey. When a person experiences thrills while listening to music, the same pleasure centers of the brain are activated as when eating chocolate, having sex or taking cocaine (Blood & Zatorre, 2001).

_______

Music and dance:

It is unlikely that any human society (at any rate until the invention of puritanism) has denied itself the excitement and pleasure of dancing. Like cave painting, the first purpose of dance is probably ritual – appeasing a nature spirit or accompanying a rite of passage. But losing oneself in rhythmic movement with other people is an easy form of intoxication. Pleasure can never have been far away. Rhythm, indispensable in dancing, is also a basic element of music. It is natural to beat out the rhythm of the dance with sticks. It is natural to accompany the movement of the dance with rhythmic chanting. Dance and music begin as partners in the service of ritual. Music is sound, composed in certain rhythms to express people’s feelings or to convey feelings to others. Dance is physical movement also used to express joy or other intense feelings. It can be anything from ballet to break-dance.

_______

The story of music is the story of humans:

Where did music come from? How did music begin? Did our early ancestors first start by beating things together to create rhythm, or use their voices to sing? What types of instruments did they use? Has music always been important in human society, and if so, why? These are some of the questions explored in a recent Hypothesis and Theory article published in Frontiers in Sociology. The answers reveal that the story of music is, in many ways, the story of humans. So, what is music? This is difficult to answer, as everyone has their own idea. “Sound that conveys emotion,” is what Jeremy Montagu, of the University of Oxford and author of the article, describes as his. A mother humming or crooning to calm her baby would probably count as music, using this definition, and this simple music probably predated speech.

But where do we draw the line between music and speech? You might think that rhythm, pattern and controlling pitch are important in music, but these things can also apply when someone recites a sonnet or speaks with heightened emotion. Montagu concludes that “each of us in our own way can say ‘Yes, this is music’, and ‘No, that is speech’.”

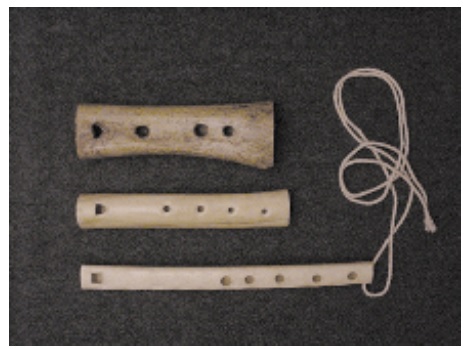

So, when did our ancestors begin making music? If we take singing, then controlling pitch is important. Scientists have studied the fossilized skulls and jaws of early apes, to see if they were able to vocalize and control pitch. About a million years ago, the common ancestor of Neanderthals and modern humans had the vocal anatomy to “sing” like us, but it’s impossible to know if they did. Another important component of music is rhythm. Our early ancestors may have created rhythmic music by clapping their hands. This may be linked to the earliest musical instruments, when somebody realized that smacking stones or sticks together doesn’t hurt your hands as much. Many of these instruments are likely to have been made from soft materials like wood or reeds, and so haven’t survived. What have survived are bone pipes. Some of the earliest ever found are made from swan and vulture wing bones and are between 39,000 and 43,000 years old. Other ancient instruments have been found in surprising places. For example, there is evidence that people struck stalactites or “rock gongs” in caves dating from 12,000 years ago, with the caves themselves acting as resonators for the sound.

So, we know that music is old, and may have been with us from when humans first evolved. But why did it arise and why has it persisted? There are many possible functions for music. One is dancing. It is unknown if the first dancers created a musical accompaniment, or if music led to people moving rhythmically. Another obvious reason for music is entertainment, which can be personal or communal. Music can also be used for communication, often over large distances, using instruments such as drums or horns. Yet another reason for music is ritual, and virtually every religion uses music.

However, the major reason that music arose and persists may be that it brings people together. “Music leads to bonding, such as bonding between mother and child or bonding between groups,” explains Montagu. “Music keeps workers happy when doing repetitive and otherwise boring work, and helps everyone to move together, increasing the force of their work. Dancing or singing together before a hunt or warfare binds participants into a cohesive group.” He concludes: “It has even been suggested that music, in causing such bonding, created not only the family but society itself, bringing individuals together who might otherwise have led solitary lives.”

______

What is the oldest known piece of music?

The history of music is as old as humanity itself. Archaeologists have found primitive flutes made of bone and ivory dating back as far as 43,000 years, and it’s likely that many ancient musical styles have been preserved in oral traditions. When it comes to specific songs, however, the oldest known examples are much more recent. The earliest fragment of musical notation is found on a 4,000-year-old Sumerian clay tablet, which includes instructions and tunings for a hymn honoring the ruler Lipit-Ishtar. But for the title of oldest extant song, most historians point to “Hurrian Hymn No. 6,” an ode to the goddess Nikkal that was written in cuneiform by the ancient Hurrians sometime around the 14th century B.C. The clay tablets containing the tune were excavated in the 1950s from the ruins of the city of Ugarit in Syria. Along with a near-complete set of musical notations, they also include specific instructions for how to play the song on a type of nine-stringed lyre. “Hurrian Hymn No. 6” is considered the world’s earliest known melody, but the oldest musical composition to have survived in its entirety is a first century A.D. Greek tune known as the “Seikilos Epitaph.” The song was found engraved on an ancient marble column used to mark a woman’s gravesite in Turkey. “I am a tombstone, an image,” reads an inscription. “Seikilos placed me here as an everlasting sign of deathless remembrance.” The column also includes musical notation as well as a short set of lyrics that read: “While you live, shine / Have no grief at all / Life exists only for a short while / And time demands its toll.”

______

Musical composition:

“Composition” is the act or practice of creating a song, an instrumental music piece, a work with both singing and instruments, or another type of music. In many cultures, including Western classical music, the act of composing also includes the creation of music notation, such as a sheet music “score”, which is then performed by the composer or by other singers or musicians. In popular music and traditional music, the act of composing, which is typically called songwriting, may involve the creation of a basic outline of the song, called the lead sheet, which sets out the melody, lyrics and chord progression. In classical music, the composer typically orchestrates his or her own compositions, but in musical theatre and in pop music, songwriters may hire an arranger to do the orchestration. In some cases, a songwriter may not use notation at all, and instead compose the song in her mind and then play or record it from memory. In jazz and popular music, notable recordings by influential performers carry the weight that written scores carry in classical music.

Even when music is notated relatively precisely, as in classical music, there are many decisions that a performer has to make, because notation does not specify all of the elements of music precisely. The process of deciding how to perform music that has been previously composed and notated is termed “interpretation”. Different performers’ interpretations of the same work of music can vary widely, in terms of the tempos that are chosen and the playing or singing style or phrasing of the melodies. Composers and songwriters who present their own music are interpreting their songs, just as much as those who perform the music of others. The standard body of choices and techniques present at a given time and a given place is referred to as performance practice, whereas interpretation is generally used to mean the individual choices of a performer.

Although a musical composition often uses musical notation and has a single author, this is not always the case. A work of music can have multiple composers, which often occurs in popular music when a band collaborates to write a song, or in musical theatre, when one person writes the melodies, a second person writes the lyrics, and a third person orchestrates the songs. In some styles of music, such as the blues, a composer/songwriter may create, perform and record new songs or pieces without ever writing them down in music notation. A piece of music can also be composed with words, images, or computer programs that explain or notate how the singer or musician should create musical sounds. Examples range from avant-garde music that uses graphic notation, to text compositions such as Aus den sieben Tagen, to computer programs that select sounds for musical pieces. Music that makes heavy use of randomness and chance is called aleatoric music, and is associated with contemporary composers active in the 20th century, such as John Cage, Morton Feldman, and Witold Lutosławski. A more commonly known example of chance-based music is the sound of wind chimes jingling in a breeze.

The study of composition has traditionally been dominated by examination of the methods and practice of Western classical music, but the definition of composition is broad enough to include the creation of popular and traditional songs and instrumental pieces, as well as spontaneously improvised works like those of free jazz performers and African percussionists such as Ewe drummers. Although in the 2000s composition is considered to consist of the manipulation of every aspect of music (harmony, melody, form, rhythm, and timbre), an older and narrower definition holds that composition consists in two things only. The first is the ordering and disposing of several sounds…in such a manner that their succession pleases the ear. This is what the Ancients called melody. The second is the rendering audible of two or more simultaneous sounds in such a manner that their combination is pleasant. This is what we call harmony, and it alone merits the name of composition.

Musical technique is the ability of instrumental and vocal musicians to exert optimal control of their instruments or vocal cords to produce precise musical effects. Improving technique generally entails practicing exercises that improve muscular sensitivity and agility. To improve technique, musicians often practice fundamental patterns of notes such as the natural, minor, major, and chromatic scales, minor and major triads, dominant and diminished sevenths, formula patterns and arpeggios. For example, triads and sevenths teach how to play chords with accuracy and speed. Scales teach how to move quickly and gracefully from one note to another (usually by step). Arpeggios teach how to play broken chords over larger intervals. Many of these components of music are found in compositions, for example, a scale is a very common element of classical and romantic era compositions. Heinrich Schenker argued that musical technique’s “most striking and distinctive characteristic” is repetition. Works known as études (meaning “study”) are also frequently used for the improvement of technique.
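Each of the scales and chords named above is a fixed pattern of semitone steps above a root note. The Python sketch below spells them out for any root. It assumes twelve-tone equal temperament and, for simplicity, spells every black key as a sharp, so a diminished seventh on B comes out as B, D, F, G# rather than the textbook B, D, F, A♭. The function and dictionary names are illustrative only.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Scale step patterns in semitones (2 = whole step, 1 = half step)
SCALE_STEPS = {
    "major": [2, 2, 1, 2, 2, 2, 1],
    "natural minor": [2, 1, 2, 2, 1, 2, 2],
    "chromatic": [1] * 12,
}

# Chords as semitone offsets above the root
CHORD_INTERVALS = {
    "major triad": [0, 4, 7],
    "minor triad": [0, 3, 7],
    "dominant seventh": [0, 4, 7, 10],
    "diminished seventh": [0, 3, 6, 9],
}


def scale(root: str, kind: str) -> list[str]:
    """One octave of the scale, ending on the root an octave higher."""
    pc = NOTE_NAMES.index(root)
    notes = [root]
    for step in SCALE_STEPS[kind]:
        pc = (pc + step) % 12
        notes.append(NOTE_NAMES[pc])
    return notes


def chord(root: str, kind: str) -> list[str]:
    """The chord tones, stacked upward from the root."""
    pc = NOTE_NAMES.index(root)
    return [NOTE_NAMES[(pc + i) % 12] for i in CHORD_INTERVALS[kind]]


def arpeggio(root: str, kind: str, octaves: int = 2) -> list[str]:
    """A broken chord: chord tones one at a time over several octaves, ending on the root."""
    return chord(root, kind) * octaves + [root]


print(scale("C", "major"))  # ['C', 'D', 'E', 'F', 'G', 'A', 'B', 'C']
print(chord("G", "dominant seventh"))  # ['G', 'B', 'D', 'F']
```

The same step pattern transposed to a new root gives the same scale in a new key, which is why these patterns are practised in every key.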

_______

Musical forms:

Musical form is the wider perspective of a piece of music. It describes the layout of a composition as divided into sections, akin to the layout of a city divided into neighborhoods. Musical works may be classified into two formal types: A and A/B. Compositions exist in a boundless variety of styles, instrumentation, length and content–all the factors that make them singular and personal. Yet, underlying this individuality, any musical work can be interpreted as either an A or A/B-form. An A-form emphasizes continuity and prolongation. It flows, unbroken, from beginning to end. In a unified neighborhood, wander down any street and it will look very similar to any other. Similarly, in an A-form, the music has a recognizable consistency. The other basic type is the A/B-form. Whereas A-forms emphasize continuity, A/B-forms emphasize contrast and diversity. A/B-forms are clearly broken up into sections, which differ in aurally immediate ways. The sections are often punctuated by silences or resonant pauses, making them more clearly set off from one another. Here, you travel among neighborhoods that are noticeably different from one another: the first might be a residential neighborhood, with tree-lined streets and quiet cul-de-sacs; the next an industrial neighborhood, with warehouses and smoke-stacks. The prime articulants of form are rhythm and texture. If the rhythm and texture remain constant, you will tend to perceive an A-form. If there is a marked change in rhythm or texture, you will tend to perceive a point of contrast–a boundary, from which you pass into a new neighborhood. This will indicate an A/B-form.
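The rule of thumb in this paragraph (steady rhythm and texture suggest an A-form, a marked change suggests an A/B-form) can be mimicked with a toy Python sketch. Everything in it is an assumption for illustration: each bar is reduced to two hypothetical numbers, the count of note onsets (standing in for rhythm) and the number of simultaneous parts (standing in for texture), and the thresholds that count as a "marked change" are arbitrary. It is not a method for analysing real scores.

```python
from dataclasses import dataclass


@dataclass
class Bar:
    onsets: int  # hypothetical rhythm measure: notes struck in the bar
    voices: int  # hypothetical texture measure: parts sounding at once


def boundaries(bars: list[Bar], onset_jump: int = 4, voice_jump: int = 2) -> list[int]:
    """Indices of bars where a new section seems to begin (a 'marked change')."""
    found = []
    for i in range(1, len(bars)):
        prev, cur = bars[i - 1], bars[i]
        if (abs(cur.onsets - prev.onsets) >= onset_jump
                or abs(cur.voices - prev.voices) >= voice_jump):
            found.append(i)
    return found


def form_type(bars: list[Bar], **thresholds: int) -> str:
    """'A' if rhythm and texture stay constant, 'A/B' if any boundary is found."""
    return "A/B" if boundaries(bars, **thresholds) else "A"


steady = [Bar(8, 3) for _ in range(8)]
contrast = [Bar(8, 3) for _ in range(4)] + [Bar(2, 1) for _ in range(4)]
print(form_type(steady), form_type(contrast), boundaries(contrast))
```

Small fluctuations (one extra note in a bar) stay below the thresholds and leave the piece reading as an A-form; only a jump such as busy four-part bars giving way to sparse single-line bars registers as a boundary.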

_______

Music versus song:

Early humans did not know music as we do, yet they heard it in the whispering of the wind and the leaves of trees, the singing of birds, the falling of water in a waterfall, and so on. It is hard to tell whether music came first or whether the lyrics of songs, or poetry, were produced first. The sacred chanting of Om in Hinduism and of shlokas in Buddhism sounds remarkably musical without any instrumental accompaniment. Music as various cultures know and practice it today is ancient. It involves producing sounds that are rhythmic and melodious. Whether music is produced on musical instruments (percussion or string) or sung by a person makes no difference, since either way it is rhythmic and has a soothing and relaxing effect on our minds. Many people call a composition music when it is played on instruments, but not a song or poem sung rhythmically by an individual; this distinction does not make sense. Isn’t a lullaby sung by a mother to her child without any accompaniment music? Similarly, tapping fingers or feet on an object to produce a rhythmic sound is also a kind of music.

– Thus, any composition whether or not accompanied by instruments is referred to as music, if it is in rhythm and appears melodious to ears.

– A song is usually referred to as lyrics when it is on paper, but becomes music when sung by an individual. However, any piece of composition, when played on a musical instrument is also music.

– A song is merely poetry when it is rendered as if reading a text without any rhythm, but becomes music when set to a tune and sung accordingly.

_____

Orchestra:

An orchestra is a group of musicians playing instruments together. They usually play classical music. A large orchestra is sometimes called a “symphony orchestra” and a small orchestra is called a “chamber orchestra”. A symphony orchestra may have about 100 players, while a chamber orchestra may have 30 or 40 players. The number of players will depend on what music they are playing and the size of the place where they are playing. The word “orchestra” originally meant the semi-circular space in front of the stage in a Greek theatre, where the singers and musicians performed. Gradually the word came to mean the musicians themselves.

Ensembles:

The word “ensemble” comes from the French word meaning “together” and is a broad concept that encompasses groupings of various constituencies and sizes. Ensembles can be made up of singers alone, instruments alone, singers and instruments together, two performers or hundreds. Ensemble performance is part of virtually every musical tradition. Examples of large ensembles are the symphony orchestra, marching band, jazz band, West Indian steel pan orchestra, Indonesian gamelan, African drum ensembles, chorus, and gospel choir. In such large groups, performers are usually divided into sections, each with its particular material or function. So, for example, all the tenors in a chorus sing the same music, and all the alto saxes in a jazz big band play the same part. Usually a conductor or lead performer is responsible for keeping everyone together.

The large vocal ensemble most familiar to Westerners is the chorus: twenty or more singers grouped in soprano, alto, tenor, and bass sections. The term choir is sometimes used for choruses that sing religious music. There is also literature for choruses composed of men only, women only, or children. Small vocal ensembles, in which there are one to three singers per part, include the chamber chorus and the barbershop quartet. Vocal ensemble music is sometimes intended to be performed a cappella, that is, by voices alone, and sometimes with instruments. Choral numbers are commonly included in operas, oratorios, and musicals.

The most important large instrumental ensemble in the Western tradition is the symphony orchestra. Orchestras such as the New York Philharmonic, Brooklyn Philharmonic, and those of the New York City Opera and Metropolitan Opera, consist of 40 or more players, depending on the requirements of the music they are playing. The players are grouped by family into sections – winds, brass, percussion and strings. Instruments from different sections frequently double each other, one instrument playing the same material as another, although perhaps in different octaves. Thus, while a symphony by Mozart may have parts for three sections, the melody given to the first violins is often identical to that of the flutes and clarinets; the bassoons, cellos and basses may join forces in playing the bass line supporting that melody while the second violins, violas, and French horns are responsible for the pitches that fill out the harmony. The term orchestration refers to the process of designating particular musical material to particular instruments.
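Doubling “in different octaves” works because an octave is a 2:1 frequency ratio, so two instruments an octave apart are heard as playing the same note. A minimal Python sketch, assuming twelve-tone equal temperament with A4 tuned to 440 Hz and MIDI note numbering (A4 = 69), none of which the passage itself specifies; the violin and flute notes are hypothetical:

```python
def frequency(midi_note: int, a4_hz: float = 440.0) -> float:
    """Frequency in Hz of a MIDI note number in 12-tone equal temperament."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


def shift_octaves(midi_note: int, octaves: int) -> int:
    """Move a note by whole octaves (12 semitones each); negative values move down."""
    return midi_note + 12 * octaves


violin_note = 76                            # E5: a hypothetical first-violin melody note
flute_note = shift_octaves(violin_note, 1)  # E6: a flute doubling it an octave higher

print(f"violin {frequency(violin_note):.1f} Hz, flute {frequency(flute_note):.1f} Hz")
print(f"ratio {frequency(flute_note) / frequency(violin_note):.3f}")
```

Because the shift is always a multiple of 12 semitones, the note name stays the same and only the register changes, which is what lets flutes and first violins share one melody.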

_____

Musical improvisation:

Musical improvisation is the creation of spontaneous music, often within (or based on) a pre-existing harmonic framework or chord progression. Improvisation is the act of instantaneous composition by performers, where compositional techniques are employed with or without preparation. Improvisation is a major part of some types of music, such as blues, jazz, and jazz fusion, in which instrumental performers improvise solos, melody lines and accompaniment parts. In the Western art music tradition, improvisation was an important skill during the Baroque era and during the Classical era. In the Baroque era, performers improvised ornaments and basso continuo keyboard players improvised chord voicings based on figured bass notation. In the Classical era, solo performers and singers improvised virtuoso cadenzas during concerts. However, in the 20th and early 21st centuries, as “common practice” Western art music performance became institutionalized in symphony orchestras, opera houses and ballets, improvisation has played a smaller role. At the same time, some modern composers have increasingly included improvisation in their creative work. In Indian classical music, improvisation is a core component and an essential criterion of performances.

_____

Analysis of styles:

Some styles of music place an emphasis on certain of these fundamentals, while others place less emphasis on certain elements. To give one example, while Bebop-era jazz makes use of very complex chords, including altered dominants and challenging chord progressions, with chords changing two or more times per bar and keys changing several times in a tune, funk places most of its emphasis on rhythm and groove, with entire songs based around a vamp on a single chord. While Romantic era classical music from the mid- to late-1800s makes great use of dramatic changes of dynamics, from whispering pianissimo sections to thunderous fortissimo sections, some entire Baroque dance suites for harpsichord from the early 1700s may use a single dynamic. To give another example, while some art music pieces, such as symphonies, are very long, some pop songs are just a few minutes long.

_____

Performance:

Performance is the physical expression of music, which occurs when a song is sung or when a piano piece, electric guitar melody, symphony, drum beat or other musical part is played by musicians. In classical music, a musical work is written in music notation by a composer and then it is performed once the composer is satisfied with its structure and instrumentation. However, as it gets performed, the interpretation of a song or piece can evolve and change. In classical music, instrumental performers, singers or conductors may gradually make changes to the phrasing or tempo of a piece. In popular and traditional music, the performers have a lot more freedom to make changes to the form of a song or piece. As such, in popular and traditional music styles, even when a band plays a cover song, they can make changes to it such as adding a guitar solo or inserting an introduction.

A performance can either be planned out and rehearsed (practiced), which is the norm in classical music, with jazz big bands and in many popular music styles, or improvised over a chord progression (a sequence of chords), which is the norm in small jazz and blues groups. Rehearsals of orchestras, concert bands and choirs are led by a conductor. Rock, blues and jazz bands are usually led by the bandleader. A rehearsal is a structured repetition of a song or piece by the performers until it can be sung and/or played correctly and, if it is a song or piece for more than one musician, until the parts are together from a rhythmic and tuning perspective. Improvisation is the creation of a musical idea, such as a melody or other musical line, on the spot, often based on scales or pre-existing melodic riffs.

Many cultures have strong traditions of solo performance (in which one singer or instrumentalist performs), such as Indian classical music and the Western art-music tradition. Other cultures, such as that of Bali, include strong traditions of group performance. All cultures include a mixture of both, and performance may range from improvised solo playing to highly planned and organised performances such as the modern classical concert, religious processions, classical music festivals or music competitions. Chamber music, which is music for a small ensemble with only a few of each type of instrument, is often seen as more intimate than large symphonic works.

_____

Oral and aural tradition:

Many types of music, such as traditional blues and folk music were not written down in sheet music; instead, they were originally preserved in the memory of performers, and the songs were handed down orally, from one musician or singer to another, or aurally, in which a performer learns a song “by ear”. When the composer of a song or piece is no longer known, this music is often classified as “traditional” or as a “folk song”. Different musical traditions have different attitudes towards how and where to make changes to the original source material, from quite strict, to those that demand improvisation or modification to the music. A culture’s history and stories may also be passed on by ear through song.

_____

Ornamentation:

In music, an “ornament” is a decoration to a melody, bassline or other musical part. The detail included explicitly in the music notation varies between genres and historical periods. In general, art music notation from the 17th through the 19th centuries required performers to have a great deal of contextual knowledge about performing styles. For example, in the 17th and 18th centuries, music notated for solo performers typically indicated a simple, unadorned melody. However, performers were expected to know how to add stylistically appropriate ornaments to add interest to the music, such as trills and turns.

In the 19th century, art music for solo performers may give a general instruction such as to perform the music expressively, without describing in detail how the performer should do this. The performer was expected to know how to use tempo changes, accentuation, and pauses (among other devices) to obtain this “expressive” performance style. In the 20th century, art music notation often became more explicit and used a range of markings and annotations to indicate to performers how they should play or sing the piece.

______

The experience of music:

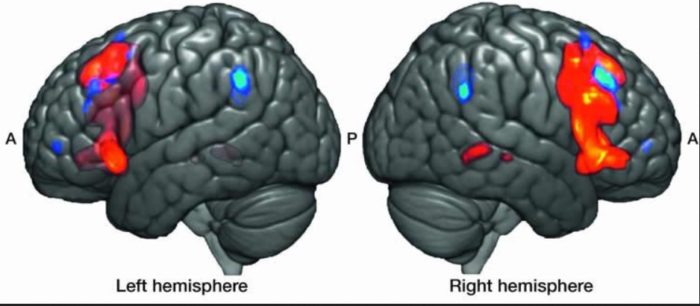

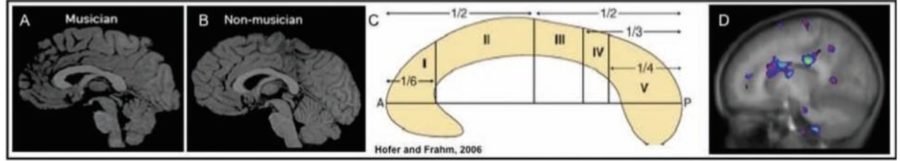

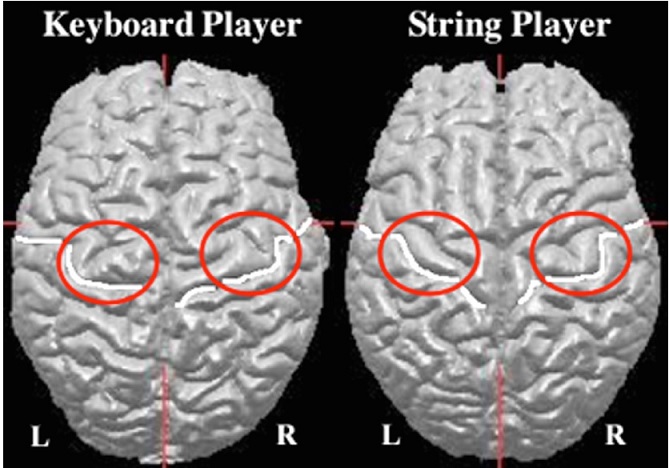

A highly significant finding to emerge from the studies of the effects in the brain of listening to music is the emphasis on the importance of the right (non-dominant) hemisphere. Thus, lesions following cerebral damage lead to impairments of appreciation of pitch, timbre and rhythm (Stewart et al, 2006) and studies using brain imaging have shown that the right hemisphere is preferentially activated when listening to music in relation to the emotional experience, and that even imagining music activates areas on this side of the brain (Blood et al, 1999). This should not be taken to imply that there is a simple left–right dichotomy of functions in the human brain. However, it is the case that traditional neurology has to a large extent ignored the talents of the non-dominant hemisphere, much in favour of the dominant (normally left) hemisphere. In part this stems from an overemphasis on the role of the latter in propositional language and a lack of interest in the emotional intonations of speech (prosody) that give so much meaning to expression.

The link between music and emotion seems to have been accepted for all time. Plato considered that music played in different modes would arouse different emotions, and as a generality most of us would agree on the emotional significance of any particular piece of music, whether it be happy or sad; for example, major chords are perceived to be cheerful, minor ones sad. The tempo or movement in time is another component of this, slower music seeming less joyful than faster rhythms. This reminds us that the word ‘motion’ is itself part of ‘emotion’, and that in the dance we are moving, just as we are moved emotionally by music.

Until recently, musical theorists had largely concerned themselves with the grammar and syntax of music rather than with the affective experiences that arise in response to music. Music, if it does anything, arouses feelings and associated physiological responses, and these can now be measured. For the ordinary listener, however, there may be no necessary relationship between the emotion and the form and content of the musical work, since ‘the real stimulus is not the progressive unfolding of the musical structure but the subjective content of the listener’s mind’ (Langer, 1951, p. 258). Such a phenomenological approach directly contradicts the empirical techniques of so much current neuroscience in this area, yet it is of direct concern to psychiatry and to topics such as compositional creativity.

If it is a language, music is a language of feeling. Musical rhythms are life rhythms, and music, with its tensions and resolutions, crescendos and diminuendos, major and minor keys, delays and silent interludes, and its temporal unfolding of events, does not present us with a logical language; rather, to quote Langer again, it ‘reveals the nature of feelings with a detail and truth that language cannot approach’ (Langer, 1951, p. 199, original emphasis). The idea that there can be knowledge without words seems difficult for a philosophical mind to accept. Indeed, the problem of describing a ‘language’ of feeling permeates philosophy and neuroscience research alike, and highlights the relative futility of trying to classify our emotions: ‘Music is revealing, where words are obscuring’ (Langer, 1951, p. 206).

______

Understanding Music:

Animals can hear music in a sense: your dog might be frightened by the loud noise coming from your stereo. But we do not merely hear music in this way; we listen to it with understanding. What constitutes this experience of understanding music? To use an analogy, while the mere sound of a piece of music might be represented by a sonogram, our experience of it as music is better represented by something like a marked-up score. We hear individual notes that make up distinct melodies, harmonies, rhythms, sections, and so on, and the interaction between these elements. Such musical understanding comes in degrees along a number of dimensions. Your understanding of a given piece or style may be deeper than mine, while the reverse is true for another piece or style. I may hear more in a particular piece than you do, but my understanding of it may be inaccurate. My general musical understanding may be narrow, in the sense that I only understand one kind of music, while you understand many different kinds. Moreover, different pieces or kinds of pieces may call on different abilities, since some music has no harmony to speak of, some no melody, and so on. Many argue that, in addition to purely musical features, understanding the emotions expressed in a piece is essential to adequately understanding it.

Though one must have recourse to technical terms, such as “melody”, “dominant seventh”, “sonata form”, and so on, in order to describe specific musical experiences and the musical experience in general, it is widely agreed that one need not possess these concepts explicitly, nor the correlative vocabulary, in order to listen with understanding. However, it is also widely acknowledged that such explicit theoretical knowledge can aid deeper musical understanding and is requisite for the description and understanding of one’s own musical experience and that of others.

At the base of the musical experience seem to be (i) the experience of tones, as opposed to mere pitched sounds, where a tone is heard as being in “musical space”, that is, as bearing relations to other tones such as being higher or lower, or of the same kind (at the octave), and (ii) the experience of movement, as when we hear a melody as wandering far afield and then coming to rest where it began. Roger Scruton argues that these experiences are irreducibly metaphorical, since they involve the application of spatial concepts to that which is not literally spatial. (There is no identifiable individual that moves from place to place in a melody.) Malcolm Budd (1985b) argues that to appeal to metaphor in this context is unilluminating since, first, it is unclear what it means for an experience to be metaphorical and, second, a metaphor is only given meaning through its interpretation, which Scruton not only fails to give, but argues is unavailable. Budd suggests that the metaphor is reducible, and thus eliminable, apparently in terms of purely musical (i.e., non-spatial) concepts or vocabulary. Stephen Davies doubts that the spatial vocabulary can be eliminated, but he is sympathetic to Budd’s rejection of the centrality of metaphor. Instead, he argues that our use of spatial and motion terms to describe music is a secondary, but literal, use of those terms, one that is widely used to describe temporal processes, such as the ups and downs of the stock market, the theoretical position one occupies, one’s spirits plunging, and so on. The debate continues.
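The octave relation mentioned above has a simple acoustic counterpart: two tones an octave apart have frequencies in a 2:1 ratio, which is part of why we hear them as being “of the same kind”. The Python sketch below illustrates this by mapping a frequency to a note name while discarding the octave. It assumes twelve-tone equal temperament with A4 tuned to 440 Hz (neither assumption is stated in the text above), and the function names `pitch_class` and `same_pitch_class` are hypothetical, chosen only for illustration.

```python
import math

# Note names of one octave in twelve-tone equal temperament (an assumption).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def pitch_class(freq_hz: float, a4_hz: float = 440.0) -> str:
    """Return the nearest note name for a frequency, ignoring which octave it is in.

    Hypothetical helper: assumes equal temperament with A4 = a4_hz.
    """
    # Twelve semitones make one octave, i.e. a doubling of frequency,
    # so 12 * log2(ratio) counts semitones above (or below) A4.
    semitones_from_a4 = round(12 * math.log2(freq_hz / a4_hz))
    # "A" sits at index 9; taking the result modulo 12 discards the octave.
    return NOTE_NAMES[(9 + semitones_from_a4) % 12]


def same_pitch_class(f1: float, f2: float) -> bool:
    """True if two frequencies are heard as the same note in different octaves."""
    return pitch_class(f1) == pitch_class(f2)


if __name__ == "__main__":
    for f in (220.0, 440.0, 880.0, 261.63):
        print(f, pitch_class(f))
    print("880 Hz / 440 Hz =", 880.0 / 440.0)
```

Under these assumptions, 220 Hz, 440 Hz and 880 Hz all map to A, while 261.63 Hz (middle C) maps to C. Rounding to the nearest semitone is a simplification: real performances and other tuning systems deviate from equal temperament.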

Davies is surely right about the ubiquity of the application of the language of space and motion to processes that lack individuals located in space. The appeal to secondary literal meanings, however, can seem as unsatisfying as the appeal to irreducible metaphor. We do not hear music simply as a temporal process, it might be objected, but as moving in the primary sense of the word, though we know that it does not literally so move. Andrew Kania (2015) develops a position out of this intuition by emphasizing Scruton’s appeal to imagination while dropping the appeal to metaphor, arguing that hearing the music as moving is a matter of imagining that its constituent sounds move.

______

Musical aptitude:

Musical aptitude refers to a person’s innate ability to acquire skills and knowledge required for musical activity, and may influence the speed at which learning can take place and the level that may be achieved. Study in this area focuses on whether aptitude can be broken into subsets or represented as a single construct, whether aptitude can be measured prior to significant achievement, whether high aptitude can predict achievement, to what extent aptitude is inherited, and what implications questions of aptitude have on educational principles. It is an issue closely related to that of intelligence and IQ, and was pioneered by the work of Carl Seashore. While early tests of aptitude, such as Seashore’s The Measurement of Musical Talent, sought to measure innate musical talent through discrimination tests of pitch, interval, rhythm, consonance, memory, etc., later research found these approaches to have little predictive power and to be influenced greatly by the test-taker’s mood, motivation, confidence, fatigue, and boredom when taking the test.

______

How to enjoy music:

- By listening

People can enjoy music by listening to it. They can go to concerts to hear musicians perform. Classical music is usually performed in concert halls, but sometimes huge festivals are organized in which it is performed outside, in a field or stadium, like pop festivals. People can listen to music on CDs, computers, iPods, television, the radio, cassette and record players, and even mobile phones. There is so much music today, in elevators, shopping malls, and stores, that it often becomes a background sound that we do not really hear.

- By playing or singing

People can learn to play an instrument. Probably the most common instruments for complete beginners are the piano or keyboard, the guitar, and the recorder (which is certainly the cheapest to buy). After they have learnt to play scales and simple tunes and to read the simplest musical notation, they can think about which instrument to pursue further. They should choose an instrument that is practical for their size. For example, a very short child cannot play a full-size double bass, because the double bass is over five feet high. People should choose an instrument that they enjoy playing, because playing regularly is the only way to get better. Finally, it helps to have a good teacher.

- By composing

Anyone can make up his or her own pieces of music. It is not difficult to compose simple songs or melodies (tunes). It’s easier for people who can play an instrument themselves. All it takes is experimenting with the sounds that an instrument makes. Someone can make up a piece that tells a story, or just find a nice tune and think about ways it can be changed each time it is repeated. The instrument might be someone’s own voice.

_____

Are you passionate about music?

Here are some signs through which you can understand if you’re passionate about music:

- You never miss a concert

- You love being part of a band

- You follow music communities

- You have a collection of records

- You have a song for every situation and every word that anyone says

- You learn music as a hobby

- Music becomes your profession

- You mostly give gifts related to music

- Music can change your mood

- You attach memories to music

____

History and philosophy of music:

_

History of Music in the West:

There are many theories about when and where music originated. Some historiographers believe that music existed even before humans came into existence. Western music history is commonly divided into six eras, each defined by a change in musical style, and these changes have shaped the music we listen to today.

The first era was the medieval era, or Middle Ages. This age marks the beginning of polyphony and musical notation, and both monophonic and polyphonic music were popular. In this era, the newly established Christian churches founded universities that dictated the destiny of music. This was the time when the music called Gregorian chant was collected and codified. The era also produced a new type of music called organum. Guillaume de Machaut, one of the great names in music, appeared in this era.

This era was followed by the Renaissance. The word renaissance literally means rebirth. This era lasted from ca. 1420 to 1600. At this time, sacred music broke out of the walls of the church and began to spread to schools, where music also started to be composed. Dance music and instrumental music were performed in abundance. The English madrigal also flourished in the late Renaissance; madrigals were composed by masters such as William Byrd, John Dowland, Thomas Morley and many others.

After this came the Baroque age, which began ca. 1600 and ended in 1750. The word baroque is derived from the Italian word barocco, which means bizarre, weird or strange. This age is characterized by experimentation in music. Instrumental music and opera started to develop in this age. Johann Sebastian Bach was one of the greatest composers of this period.

It was followed by the Classical age, which began roughly in 1750 and ended in 1820. The style shifted from the heavily ornamented music of the Baroque age to simpler melodies. The piano became the primary instrument of composers. The Austrian capital Vienna became the musical center of Europe, and composers came from all over Europe to study music there. The music composed in this era is now referred to as the Viennese style.

Then came the Romantic era, which began in 1820 and ended in 1900. Composers in this era infused their music with deep emotion and used it to express their own feelings. The late nineteenth century was characterized by the Late Romantic composers. As the century turned, so did the music: twentieth-century music is characterized by many innovations. New types of music were created, and new technologies were developed that changed how music was produced and heard.

_

Classicism:

Wolfgang Amadeus Mozart (seated at the keyboard in the figure above) was a child prodigy virtuoso performer on the piano and violin. Even before he became a celebrated composer, he was widely known as a gifted performer and improviser.

The music of the Classical period (1730 to 1820) aimed to imitate what were seen as the key elements of the art and philosophy of Ancient Greece and Rome: the ideals of balance, proportion and disciplined expression. (Note: the music from the Classical period should not be confused with Classical music in general, a term which refers to Western art music from the 5th century to the 2000s, which includes the Classical period as one of a number of periods.) Music from the Classical period has a lighter, clearer and considerably simpler texture than the Baroque music which preceded it. The main style was homophony, where a prominent melody and a subordinate chordal accompaniment part are clearly distinct. Classical instrumental melodies tended to be almost voicelike and singable. New genres were developed, and the fortepiano, the forerunner to the modern piano, replaced the Baroque era harpsichord and pipe organ as the main keyboard instrument. The best-known composers of Classicism are Carl Philipp Emanuel Bach, Christoph Willibald Gluck, Johann Christian Bach, Joseph Haydn, Wolfgang Amadeus Mozart, Ludwig van Beethoven and Franz Schubert. Beethoven and Schubert are also regarded as transitional composers, whose later works mark the Classical era's move towards Romanticism.

_

20th and 21st century music:

In the 19th century, one of the key ways that new compositions became known to the public was through the sale of sheet music, which middle-class amateur music lovers would perform at home on their piano or other common instruments, such as the violin. In the 20th century, new electric technologies such as radio broadcasting and the mass-market availability of gramophone records meant that sound recordings of songs and pieces (heard either on the radio or on a record player) became the main way to learn about new music. Music listening increased vastly as radio gained popularity and phonographs were used to replay and distribute music. In the 19th century, the reliance on sheet music had restricted access to new music to middle- and upper-class people who could read music and who owned pianos and other instruments; in the 20th century, anyone with a radio or record player could hear operas, symphonies and big bands in their own living room. This allowed lower-income people, who could never have afforded an opera or symphony concert ticket, to hear this music. It also meant that people could hear music from different parts of the country, or even different parts of the world, even if they could not afford to travel there. This helped to spread musical styles.