Dr Rajiv Desai

An Educational Blog

ARTIFICIAL INTELLIGENCE (AI)

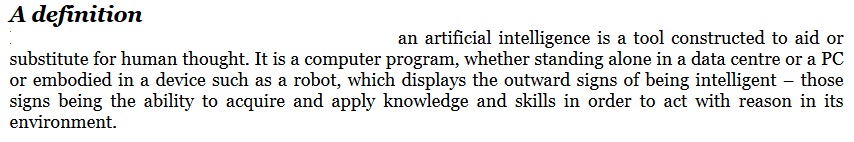

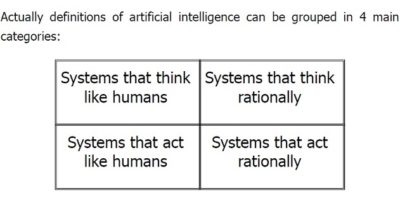

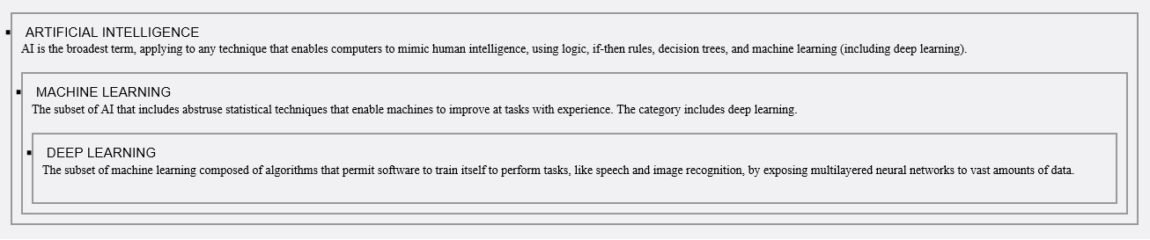

Artificial Intelligence (AI):

_____

______

Prologue:

Mention Artificial Intelligence (AI) and most people are immediately transported into a distant future inspired by popular science fiction such as Terminator and HAL 9000. While these two artificial entities do not exist, AI algorithms have been able to address many real issues, from performing medical diagnoses to navigating difficult terrain to monitoring spacecraft for possible failures. Jean Piaget remarked, “Intelligence is what you use when you don’t know what to do, when neither innateness nor learning has prepared you for the particular situation.” A 1969 McKinsey article claimed that computers were so dumb that they were not capable of making any decisions and that it was human intelligence that drove the dumb machine. Alas, this claim has become a bit of a “joke” over the years, as modern computers are gradually replacing skilled practitioners in many fields, such as architecture, medicine, geology, and education. Artificial Intelligence seeks to create computers or machines as intelligent as human beings. Michael A. Arbib advanced the notion that the brain is not a computer in the recent technological sense, but that we can learn much about brains from studying machines, and much about machines from studying brains. While AI seems like a futuristic concept, it’s actually something that many people use daily, although 63 percent of users don’t realize they’re using it. We use artificial intelligence all day, every day. Siri, Google Now, and Cortana are obvious examples of artificial intelligence, but AI is actually all around us. It can be found in vacuum cleaners, cars, lawnmowers, video games, Hollywood special effects, e-commerce software, medical research and international finance markets, among many other examples.
John McCarthy, who coined the term “Artificial Intelligence” in 1956, famously quipped: “As soon as it works, no-one calls it AI anymore.” While science fiction often portrays AI as robots with human-like characteristics, AI can encompass anything from Google’s search algorithms to IBM’s Watson to autonomous weapons. In many unforeseen ways, AI is helping to improve our lives and make them more efficient, though the opposite outcome, a degeneration of human economic and cultural structures, is also a real possibility. The Future of Life Institute’s tagline sums it up succinctly: “Technology is giving life the potential to flourish like never before…or to self-destruct.” Humans are the creators, but will we always have control of our revolutionary inventions? Scientists reckon there have been at least five mass extinction events in the history of our planet, when a catastrophically high number of species were wiped out in a relatively short period of time. Global warming, nuclear holocaust and artificial intelligence are debated as possible causes of a sixth mass extinction, in which the human species could be driven extinct by its own activities!

_____

Synonyms and abbreviations:

CPU = central processing unit

GPU = graphics processing unit

RAM = random access memory

ANI = artificial narrow intelligence = weak AI

AGI = artificial general intelligence = strong AI

CI = computational intelligence

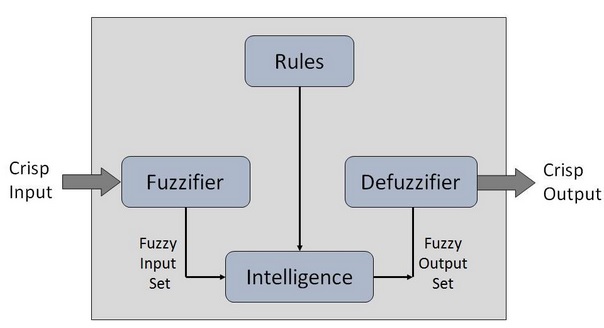

FLS = Fuzzy Logic Systems

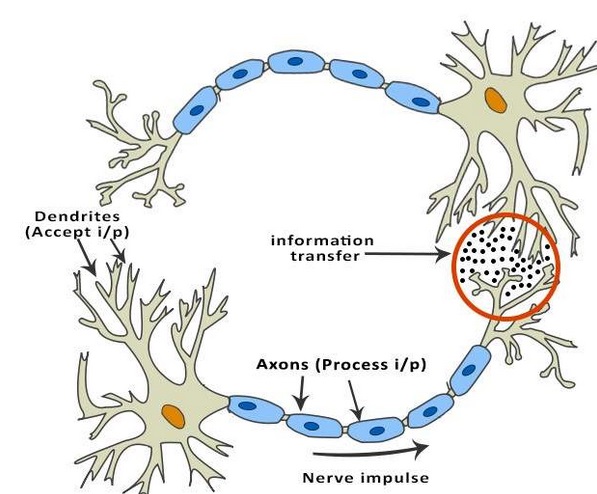

ANN = Artificial neural network

EA = evolutionary algorithms

GA = genetic algorithm

ES = expert system

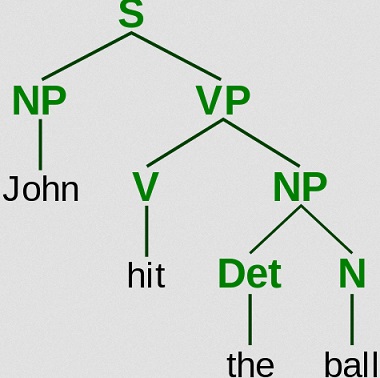

NLP = natural language processing

ML = machine learning

API = application programming interface

RPA = remotely piloted aircraft

___

Artificial Intelligence Terminology:

Here is a list of frequently used terms in the domain of AI:

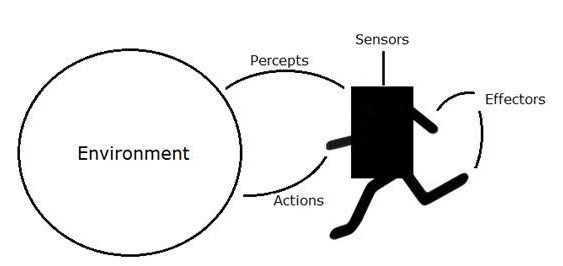

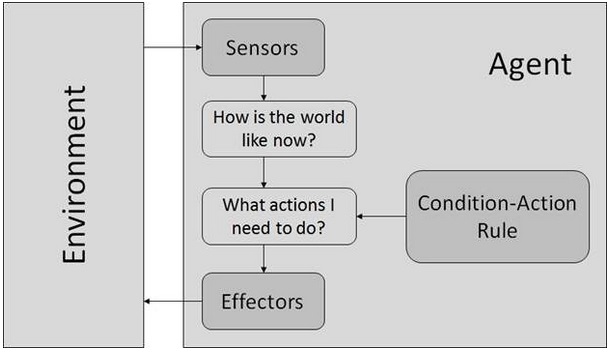

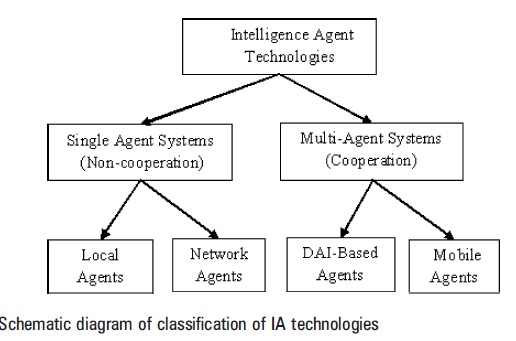

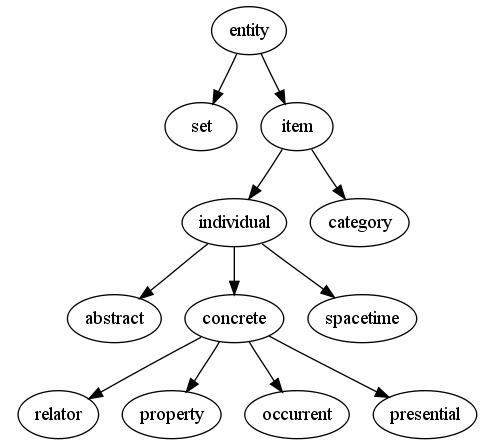

Agent:

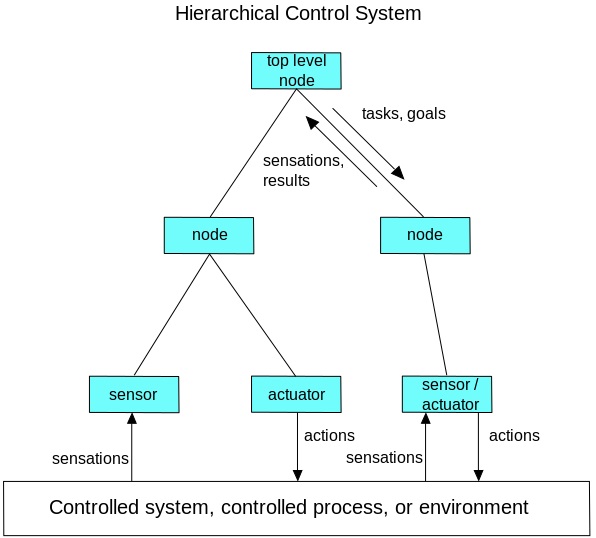

Agents are systems or software programs capable of autonomous, purposeful reasoning directed towards one or more goals. They are also called assistants, brokers, bots, droids, intelligent agents, and software agents.

Environment:

It is the part of the real or computational world inhabited by the agent.

Autonomous Robot:

A robot free from external control or influence and able to control itself independently.

Heuristics:

It is knowledge based on trial and error, evaluation, and experimentation.

Knowledge Engineering:

Acquiring knowledge from human experts and other resources.

Percepts:

It is the format in which the agent obtains information about the environment.
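The agent, environment, percept and task entries fit together in a simple loop. The Python sketch below is a hypothetical illustration (the two-square “vacuum world” and every name in it are assumptions made for this example): a simple reflex agent maps each percept directly to an action, and its actions change the environment until the task, leaving both squares clean, is done.

```python
def reflex_vacuum_agent(percept):
    """Simple reflex agent: map the current percept straight to an action."""
    location, status = percept
    if status == "dirty":
        return "suck"
    return "right" if location == "A" else "left"


def run_vacuum_world(environment, location="A", steps=4):
    """Run the agent for a fixed number of steps in a two-square world {"A": ..., "B": ...}."""
    actions = []
    for _ in range(steps):
        percept = (location, environment[location])  # what the agent senses
        action = reflex_vacuum_agent(percept)
        actions.append(action)
        if action == "suck":
            environment[location] = "clean"  # the action changes the environment
        elif action == "right":
            location = "B"
        else:
            location = "A"
    return actions


world = {"A": "dirty", "B": "dirty"}
print(run_vacuum_world(world))  # ['suck', 'right', 'suck', 'left']
print(world)                    # {'A': 'clean', 'B': 'clean'}
```

A reflex agent like this has no memory; it reacts only to the current percept, which is enough for this tiny environment but not for most real ones.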

Pruning:

Eliminating unnecessary and irrelevant considerations in AI systems, for example by cutting off branches of a search tree that cannot affect the result.
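Pruning is easiest to see in game-tree search. The Python sketch below uses one well-known form, minimax with alpha-beta pruning, over a hypothetical toy tree in which leaves are scores and inner lists are positions; the `visited` list exists only to record which leaves were actually examined.

```python
import math


def alphabeta(node, alpha, beta, maximizing, visited):
    """Minimax value of `node` with alpha-beta pruning.

    Leaves are numbers (scores); internal nodes are lists of child nodes.
    Every leaf that is actually evaluated is appended to `visited`.
    """
    if not isinstance(node, list):
        visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # prune: the minimizing player will never allow this line
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, value)
        if alpha >= beta:
            break  # prune: the maximizing player already has something better
    return value


tree = [[3, 5], [2, 9], [0, 1]]  # root is a max node; its children are min nodes
visited = []
print(alphabeta(tree, -math.inf, math.inf, True, visited))  # 3
print(visited)  # [3, 5, 2, 0]
```

The answer is the same as plain minimax would give, but the leaves 9 and 1 are never examined: once the second and third branches are known to be worse than 3, the rest of each branch is irrelevant.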

Rule:

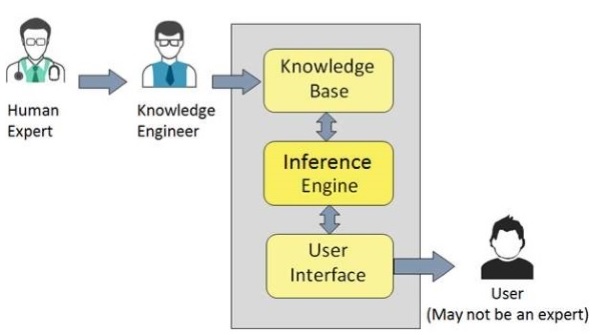

It is a format for representing the knowledge base in an expert system. It takes the form IF-THEN-ELSE.
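As a rough illustration of how an expert system uses such rules, here is a minimal forward-chaining sketch in Python. The rule format, the function name and the bird/penguin knowledge base are all hypothetical; only the IF-THEN part is modelled, and an ELSE branch could be written as a second rule with the opposite condition.

```python
def forward_chain(facts, rules):
    """Repeatedly fire IF-THEN rules until no new facts can be derived.

    Each rule is a pair (conditions, conclusion): IF all conditions are known,
    THEN the conclusion is added to the known facts.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)  # the THEN part asserts a new fact
                changed = True
    return known


# Hypothetical toy knowledge base.
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]

derived = forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)
print("is_penguin" in derived)  # True
```

Note that the second rule only fires after the first has added `is_bird`, which is why the loop keeps running until a full pass derives nothing new.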

Shell:

A shell is software that helps in designing the inference engine, knowledge base, and user interface of an expert system.

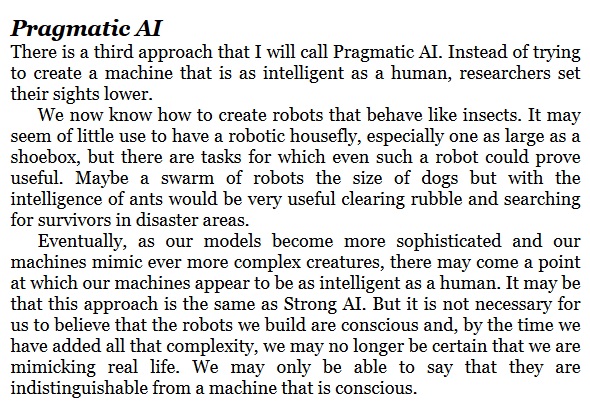

Task:

It is the goal the agent tries to accomplish.

Turing Test:

A test developed by Alan Turing to assess the intelligence of a machine as compared to human intelligence.

Existential threat:

A force capable of completely obviating human existence.

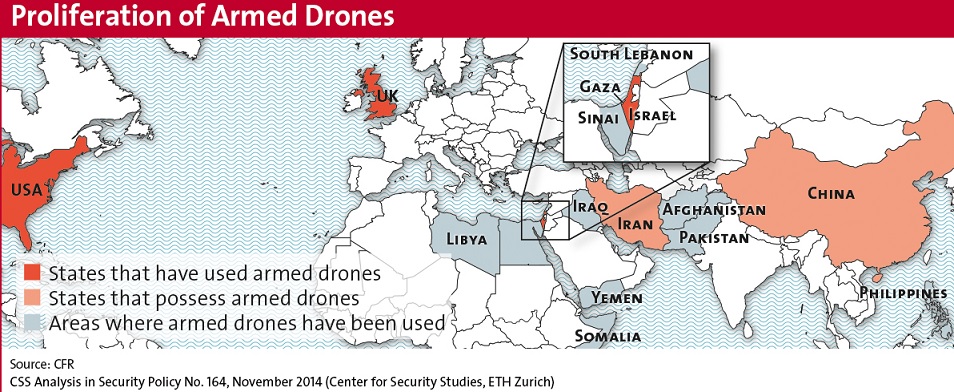

Autonomous weapons:

The proverbial killer A.I., autonomous weapons would use artificial intelligence rather than human intelligence to select their targets.

Machine learning:

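Unlike conventional computer programs, whose behavior is fixed by explicit instructions, machine-learning algorithms modify themselves, typically by adjusting internal parameters based on data, to better perform their assigned tasks.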
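One of the simplest learning algorithms, the perceptron rule, shows what “modifying themselves” can mean in practice. In this Python sketch (function names and the logical-AND training data are illustrative choices), the program starts with zero weights and adjusts them only when it makes a wrong prediction, until it classifies every example correctly.

```python
def predict(weights, bias, inputs):
    """Linear threshold unit: output 1 if the weighted sum exceeds 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule on (inputs, label) pairs with labels 0 or 1."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            error = label - predict(weights, bias, inputs)  # 0 when the prediction is right
            # The parameters change only when the prediction was wrong.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

The perceptron rule is guaranteed to converge only when the classes can be separated by a straight line (as with AND); it cannot learn XOR, for example.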

Alignment problem:

A situation in which the methods artificial intelligences use to complete their tasks fail to correspond to the needs of the humans who created them.

Singularity:

Although the term has been used broadly, the singularity typically describes the moment at which computers become so adept at modifying their own programming that they transcend current human intellect.

Superintelligence:

Superintelligence would exceed current human mental capacities in virtually every way and be capable of transformative cognitive feats.

_______

_______

Consider the following 3 practical examples:

A computer system allows a severely handicapped person to type merely by moving their eyes. The device follows eye movements that briefly fix on letters of the alphabet, then types those letters at a rate that has allowed some volunteers to reach 18 words per minute after practice. A similar computer system for the handicapped is installed on mechanized wheelchairs. It allows paralyzed people to “order” their wheelchairs wherever they want to go merely by voice commands. A couple in London reportedly has adapted a home computer to act as a nanny for their baby. The baby’s father, a computer consultant, programmed the computer to respond the instant baby Gemma cries by talking to her in a soothing tone, using the parents’ voices. The surrogate nanny will also tell bedtime stories and teach the baby three languages as she begins to talk. These are just a few examples of Artificial Intelligence (AI), in which a set of instructions, or programs, is fed into a computer, enabling it to solve problems on its own, the way a human does. AI is now used in many fields such as medical diagnosis, robot control, computer games, and flying airplanes.

_


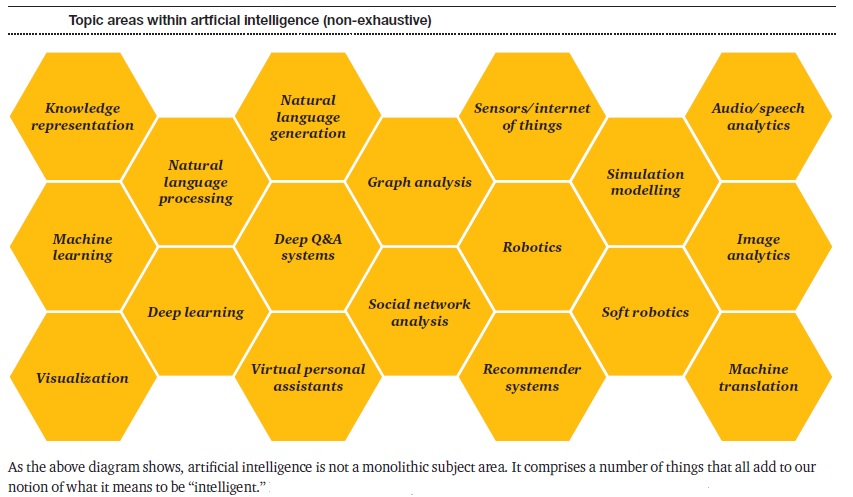

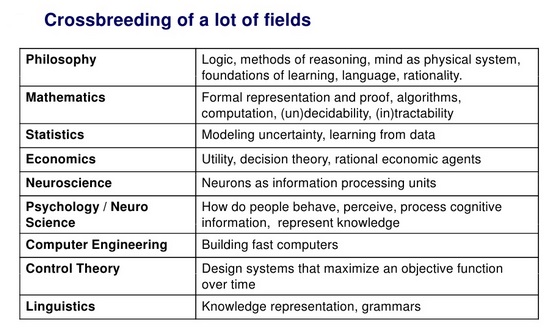

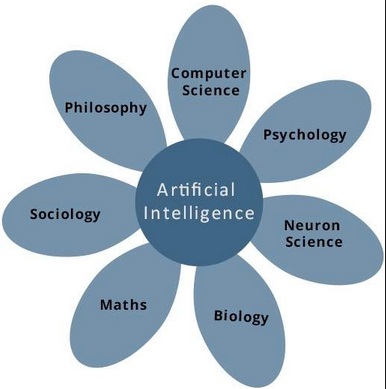

Besides computer science, AI is linked with many fields and disciplines:

______

______

History of AI:

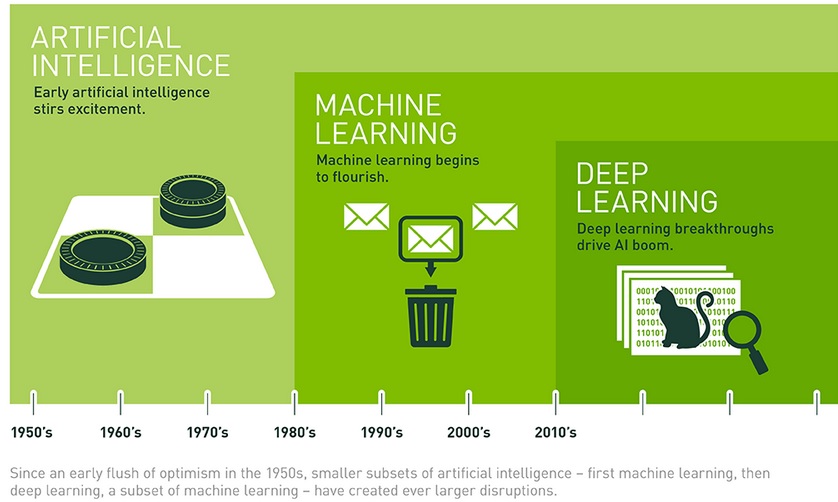

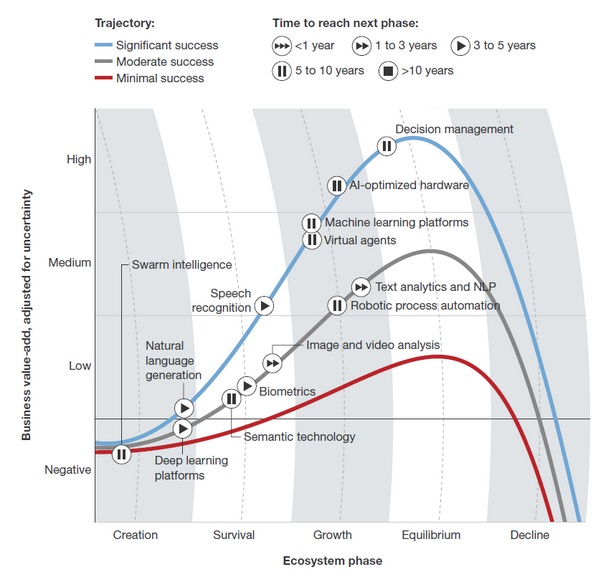

The study of mechanical or “formal” reasoning began with philosophers and mathematicians in antiquity. In the 19th century, George Boole refined those ideas into propositional logic and Gottlob Frege developed a notational system for mechanical reasoning (a “predicate calculus”). Around the 1940s, Alan Turing’s theory of computation suggested that a machine, by shuffling symbols as simple as “0” and “1”, could simulate any conceivable act of mathematical deduction. This insight, that digital computers can simulate any process of formal reasoning, is known as the Church–Turing thesis. Along with concurrent discoveries in neurology, information theory and cybernetics, this led researchers to consider the possibility of building an electronic brain. The first work that is now generally recognized as AI was McCulloch and Pitts’ 1943 formal design for Turing-complete “artificial neurons”.

The field of AI research was founded at a conference at Dartmouth College in 1956. The attendees, including John McCarthy, Marvin Minsky, Allen Newell, Arthur Samuel and Herbert Simon, became the leaders of AI research. They and their students wrote programs that most people found astonishing: computers were winning at checkers, solving word problems in algebra, proving logical theorems and speaking English. By the middle of the 1960s, research in the U.S. was heavily funded by the Department of Defense and laboratories had been established around the world. AI’s founders were optimistic about the future: Herbert Simon predicted, “machines will be capable, within twenty years, of doing any work a man can do.” Marvin Minsky agreed, writing, “within a generation … the problem of creating ‘artificial intelligence’ will substantially be solved.” They failed to recognize the difficulty of some of the remaining tasks. Progress slowed, and in 1974, in response to the criticism of Sir James Lighthill and ongoing pressure from the US Congress to fund more productive projects, both the U.S. and British governments cut off exploratory research in AI. The next few years would later be called an “AI winter”, a period when funding for AI projects was hard to find.

In the early 1980s, AI research was revived by the commercial success of expert systems, a form of AI program that simulated the knowledge and analytical skills of human experts. By 1985 the market for AI had reached over a billion dollars. At the same time, Japan’s fifth generation computer project inspired the U.S. and British governments to restore funding for academic research. However, beginning with the collapse of the Lisp Machine market in 1987, AI once again fell into disrepute, and a second, longer-lasting hiatus began.

In the late 1990s and early 21st century, AI began to be used for logistics, data mining, medical diagnosis and other areas. The success was due to increasing computational power, greater emphasis on solving specific problems, new ties between AI and other fields and a commitment by researchers to mathematical methods and scientific standards. Deep Blue became the first computer chess-playing system to beat a reigning world chess champion, Garry Kasparov, on 11 May 1997. Advanced statistical techniques (loosely known as deep learning), access to large amounts of data and faster computers enabled advances in machine learning and perception. By the mid-2010s, machine learning applications were used throughout the world. In a 2011 Jeopardy! quiz show exhibition match, IBM’s question answering system, Watson, defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin. The Kinect, which provides a 3D body–motion interface for the Xbox 360 and the Xbox One, uses algorithms that emerged from lengthy AI research, as do intelligent personal assistants in smartphones.
In March 2016, AlphaGo won 4 out of 5 games of Go in a match with Go champion Lee Sedol, becoming the first computer Go-playing system to beat a 9-dan professional Go player without handicaps. According to Bloomberg’s Jack Clark, 2015 was a landmark year for artificial intelligence, with the number of software projects that use AI within Google increasing from “sporadic usage” in 2012 to more than 2,700 projects. Clark also presents data indicating that error rates in image processing tasks have fallen significantly since 2011. He attributes this to an increase in affordable neural networks, due to a rise in cloud computing infrastructure, and to an increase in research tools and datasets. Other cited examples include Microsoft’s development of a Skype system that can automatically translate from one language to another and Facebook’s system that can describe images to blind people.

__

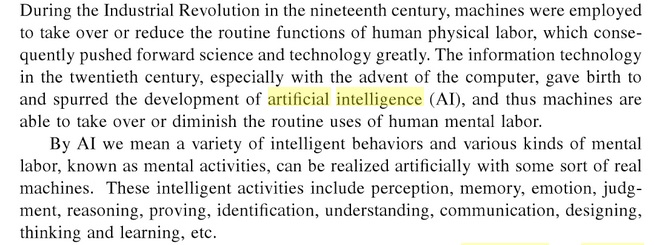

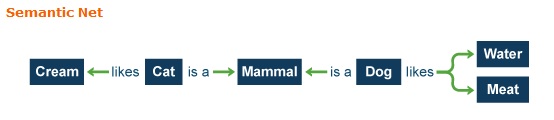

Although the formalization of science and mathematics in the eighteenth, nineteenth, and early twentieth centuries created the intellectual prerequisite for the study of artificial intelligence, it was not until the mid-twentieth century and the introduction of the digital computer that AI became a viable scientific discipline. By the end of the 1940s electronic digital computers had demonstrated their potential to provide the memory and processing power required by intelligent programs. It was now possible to implement formal reasoning systems on a computer and empirically test their sufficiency for exhibiting intelligence. An essential component of the science of artificial intelligence is this commitment to digital computers as the vehicle of choice for creating and testing theories of intelligence. Digital computers are not merely a vehicle for testing theories of intelligence. Their architecture also suggests a specific paradigm for such theories: intelligence is a form of information processing. The notion of search as a problem-solving methodology, for example, owes more to the sequential nature of computer operation than it does to any biological model of intelligence. Most AI programs represent knowledge in some formal language that is then manipulated by algorithms, honoring the separation of data and program fundamental to the von Neumann style of computing. Formal logic has emerged as an important representational tool for AI research, just as graph theory plays an indispensable role in the analysis of problem spaces as well as providing a basis for semantic networks and similar models of semantic meaning. We often forget that the tools we create for our own purposes tend to shape our conception of the world through their structure and limitations. Although seemingly restrictive, this interaction is an essential aspect of the evolution of human knowledge: a tool (and scientific theories are ultimately only tools) is developed to solve a particular problem. As it is used and refined, the tool itself seems to suggest other applications, leading to new questions and, ultimately, the development of new tools.
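Search over a problem space, treated as a graph of states connected by moves, is the idea this paragraph attributes to the sequential nature of computers. The Python sketch below shows one standard form, breadth-first search, over a small hypothetical state space (the state names and the `SPACE` graph are invented for illustration); it expands states one at a time and returns a path with the fewest moves.

```python
from collections import deque


def breadth_first_search(graph, start, goal):
    """Return a path with the fewest moves from start to goal, or None."""
    frontier = deque([start])  # states waiting to be expanded, oldest first
    parent = {start: None}     # how each state was reached (also marks it as seen)
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:  # walk back through the parents
                path.append(state)
                state = parent[state]
            return path[::-1]
        for successor in graph.get(state, []):
            if successor not in parent:
                parent[successor] = state
                frontier.append(successor)
    return None


# Hypothetical problem space: each state lists the states one move away.
SPACE = {"S": ["A", "B"], "A": ["C"], "B": ["C", "G"], "C": ["G"]}
print(breadth_first_search(SPACE, "S", "G"))  # ['S', 'B', 'G']
```

Knowledge (the `SPACE` data) is kept separate from the algorithm that manipulates it, the same data/program separation the paragraph describes.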

__

Here is a synopsis of the history of AI during the 20th century:

| Year | Milestone / Innovation |

| 1923 | Karel Čapek play named “Rossum’s Universal Robots” (RUR) opens in London, first use of the word “robot” in English. |

| 1943 | Foundations for neural networks laid. |

| 1945 | Isaac Asimov, a Columbia University alumni, coined the term Robotics. |

| 1950 | Alan Turing introduced Turing Test for evaluation of intelligence and published Computing Machinery and Intelligence. Claude Shannon published Detailed Analysis of Chess Playing as a search. |

| 1956 | John McCarthy coined the term Artificial Intelligence. Demonstration of the first running AI program at Carnegie Mellon University. |

| 1958 | John McCarthy invents LISP programming language for AI. |

| 1964 | Danny Bobrow’s dissertation at MIT showed that computers can understand natural language well enough to solve algebra word problems correctly. |

| 1965 | Joseph Weizenbaum at MIT built ELIZA, an interactive problem that carries on a dialogue in English. |

| 1969 | Scientists at Stanford Research Institute Developed Shakey, a robot, equipped with locomotion, perception, and problem solving. |

| 1973 | The Assembly Robotics group at Edinburgh University built Freddy, the Famous Scottish Robot, capable of using vision to locate and assemble models. |

| 1979 | The first computer-controlled autonomous vehicle, Stanford Cart, was built. |

| 1985 | Harold Cohen created and demonstrated the drawing program, Aaron. |

| 1990 | Major advances in all areas of AI −

|

| 1997 | The Deep Blue Chess Program beats the then world chess champion, Garry Kasparov. |

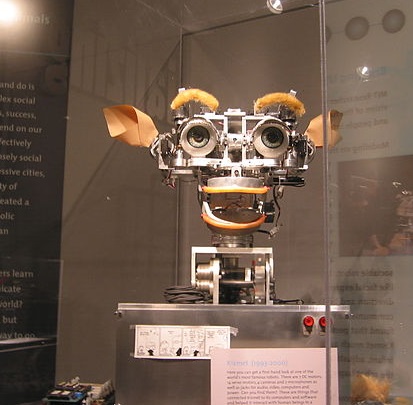

| 2000 | Interactive robot pets become commercially available. MIT displays Kismet, a robot with a face that expresses emotions. The robot Nomad explores remote regions of Antarctica and locates meteorites. |

______

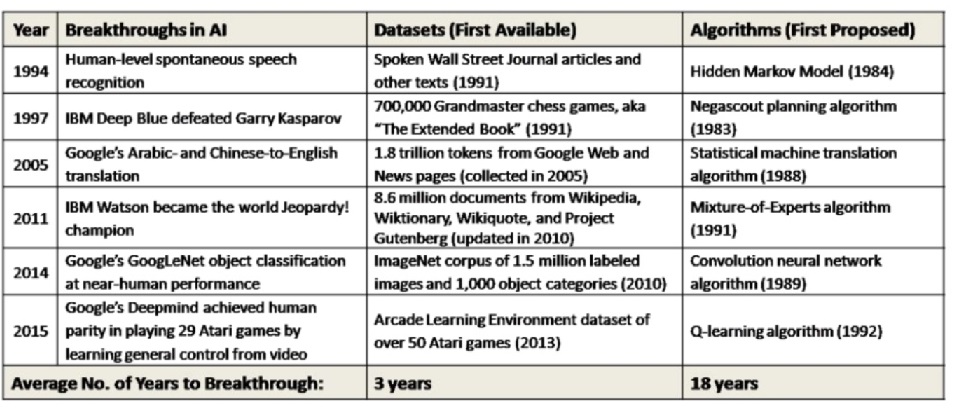

Breakthroughs in AI are depicted in chronological order:

_______

_______

Mathematics, computer science and AI:

To know artificial intelligence, you have to know computer science, and to know computer science you have to know mathematics.

Definition of mathematics:

Mathematics makes up that part of the human conceptual system that is special in the following way:

It is precise, consistent, stable across time and human communities, symbolizable, calculable, generalizable, universally available, consistent within each of its subject matters, and effective as a general tool for description, explanation, and prediction in a vast number of everyday activities, [ranging from] sports, to building, business, technology, and science. There is no branch of mathematics, however abstract, which may not someday be applied to phenomena of the real world. Mathematics arises from our bodies and brains, our everyday experiences, and the concerns of human societies and cultures.

_

Computer science to artificial intelligence:

Since the invention of computers or machines, their capability to perform various tasks has grown exponentially. Humans have developed the power of computer systems in terms of their diverse working domains, their increasing speed, and their decreasing size over time. A branch of Computer Science named Artificial Intelligence pursues creating computers or machines as intelligent as human beings. Theoretical Computer Science has its roots in mathematics, where there was a lot of discussion of logic. It began with Pascal in the 1600s and Babbage in the 1800s, who both tried to build computing machines that would help with arithmetic. Some of these machines actually worked, but they were mechanical devices built on physics, without a real theoretical background. Also in the 1800s, George Boole tried to formulate a mathematical form of logic. This was eventually called Boolean logic in his honor, and we still use it today to form the heart of all computer hardware. All those transistors and things you see on a circuit board are really just physical representations of what George Boole came up with. Computer Science, however, hit its golden age with John von Neumann and Alan Turing in the 1900s. Von Neumann formulated the theoretical form of computers that is still used today as the heart of all computer design: the separation of the CPU, the RAM, the bus, etc. This is known collectively as the von Neumann architecture. Alan Turing, on the other hand, is famous for the theoretical part of Computer Science. He invented the Universal Turing Machine, which gave a precise account of what can and cannot be computed by any mechanical procedure, including today’s standard computers. This formed the basis of Theoretical Computer Science.
Ever since Turing formulated this extraordinary concept, Computer Science has been dedicated to answering one question: “Can we compute this?” This question is known as computability, and it is one of the core disciplines in Computer Science. Another form of the question is “Can we compute this better?” This leads to more complications, because what does “better” mean? So, Computer Science is partly about finding efficient algorithms to do what you need. Still, there are other forms of Computer Science, answering such related questions as “Can we compute thought?” This leads to fields like Artificial Intelligence. Computer Science is all about getting things done, to find progressive solutions to our problems, to fill gaps in our knowledge. Sure, Computer Science may have some math, but it is different from math. In the end, Computer Science is about exploring the limitations of humans, of expanding our horizons.
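A Turing machine is simple enough to simulate in a few lines, which helps make “what can be computed” concrete. The Python sketch below is an illustrative simulator, not Turing’s own formulation: the state names, the `_` blank symbol and the `INCREMENT` rule table are assumptions chosen for this example. The machine adds 1 to a binary number by reading and writing one tape cell at a time.

```python
from collections import defaultdict

BLANK = "_"

# Transition table: (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("seek_end", "0"): ("0", +1, "seek_end"),   # scan right over the number
    ("seek_end", "1"): ("1", +1, "seek_end"),
    ("seek_end", BLANK): (BLANK, -1, "carry"),  # past the last digit: step back
    ("carry", "1"): ("0", -1, "carry"),         # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),           # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),         # ran off the left end: new digit
}


def run_turing_machine(rules, tape_input, state="seek_end", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the non-blank tape contents."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
    if state != "halt":
        raise RuntimeError("machine did not halt within max_steps")
    cells = sorted(i for i, symbol in tape.items() if symbol != BLANK)
    return "".join(tape[i] for i in range(cells[0], cells[-1] + 1))


print(run_turing_machine(INCREMENT, "1011"))  # 1100 (binary 11 + 1 = 12)
```

The `max_steps` guard is a practical concession: in general there is no algorithm that can decide in advance whether an arbitrary machine will halt, which is exactly the kind of limit computability theory studies.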

_

Basics of computer science:

Computer science is the study of problems, problem-solving, and the solutions that come out of the problem-solving process. Given a problem, a computer scientist’s goal is to develop an algorithm, a step-by-step list of instructions for solving any instance of the problem that might arise. Algorithms are finite processes that if followed will solve the problem. Algorithms are solutions. Computer science can be thought of as the study of algorithms. However, we must be careful to include the fact that some problems may not have a solution. Although proving this statement is beyond the scope of this article, the fact that some problems cannot be solved is important for those who study computer science. We can fully define computer science, then, by including both types of problems and stating that computer science is the study of solutions to problems as well as the study of problems with no solutions. It is also very common to include the word computable when describing problems and solutions. We say that a problem is computable if an algorithm exists for solving it. An alternative definition for computer science, then, is to say that computer science is the study of problems that are and that are not computable, the study of the existence and the nonexistence of algorithms. In any case, you will note that the word “computer” did not come up at all. Solutions are considered independent from the machine. Computer science, as it pertains to the problem-solving process itself, is also the study of abstraction. Abstraction allows us to view the problem and solution in such a way as to separate the so-called logical and physical perspectives. Most people use computers to write documents, send and receive email, surf the web, play music, store images, and play games without any knowledge of the details that take place to allow those types of applications to work. They view computers from a logical or user perspective.
Computer scientists, programmers, technology support staff, and system administrators take a very different view of the computer. They must know the details of how operating systems work, how network protocols are configured, and how to code various scripts that control function. This is known as the physical perspective.
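Square roots give a small illustration of the two perspectives. From the logical perspective, a programmer simply calls Python’s `math.sqrt` and trusts the answer. The hypothetical `newton_sqrt` below shows one possible physical implementation, Newton’s method, hidden behind the same kind of interface; it is a sketch for illustration, not how `math.sqrt` is actually implemented.

```python
import math


def newton_sqrt(x: float, rel_tol: float = 1e-12) -> float:
    """Square root by Newton's method: repeat guess <- (guess + x / guess) / 2."""
    if x < 0:
        raise ValueError("square root of a negative number is not real")
    if x == 0:
        return 0.0
    guess = max(x, 1.0)  # start at or above the true root
    while True:
        better = 0.5 * (guess + x / guess)
        if abs(better - guess) <= rel_tol * better:
            return better
        guess = better


# Logical view: both calls answer "what is the square root of 2?"
print(math.sqrt(2), newton_sqrt(2))
```

A user of either function needs only the interface (a number in, its square root out); how the answer is produced is a detail for the person implementing it.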

_

Fields of Computer Science:

Computer science is often said to be neither a science nor about computers. There is certainly some truth to this claim: computers are merely the device upon which the complex and beautiful ideas in computer science are tested and implemented. And it is hardly a science of discovery, as might be physics or biology, so much as it is a discipline of mathematics or engineering. But this all depends on which branch of computer science you are involved in, and there are many: theory, hardware, networking, graphics, programming languages, software engineering, systems, and of course, AI.

_

Theory:

Computer science (CS) theory is often highly mathematical, concerning itself with questions about the limits of computation. Some of the major results in CS theory include what can be computed and how fast certain problems can be solved. Some things are simply impossible to figure out! Other things are merely difficult, meaning they take a long time. The long-standing question of whether “P=NP” lies in the realm of theory. The P versus NP problem is a major unsolved problem in computer science. Informally speaking, it asks whether every problem whose solution can be quickly verified by a computer can also be quickly solved by a computer. A subsection of theory is algorithm development. For instance, theorists might work to develop better algorithms for graph coloring, and theorists have been involved in improving algorithms used by the human genome project to produce faster algorithms for predicting DNA similarity.
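The gap between “quickly verified” and “quickly solved” can be seen with the subset-sum problem: does some subset of a list of numbers add up to a target? In the Python sketch below (function names and data are illustrative), checking a proposed answer takes time roughly proportional to its size, while the brute-force solver may have to try all 2^n subsets. Subset sum is NP-complete, so no algorithm that is polynomial in the input size is known for it.

```python
from itertools import combinations


def verify_subset_sum(numbers, target, indices):
    """Check a proposed answer: fast, roughly proportional to its size."""
    if len(set(indices)) != len(indices):
        return False
    if any(i < 0 or i >= len(numbers) for i in indices):
        return False
    return sum(numbers[i] for i in indices) == target


def solve_subset_sum(numbers, target):
    """Find an answer by brute force: may try all 2**n subsets."""
    for size in range(len(numbers) + 1):
        for indices in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in indices) == target:
                return list(indices)
    return None


numbers = [3, 34, 4, 12, 5, 2]
answer = solve_subset_sum(numbers, 9)
print(answer, verify_subset_sum(numbers, 9, answer))  # [2, 4] True
```

If P were equal to NP, every problem with a fast verifier like this one would also have a fast solver.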

_

Algorithm:

In mathematics and computer science, an algorithm is a self-contained sequence of actions to be performed. Algorithms perform calculation, data processing, and/or automated reasoning tasks. An algorithm is an effective method that can be expressed within a finite amount of space and time and in a well-defined formal language for calculating a function. Starting from an initial state and initial input (perhaps empty), the instructions describe a computation that, when executed, proceeds through a finite number of well-defined successive states, eventually producing “output” and terminating at a final ending state. The transition from one state to the next is not necessarily deterministic; some algorithms, known as randomized algorithms, incorporate random input. An informal definition could be “a set of rules that precisely defines a sequence of operations,” which would include all computer programs, including programs that do not perform numeric calculations. Generally, a program is only an algorithm if it stops eventually. Algorithms are essential to the way computers process data. Many computer programs contain algorithms that detail the specific instructions a computer should perform (in a specific order) to carry out a specified task, such as calculating employees’ paychecks or printing students’ report cards. In computer systems, an algorithm is basically an instance of logic written in software by software developers to be effective for the intended “target” computer(s) to produce output from given (perhaps null) input. For sufficiently large inputs, an optimal algorithm running on old hardware would produce results faster than a non-optimal (higher time complexity) algorithm for the same purpose running on more efficient hardware; that is why algorithms, like computer hardware, are considered technology.
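The point about optimal algorithms beating faster hardware can be made concrete by counting steps rather than seconds. This Python sketch (names and data are illustrative) searches a sorted list of one million numbers two ways: linear search checks items one by one, while binary search halves the remaining range at each step.

```python
def linear_search(items, target):
    """Check items one by one; returns (index, comparisons)."""
    for count, value in enumerate(items, start=1):
        if value == target:
            return count - 1, count
    return -1, len(items)


def binary_search(items, target):
    """Halve a sorted list at each step; returns (index, comparisons)."""
    low, high, count = 0, len(items) - 1, 0
    while low <= high:
        count += 1
        mid = (low + high) // 2
        if items[mid] == target:
            return mid, count
        if items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1, count


data = list(range(1_000_000))
print(linear_search(data, 999_999))  # (999999, 1000000)
print(binary_search(data, 999_999))  # index 999999 after at most 20 comparisons
```

A million comparisons against about twenty: no realistic hardware speed-up closes a gap like that, and it widens as the list grows.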

_

Recursion and Iteration:

Recursion and iteration are two very commonly used, powerful methods of solving complex problems, directly harnessing the power of the computer to calculate things very quickly. Both methods rely on breaking up complex problems into smaller, simpler steps that can be solved easily, but the two methods are subtly different. With iteration, each step clearly leads onto the next, like stepping stones across a river, while in recursion, each step replicates itself at a smaller scale, so that all of them combined together eventually solve the problem. These two basic methods are very important to understand fully, since they appear in a great many computer algorithms.
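The factorial function (n! = 1 × 2 × … × n) is a standard way to see the difference. In the Python sketch below, the iterative version steps from one partial product to the next, while the recursive version defines n! in terms of the smaller problem (n − 1)! and stops at a base case.

```python
def factorial_iterative(n: int) -> int:
    """Iteration: each pass of the loop leads directly onto the next."""
    result = 1
    for k in range(2, n + 1):
        result *= k
    return result


def factorial_recursive(n: int) -> int:
    """Recursion: n! is defined in terms of the smaller problem (n - 1)!."""
    if n <= 1:  # base case stops the self-reference
        return 1
    return n * factorial_recursive(n - 1)


print(factorial_iterative(5), factorial_recursive(5))  # 120 120
```

Both give the same answers, but in Python the recursive version is limited by the interpreter’s recursion depth (about 1,000 calls by default), so the iterative one is the safer choice for large n.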

_

Decision tree:

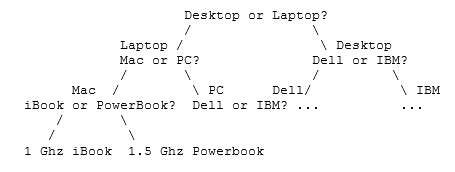

The idea behind decision trees is that, given an input with well-defined attributes, you can classify the input entirely based on making choices about each attribute. For instance, if you’re told that someone has a piece of US currency, you could ask what denomination it is; if the denomination is less than one dollar, it must be the coin with that denomination. That could be one branch of the decision tree that was split on the attribute of “value”. On the other hand, if the denomination happened to be one dollar, you’d then need to ask what type of currency it is: if it were a bill, it would have to be a dollar bill. If it were a coin, it could still be one of a few things (such as a Susan B. Anthony dollar or a Sacagawea dollar). Your next question might then be whether it is named after a female, but that wouldn’t tell you anything at all, since both are! This suggests that some attributes may be more valuable for making decisions than others. A useful way of thinking about decision trees is as though each node of the tree were a question to be answered. Once you’ve reached the leaves of the tree, you’ve reached the answer! For instance, if you were choosing a computer to buy, your first choice might be “Laptop or desktop”. If you chose a laptop, your second choice might be “Mac or PC”. If you chose a desktop, you might have more important factors than Mac or PC (perhaps you know that you will buy a PC because you think they are faster, and if you want a desktop, you want a fast machine). In essence, with a decision tree, the algorithm is designed to make choices in a similar fashion. Each of the questions corresponds to a node in the decision tree, and each node has branches for each possible answer. Eventually, the algorithm reaches a leaf node that contains the correct classification of the input or the correct decision to make.
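The computer-buying example above can be written down directly as a tree of questions. In this Python sketch the nested-dictionary layout, the attribute names `form_factor` and `platform`, and the leaf texts are all hypothetical; classifying an input just means following one branch per answer until a leaf is reached.

```python
# Hypothetical tree: inner nodes ask about one attribute, leaves hold decisions.
TREE = {
    "question": "form_factor",
    "branches": {
        "laptop": {
            "question": "platform",
            "branches": {"mac": "buy a Mac laptop", "pc": "buy a PC laptop"},
        },
        "desktop": "buy a fast PC desktop",
    },
}


def decide(tree, answers):
    """Walk from the root, following the branch that matches each answer."""
    node = tree
    while isinstance(node, dict):  # still at a question node
        node = node["branches"][answers[node["question"]]]
    return node  # reached a leaf: the decision


print(decide(TREE, {"form_factor": "laptop", "platform": "mac"}))  # buy a Mac laptop
print(decide(TREE, {"form_factor": "desktop"}))                    # buy a fast PC desktop
```

Notice that the desktop branch never asks about the platform, just as in the prose example.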

_

Visually, a decision tree looks something like this:

_

In the case of the decision tree learning algorithm, the question at each node will concern the value of one attribute of the input, and each branch from the node will correspond to a possible value of that attribute. We want the shortest decision tree possible (the shortest, simplest way of characterizing the inputs). This introduces a bit of the bias that we know is necessary for learning, by favoring simpler solutions over more complex ones. We make this choice because it works well in general, and it has certainly been a useful principle for scientists trying to come up with explanations for data. Unfortunately, finding the shortest tree is rather difficult; there’s no known way of generating the shortest possible tree without looking at nearly every possible tree, and the number of possible decision trees grows at least exponentially with the number of attributes. Since there are far too many possible trees for an algorithm to simply try every one of them and use the best, we’ll take a less time-consuming approach that usually produces fairly good trees. In particular, we’ll learn a tree that greedily splits on the attributes that tend to give the most information about the data.
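One common way to measure “how much information” an attribute gives is information gain, the reduction in entropy after splitting on it; this is the criterion used by Quinlan’s ID3 algorithm. The Python sketch below shows only that greedy attribute-choice step, not a full tree builder, on a hypothetical toy dataset in which the label depends only on `form_factor`.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Uncertainty of a list of class labels, in bits."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """How much splitting on `attribute` reduces entropy."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attribute], []).append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def best_attribute(rows, labels, attributes):
    """Greedy choice: the attribute with the highest information gain."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))


# Hypothetical toy data: the label depends only on form_factor.
rows = [
    {"form_factor": "laptop", "platform": "mac"},
    {"form_factor": "laptop", "platform": "pc"},
    {"form_factor": "desktop", "platform": "mac"},
    {"form_factor": "desktop", "platform": "pc"},
]
labels = ["portable", "portable", "fast", "fast"]
print(best_attribute(rows, labels, ["form_factor", "platform"]))  # form_factor
```

Splitting on `form_factor` removes all uncertainty (a gain of 1 bit), while `platform` removes none, much like the “named after a female” question in the currency example.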

_

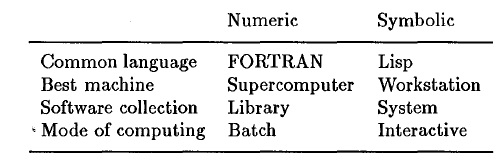

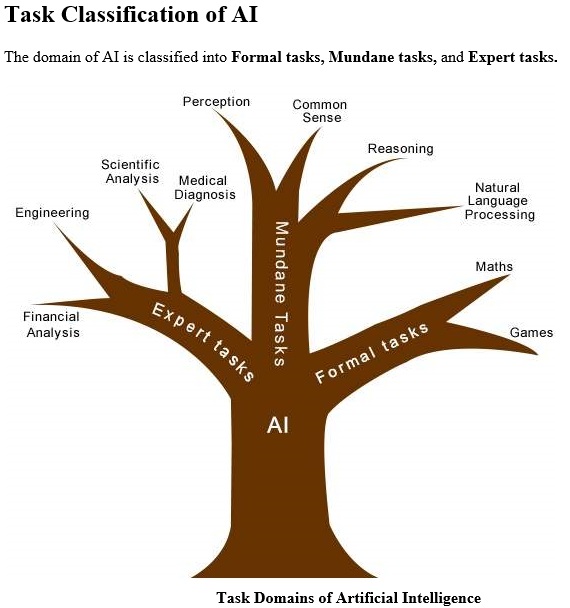

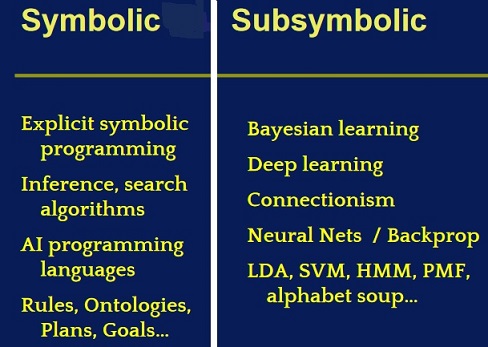

Symbolic and Numeric Computation:

“Computation” can mean different things to different people at different times. Indeed, it was only in the 1950s that “computer” came to mean a machine rather than a human being. The great computers of the 19th century, such as Delaunay, would produce formulae of great length, and only convert to numbers at the last minute. Today, “compute” is largely synonymous with “produce numbers”, and often means “produce numbers in FORTRAN using the NAG library”. However, a dedicated minority use computers to produce formulae. Historically, symbolic and numeric computation have pursued different lines of evolution, have been written in different languages and have generally been seen as competitive rather than complementary techniques. Even when both were used to solve a problem, ad hoc methods were used to transfer the data between them. Whereas calculators brought more numeric computation into the classroom, computer algebra systems enable the use of more symbolic computation. Generally the two methods have been viewed as alternatives, and indeed the following table shows some of the historic differences:

Of course there are exceptions to these rules. MAPLE and MATHEMATICA are written in C, and MATLAB is an interactive package of numerical algorithms; but in general it has been the case that computer algebra systems were interactive packages run on personal workstations, while numerical computation was done on large machines in a batch-oriented environment. The reason for this apparent dichotomy is clear. Numerical computation tends to be very CPU-intensive, so the more powerful the host computer the better; while symbolic computation is more memory-intensive, and performing it on a shared machine might be considered anti-social behavior by other users. However, numeric and symbolic computations do need each other. It is the combination of numeric and symbolic capabilities, enriched by graphical ones, that gives a program like DERIVE an essential advantage over a calculator (or graphics calculator). Hardware has, of course, come a long way since these lines were drawn. Most researchers now have access to powerful workstations with a reasonable amount of memory and high quality display devices. Graphics have become more important to most users and, even if numerical programs are still run on the departmental supercomputer, they are probably developed and tested on the individual’s desk-top machine. Modern hardware is thus perfectly suited for both symbolic and numeric applications. Much of AI involves symbolic computation.
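The difference between producing formulae and producing numbers shows up even in a tiny example. This Python sketch is a deliberately minimal symbolic differentiator (the tuple representation and function names are assumptions for illustration; real systems such as MAPLE or MATHEMATICA are far richer). It derives the formula for the derivative of x·x + 3·x exactly, then compares its value at x = 2 with a purely numeric central-difference estimate.

```python
def diff(expr):
    """Symbolic derivative with respect to x.

    Expressions are numbers, the string "x", or tuples ("+", a, b) / ("*", a, b).
    """
    if isinstance(expr, (int, float)):
        return 0
    if expr == "x":
        return 1
    op, a, b = expr
    if op == "+":
        return ("+", diff(a), diff(b))
    if op == "*":  # product rule: (ab)' = a'b + ab'
        return ("+", ("*", diff(a), b), ("*", a, diff(b)))
    raise ValueError(f"unsupported operator: {op!r}")


def evaluate(expr, x):
    """Numeric value of an expression at a given x."""
    if isinstance(expr, (int, float)):
        return expr
    if expr == "x":
        return x
    op, a, b = expr
    if op == "+":
        return evaluate(a, x) + evaluate(b, x)
    if op == "*":
        return evaluate(a, x) * evaluate(b, x)
    raise ValueError(f"unsupported operator: {op!r}")


f = ("+", ("*", "x", "x"), ("*", 3, "x"))  # x*x + 3*x
symbolic = evaluate(diff(f), 2)            # exact: 2*2 + 3 = 7
h = 1e-6
numeric = (evaluate(f, 2 + h) - evaluate(f, 2 - h)) / (2 * h)  # central difference
print(symbolic, numeric)
```

The symbolic route gives exactly 7, while the numeric estimate is very close to 7 but carries floating-point rounding error; the two approaches complement each other, as the paragraph argues.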

_

Cryptography is another booming area of the theory section of computer science, with applications from e-commerce to privacy and data security. This work usually involves higher-level mathematics, including number theory. Even given all of the work in the field, algorithms such as RSA encryption have yet to be proven totally secure. Work in theory even includes some aspects of machine learning, including developing new and better learning algorithms and coming up with bounds on what can be learned and under what conditions.
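To show where the number theory comes in, here is a toy version of RSA using the small textbook primes 61 and 53. It is a sketch only: real keys use primes hundreds of digits long together with padding schemes, and a modulus this small can be factored instantly. The security of RSA rests on the assumed difficulty of factoring the public modulus n, which is exactly the kind of assumption that has not been proven.

```python
# Toy RSA with textbook-sized primes: for illustration only, NOT secure.
p, q = 61, 53
n = p * q                 # public modulus (3233)
phi = (p - 1) * (q - 1)   # Euler's totient of n (3120)
e = 17                    # public exponent, chosen coprime with phi
d = pow(e, -1, phi)       # private exponent: modular inverse of e (Python 3.8+)


def encrypt(m: int) -> int:
    """Anyone who knows the public key (e, n) can encrypt."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """Only the holder of the private exponent d can decrypt."""
    return pow(c, d, n)


message = 65
cipher = encrypt(message)     # 2790
recovered = decrypt(cipher)   # 65 again
```

Breaking this key only requires factoring 3233 back into 61 × 53; with realistic key sizes no efficient classical factoring method is known.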

_

Hardware:

Computer hardware deals with building circuits and chips. Hardware design lies in the realm of engineering, and covers topics such as chip architecture, but also more general electrical engineering-style circuit design. Computer systems generally consist of three main parts: the central processing unit (CPU) that processes data, memory that holds the programs and data to be processed, and I/O (input/output) devices as peripherals that communicate with the outside world. In a modern system we might find a multi-core CPU, DDR4 SDRAM for memory, a solid-state drive for secondary storage, a graphics card and LCD as a display system, a mouse and keyboard for interaction, and a Wi-Fi connection for networking. Computer buses move data between all of these devices.
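The division into CPU, memory and I/O can be sketched as a tiny simulated stored-program machine. The three-instruction set (`LOAD`, `ADD`, `OUT`, plus `HALT`) and the single accumulator register are hypothetical and chosen for brevity; real processors also have caches, buses, interrupts and far richer instruction sets. The point is that one memory holds both the program and its data, and the CPU repeatedly fetches, decodes and executes instructions.

```python
def run(memory: list) -> list:
    """Fetch-decode-execute loop for a hypothetical accumulator machine."""
    acc = 0        # accumulator register inside the "CPU"
    pc = 0         # program counter: address of the next instruction
    output = []    # stands in for an output device
    while True:
        op, arg = memory[pc]      # fetch the instruction at address pc
        pc += 1
        if op == "LOAD":          # decode and execute
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "OUT":
            output.append(acc)
        elif op == "HALT":
            return output
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Program and data share one memory, as in a stored-program computer.
program = [
    ("LOAD", 5),     # 0: acc <- memory[5]
    ("ADD", 6),      # 1: acc <- acc + memory[6]
    ("OUT", None),   # 2: send acc to the output device
    ("HALT", None),  # 3: stop
    None,            # 4: unused
    40,              # 5: data
    2,               # 6: data
]

print(run(program))  # [42]
```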

_

Networking:

Networking covers topics dealing with device interconnection, and is closely related to systems. Network design deals with anything from laying out a home network to figuring out the best way to link together military installations. Networking also covers a variety of practical topics such as resource sharing and creating better protocols for transmitting data in order to guarantee delivery times or reduce network traffic. Other work in networking includes algorithms for peer-to-peer networks to allow resource detection, scalable searching of data, and load balancing to prevent network nodes from exploiting or damaging the network. Networking often relies on results from theory for encryption and routing algorithms and from systems for building efficient, low-power network nodes.
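Routing is one place where networking borrows directly from algorithm theory. Link-state routing protocols such as OSPF compute routes with a shortest-path algorithm; the sketch below is a plain Dijkstra implementation in Python. The four-node network and its link costs are hypothetical (they could stand for latency in milliseconds), and a real router would also track the next hop for each destination.

```python
import heapq


def shortest_paths(graph: dict, source: str) -> dict:
    """Dijkstra's algorithm: cheapest total cost from source to every reachable node.

    graph maps each node to {neighbour: link_cost}; costs must be non-negative.
    """
    dist = {source: 0}
    queue = [(0, source)]
    while queue:
        d, node = heapq.heappop(queue)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was already found
        for neighbour, cost in graph.get(node, {}).items():
            candidate = d + cost
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return dist


# Hypothetical network with directed link costs.
network = {
    "A": {"B": 4, "C": 1},
    "C": {"B": 2, "D": 7},
    "B": {"D": 1},
}

print(shortest_paths(network, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```

Note that the cheapest route from A to D (A, C, B, D with cost 4) is not the one with the fewest hops, which is why routing protocols weigh link costs rather than simply counting hops.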

_

Graphics:

The field of graphics has become well-known for work in making amazing animated movies, but it also covers topics such as data visualization, which makes it easier to understand and analyze complex data. You may be most familiar with the work in computer graphics because of the incredible strides that have been made in creating graphical 3D worlds!

_

Programming Languages:

A programming language is a formal computer language designed to communicate instructions to a machine, particularly a computer. Programming languages can be used to create programs that control the behavior of a machine or to express algorithms. The term programming language usually refers to high-level languages, such as BASIC, C, C++, COBOL, FORTRAN, Ada, and Pascal. Each language has a unique set of keywords (words that it understands) and a special syntax for organizing program instructions. Programming languages are the heart of much work in computer science; most non-theory areas depend on good programming languages to get the job done. Work on programming languages focuses on several topics. One area is optimization: it’s often said that it’s better to let the compiler figure out how to speed up your program than to hand-code assembly, and these days that’s probably true, because compiler optimizations can do amazing things. Proving program correctness is another aspect of programming language study, which has led to a class of “functional” programming languages. Much recent work has focused on optimizing functional languages, which turn out to be easier to analyze mathematically and prove correct, and also sometimes more elegant for expressing complex ideas compactly. Other work in programming languages deals with programmer productivity, such as designing new language paradigms or simply better implementations of current programming paradigms (for instance, one could see Java as a cleaner object-oriented implementation than C++), or adding new features, such as garbage collection or the ability to create new functions dynamically, to languages and studying how this improves programmers’ productivity. Recently, language-based security has attracted more interest, driven by the question of how to design “safer” languages that make it easier to write secure code.
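One of the simplest compiler optimizations is constant folding: an expression whose operands are all literals is evaluated once, at compile time, instead of every time the program runs. The sketch below applies the idea to Python source using the standard `ast` module (`ast.unparse` needs Python 3.9 or later). The `ConstantFolder` class and `fold` function are our own illustrative names; a production compiler performs this on its own intermediate representation and with many more safety checks.

```python
import ast
import operator

# Map AST operator node types to the functions that evaluate them.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


class ConstantFolder(ast.NodeTransformer):
    """Replace binary operations on two literal constants with their computed value."""

    def visit_BinOp(self, node: ast.BinOp) -> ast.AST:
        self.generic_visit(node)  # fold sub-expressions first (bottom-up)
        op = _OPS.get(type(node.op))
        if op is not None and isinstance(node.left, ast.Constant) and isinstance(node.right, ast.Constant):
            folded = ast.Constant(op(node.left.value, node.right.value))
            return ast.copy_location(folded, node)
        return node  # leave anything involving variables untouched


def fold(source: str) -> str:
    """Parse Python source, fold constant expressions, and return the rewritten source."""
    tree = ConstantFolder().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


print(fold("x = (1 + 2) * 4"))  # x = 12
print(fold("y = 2 * 3 + z"))    # y = 6 + z
```

Because the fold happens bottom-up, nested constant sub-expressions collapse even when the outer expression still depends on a variable such as `z`.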

_

Software Engineering:

Software engineering relies on some of the work from the programming languages community, and deals with the design and implementation of software. Often, software engineering will cover topics like defensive programming, in which the code includes apparently extraneous work to ensure that it is used correctly by others. Software engineering is generally a practical discipline, with a focus on designing and working on large-scale projects. As a result, appreciating software engineering practices often requires a fair amount of actual work on software projects. It turns out that as programs grow larger, the difficulty of managing them dramatically increases in sometimes unexpected ways.
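Defensive programming is easiest to see in a small function. In the sketch below, the checks look redundant if every caller behaves, but they turn silent misuse (an empty list, a string slipped into the data) into an immediate, descriptive error. The `average` function is a hypothetical example, not taken from any particular codebase.

```python
def average(values) -> float:
    """Return the arithmetic mean, refusing inputs that would give a misleading answer."""
    # Defensive checks: cheap to run, and they fail loudly at the point of misuse.
    if not isinstance(values, (list, tuple)):
        raise TypeError(f"expected a list or tuple, got {type(values).__name__}")
    if not values:
        raise ValueError("cannot average an empty sequence")
    for v in values:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(v, bool) or not isinstance(v, (int, float)):
            raise TypeError(f"non-numeric value: {v!r}")
    return sum(values) / len(values)


print(average([1, 2, 3]))  # 2.0
```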

_

Systems:

Systems work deals, in a nutshell, with building programs that use a lot of resources and profiling that resource usage. Systems work includes building operating systems, databases, and distributed computing, and can be closely related to networking. For instance, some might say that the structure of the internet falls in the category of systems work. The design, implementation, and profiling of databases is a major part of systems programming, with a focus on building tools that are fast enough to manage large amounts of data while still being stable enough not to lose it. Sometimes work in databases and operating systems intersects in the design of file systems to store data on disk for the operating system. For example, Microsoft has spent years working on a file system based on the relational database model. Systems work is highly practical and focused on implementation and understanding what kinds of usage a system will be able to handle. As such, systems work can involve trade-offs that require tuning for the common usage scenarios rather than creating systems that are extremely efficient in every possible case. Some recent work in systems has focused on solving the problems associated with large-scale computation (distributed computing) and making it easier to harness the power of many relatively slow computers to solve problems that are easy to parallelize.
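Problems that are easy to parallelize often follow a map-reduce shape: split the input into independent chunks, process each chunk separately (map), then merge the partial results (reduce). The word-count sketch below uses threads from Python’s standard `concurrent.futures` module so that it runs anywhere; on a real cluster the chunks would go to separate machines, and for CPU-bound work in CPython a `ProcessPoolExecutor` would usually be the better local choice because of the global interpreter lock.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_count(chunk: str) -> Counter:
    """Map step: count words in one independent chunk of the input."""
    return Counter(chunk.lower().split())


def parallel_word_count(chunks: list, workers: int = 4) -> Counter:
    """Distribute chunks across workers, then reduce the partial counts into one total."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(map_count, chunks):
            total.update(partial)  # reduce step: merge partial counts
    return total


docs = ["the cat sat", "the dog sat", "The end"]
counts = parallel_word_count(docs)
print(counts["the"], counts["sat"])  # 3 2
```

The map step needs no communication between workers, which is what makes this kind of problem suitable for many relatively slow computers.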

__

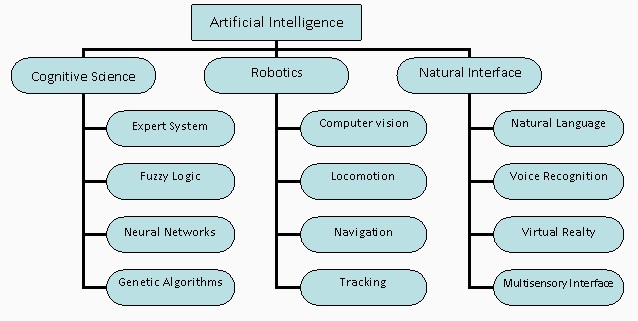

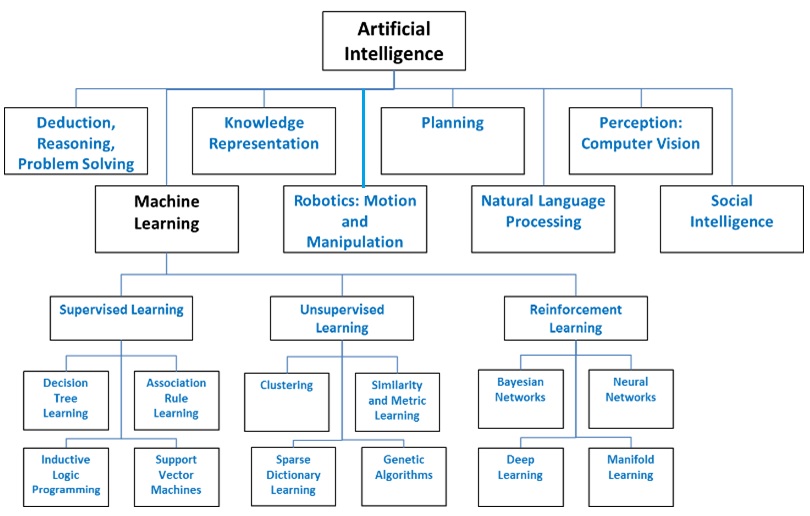

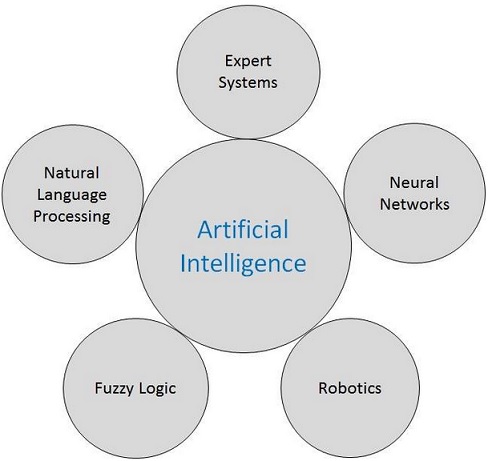

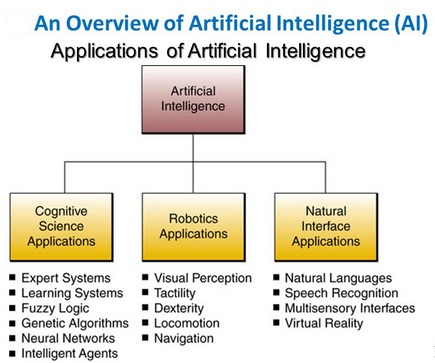

Artificial Intelligence (AI):

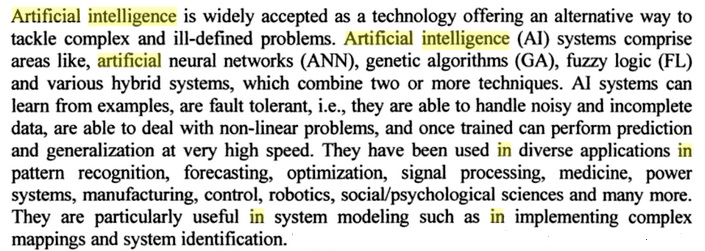

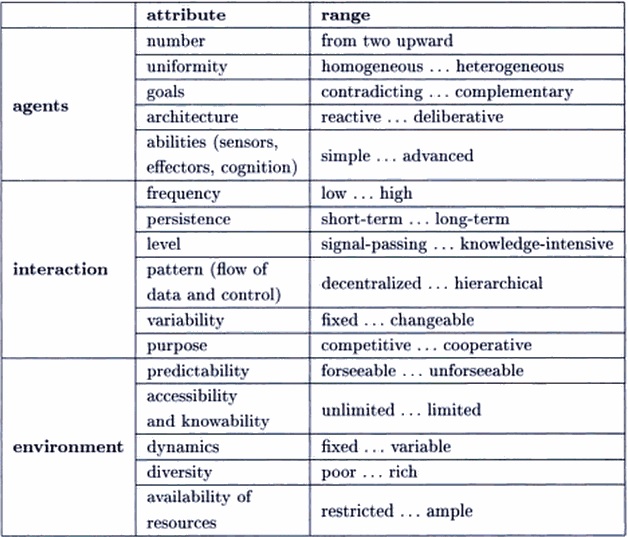

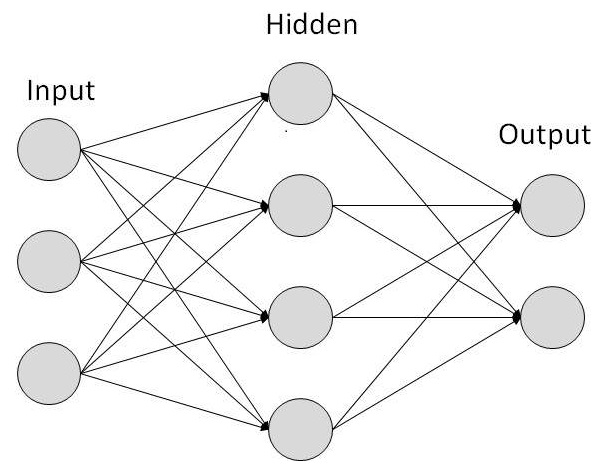

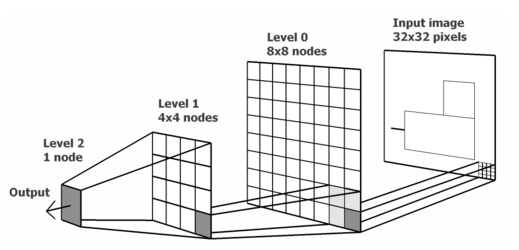

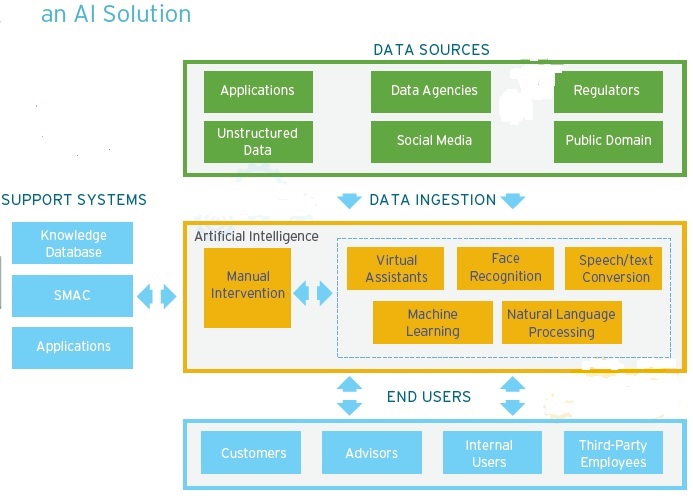

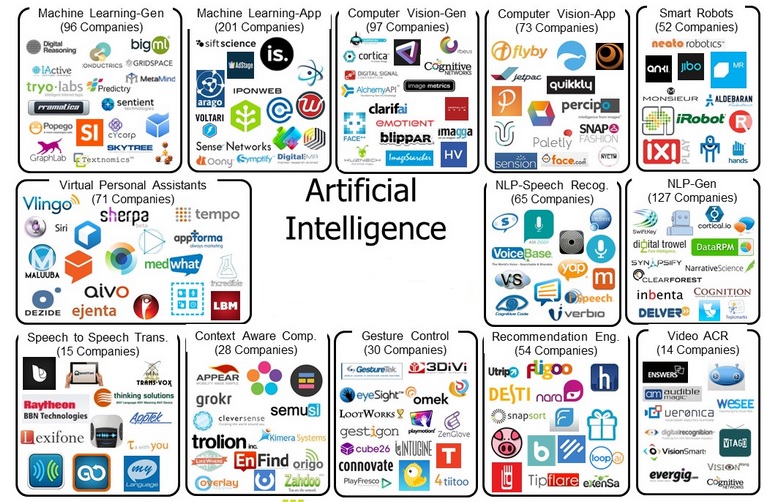

Last, but not least, is artificial intelligence, which covers a wide range of topics. AI work includes everything from planning and searching for solutions (for instance, solving problems with many constraints) to machine learning. There are areas of AI that focus on building game-playing programs for Chess and Go. Other planning problems are of more practical significance, for instance designing programs to diagnose and solve problems in spacecraft or medicine. AI also includes work on neural networks and machine learning, which is designed to solve difficult problems by allowing computers to discover patterns in a large set of input data. Learning can be either supervised, in which case there are training examples that have been classified into different categories (for instance, handwritten numerals classified as the digits 0 through 9), or unsupervised, in which case the goal is often to cluster the data into groups that appear to have similar features (suggesting that they all belong to the same category). AI also includes work in the field of robotics (along with hardware and systems) and multiagent systems, and is focused largely on improving the ability of robotic agents to plan courses of action or strategize about how to interact with other robots or with people. Work in this area has often focused on multiagent negotiation and applying the principles of game theory (for interacting with other robots) or behavioral economics (for interacting with people). Although AI holds out some hope of creating a truly conscious machine, much of the recent work focuses on solving problems of more obvious importance. Thus, the applications of AI to research, in the form of data mining and pattern recognition, are at present more important than the more philosophical topic of what it means to be conscious. Nevertheless, the ability of computers to learn using complex algorithms provides clues about the tractability of the problems we face.
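The contrast between supervised and unsupervised learning can be sketched with two of the simplest methods. A 1-nearest-neighbour classifier (supervised) labels a new point using labeled training examples, while k-means clustering (unsupervised) groups unlabeled points by similarity. Both implementations below are minimal illustrations on made-up two-dimensional data; practical systems would use a library such as scikit-learn and far more data.

```python
import math
import random


def nearest_neighbour(train: list, point: tuple) -> str:
    """Supervised: give a new point the label of its closest training example."""
    closest = min(train, key=lambda example: math.dist(example[0], point))
    return closest[1]


def k_means(points: list, k: int, iterations: int = 20, seed: int = 0) -> list:
    """Unsupervised: group unlabeled points into k clusters around moving centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:  # assignment step: each point joins its nearest centroid
            idx = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        centroids = [  # update step: move each centroid to the mean of its cluster
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return clusters


labeled = [((0, 0), "small"), ((1, 1), "small"), ((9, 9), "large"), ((10, 10), "large")]
print(nearest_neighbour(labeled, (8, 9)))  # large

unlabeled = [(0, 0), (0, 1), (1, 0), (9, 9), (9, 10), (10, 9)]
print(k_means(unlabeled, 2))
```

The classifier can only name categories it was shown; the clustering finds the two groups without ever being told what they are.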

_______

_______

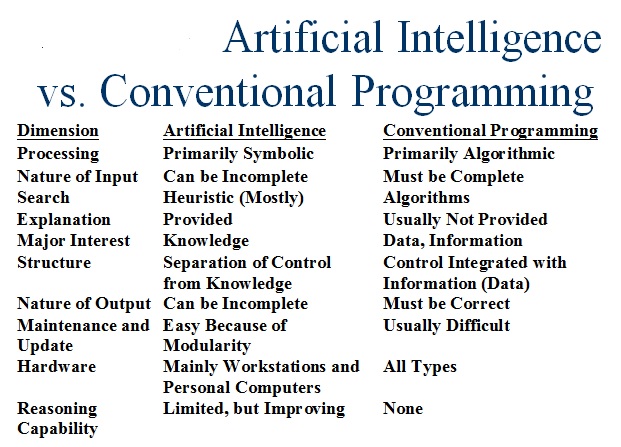

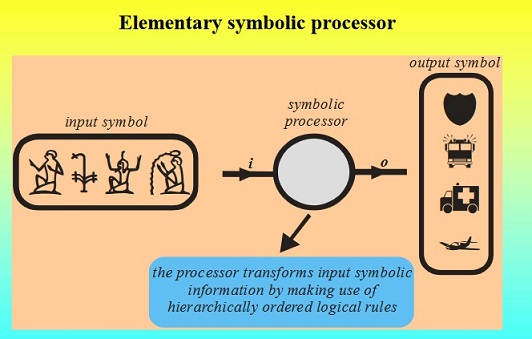

Conventional vs. AI computing:

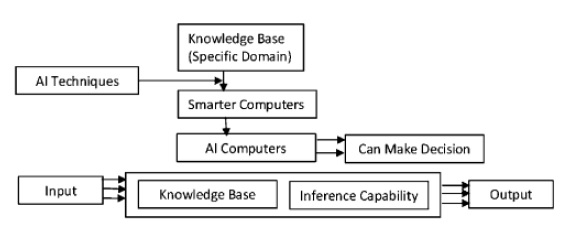

How can a computer work like a human being? We can approach this by considering a few questions: how does a human being store knowledge, how does a human being learn, and how does a human being reason? The art of performing these actions is the aim of AI. The major difference between conventional and AI computing is that in conventional computing the computer is given data and is told how to solve a problem, whereas in AI the computer is given knowledge about a domain and some inference capability.
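“Knowledge about a domain plus some inference capability” can be made concrete with a forward-chaining rule engine. In the sketch below the knowledge (facts and if-then rules about a made-up animal domain) is plain data, and a small generic inference loop derives new facts from it. The rule names are hypothetical and chosen only for illustration.

```python
def forward_chain(facts: set, rules: list) -> set:
    """Generic inference: apply every rule whose premises all hold, until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Domain knowledge, kept separate from the inference code above (hypothetical rules).
rules = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly"}, "is_flightless_bird"),
    ({"is_flightless_bird", "can_swim"}, "may_be_penguin"),
]

observed = {"has_feathers", "cannot_fly", "can_swim"}
print(sorted(forward_chain(observed, rules)))
```

Adding another rule to `rules` changes what the system can conclude without touching `forward_chain` at all, which is the kind of easy, independent modification attributed to AI programs.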

_

Heuristic in artificial intelligence:

A heuristic technique, often called simply a heuristic, is any approach to problem solving, learning, or discovery that employs a practical method not guaranteed to be optimal or perfect, but sufficient for the immediate goals. Where finding an optimal solution is impossible or impractical, heuristic methods can be used to speed up the process of finding a satisfactory solution. Heuristics can be mental shortcuts that ease the cognitive load of making a decision. Examples of this method include using a rule of thumb, an educated guess, an intuitive judgment, stereotyping, profiling, or common sense. AI is the study of heuristics, rather than algorithms. A heuristic is a rule of thumb, which usually works but may not do so in all circumstances. Example: getting to university in time for 8.00 AM lectures. An algorithm is a prescription for solving a given problem over a defined range of input conditions. Example: solving a polynomial equation, or a set of N linear equations involving N variables. It may be more appropriate to seek and accept a sufficient solution (heuristic search) to a given problem, rather than an optimal solution (algorithmic search). Heuristic is the integrated sum of those facts, which give us the ability to remember a face not seen for thirty or more years. In short we can say:

- It is the ability to think and understand instead of doing things by instinct or automatically.

- It is the ability to learn or understand in order to deal with new or trying situations.

- It is the ability to apply knowledge to manipulate one’s environment or to think abstractly, as measured by objective criteria.

- It is the ability to acquire, understand and apply knowledge or the ability to exercise thought and reason.
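The difference between heuristic search and algorithmic search can be sketched on a small grid maze. Breadth-first search is algorithmic: it explores level by level and is guaranteed to find a shortest path. Greedy best-first search is heuristic: it always expands the cell that looks closest to the goal by Manhattan distance (a rule of thumb), which is often fast but does not guarantee the shortest route. The maze and all function names are our own illustrative choices.

```python
import heapq
from collections import deque

GRID = [
    "S....",
    ".###.",
    "...#.",
    ".#...",
    "...#G",
]


def neighbours(grid, cell):
    """Yield open cells directly above, below, left or right of cell."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] != "#":
            yield (nr, nc)


def find(grid, ch):
    """Return the (row, column) position of character ch."""
    for r, row in enumerate(grid):
        if ch in row:
            return (r, row.index(ch))
    return None


def rebuild(parent, goal):
    """Walk parent links back from the goal to reconstruct the path."""
    path = [goal]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path[::-1]


def bfs(grid, start, goal):
    """Algorithmic search: guaranteed to return a shortest path."""
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            return rebuild(parent, goal)
        for nxt in neighbours(grid, cell):
            if nxt not in parent:
                parent[nxt] = cell
                frontier.append(nxt)
    return None


def greedy_best_first(grid, start, goal):
    """Heuristic search: expand whichever cell looks closest to the goal."""
    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])  # Manhattan distance

    parent = {start: None}
    frontier = [(h(start), start)]
    while frontier:
        _, cell = heapq.heappop(frontier)
        if cell == goal:
            return rebuild(parent, goal)
        for nxt in neighbours(grid, cell):
            if nxt not in parent:
                parent[nxt] = cell
                heapq.heappush(frontier, (h(nxt), nxt))
    return None


start, goal = find(GRID, "S"), find(GRID, "G")
print(len(bfs(GRID, start, goal)) - 1)                # 8 moves: a shortest route
print(len(greedy_best_first(GRID, start, goal)) - 1)  # a sufficient route, not always shortest
```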

_

How AI techniques help Computers to be Smarter?

This is the question that comes to mind when we think about AI. Let us consider once more how knowledge is handled in conventional and AI computing. The figure below shows how computers become intelligent when inference capability is infused into them.

_

_

The table below compares conventional computing and AI computing:

__

Programming without AI and programming with AI differ in the following ways:

| Programming Without AI | Programming With AI |
|---|---|
| A computer program without AI can answer the specific questions it is meant to solve. | A computer program with AI can answer the generic questions it is meant to solve. |
| Modifying the program changes its structure. | AI programs can absorb new modifications by putting highly independent pieces of information together. Hence you can modify even a minute piece of information in the program without affecting its structure. |
| Modification is neither quick nor easy, and it may affect the program adversely. | Program modification is quick and easy. |

_

Knowledge-based systems proved to be much more successful than earlier, more general problem-solving systems. Since knowledge-based systems depend on large quantities of high-quality knowledge for their success, the ultimate goal is to develop techniques that permit systems to learn new knowledge autonomously and continually improve the quality of the knowledge they possess.

______

______

Human versus machine intelligence:

_

Intelligence:

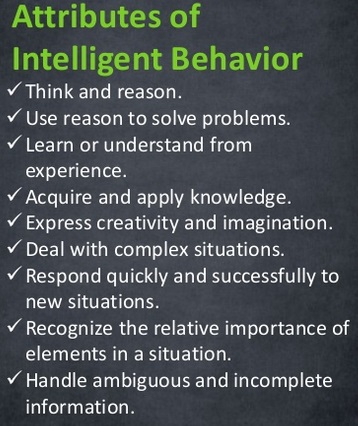

In the early 20th century, Jean Piaget remarked, “Intelligence is what you use when you don’t know what to do, when neither innateness nor learning has prepared you for the particular situation.” In simpler terms, intelligence can be defined as doing the right thing at the right time in a flexible manner that helps you survive proactively and improve productivity in various facets of life. Intelligence is the ability of a system to calculate, reason, perceive relationships and analogies, learn from experience, store and retrieve information from memory, solve problems, comprehend complex ideas, use natural language fluently, classify, generalize, and adapt to new situations. There are various forms of intelligence. There is the more rational variety that is necessitated for intellectually demanding tasks like playing chess, solving complex problems and making discerning choices about the future. There is also the concept of social intelligence, characterized by courteous social behavior. Then there is emotional intelligence, which is about being empathetic toward the emotions and thoughts of the people with whom you engage. We generally experience each of these contours of intelligence in some combination, but that doesn’t mean they cannot be comprehended independently, perhaps even creatively within the context of AI. Most human behaviors are essentially instincts or reactions to outward stimuli; we generally don’t think we need to be intelligent to execute them. In reality, though, our brains are wired smartly to perform these tasks. Most of what we do is reflexive and automatic, in that we sometimes don’t even need to be conscious of these processes, but our brain is always in the process of assimilating, analyzing and implementing instructions. It is very difficult to program robots to do things we typically find very easy to do. In the field of education, intelligence is defined as the capability to understand, deal with and adapt to new situations.
In psychology, it is defined as the capability to apply knowledge to change one’s environment. For example, a physician learning to treat a patient with unfamiliar symptoms, or an artist modifying a painting to change the impression it makes, fits this definition very neatly. Effective adaptation requires perception, learning, memory, logical reasoning and problem solving. This means that intelligence is not a single mental process; it is rather a summation of these processes toward effective adaptation to the environment. In the example of the physician, he or she is required to adapt by seeing material about the disease, learning the meaning behind the material, memorizing the most important facts and reasoning to understand the new symptoms. So, as a whole, intelligence is not considered a mere ability, but a combination of abilities using several cognitive processes to adapt to the environment. Artificial Intelligence is the field dedicated to developing machines that will be able to mimic humans and perform as they do.

__

__

There is a huge difference between (artificial) intelligence (being intelligent) and intelligent behaviors (behaving intelligently). Intelligent behaviors can be simulated using gathered facts and rules and algorithms constructed for limited input data and defined goals. From this point of view, even machines (e.g. calculators, computer programs) can behave intelligently. Such machines are not aware of doing the intelligent computations that have been programmed by intelligent programmers. It is much easier to simulate intelligent behaviors than to reproduce intelligence. On the other hand, (artificial) intelligence can produce rules and algorithms to solve new (usually similar) tasks using gained knowledge and some associative mechanisms that allow it to generalize facts and rules and then memorize or adapt the results of generalization. Today, we can program computers to behave intelligently, but we are still working on making computers intelligent (having their own artificial intelligence). Artificial intelligence, like real intelligence, cannot work without knowledge that has to be somehow represented, generalizable, and easily available in various contexts and circumstances. Knowledge is indispensable for implementing intelligent behaviors in machines. It automatically steers the associative processes that take place in a brain. Knowledge as well as intelligence are automatically formed in brain structures under the influence of incoming data, their combinations, and sequences.

______

Human brain versus computer:

One thing that definitely needs to happen for AI to be a possibility is an increase in the power of computer hardware. If an AI system is going to be as intelligent as the brain, it’ll need to equal the brain’s raw computing capacity. One way to express this capacity is in the total calculations per second (cps) the brain could manage, and you could come to this number by figuring out the maximum cps of each structure in the brain and then adding them all together. For comparison, if a “computation” were equivalent to one floating-point operation (FLOP), a measure used to rate current supercomputers, then 1 quadrillion “computations” per second would be equivalent to 1 petaflop. The most powerful systems in the world are ranked by the total number of petaflops they can achieve. A single petaflop is equal to 1 quadrillion (10^15) calculations per second (cps), or 1,000 trillion operations per second.

_

Digital Computers:

A digital computer system is one in which information has discrete values. The design includes transistors (on/off switches), a central processing unit (CPU) and some kind of operating system (like Windows), and it is based on binary logic (instructions coded as 0s and 1s). Computers are linear designs and have continually grown in terms of size, speed and capacity. In 1971 the first Intel microprocessor (model 4004) had 2,300 transistors, but by 2011 the Intel Pentium microprocessor had 2.3 billion transistors. One of the fastest supercomputers today is built by Fujitsu. It has 864 racks containing 88,128 individual CPUs and can operate at a speed of 10.51 petaflops, or 10.51 quadrillion calculations per second. The Sequoia supercomputer can perform 16.32 quadrillion floating-point operations every second (16.32 petaflops). Supercomputers have led many people to think that we must finally be approaching the capabilities of the human brain in terms of speed and capability, and that we must be on the verge of creating a C-3PO type robot that can think and converse just like a human. But the fact is that we still have a long way to go.
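The two transistor counts quoted above imply a growth rate that can be checked with a few lines of arithmetic. Using only those figures (2,300 in 1971 and 2.3 billion in 2011), the count grew a million-fold, which works out to roughly one doubling every two years, in line with the usual statement of Moore’s law.

```python
import math

# Transistor counts quoted in the text.
count_1971 = 2_300           # Intel 4004
count_2011 = 2_300_000_000   # figure quoted for 2011
years = 2011 - 1971

growth = count_2011 / count_1971         # overall growth factor: one million
doublings = math.log2(growth)            # about 19.9 doublings
years_per_doubling = years / doublings   # about 2.0 years per doubling

print(f"{growth:.0f}x growth, {doublings:.1f} doublings, one every {years_per_doubling:.2f} years")
```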

_

Arthur R. Jensen, a leading researcher in human intelligence, suggests “as a heuristic hypothesis” that all normal humans have the same intellectual mechanisms and that differences in intelligence are related to “quantitative biochemical and physiological conditions” resulting in differences in speed, short-term memory, and the ability to form accurate and retrievable long-term memories. Whether or not Jensen is right about human intelligence, the situation in AI today is the reverse. Computer programs have plenty of speed and memory, but their abilities correspond to the intellectual mechanisms that program designers understand well enough to put in programs. Some abilities that children normally don’t develop till they are teenagers may be in, and some abilities possessed by two-year-olds are still out. The matter is further complicated by the fact that the cognitive sciences still have not succeeded in determining exactly what the human abilities are. Very likely the organization of the intellectual mechanisms for AI can usefully be different from that in people. Whenever people do better than computers on some task, or computers use a lot of computation to do as well as people, this demonstrates that the program designers lack understanding of the intellectual mechanisms required to do the task efficiently. AI aims to make computer programs that can solve problems and achieve goals in the world as well as humans. However, many people involved in particular research areas are much less ambitious. A few people think that human-level intelligence can be achieved by writing large numbers of programs of the kind people are now writing and assembling vast knowledge bases of facts in the languages now used for expressing knowledge. However, most AI researchers believe that new fundamental ideas are required, and therefore it cannot be predicted when human-level intelligence will be achieved.
Many researchers have invented non-computer machines, hoping that they would be intelligent in different ways than computer programs could be. However, they usually simulate their invented machines on a computer and come to doubt that the new machine is worth building. Because many billions of dollars have been spent on making computers faster and faster, another kind of machine would have to be very fast to perform better than a program on a computer simulating the machine.

_

The Human Brain:

The human brain is not a digital computer design. It is some kind of analogue neural network that encodes information on a continuum. It does have its own important parts that are involved in the thinking process, such as the pre-frontal cortex, amygdala, thalamus, hippocampus and limbic system, which are inter-connected by neurons. However, the way they communicate and work is totally different from a digital computer. Neurons are the real key to how the brain learns, thinks, perceives, stores memory, and a host of other functions. The average brain has at least 100 billion neurons, each connected via thousands of synapses that transmit signals via electro/chemical connections. It is the synapses that are most comparable to transistors, because they turn off or on. The human brain has a huge number of synapses. Each of the one hundred billion neurons has on average 7,000 synaptic connections to other neurons. It has been estimated that the brain of a three-year-old child has about 10^15 synapses (1 quadrillion). This number declines with age, stabilizing by adulthood. Estimates vary for an adult, ranging from 100 to 500 trillion. An estimate of the brain’s processing power, based on a simple switch model for neuron activity, is around 100 trillion synaptic updates per second (SUPS). Each neuron is a living cell and a computer in its own right. A neuron has the signal processing power of thousands of transistors. Unlike transistors, neurons can modify their synapses and modulate the frequency of their signals. Unlike digital computers with fixed architecture, the brain can constantly re-wire its neurons to learn and adapt. Instead of programs, neural networks learn by doing and remembering, and this vast network of connected neurons gives the brain excellent pattern recognition. Using visual processing as a starting point, robotics expert Hans Moravec of Carnegie Mellon University estimated that humans can process about 100 trillion instructions per second (roughly 100 teraflops).
But Chris Westbury, associate professor at the University of Alberta, estimates the brain may be capable of 20 million billion calculations per second, or around 20 petaflops. Westbury bases this estimation on the number of neurons in an average brain and how quickly they can send signals to one another. IBM researchers have estimated that a single human brain can process 36.8 petaflops of data. Ray Kurzweil calculated it to be around 10 quadrillion cps. Currently, the world’s fastest supercomputer, China’s Tianhe-2, has actually beaten that number, clocking in at about 34 quadrillion cps. But Tianhe-2 is also unwieldy, taking up 720 square meters of space, using 24 megawatts of power (the brain runs on just 20 watts), and costing $390 million to build. That makes it impractical, so far, for wide usage or even for most commercial or industrial usage. The combined processing power of the 500 most powerful supercomputers has grown to 123.4 petaflops. What’s clear is that computer processing power is at least approaching, if not outpacing, human thought. The brain processes information slowly, since neurons are slow in action (on the order of milliseconds). Biological neurons operate at a peak speed of about 200 Hz, a full seven orders of magnitude slower than a modern microprocessor (~2 GHz). Moreover, neurons transmit spike signals across axons at no more than 120 m/s, whereas existing electronic processing cores can communicate optically at the speed of light.
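The figures quoted in the last two paragraphs can be cross-checked with simple arithmetic. The sketch below uses only numbers stated in the text (which are themselves rough, widely varying estimates). Multiplying 100 billion neurons by an average of 7,000 synapses gives 700 trillion, above the quoted adult range of 100 to 500 trillion, which illustrates how loose these estimates are.

```python
import math

# Figures quoted in the text; all are rough estimates.
neurons = 100e9                  # ~100 billion neurons
synapses_per_neuron = 7_000      # average synaptic connections per neuron
brain_cps_kurzweil = 10e15       # Kurzweil: ~10 quadrillion cps
tianhe2_cps = 34e15              # Tianhe-2: ~34 quadrillion cps
neuron_hz, cpu_hz = 200, 2e9     # peak neuron rate vs. ~2 GHz processor clock
brain_watts, tianhe2_watts = 20, 24e6

total_synapses = neurons * synapses_per_neuron   # 7e14, i.e. 700 trillion
speed_ratio = tianhe2_cps / brain_cps_kurzweil   # Tianhe-2 is ~3.4x Kurzweil's estimate
clock_gap = math.log10(cpu_hz / neuron_hz)       # 7 orders of magnitude
power_ratio = tianhe2_watts / brain_watts        # ~1.2 million times more power

print(f"{total_synapses:.1e} synapses, {speed_ratio:.1f}x speed, "
      f"10^{clock_gap:.0f} clock gap, {power_ratio:.1e}x power")
```

Per watt, then, the brain comes out far ahead even where the supercomputer wins on raw speed.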

_

Computer intelligence versus Human intelligence:

Intelligent systems (both natural and artificial) have several key features. Some intelligence features are more developed in a human’s brain; other intelligence features are more developed in modern computers.

| Name of intelligence feature | Who has the advantage | Comments about comparison |
|---|---|---|
| Experimental learning | Human | Currently computers are not capable of general experimentation, although they can perform some specific (narrow) experiments. |
| Direct gathering of information | Computer | Modern computers are very strong at gathering information. Search engines, particularly Google, are the best example. |
| Decision-making ability to achieve goals | Human | Currently computers are not able to make good decisions in a “general” environment. |
| Hardware processing power | Computer | The processing power of modern computers is tremendous (several billion operations per second). |
| Hardware memory storage | Computer | The HDD storage capacity of modern computers is huge. |
| Information retrieval speed | Computer | The data retrieval speed of modern computers is ~1000 times faster than that of humans. Examples of high-speed data retrieval systems: RDBMS, Google. |
| Information retrieval depth | It’s not clear | Both humans and computers have limited ability in “deep” information retrieval. |
| Information breadth | Computer | Practically every internet search engine beats humans in the breadth of stored and available information. |
| Information retrieval relevancy | Human | Usually the human brain retrieves more relevant information than a computer program, but this advantage of humans shrinks every year. |
| Ability to find and establish correlation between concepts | Human | Currently computers are not able to establish correlations between general concepts. |
| Ability to derive concepts from other concepts | Human | Usually computers are not able to derive concepts from other concepts. |
| Consistent system of super goals | Human | Humans have a highly developed system of super goals (“avoid pain”, “avoid hunger”, sexuality, “desire to talk” …). Implementation of super goals in modern computers is very limited. |

_

Limitations of Digital Computers

We have been so successful with Large Scale Integration (LSI) in continuously shrinking microprocessor circuits and adding more transistors year after year that people have begun to believe that we might actually equal the human brain. But there are problems. The first problem is that in digital computers all calculations must pass through the CPU, which eventually slows down its programs. The human brain doesn’t use a CPU and is much more efficient. The second problem is the limit to shrinking circuits. In 1971, when Intel introduced the 4004 microprocessor, it held 2,300 transistors, each about 10 microns wide. Today a Pentium chip has 1.4 billion transistors, and the transistors are down to 22 nanometers wide (a nanometer is one billionth of a meter). Manufacturers are currently working at 14 nanometers and hope to reach 10 nanometers. The problem is that transistors are getting close to the size of a few atoms, where they will begin to run into problems of quantum physics such as the “uncertainty principle, where you wouldn’t be able to determine precisely where the electron is and it could leak out of the wire.” This could end size reduction for digital computers. Also, all that a transistor in a computer can do is switch current on or off. Transistors have no metabolism, cannot manufacture chemicals and cannot reproduce. The major advantage of a transistor is that it can process information very fast, with signals travelling at close to the speed of light, which a neuron cannot do.

_

Seth Lloyd treats computers as physical systems. He shows elegantly that the speed with which a physical device can process information is limited by its energy, and that the number of degrees of freedom it possesses limits the amount of information it can process. So the physical limits of computation can be calculated as determined by the speed of light, the quantum scale and the gravitational constant. Currently, the basic unit of computer computation, the silicon chip, relies on the same outdated computational architecture that was first proposed nearly 70 years ago. These chips separate processing and memory, the two main functions that chips carry out, into different physical regions, which necessitates constant communication between the regions and lowers efficiency. Although this organization is sufficient for basic number crunching and tackling spreadsheets, it falters when fed torrents of unstructured data, as in vision and language processing.
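The energy limit Lloyd describes can be turned into a worked number. Assuming the Margolus-Levitin bound that Lloyd builds on (a system with energy E can perform at most 2E/(πħ) elementary operations per second) and taking E = mc² for the whole mass of the device, a 1 kg computer is bounded at roughly 5.4 × 10^50 operations per second. The sketch below is a back-of-the-envelope calculation, not a model of any real hardware.

```python
import math

C = 299_792_458.0          # speed of light, m/s
HBAR = 1.054_571_817e-34   # reduced Planck constant, J*s


def max_ops_per_second(mass_kg: float) -> float:
    """Upper bound on operations per second for a device of the given mass,
    assuming all of its rest energy E = m c^2 is available and applying 2E / (pi * hbar)."""
    energy = mass_kg * C ** 2
    return 2 * energy / (math.pi * HBAR)


ultimate_laptop = max_ops_per_second(1.0)
print(f"{ultimate_laptop:.2e} operations per second")  # roughly 5.4e50
```

Comparing this bound with the petaflop (10^15) figures quoted earlier shows how far today’s machines are from the physical limit.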

_

Each human eye has about 120 high-quality megapixels. A really good digital camera has about 16 megapixels. The numbers of megapixels between the eye and the camera are not that dramatically different, but the digital camera has no permanent wire connections between the physical sensors and the optical, computational, and memory functions of the camera. The microprocessor input and output need to be multiplexed to properly channel the flow of the arriving and exiting information. Similarly, the functional heart of a digital computer only time-shares its faculties with the attached devices: memory, camera, speaker, or printer. If such an arrangement existed in the human brain, you could do only one function at a time. You could look, then think, and then stretch out your hand to pick up an object. But you could not speak, see, hear, think, move, and feel at the same time. These problems could be solved by operating numerous microprocessors concurrently, but the hardware would be too difficult to design, too bulky to package, and too expensive to implement. By contrast, parallel processing poses no problem in the human brain. Neurons are tiny, come to life in huge numbers, and form connections spontaneously. Just as important is energy efficiency. Human brains require negligible amounts of energy, and power dissipation does not overheat the brain. A computer as complex as the human brain would need its own power plant with megawatts of power, and a heat sink the size of a city.

___

How human brain is superior to computer:

The brain has a processing capacity of 10 quadrillion instructions (calculations) per second according to Ray Kurzweil. Currently, the world’s fastest supercomputer, China’s Tianhe-2, has actually beaten that number, clocking in at about 34 quadrillion cps. However, the computational power of the human brain is difficult to ascertain, as the human brain is not easily compared with the binary number processing of computers. For example, while the human brain is calculating a math problem, it is subconsciously processing data from millions of nerve cells that handle the visual input of the paper and surrounding area, the aural input from both ears, and the sensory input of millions of cells throughout the body. The brain is also regulating the heartbeat, monitoring oxygen levels, hunger and thirst requirements, breathing patterns and hundreds of other essential factors throughout the body. It is simultaneously comparing data from the eyes and the sensory cells in the arms and hands to keep track of the position of the pen and paper as the calculation is being performed. Human brains can process far more information than the fastest computers. In fact, in the 2000s, the complexity of the entire Internet was compared to that of a single human brain. This is so because brains are great at parallel processing and sorting information. Brains are also about 100,000 times more energy-efficient than computers, but that will change as technology advances.

_

Although the brain-computer metaphor has served cognitive psychology well, research in cognitive neuroscience has revealed many important differences between brains and computers. Appreciating these differences may be crucial to understanding the mechanisms of neural information processing, and ultimately for the creation of artificial intelligence. The most important of these differences are listed below:

Difference 1: Brains are analogue; computers are digital

Difference 2: The brain uses content-addressable memory

Difference 3: The brain is a massively parallel machine; computers are modular and serial

Difference 4: Processing speed is not fixed in the brain; there is no system clock

Difference 5: Short-term memory is not like RAM

Difference 6: No hardware/software distinction can be made with respect to the brain or mind

Difference 7: Synapses are far more complex than electrical logic gates

Difference 8: Unlike computers, processing and memory are performed by the same components in the brain

Difference 9: The brain is a self-organizing system

Difference 10: Brains have bodies
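Difference 2 is easiest to see with a classic textbook model of content-addressable memory, the Hopfield network. The list above does not mention it; it is swapped in here purely as an illustration. In this minimal pure-Python sketch, with tiny hand-picked patterns of +1/-1 values, a memory is retrieved by presenting part of its content (here a corrupted copy) instead of an address, and the network settles on the closest stored pattern. It is a toy, not a model of real neurons.

```python
def train(patterns):
    """Hebbian weights for a Hopfield network; patterns are lists of +1/-1."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:                     # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, cue, sweeps=5):
    """Update units one at a time toward the sign of their input until settled."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if h >= 0 else -1
    return state


PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([PATTERN_A, PATTERN_B])

cue = [-1, 1, 1, 1, -1, -1, -1, -1]   # PATTERN_A with its first element corrupted
print(recall(weights, cue) == PATTERN_A)  # True
```

A conventional computer memory works the other way round: you must know the address (or key) to get the data back, and a slightly wrong address returns unrelated data.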

_

- Humans perceive by patterns, whereas machines perceive by sets of rules and data.

- Humans store and recall information by patterns; machines do it with search algorithms. For example, the number 40404040 is easy to remember, store, and recall because its pattern is simple.

- Humans can figure out the complete object even if part of it is missing or distorted, whereas machines cannot do so reliably.

- Human intelligence is analogue, working in the form of signals, whereas artificial intelligence is digital, working mainly in the form of numbers.
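The 40404040 example can be made concrete: a number with a simple pattern can be stored as a short repeating block plus a repeat count instead of digit by digit. The helpers below are hypothetical and only illustrate that idea; they are not a model of how human memory actually encodes patterns.

```python
def shortest_period(digits: str) -> str:
    """Return the shortest block that, repeated, rebuilds `digits` exactly."""
    n = len(digits)
    for size in range(1, n + 1):
        if n % size == 0 and digits[:size] * (n // size) == digits:
            return digits[:size]
    return digits  # only reached for the empty string


def compress(digits: str) -> tuple:
    """Store a digit string as (repeating block, repeat count)."""
    block = shortest_period(digits)
    return block, (len(digits) // len(block) if block else 0)


print(compress("40404040"))  # ('40', 4)
print(compress("31415926"))  # ('31415926', 1)
```

A patterned number shrinks to two symbols and a count, while a patternless one has to be kept whole, which mirrors why the first is easier to remember.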

__

Processing power needed to simulate a brain:

Whole brain emulation:

A popular approach discussed for achieving general intelligent action is whole brain emulation. A low-level brain model is built by scanning and mapping a biological brain in detail and copying its state into a computer system or another computational device. The computer then runs a simulation so faithful to the original that it behaves in essentially the same way as the original brain or, for all practical purposes, indistinguishably. Whole brain emulation is discussed in computational neuroscience and neuroinformatics in the context of brain simulation for medical research, and in artificial intelligence research as an approach to strong AI. Neuroimaging technologies that could deliver the necessary detailed understanding are improving rapidly, and futurist Ray Kurzweil, in his book The Singularity Is Near, predicts that a map of sufficient quality will become available on a similar timescale to the required computing power.

_

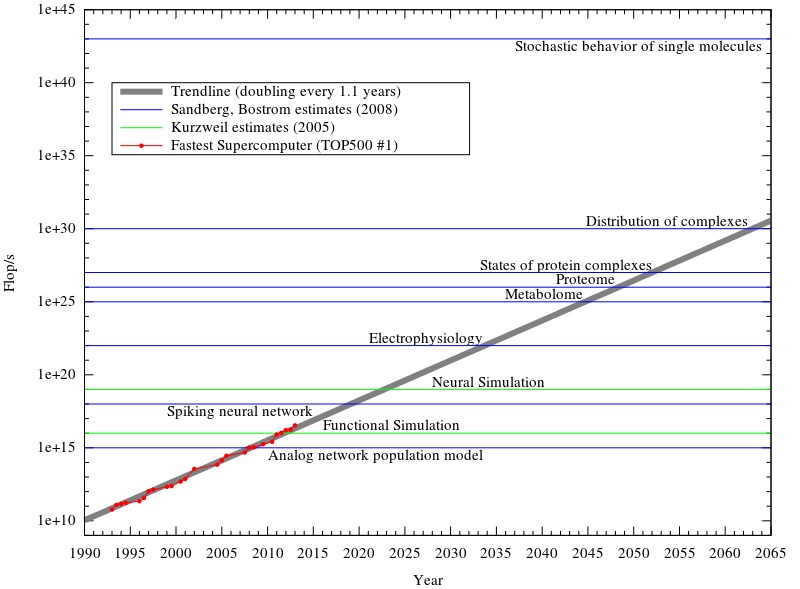

The figure above shows estimates of how much processing power is needed to emulate a human brain at various levels (from Ray Kurzweil, and from Anders Sandberg and Nick Bostrom), along with the fastest supercomputer on the TOP500 list for each year. Note the logarithmic scale and the exponential trendline, which assumes that computational capacity doubles every 1.1 years. Kurzweil believes that mind uploading will be possible at the level of neural simulation, while the Sandberg–Bostrom report is less certain about where consciousness arises.
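The trendline's doubling assumption turns into simple arithmetic: after $t$ years capacity grows by a factor of $2^{t/1.1}$, and a growth factor $F$ takes $1.1 \log_2 F$ years. The Python sketch below just evaluates those two formulas; the 1.1-year doubling time is the figure's assumption, and the helper names are hypothetical.

```python
import math

DOUBLING_YEARS = 1.1  # doubling time assumed by the trendline in the figure


def growth_factor(years: float, doubling_years: float = DOUBLING_YEARS) -> float:
    """How many times capacity multiplies after `years` of steady doubling."""
    return 2 ** (years / doubling_years)


def years_to_grow(factor: float, doubling_years: float = DOUBLING_YEARS) -> float:
    """Years needed for capacity to multiply by `factor`."""
    return doubling_years * math.log2(factor)


print(f"Growth over 11 years: about {growth_factor(11):.0f}x")
print(f"Years for a 1,000x increase: about {years_to_grow(1000):.1f}")
```

On the figure's logarithmic axis this is why each thousandfold step up in required processing power sits roughly 11 years further along the trendline.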

______

______

Testing the intelligence of machines:

Turing test:

Alan Turing’s research into the foundations of computation had proved that a digital computer can, in theory, simulate the behaviour of any other digital machine, given enough memory and time. (This is the essential insight of the Church–Turing thesis and the universal Turing machine.) Therefore, if any digital machine can “act like it is thinking”, then every sufficiently powerful digital machine can. As Turing wrote, “all digital computers are in a sense equivalent.” The Turing test, developed by Alan Turing in 1950, is a test of a machine’s ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural-language conversations between a human and a machine designed to generate human-like responses. The evaluator would be aware that one of the two partners in conversation is a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel, such as a computer keyboard and screen, so that the result would not depend on the machine’s ability to render words as speech. If the evaluator cannot reliably tell the machine from the human, the machine is said to have passed the test. (Turing originally predicted that, within about fifty years, an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning.) The test does not check the ability to give correct answers to questions, only how closely the answers resemble those a human would give. Since Turing first introduced it, the test has proven to be both highly influential and widely criticised, and it has become an important concept in the philosophy of artificial intelligence. One drawback of the Turing test is that it is only as good as the human asking the questions and the knowledge of the human answering them.
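Turing's 1950 benchmark can be read as a simple rate check: across many rounds, the judge should be right about which partner is the machine no more than 70% of the time. The sketch below scores a list of verdicts that way. The round data are hypothetical, and real competitions use their own, more detailed scoring rules.

```python
def identification_rate(verdicts):
    """Fraction of rounds in which the judge correctly identified the machine.

    verdicts: list of booleans, True when the judge picked out the machine.
    """
    if not verdicts:
        raise ValueError("need at least one round")
    return sum(verdicts) / len(verdicts)


def meets_turing_prediction(verdicts, max_rate=0.70):
    """Turing's 1950 benchmark: judges right no more than 70% of the time."""
    return identification_rate(verdicts) <= max_rate


# Hypothetical results from 20 five-minute rounds
rounds = [True] * 13 + [False] * 7
print(identification_rate(rounds))      # 0.65
print(meets_turing_prediction(rounds))  # True
```

Note that the score measures only how often the judge is fooled, not whether the machine's answers are correct, which matches the limitation described above.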

_

Two of the objections cited by Turing are worth considering further. Lady Lovelace’s Objection, first stated by Ada Lovelace, argues that computers can only do as they are told and consequently cannot perform original (hence, intelligent) actions. This objection has become a reassuring if somewhat dubious part of contemporary technological folklore. Expert systems, especially in the area of diagnostic reasoning, have reached conclusions unanticipated by their designers. Indeed, a number of researchers feel that human creativity can be expressed in a computer program.

_

The other related objection, the Argument from Informality of Behavior, asserts the impossibility of creating a set of rules that will tell an individual exactly what to do under every possible set of circumstances. Certainly, the flexibility that enables a biological intelligence to respond to an almost infinite range of situations in a reasonable, if not necessarily optimal, fashion is a hallmark of intelligent behavior. While it is true that the control structure used in most traditional computer programs does not demonstrate great flexibility or originality, it is not true that all programs must be written in this fashion. Indeed, much of the work in AI over the past 25 years has been to develop programming languages and models, such as production systems, object-based systems, network representations, and others, that attempt to overcome this deficiency. Many modern AI programs consist of a collection of modular components, or rules of behavior, that do not execute in a rigid order but are instead invoked as needed in response to the structure of a particular problem instance. Pattern matchers allow general rules to apply over a range of instances. These systems have a flexibility that enables relatively small programs to exhibit a vast range of possible behaviors in response to differing problems and situations. Whether these systems can ultimately be made to exhibit the flexibility shown by a living organism is still the subject of much debate. Nobel laureate Herbert Simon has argued that much of the originality and variability of behavior shown by living creatures is due to the richness of their environment rather than the complexity of their own internal programs. In The Sciences of the Artificial, Simon (1981) describes an ant progressing circuitously along an uneven and cluttered stretch of ground. Although the ant’s path seems quite complex, Simon argues that the ant’s goal is very simple: to return to its colony as quickly as possible.
The twists and turns in its path are caused by the obstacles it encounters on its way. Simon concludes that an ant, viewed as a behaving system, is quite simple: the apparent complexity of its behavior over time is largely a reflection of the complexity of the environment in which it finds itself. If this idea ultimately proves to apply to organisms of higher intelligence as well as to simple creatures such as insects, it constitutes a powerful argument that such systems are relatively simple and, consequently, comprehensible. Interestingly, if one applies this idea to humans, it becomes a strong argument for the importance of culture in the forming of intelligence. Rather than growing in the dark like mushrooms, intelligence seems to depend on interaction with a suitably rich environment. Culture is just as important in creating humans as human beings are in creating culture. Rather than denigrating our intellects, this idea emphasizes the miraculous richness and coherence of the cultures that have formed out of the lives of separate human beings. In fact, the idea that intelligence emerges from the interactions of individual elements of a society is one of the insights behind some approaches to AI technology.
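The production-system idea mentioned above (modular rules invoked whenever their conditions match, with pattern matching letting one general rule cover many instances) can be sketched as a minimal forward-chaining loop in Python. The facts and rule names are hypothetical, and this is a generic illustration rather than any particular system from the AI literature.

```python
def birds_fly(facts):
    """General rule matched against every instance: bird(X) and not penguin(X) -> flies(X)."""
    return {("flies", x) for (pred, x) in facts
            if pred == "bird" and ("penguin", x) not in facts}


def flyers_may_migrate(facts):
    """flies(X) -> may_migrate(X)."""
    return {("may_migrate", x) for (pred, x) in facts if pred == "flies"}


def forward_chain(facts, rules):
    """Fire whichever rules match until no rule adds a new fact."""
    facts = set(facts)
    while True:
        new_facts = set()
        for rule in rules:
            new_facts |= rule(facts) - facts
        if not new_facts:
            return facts
        facts |= new_facts


# Hypothetical working memory; the rule list order is deliberately "backwards"
start = {("bird", "tweety"), ("bird", "opus"), ("penguin", "opus")}
result = forward_chain(start, [flyers_may_migrate, birds_fly])
print(sorted(result - start))  # [('flies', 'tweety'), ('may_migrate', 'tweety')]
```

Although the migration rule is listed first, it only fires once the flying rule has produced a matching fact, so the order of execution is driven by the problem data rather than by the order of the code.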

_

ELIZA and PARRY:

In 1966, Joseph Weizenbaum created a program which appeared to pass the Turing test. The program, known as ELIZA, worked by examining a user’s typed comments for keywords. If a keyword is found, a rule that transforms the user’s comments is applied, and the resulting sentence is returned. If a keyword is not found, ELIZA responds either with a generic riposte or by repeating one of the earlier comments. In addition, Weizenbaum developed ELIZA to replicate the behaviour of a Rogerian psychotherapist, allowing ELIZA to be “free to assume the pose of knowing almost nothing of the real world.” With these techniques, Weizenbaum’s program was able to fool some people into believing that they were talking to a real person, with some subjects being “very hard to convince that ELIZA […] is not human.” Thus, ELIZA is claimed by some to be one of the programs (perhaps the first) able to pass the Turing Test, even though this view is highly contentious. Kenneth Colby created PARRY in 1972, a program described as “ELIZA with attitude”. It attempted to model the behaviour of a paranoid schizophrenic, using a similar (if more advanced) approach to that employed by Weizenbaum. To validate the work, PARRY was tested in the early 1970s using a variation of the Turing Test. A group of experienced psychiatrists analysed a combination of real patients and computers running PARRY through teleprinters. Another group of 33 psychiatrists were shown transcripts of the conversations. The two groups were then asked to identify which of the “patients” were human and which were computer programs. The psychiatrists were able to make the correct identification only 48 percent of the time – a figure consistent with random guessing. In the 21st century, versions of these programs (now known as “chatterbots”) continue to fool people. 
“CyberLover”, a malware program, preys on Internet users by convincing them to “reveal information about their identities or to lead them to visit a web site that will deliver malicious content to their computers”. The program has emerged as a “Valentine-risk”, flirting with people “seeking relationships online in order to collect their personal data”.
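ELIZA's keyword-and-transformation technique can be illustrated with a few lines of Python. The rules below are hypothetical and far smaller than Weizenbaum's actual script; the sketch only shows the mechanism described above: scan for a keyword, apply that rule's transformation (including swapping "my" for "your"), and otherwise fall back to an earlier comment or a generic reply.

```python
import re

# Hypothetical keyword rules: (pattern to look for, response template)
RULES = [
    (re.compile(r"\bI am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bI feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]
GENERIC = ["Please go on.", "I see.", "What does that suggest to you?"]
# Swap first- and second-person words so the reply points back at the user
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "your": "my"}


def reflect(fragment):
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(text, history):
    """Apply the first matching keyword rule; otherwise fall back."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            parts = [reflect(g.rstrip(".!?")) for g in match.groups()]
            return template.format(*parts)
    if history:  # no keyword: return to one of the user's earlier comments
        return f'Earlier you said "{history[-1]}". Can you say more about that?'
    return GENERIC[len(text) % len(GENERIC)]


print(respond("I am unhappy with my job.", []))
# How long have you been unhappy with your job?
```

Nothing in this loop models meaning; it only rearranges the user's own words, which is why such programs can seem conversational without any understanding.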

_

The Chinese room:

John Searle’s 1980 paper Minds, Brains, and Programs proposed the “Chinese room” thought experiment and argued that the Turing test could not be used to determine whether a machine can think. Searle noted that software (such as ELIZA) could pass the Turing test simply by manipulating symbols it did not understand. Without understanding, such programs could not be described as “thinking” in the same sense that people do. Therefore, Searle concludes, the Turing test cannot prove that a machine can think. Much like the Turing test itself, Searle’s argument has been both widely criticised and highly endorsed. Arguments such as those of Searle and others working on the philosophy of mind sparked a more intense debate about the nature of intelligence, the possibility of intelligent machines and the value of the Turing test, a debate that continued through the 1980s and 1990s.

_

Loebner Prize:

The Loebner Prize provides an annual platform for practical Turing tests, with the first competition held in November 1991. It is underwritten by Hugh Loebner, and the Cambridge Center for Behavioral Studies in Massachusetts, United States, organised the prizes. As Loebner described it, one reason the competition was created was to advance the state of AI research, at least in part because no one had taken steps to implement the Turing test despite 40 years of discussing it. The Loebner Prize tests conversational intelligence; winners are typically chatterbot programs, or Artificial Conversational Entities (ACE). During the 2009 competition, held in Brighton, UK, the communication program restricted judges to 10 minutes for each round: 5 minutes to converse with the human and 5 minutes to converse with the program. This was to test the alternative reading of Turing’s prediction that the 5-minute interaction was to be with the computer. For the 2010 competition, the sponsor again increased the interaction time between interrogator and system, to 25 minutes, well above the figure given by Turing.

__

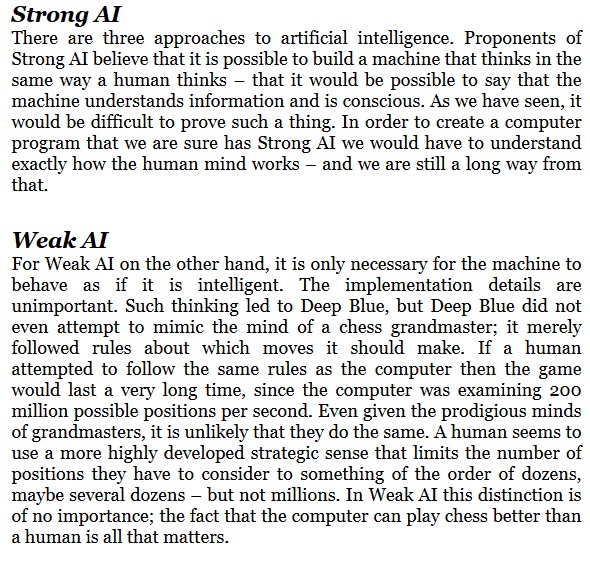

IBM’s Deep Blue and chess: