Dr Rajiv Desai

An Educational Blog

FACIAL RECOGNITION (TECHNOLOGY)

Facial Recognition (Technology):

_____

_____

Prologue:

Faces are special. Days after birth, an infant can distinguish his or her mother’s face from those of other women. Babies are more reliably engaged by a sketch of a face than they are by other images. Though human faces are quite similar in their basic composition, most of us can differentiate effortlessly among them. A face is a codex of social information: it can often tell us, at a glance, someone’s age, gender, racial background and mood. The human brain is often less reliable than digital algorithms, but it remains superior at facial recognition. At the airport, when a scanner compares your face with your passport photo, the lighting is perfect and the angle is perfect. By contrast, an average human can recognize a family member from behind. No computer will ever be able to do that. Though we may take for granted our brain’s ability to recognize the faces of friends, family, and acquaintances, it is actually an extraordinary gift. Designing an algorithm that can effectively scan through a series of digitized photographs or still video images of faces and detect all occurrences of a previously encountered face is a monumental task. This challenge and many others are the focus of a broad area of computer science research known as facial recognition. The discipline of facial recognition spans the subjects of graphics and artificial intelligence, and it has been the subject of decades of research and the product of significant government and corporate investment.

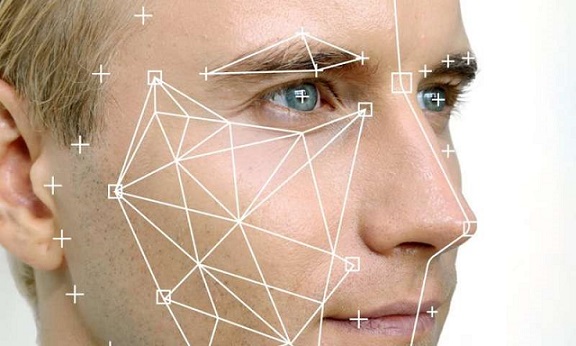

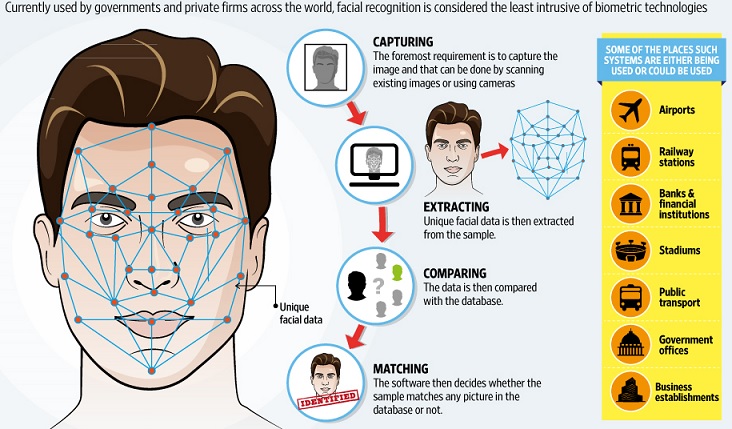

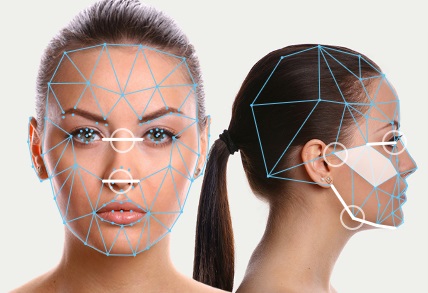

At its most basic, facial recognition technology, sometimes referred to as facial biometrics, involves using a 2D or 3D camera to capture an image of a human face. The algorithms measure numerous points on the face down to the sub-millimeter. The pattern is then compared to others in a database for a match, whether it’s the billion people on Facebook or the one that unlocks your smartphone. The ability of computers to recognize people’s faces is improving rapidly, along with the ubiquity of cameras and the power of computing hosted in the internet cloud to figure out identities in real time. Every biometric, whether a finger, face, iris, voice or any other, is a matter of matching a pattern. For fingerprints, it’s swirls and whorls. For faces, it’s landmark points like the eyes, nose, and mouth. There is a saying that fingerprints cannot lie, but liars can make fingerprints. Unfortunately, this saying has been proven right on many occasions, and not only for fingerprints but also for facial recognition.

______

______

Glossary of terms, acronyms and abbreviations:

Biometric characteristic: A biological and/or behavioral characteristic of an individual that can be detected and from which distinguishing biometric features can be repeatedly extracted for the purpose of automated recognition of individuals

Biometric feature: A biometric characteristic that has been processed so as to extract numbers or labels which can be used for comparison

Biometric feature extraction: The process through which biometric characteristics are converted into biometric templates

Biometric identification: Search against a gallery to find and return a sufficiently similar biometric template

Biometric probe: Biometric characteristics obtained at the site of verification or identification (e.g., an image of an individual’s face) that are passed through an algorithm which converts the characteristics into biometric features for comparison with biometric templates

Biometric template: Set of stored biometric features comparable directly to biometric features of a probe biometric sample

Biometric verification: The process by which an identification claim is confirmed through biometric comparison

Database: same as Gallery

Enrolment: The process through which a biometric characteristic is captured and processed so that it can enter the image gallery as a biometric template

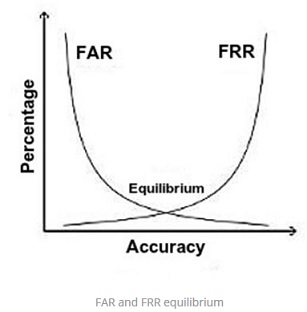

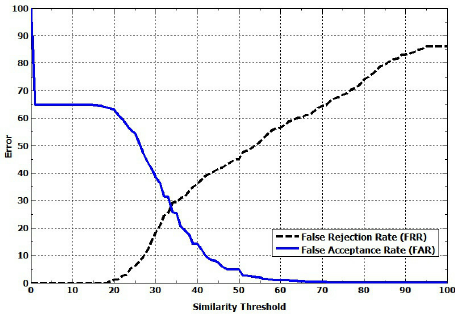

Equal error rate (EER): The rate at which the false accept rate is exactly equal to the false reject rate

Facial features: The essential distinctive characteristics of a face, which algorithms attempt to express or translate into mathematical terms so as to make recognition possible.

Facial landmarks: important locations in the face-geometry such as position of eyes, nose, mouth, etc.

False accept rate (FAR): A statistic used to measure biometric performance when performing the verification task. The percentage of times a face recognition algorithm, technology, or system falsely accepts an incorrect claim to existence or non-existence of a candidate in the database over all comparisons between a probe and gallery image

False negative: An incorrect non-match between a probe and a candidate in the gallery returned by a face recognition algorithm, technology, or system

False positive: An incorrect match between a biometric probe and biometric template returned by a face recognition algorithm, technology, or system

False reject rate (FRR): A statistic used to measure biometric performance when performing the verification task. The percentage of times a face recognition algorithm, technology, or system incorrectly rejects a true claim to existence or non-existence of a match in the gallery, based on the comparison of a biometric probe and biometric template

Gallery: A database in which stored biometric templates reside

Impostor: A person who submits a biometric sample in either an intentional or inadvertent attempt to claim the identity of another person to a biometric system

Match: A match is where the similarity score (of the probe compared to a biometric template in the reference database) is within a predetermined threshold

Matching score: Numerical value (or set of values) resulting from the comparison of a biometric probe and biometric template

Recognition rate: A generic metric used to describe the results of the repeated performance of a biometric system to indicate the probability that a probe and a candidate in the gallery be matched

Similarity score: A value returned by a biometric algorithm that indicates the degree of similarity or correlation between a biometric template (probe) and a previously stored template in the reference database

Three-dimensional (3D) algorithm: A recognition algorithm that makes use of images from multiple perspectives, whether feature-based or holistic

Threshold: Numerical value (or set of values) at which a decision boundary exists

True accept rate (TAR) = 1-false reject rate

True reject rate (TRR) = 1-false accept rate

Python (programming language): Python is a general purpose programming language started by Guido van Rossum that became very popular very quickly, mainly because of its simplicity and code readability. It enables the programmer to express ideas in fewer lines of code without reducing readability.

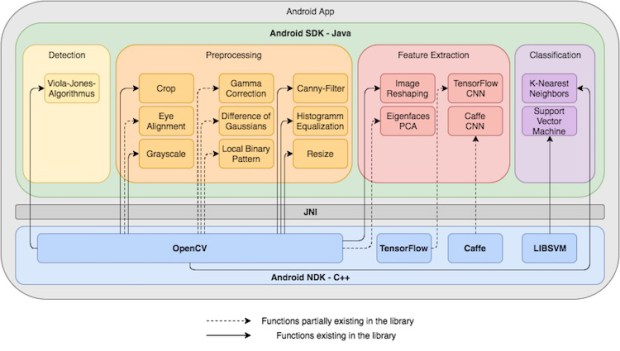

OpenCV: OpenCV (Open Source Computer Vision) is a library of programming functions mainly aimed at real-time computer vision. OpenCV supports a wide variety of programming languages such as C++, Python, Java, etc., and is available on different platforms including Windows, Linux, OS X, Android, and iOS.

OpenCV-Python: OpenCV-Python is the Python API for OpenCV, combining the best qualities of the OpenCV C++ API and the Python language. OpenCV-Python is a library of Python bindings designed to solve computer vision problems.

Face recognition vendor test (FRVT): FRVT is an independently administered technology evaluation of mature face recognition systems. FRVT provides performance measures for assessing the capability of face recognition systems to meet requirements for large-scale, real-world applications.

_

Abbreviations:

AI = artificial intelligence

ACLU = American Civil Liberties Union

API = Application Programming Interface

EER = Equal Error Rate

FR = facial recognition = face recognition

FAR = False Accept Rate

FRGC = Face Recognition Grand Challenge

FRR = False Reject Rate

FRS = Face/Facial Recognition System

FRT = Face/Facial Recognition Technology

FRVT = Face Recognition Vendor Test

ICA = Independent component analysis

ISO/IEC = International Organization for Standardization/International Electrotechnical Commission

JPEG = Joint Photographic Experts Group

LFA = Local Feature Analysis

NIST = National Institute of Standards and Technology

PCA = Principal Component Analysis

PIN = personal identification number

ROC = Receiver Operating Characteristic

SVM = Support Vector Machines

TAR = True Accept Rate

TRR = True Reject Rate

IR = infrared

______

______

Biometrics:

_

The term “biometrics” is derived from the Greek words “bio” (life) and “metrics” (to measure). Automated biometric systems have only become available over the last few decades, due to significant advances in the field of computer processing. Many of these new automated techniques, however, are based on ideas that were originally conceived hundreds, even thousands of years ago. One of the oldest and most basic examples of a characteristic that is used for recognition by humans is the face. Since the beginning of civilization, humans have used faces to identify known (familiar) and unknown (unfamiliar) individuals. This simple task became increasingly challenging as populations increased and as more convenient methods of travel introduced many new individuals into once-small communities. Other characteristics have also been used throughout the history of civilization as a more formal means of recognition.

_

A biometric is a unique, measurable characteristic of a human being that can be used to automatically recognize an individual or verify an individual’s identity. Biometric authentication is used in computer science as a form of identification and access control. It is also used to identify individuals in groups that are under surveillance. Biometric identifiers are the distinctive, measurable characteristics used to label and describe individuals. Biometric identifiers are often categorized as physiological versus behavioral characteristics. Physiological characteristics are related to the shape of the body. Examples include, but are not limited to, fingerprint, palm veins, face recognition, DNA, palm print, hand geometry, iris recognition, retina and odour/scent. Behavioral characteristics are related to the pattern of behavior of a person, including but not limited to typing rhythm, gait, and voice. Some researchers have coined the term behaviometrics to describe the latter class of biometrics.

_

Biometrics is used to identify and authenticate a person using a set of recognizable and verifiable data unique and specific to that person.

Identification answers the question: “Who are you?”

Authentication answers the question: “Are you really who you say you are?”
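
The difference between the two questions can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three-number feature vectors, the names in the gallery, the cosine similarity measure and the 0.95 threshold are all made up for the example, and a real system would use far richer templates. Authentication compares the probe with one claimed template (1:1), whereas identification searches the whole gallery (1:N).

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, claimed_id, gallery, threshold=0.95):
    """Authentication (1:1): does the probe match the claimed identity's template?"""
    template = gallery.get(claimed_id)
    if template is None:
        return False
    return cosine_similarity(probe, template) >= threshold

def identify(probe, gallery, threshold=0.95):
    """Identification (1:N): return the best match above the threshold, else None."""
    best_id, best_score = None, threshold
    for identity, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = identity, score
    return best_id

# Hypothetical gallery of enrolled templates (illustrative numbers only).
gallery = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.2, 0.8, 0.5],
}
probe = [0.88, 0.12, 0.31]  # a fresh sample that resembles "alice"

print(verify(probe, "alice", gallery))  # "Are you really who you say you are?"
print(verify(probe, "bob", gallery))
print(identify(probe, gallery))         # "Who are you?"
```

Note that identification has to compare the probe against every template in the gallery, so its cost and its chance of a false match both grow with the size of the gallery.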

_

More traditional means of access control include token-based identification systems, such as a driver’s license or passport, and knowledge-based identification systems, such as a password or personal identification number. According to Pew Research, the average individual has to remember between 25 and 150 passwords or PIN codes, with 39% saying they use the same or similar passwords in order to avoid confusion. While this, of course, is understandable given the number of online accounts a person may have, it creates the perfect environment for identity theft. Although password/PIN systems and token systems are still the most common person verification and identification methods, forgery, theft and lapses in users’ memory pose a very real threat to high-security environments, which are now turning to biometric technologies to alleviate this threat. Since biometric identifiers are unique to individuals, they are more reliable in verifying identity than token and knowledge-based methods; however, the collection of biometric identifiers raises privacy concerns about the ultimate use of this information.

_

Biometrics makes use of unique physiological and behavioral patterns of the human body. These patterns are formed randomly owing to different biological and environmental reasons. Randomness and complexity of details make these patterns good enough to be considered as unique. These biological or behavioral characteristics can be as obvious as facial structure or voice, which can even be recognized and differentiated by human senses, or as unapparent as DNA sequence or vascular structure, which require special equipment and processing to identify an individual. Despite the sizable differences among biometric traits, they serve a common purpose: making personal identification possible. Biometrics makes use of statistical, mathematical, imaging and computing techniques to uniquely map these patterns for an individual. These patterns are first captured by imaging or scanning and then taken through specialized algorithms to generate a biometric template, which is unique to the individual.

_

Biometrics can measure both physiological and behavioral characteristics:

Physiological biometrics (based on measurements and data derived directly from the human body) include:

Finger-scan

Facial Recognition

Iris-scan

Retina-scan

Hand-scan

Behavioral biometrics (based on measurements and data derived from an action) include:

Voice-scan

Signature-scan

Gait-scan

There are also secondary biometrics, such as recognising individual patterns around device and keyboard use but these have so far been limited in their deployment.

_

Some of these features are extracted from camera devices and some are captured through specialized scanners. These extracted features can be used in different applications in different ways. These applications include recognition systems, authentication systems, age verification systems, disease identification systems etc. Today many online and offline applications use biometric features to improve authenticity, and biometric applications are used across industries, institutions and government establishments. In many businesses, it is crucial for the continuity of operations that biometric systems keep functioning without interruption. In institutions like hospitals and blood transfusion units, where precise identification of patients and blood / organ donors can be crucial, biometrics reduces the possibility of human error and expedites overall healthcare operations by streamlining patient identification.

_

Biometric functionality:

Many different aspects of human physiology, chemistry or behavior can be used for biometric authentication. The selection of a particular biometric for use in a specific application involves a weighting of several factors. Jain et al. (1999) identified seven such factors to be used when assessing the suitability of any trait for use in biometric authentication.

- Universality means that every person using a system should possess the trait.

- Uniqueness means the trait should be sufficiently different for individuals in the relevant population such that they can be distinguished from one another.

- Permanence relates to the manner in which a trait varies over time. More specifically, a trait with ‘good’ permanence will be reasonably invariant over time with respect to the specific matching algorithm.

- Measurability (collectability) relates to the ease of acquisition or measurement of the trait. In addition, acquired data should be in a form that permits subsequent processing and extraction of the relevant feature sets.

- Performance relates to the accuracy, speed, and robustness of technology used.

- Acceptability relates to how well individuals in the relevant population accept the technology such that they are willing to have their biometric trait captured and assessed.

- Circumvention relates to the ease with which a trait might be imitated using an artifact or substitute.

Proper biometric use is very application dependent. Certain biometrics will be better than others based on the required levels of convenience and security. No single biometric will meet all the requirements of every possible application.

_

Choosing a biometric modality:

Deploying a biometric modality depends on the identification or authentication application it is going to be used with. For example, for low-security door access, fingerprint-based access does the job; however, for logical access to a high-security network server, a user might have to authenticate with fingerprints as well as his or her voice print. A biometric authentication application can be implemented using a unimodal approach, which relies on a single biometric modality, or a multimodal approach, which combines more than one. In many online and mobile services, for example app-based mobile banking or financial services applications, a comparatively newer approach called continuous authentication is used. With conventional authentication, once a user passes the authentication / verification barrier, there is no way to make sure that it is the same user throughout the session. Continuous authentication addresses this problem by monitoring the user throughout the session to make sure that the device or application is still being used by the genuine user. It leverages behavioral biometrics to create a unique user profile from usage patterns and hardware / sensor data. The user’s authentication state is then tracked against this profile throughout the session, and access can be denied in the middle of a session if any irregularities are detected.
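
The idea of continuous authentication can be illustrated with a small Python sketch. Everything in it is an assumption made for the example: the class name, the rolling window of three observations, the 0.6 threshold, and the behavioral match scores (values between 0 and 1 that some unspecified behavioral model would produce). It shows only the session logic, namely that access is withdrawn mid-session once recent behavior stops resembling the genuine user’s profile.

```python
from collections import deque

class ContinuousAuthenticator:
    """Tracks recent behavioral match scores and ends the session if they drop."""

    def __init__(self, threshold=0.6, window=3):
        self.threshold = threshold          # minimum acceptable rolling average
        self.scores = deque(maxlen=window)  # only the most recent scores are kept
        self.active = True                  # session starts after the initial login

    def observe(self, score):
        """Record one match score in [0, 1]; return whether the session is still active."""
        if not self.active:
            return False  # once access is denied, it stays denied for this session
        self.scores.append(score)
        average = sum(self.scores) / len(self.scores)
        if average < self.threshold:
            self.active = False
        return self.active

# Hypothetical session: the genuine user types for a while, then someone else takes over.
session = ContinuousAuthenticator(threshold=0.6, window=3)
results = [session.observe(s) for s in [0.9, 0.85, 0.8, 0.3, 0.2, 0.1]]
print(results)  # the session is terminated at the fifth observation
```

Averaging over a short window, rather than reacting to a single low score, is one simple way to avoid locking out the genuine user because of one unusual keystroke or gesture.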

Some important factors that need to be considered before selecting a particular biometric modality are:

Accuracy:

It is one of the most important factors that need to be assessed when selecting a modality. Again, accuracy is based on several other factors such as false acceptance rate (FAR), false reject rate (FRR), error rate, identification rate etc.

Anti-spoofing capabilities:

The widespread use of biometric recognition systems in various sensitive applications stresses the importance of stronger protection against intruder attacks. Therefore, a lot of importance is given to direct attacks where unauthorized individuals can gain access to the system by interacting with the system input device. These unauthorized attempts to access the system are known as spoofing attacks and therefore the chosen modality should have strong anti-spoofing capabilities.

Cost-effectiveness:

This is an important factor to consider when deciding the effectiveness and suitability of a particular modality. Some modalities may be more cost-effective than others due to the underlying technology or hardware characteristics. It is important to realize that the initial investment done on a biometric system can often be compensated in a short amount of time which leads to faster return on investment (ROI).

User acceptability:

The deployment of a particular identification system also depends on how well it is accepted by the users. In some cultures, certain modalities have a stigma associated with them and it can negatively impact the success of the implemented modality. Therefore, it is important to understand which modalities are well acceptable versus those that may cause some user acceptance issues.

Hygiene:

Another important factor to consider before making a deployment decision is whether the system has contact dependent hardware. Many organizations prefer to use contactless modalities due to hygiene reasons and also for infection control.

So organizations should consider all the above factors before selecting a particular modality for their applications. The selected modality should also meet the operational requirements for their deployment.

_____

The following are used as performance metrics for biometric systems:

- False match rate (FMR, also called FAR = False Accept Rate): the probability that the system incorrectly matches the input pattern to a non-matching template in the database. It measures the percent of invalid inputs that are incorrectly accepted. On a similarity scale, if the person is in reality an impostor but the matching score is higher than the threshold, then he or she is treated as genuine. This increases the FAR, which therefore also depends on the threshold value.

- False non-match rate (FNMR, also called FRR = False Reject Rate): the probability that the system fails to detect a match between the input pattern and a matching template in the database. It measures the percent of valid inputs that are incorrectly rejected.

- Receiver operating characteristic or relative operating characteristic (ROC): The ROC plot is a visual characterization of the trade-off between the FAR and the FRR. In general, the matching algorithm performs a decision based on a threshold that determines how close to a template the input needs to be for it to be considered a match. If the threshold is reduced, there will be fewer false non-matches but more false accepts. Conversely, a higher threshold will reduce the FAR but increase the FRR. A common variation is the Detection error trade-off (DET), which is obtained using normal deviation scales on both axes. This more linear graph illuminates the differences for higher performances (rarer errors).

- Equal error rate or crossover error rate (EER or CER): the rate at which both acceptance and rejection errors are equal. The value of the EER can be easily obtained from the ROC curve. The EER is a quick way to compare the accuracy of devices with different ROC curves. In general, the device with the lowest EER is the most accurate.

- Failure to enrol rate (FTE or FER): the rate at which attempts to create a template from an input are unsuccessful. This is most commonly caused by low quality inputs.

- Failure to capture rate (FTC): Within automatic systems, the probability that the system fails to detect a biometric input when presented correctly.

- Template capacity: the maximum number of sets of data that can be stored in the system.
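
The threshold-dependent metrics above (FAR, FRR and EER) can be made concrete with a short Python sketch. The two score lists are invented purely for illustration; in practice they would come from comparing genuine pairs and impostor pairs with a real matcher. The sketch assumes a similarity scale in which a score at or above the threshold counts as a match, and it approximates the EER by trying each observed score as a threshold rather than interpolating a ROC curve.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) when scores >= threshold are accepted as matches."""
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

def approximate_eer(genuine_scores, impostor_scores):
    """Return (threshold, FAR, FRR) at the candidate threshold where FAR and FRR are closest."""
    candidates = sorted(set(genuine_scores) | set(impostor_scores))
    best = None
    for t in candidates:
        far, frr = far_frr(genuine_scores, impostor_scores, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, far, frr)
    return best[1:]

# Made-up similarity scores for illustration only.
genuine = [0.91, 0.85, 0.78, 0.66, 0.95, 0.72, 0.88, 0.59, 0.81, 0.93]
impostor = [0.12, 0.35, 0.48, 0.61, 0.27, 0.69, 0.40, 0.18, 0.55, 0.31]

print(far_frr(genuine, impostor, 0.5))     # low threshold: some impostors accepted
print(far_frr(genuine, impostor, 0.7))     # higher threshold: some genuine users rejected
print(approximate_eer(genuine, impostor))  # threshold where the two error rates meet
```

With these particular numbers, raising the threshold from 0.5 to 0.7 lowers the FAR from 0.3 to 0.0 while raising the FRR from 0.0 to 0.2, which is the trade-off the ROC curve visualizes. The true accept rate follows directly as TAR = 1 - FRR.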

_____

Why is biometrics important in a global and mobile world?

Although there has always been a need to identify individuals, the requirements of identification have changed in radical ways as populations have expanded and grown increasingly mobile. This is particularly true for the relationships between institutions and individuals, which are crucial to the well-being of societies, and necessarily and increasingly conducted impersonally—that is, without persistent direct and personal interaction. Importantly, these impersonal interactions include relationships between government and citizens for purposes of fair allocation of entitlements, mediated transactions with e-government, and security and law enforcement. Increasingly, these developments also encompass relationships between actors and clients or consumers based on financial transactions, commercial transactions, provision of services, and sales conducted among strangers, often mediated through the telephone, Internet, and the World Wide Web. Biometric technologies have emerged as promising tools to meet these challenges of identification, based not only on the faith that “the body doesn’t lie,” but also on dramatic progress in a range of relevant technologies. These developments, according to some, herald the possibility of automated systems of identification that are accurate, reliable, and efficient.

Many identification systems comprise three elements: attributed identifiers (such as name, Social Security number, bank account number, and drivers’ license number), biographical identifiers (such as address, profession, and education), and biometric identifiers (such as photographs and fingerprint). Traditionally, the management of identity was satisfactorily and principally achieved by connecting attributed identifiers with biographical identifiers that were anchored in existing and ongoing local social relations. As populations have grown, communities have become more transient, and individuals have become more mobile, the governance of people (as populations) required a system of identity management that was considered more robust and flexible. The acceleration of globalization imposes even greater pressure on such systems as individuals move not only among towns and cities but across countries. This progressive dis-embedding from local contexts requires systems and practices of identification that are not based on geographically specific institutions and social networks in order to manage economic and social opportunities as well as risks.

In this context, according to its proponents, the promise of contemporary biometric identification technology is to strengthen the links between attributed and biographical identity and create a stable, accurate, and reliable identity triad. Although it is relatively easy for individuals to falsify attributed and biographical identifiers, biometric identifiers—an individual’s fingerprints, handprints, irises, face—are conceivably more secure because it is assumed that “the body never lies” or differently stated, that it is very difficult or impossible to falsify biometric characteristics. Having subscribed to this principle, many important challenges of a practical nature nonetheless remain: deciding on which bodily features to use, how to convert these features into usable representations, and, beyond these, how to store, retrieve, process, and govern the distribution of these representations.

Prior to recent advances in the information sciences and technologies, the practical challenges of biometric identification had been difficult to meet. For example, passport photographs are amenable to tampering and hence not reliable; fingerprints, though more reliable than photographs, were not amenable, as they are today, to automated processing and efficient dissemination. Security as well as other concerns have turned attention and resources toward the development of automatic biometric systems. An automated biometric system is essentially a pattern recognition system that operates by acquiring biometric data (for example, a face image) from an individual, extracting certain features (defined as mathematical artifacts) from the acquired data, and comparing this feature set against the biometric template (or representation) of features already acquired in a database. Scientific and engineering developments, such as increased processing power, improved input devices, and algorithms for compressing data, have overcome major technical obstacles and facilitated the proliferation of biometric recognition systems for both verification and identification, along with an accompanying optimism over their utility. The variety of biometrics upon which these systems anchor identity has burgeoned, including the familiar fingerprint as well as palm print, hand geometry, iris geometry, voice, gait, and the face.
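
The acquire, extract and compare steps of such a pattern recognition system can be sketched in Python as follows. This is a toy illustration, not how any production system works: the landmark coordinates are invented, the function names and the 0.1 distance limit are assumptions, and the “features” are simply the pairwise distances between four landmarks divided by the distance between the eyes, so that the same face photographed at a different scale or position yields the same feature set.

```python
import math
from itertools import combinations

def extract_features(landmarks):
    """Convert named (x, y) landmarks into scale-normalised pairwise distances."""
    eye_distance = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    names = sorted(landmarks)  # fixed order so feature vectors are comparable
    return [math.dist(landmarks[a], landmarks[b]) / eye_distance
            for a, b in combinations(names, 2)]

def enrol(database, identity, landmarks):
    """Store the extracted features as the template for this identity."""
    database[identity] = extract_features(landmarks)

def matches(database, identity, probe_landmarks, max_distance=0.1):
    """Compare a probe against a stored template; a smaller distance means more similar."""
    probe_features = extract_features(probe_landmarks)
    return math.dist(database[identity], probe_features) <= max_distance

# Hypothetical landmark positions in pixel coordinates.
enrolled_face = {"left_eye": (30, 40), "right_eye": (70, 40),
                 "nose": (50, 60), "mouth": (50, 80)}
# The same geometry, photographed closer (doubled in size) and shifted in the frame.
same_face_closer = {"left_eye": (65, 77), "right_eye": (145, 77),
                    "nose": (105, 117), "mouth": (105, 157)}
# A face with a longer nose-to-mouth geometry.
different_face = {"left_eye": (30, 40), "right_eye": (70, 40),
                  "nose": (50, 70), "mouth": (50, 95)}

database = {}
enrol(database, "subject_1", enrolled_face)
print(matches(database, "subject_1", same_face_closer))
print(matches(database, "subject_1", different_face))
```

Here the comparison uses a distance, so a match means the value falls below a limit; systems that report a similarity score instead apply the threshold in the opposite direction.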

The question of which biometric technology is “best” only makes sense in relation to a rich set of background assumptions. While it may be true that one system is better than another in certain performance criteria such as accuracy or difficulty of circumvention, a decision to choose or use one system over another must take into consideration the constraints, requirements, and purposes of the use-context, which may include not only technical, but also social, moral and political factors. It is unlikely that a single biometric technology will be universally applicable, or ideal, for all application scenarios. Iris scanning, for example, is very accurate but requires expensive equipment and usually the active participation of subjects willing to submit to a degree of discomfort, physical proximity, and intrusiveness—especially when first enrolled—in exchange for later convenience. In contrast, fingerprinting, which also requires the active participation of subjects, might be preferred because it is relatively inexpensive and has a substantial historical legacy.

Facial recognition has begun to move to the forefront because of its purported advantages along numerous key dimensions. Unlike iris scanning which has only been operationally demonstrated for relatively short distances, it holds the promise of identification at a distance of many meters, requiring neither the knowledge nor the cooperation of the subject. These features have made it a favorite for a range of security and law enforcement functions, as the targets of interest in these areas are likely to be highly uncooperative, actively seeking to subvert successful identification, and few—if any—other biometric systems offer similar functionality, with the future potential exception of gait recognition. Because facial recognition promises what we might call “the grand prize” of identification, namely, the reliable capacity to pick out or identify the “face in the crowd,” it holds the potential of spotting a known assassin among a crowd of well-wishers or a known terrorist surveying areas of vulnerability such as airports or public utilities. At the same time, rapid advancements in contributing areas of science and engineering suggest that facial recognition is capable of meeting the needs of identification for these critical social challenges, and being realistically achievable within the relatively near future.

_____

_____

Facial recognition by humans:

Face recognition is performed routinely and effortlessly by humans. Face perception is an individual’s understanding and interpretation of the face, particularly the human face, especially in relation to the associated information processing in the brain. From birth, faces are important in the individual’s social interaction. An infant innately responds to face shapes at birth and can discriminate his or her mother’s face from a stranger’s at the tender age of 45 hours. Recognizing and identifying people is a vital survival skill, as is reading faces for evidence of ill-health or deception. Face perception is very complex, as the recognition of facial expressions involves extensive and diverse areas in the brain. Sometimes, damage to parts of the brain can cause specific impairments in understanding faces, a condition known as prosopagnosia. Technologies that can mimic or improve on human abilities to recognize and read faces have improved significantly in the last several years and are now maturing for use in medical and security applications.

_

The physical world around us is three-dimensional (3D), yet each of our eyes sees the world in 2D. People perceive depth and see the real world in three dimensions thanks to binocular vision, as we have two eyes that are about two and a half inches apart. The separation of our eyes means that each eye sees the world from a slightly different perspective. Our powerful brains take these two slightly different images of the world and do all the necessary calculations to create a sense of depth and allow us to gauge distance.
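
How the brain actually performs this calculation is not described here, but the machine analogue used with two-camera (stereo) rigs is simple enough to sketch in Python. Under the idealised assumption of two identical, parallel pinhole cameras, depth equals focal length times the separation between the cameras, divided by the disparity (how far the same point shifts between the two images). The focal length of 800 pixels and the 0.065 m separation below are assumed values chosen only to make the arithmetic easy to follow.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Distance in metres to a point seen by two parallel cameras (pinhole stereo model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero would mean an infinitely distant point")
    return focal_length_px * baseline_m / disparity_px

# Assumed camera parameters for illustration.
focal_length_px = 800   # focal length expressed in pixels
baseline_m = 0.065      # separation between the two viewpoints, in metres

near = depth_from_disparity(focal_length_px, baseline_m, 26)  # large shift between views
far = depth_from_disparity(focal_length_px, baseline_m, 13)   # small shift between views
print(near, far)  # roughly 2 m and 4 m
```

The larger the shift between the two views, the closer the object, which is why depth perception based on two viewpoints works best at short range.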

_

Neuroanatomy of facial processing:

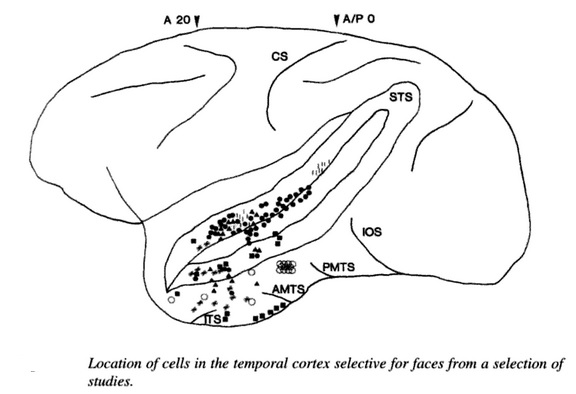

There are several parts of the brain that play a role in face perception. Rossion, Hanseeuw, and Dricot used BOLD fMRI mapping to identify activation in the brain when subjects viewed both cars and faces. The majority of fMRI studies use blood oxygen level dependent (BOLD) contrast to determine which areas of the brain are activated by various cognitive functions. Rossion and colleagues found that the occipital face area, located in the occipital lobe, the fusiform face area, the superior temporal sulcus, the amygdala, and the anterior/inferior cortex of the temporal lobe, all played roles in contrasting the faces from the cars, with the initial face perception beginning in the occipital face areas. This entire region links to form a network that acts to distinguish faces. The processing of faces in the brain is known as a “sum of parts” perception. However, the individual parts of the face must be processed first in order to put all of the pieces together. In early processing, the occipital face area contributes to face perception by recognizing the eyes, nose, and mouth as individual pieces. Furthermore, Arcurio, Gold, and James used BOLD fMRI mapping to determine the patterns of activation in the brain when parts of the face were presented in combination and when they were presented singly. The occipital face area is activated by the visual perception of single features of the face, for example, the nose and mouth, and prefers the combination of two eyes over other combinations. This research supports the view that the occipital face area recognizes the parts of the face at the early stages of recognition. On the other hand, the fusiform face area shows no preference for single features, because the fusiform face area is responsible for “holistic/configural” information, meaning that it puts all of the processed pieces of the face together in later processing. This theory is supported by the work of Gold et al., who found that regardless of the orientation of a face, subjects were impacted by the configuration of the individual facial features. Subjects were also impacted by the coding of the relationships between those features. This shows that processing is done by a summation of the parts in the later stages of recognition.

_

The temporal lobe of the brain is predominantly responsible for our ability to recognize faces. Some neurons in the temporal lobe respond to particular features of faces. Some people who suffer damage to the temporal lobe lose their ability to recognize and identify familiar faces. This disorder is called prosopagnosia. When the appearance of a face is changed, neurons in the temporal lobe generate less activity. Exactly how people recognize faces is not completely understood. For some reason, it is difficult to recognize some faces when they are upside-down.

_

Natural selection in facial recognition:

Do we need to wait for a detailed processing of all the features of a face to decide on its mood, potential threat or friendliness? We don’t, because if we did, we wouldn’t survive as a species. It turns out that cognitive processes are activated by “face-like” objects, which alert the observer to both the emotional state and identity of the subject – even before the conscious mind begins to process—or even receive—the information. Even a “stick figure face,” despite its simplicity, conveys mood information. This robust and subtle capability is hypothesized to be the result of eons of natural selection favoring people most able to quickly identify the mental state of humans they encounter, for example, threatening or hostile people. This allows the individual an opportunity to flee or attack pre-emptively. In other words, processing this information subcortically (and therefore subconsciously)—before it is passed on to the rest of the brain for detailed processing—accelerates judgment and decision making when quickness is paramount. It is no wonder then, that one of the areas the fusiform face area and the occipital visual area connect to is the amygdala, the area that is responsible for emotions of fear and rage, or the “fight or flight” response.

___

How we detect a face: A survey of psychological evidence, a 2003 study:

Scientists strive to build systems that can detect and recognize faces. One such system already exists: the human brain. How this system operates, however, is far from being fully understood. In this article, the authors review the psychological evidence regarding the process of face detection in particular. Evidence is collected from a variety of face‐processing tasks including stimulus detection, face categorization, visual search, first saccade analysis, and face detection itself. Together, the evidence points towards a multistage‐processing model of face detection. These stages involve preattentive processing, template fitting, and template evaluation, similar to automatic face‐detection systems.

___

How many faces do people remember and recognise? A 2018 study:

Researchers from the University of York tested how many individual faces people could recall from among those they knew personally as well as from popular media. And while 5,000 faces is the average number that people seem to know, Dr. Rob Jenkins, one of the researchers, quickly points out, “Our study focused on the number of faces people actually know—we haven’t yet found a limit on how many faces the brain can handle.” For the study, participants spent an hour listing as many faces from their personal lives as possible, including people they went to school with, colleagues, and family. They then did the same for famous faces, such as actors, politicians, and other public figures. The participants apparently found it easy to come up with lots of faces at first, but it became harder to think of new ones by the end of the hour. That change of pace allowed the researchers to estimate when they would run out of faces completely. The participants were also shown thousands of photographs of famous people and asked which ones they recognized. The researchers asked participants to recognize two different photos of each person to minimize error. The results showed that the participants knew between 1,000 and 10,000 faces, with an average of about 5,000. The mean age of the study’s participants was 24 and, according to the researchers, age provides an intriguing avenue for further research. “It would be interesting to see whether there is a peak age for the number of faces we know,” says Jenkins. “Perhaps we accumulate faces throughout our lifetimes, or perhaps we start to forget some after we reach a certain age.” Clearly, there is more to learn about how we learn each other’s faces. This study is published in the Proceedings of the Royal Society B.

___

Super recogniser:

“Super recognisers” is a term coined in 2009 by Harvard and University College London researchers for people with significantly better-than-average face recognition ability. It is predominantly used among the British intelligence community. It is the extreme opposite of prosopagnosia. It is estimated that 1–2% of the population are super recognisers, who can remember 80% of the faces they have seen. Most people can only remember about 20% of faces. Super recognisers can match faces better than computer recognition systems in some circumstances. The science behind this is poorly understood but may be related to the fusiform face area of the brain. In May 2015, the London Metropolitan Police officially formed a team made up of people with a “superpower” for recognising people and put them to work identifying individuals whose faces are captured on CCTV. Scotland Yard has a squad of over 200 super recognisers. In August 2018, it was reported that the Metropolitan Police had used two super recognisers to identify the suspects of the attack on Sergei and Yulia Skripal, after trawling through up to 5,000 hours of CCTV footage from Salisbury and numerous airports across the country.

______

______

Introduction to facial recognition (technology):

Anyone who has seen the TV show “Las Vegas” has seen facial recognition software in action. But what looks so easy on TV doesn’t always translate as well in the real world. In 2001, the Tampa Police Department installed cameras equipped with facial recognition technology in their Ybor City nightlife district in an attempt to cut down on crime in the area. The system failed to do the job, and it was scrapped in 2003 due to ineffectiveness. People in the area were seen wearing masks and making obscene gestures, prohibiting the cameras from getting a clear enough shot to identify anyone. Boston’s Logan Airport also ran two separate tests of facial recognition systems at its security checkpoints using volunteers. Over a three month period, the results were disappointing. According to the Electronic Privacy Information Center, the system only had a 61.4 percent accuracy rate, leading airport officials to pursue other security options. Humans have always had the innate ability to recognize and distinguish between faces, yet computers only recently have shown the same ability. In the mid-1960s, scientists began work on using the computer to recognize human faces. Since then, facial recognition software has come a long way.

_

The information age is quickly revolutionizing the way transactions are completed. Everyday actions are increasingly being handled electronically, instead of with pencil and paper or face to face. This growth in electronic transactions has resulted in a greater demand for fast and accurate user identification and authentication. Access codes for buildings, bank accounts and computer systems often use PINs for identification and security clearances. Using the proper PIN gains access, but the user of the PIN is not verified. When credit and ATM cards are lost or stolen, an unauthorized user can often come up with the correct personal codes. Despite warnings, many people continue to choose easily guessed PINs and passwords: birthdays, phone numbers and social security numbers. Recent cases of identity theft have heightened the need for methods to prove that someone is truly who he/she claims to be. Face recognition technology may solve this problem since a face is undeniably connected to its owner, except in the case of identical twins. It is non-transferable. The system can then compare scans to records stored in a central or local database or even on a smart card.

_

Humans are great at identifying people they know well. However, our ability to remember and distinguish individuals diminishes when the number of people grows to more than a few dozen. Since it is not always efficient to rely on people for this task, we have come up with alternative forms of authentication. In particular, there are three forms of authentication that rely on: (i) something that the user has, (ii) something that the user knows, and (iii) who the user is.

Examples of physical tokens in everyday life include home and car keys, credit cards, passports, and driver’s licenses. Equivalent examples within an event would include printed tickets, badges, wristbands, and mobile phones. When it comes to examples of privileged information, everyone is familiar with usernames, passwords, and security questions. In the context of an event, there are a lot of check-in applications that rely on name/email searches and scanning QR codes. Physical tokens and privileged information have become an integral part of an event’s lifetime. They are used to check in people, restrict access, personalize the experience, measure attendance, extract analytics, and perform lead retrieval.

Facial recognition belongs to the third form of authentication, along with other biometric approaches. It is software that can identify a person from a database of faces without requiring a physical token or the user to provide any privileged information. Technological advancements have increased accuracy and reduced cost drastically. Therefore, we see increased adoption in other industries (e.g., airports, social media, and cell phones).

_

Automatic face recognition has been traditionally associated with the fields of computer vision and pattern recognition. Face recognition is considered a natural, non-intimidating, and widely accepted biometric identification method. As such, it has the potential of becoming the leading biometric technology. Unfortunately, it is also one of the most difficult pattern recognition problems. So far, all existing solutions provide only partial, and usually unsatisfactory, answers to the market needs. In the context of face recognition, it is common to distinguish between the problem of authentication and that of recognition.

In the first case, the enrolled individual (probe) claims the identity of a person whose template is stored in the database (gallery). We refer to the data used for a specific recognition task as a template. The face recognition algorithm needs to compare a given face with a given template and verify their equivalence. Such a setup (one-to-one matching) can occur when biometric technology is used to secure financial transactions, for example, in an automatic teller machine (ATM). In this case, the user is usually assumed to be collaborative.

The second case is more difficult. Recognition implies that the probe subject should be compared with all the templates stored in the gallery database. The face recognition algorithm should then match a given face with one of the individuals in the database. Finding a terrorist in a crowd (one-to-many matching) is one such application. Needless to say, no collaboration can be assumed in this case. At the current technological level, one-to-many face recognition with non-collaborative users is practically unsolvable. That is, someone who intentionally wishes not to be recognized can always deceive any face recognition technology.
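The difference between the two cases can be sketched in a few lines of Python. This is only an illustration under stated assumptions: templates are taken to be plain numeric vectors, cosine similarity is used as the match score, and the function names, the toy gallery and the 0.9 threshold are all hypothetical rather than taken from any real product.

```python
import numpy as np

def cosine_similarity(a, b):
    """Similarity in [-1, 1]; higher means the two templates are more alike."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify(probe, claimed_template, threshold=0.9):
    """One-to-one matching: does the probe match the single claimed template?"""
    return cosine_similarity(probe, claimed_template) >= threshold

def identify(probe, gallery, threshold=0.9):
    """One-to-many matching: best gallery identity, or None if nothing is close enough."""
    scores = {name: cosine_similarity(probe, tpl) for name, tpl in gallery.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None

# Toy gallery of made-up three-number templates (real templates are far longer).
gallery = {
    "alice": [1.0, 0.0, 0.0],
    "bob": [0.0, 1.0, 0.0],
}
probe = [0.95, 0.05, 0.0]

print(verify(probe, gallery["alice"]))  # probe claims to be "alice"
print(identify(probe, gallery))         # probe makes no claim; search everyone
```

Verification makes a single comparison, while identification makes one comparison per enrolled person, which is part of why it becomes harder as the gallery grows.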

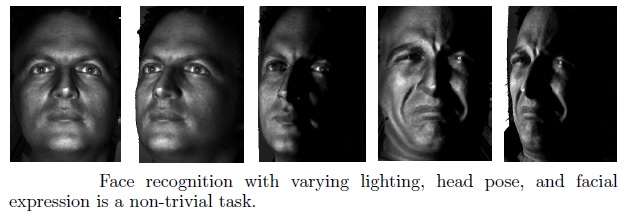

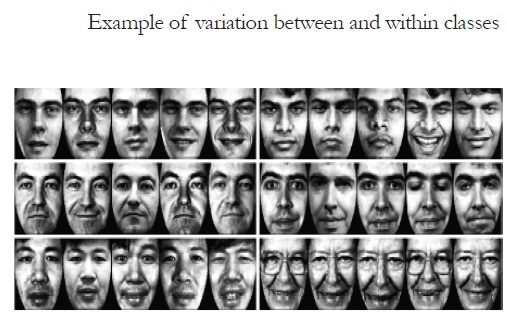

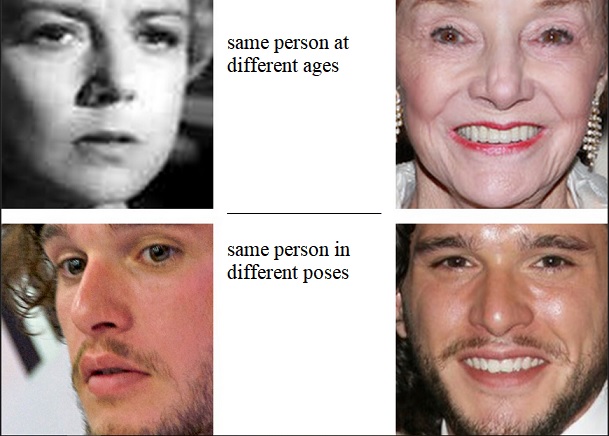

Even collaborative users in a natural environment present high variability of their faces due to natural factors beyond our control. The greatest difficulty of face recognition, compared to other biometrics, stems from the immense variability of the human face. The facial appearance depends heavily on environmental factors, for example, the lighting conditions, background scene and head pose. It also depends on facial hair, the use of cosmetics, jewellery and piercing. Last but not least, plastic surgery or long-term processes like aging and weight gain can have a significant influence on facial appearance. Yet, much of the facial appearance variability is inherent to the face itself. Even if we hypothetically assume that external factors do not exist, for example, that the facial image is always acquired under the same illumination, pose, and with the same haircut and make up, still, the variability in a facial image due to facial expressions may be even greater than a change in the person’s identity as seen in the figure below.

_

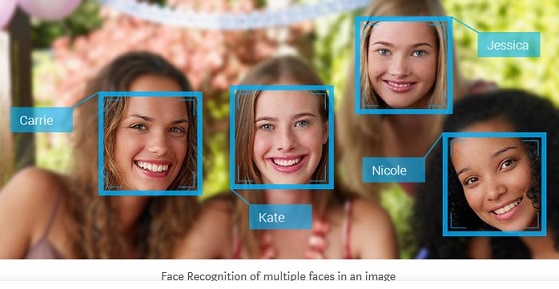

Face recognition and face search have been gaining prominence over the years because of the growing need to search for faces for various purposes. Facial recognition search software not only recognises the face in a solo photo, but also recognises people in group pictures, matches two different faces, finds faces similar to a particular face, and provides other face attributes based on the eyes, nose, and other parts. It therefore plays a crucial role in guessing, identifying and recognising faces.

Face recognition search is used by thousands of software and hardware companies, and by individuals, to filter people of a specific kind and sometimes even to find one’s own images. With the image recognition search engines that are available, you can use different ways to find a face or similar faces.

_

Techopedia defines facial recognition as “a biometric software application capable of uniquely identifying or verifying a person by comparing and analyzing patterns based on the person’s facial contours.” Facial recognition applications continue to expand into different aspects of our lives. For example, facial recognition technology can now be used instead of a password to unlock a user’s iPhone. Biometrics, including facial recognition, can be used to validate a user when making online purchases. This method is much more secure and convenient for the user than remembering user IDs and passwords. Facebook has developed facial recognition to identify and tag people in photos posted on the website. Facebook will even reach out to the person and ask “is this you?” If the person responds in the positive, the website has validated that instance of facial recognition for that person. Some facial recognition programs work without obtaining consent from the person. The software, using artificial intelligence, captures the person’s face from a distance and matches it against a database.

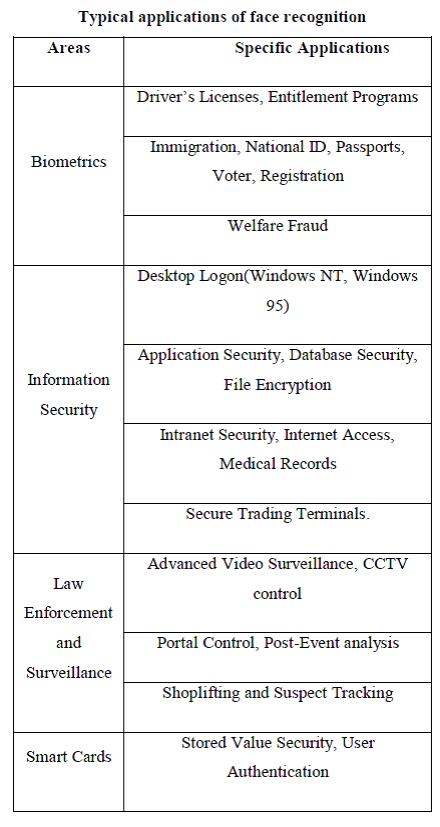

__

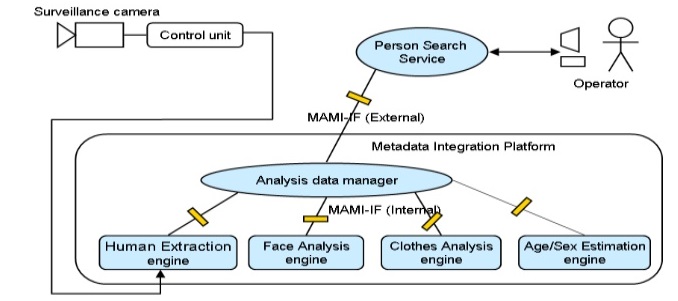

Many basic uses of facial recognition technology are relatively benign and receive little criticism. For example, the technology can be used like a high-tech key, allowing access to virtual or actual spaces. Instead of presenting a password, magnetic card or other such identifier, the face of the person seeking access is screened to ensure it matches an authorized identity. This eliminates the problem of stolen passwords or access cards. In heightened security situations, facial recognition could be used in conjunction with other forms of identification. The next step in facial recognition is to connect the systems to digital surveillance cameras, which can then be used to monitor spaces for the presence of individuals whose digital images are stored in databases. Images of those present in the spaces under watch can also be recorded and subsequently paired with identities. Surveillance power grows as various systems, public and private, are networked together to share information. Facial recognition may create economic savings. Policing efficiency could be improved if tracking of suspected terrorists and criminals were automated, for example, and welfare fraud would be curtailed if individuals were prevented from assuming false identities. The potential benefits of facial recognition systems also extend well beyond the realm of crime, terrorism and finances. The software could, for example, help ensure that known child molesters are denied access to schoolyards.

Facial recognition also has the ability to reach quickly into the past for information, dramatically extending the effective temporal scope of surveillance data analysis. Once an image is included in the database, stored surveillance data can be searched for occurrences of that image with a few keystrokes. Searching videotape for evidence, by contrast, is extremely time-consuming. The process of determining whether a suspected terrorist visited Berlin in 2002, for example, could require watching thousands of hours of videotape from potentially hundreds of cameras. If those cameras operated digital facial recognition systems, and the suspect’s face were available in a linked database, the same search could conceivably be executed in a fraction of the time.

Lately, Mastercard’s mobile app has started using fingerprint or facial recognition to verify and authenticate online payments. Mobile devices with high-quality cameras have made facial recognition a viable option for verifying identity and authenticating people. Most phones now being launched come with built-in face recognition technology which lets users unlock their phones just by scanning their face. Along with its popularity across the digital world, enterprises are also taking this development seriously as a way to confirm an order or to make a payment.

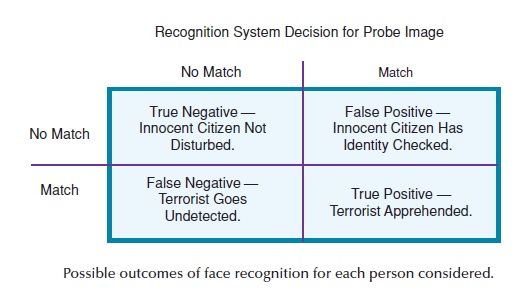

Facial recognition technology requires further development, however, before reaching maximal surveillance utility. The American Civil Liberties Union explains: “Facial recognition software is easily tripped up by changes in hairstyle or facial hair, by aging, weight gain or loss, and by simple disguises.” It adds that the U.S. Department of Defense “found very high error rates even under ideal conditions, where the subject is staring directly into the camera under bright lights.” The Department of Defense study demonstrated significant rates of false positive test responses, in which observed faces were incorrectly matched with faces in the database. Many false negatives were also revealed, meaning the system failed to recognize faces in the database. The A.C.L.U. argues that the implementation of facial recognition systems is undesirable, because “these systems would miss a high proportion of suspects included in the photo database, and flag huge numbers of innocent people – thereby lessening vigilance, wasting precious manpower resources, and creating a false sense of security.”

__

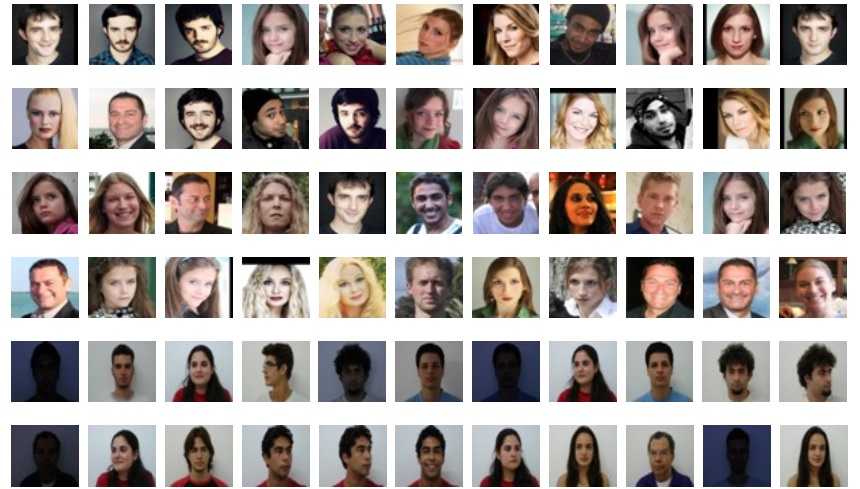

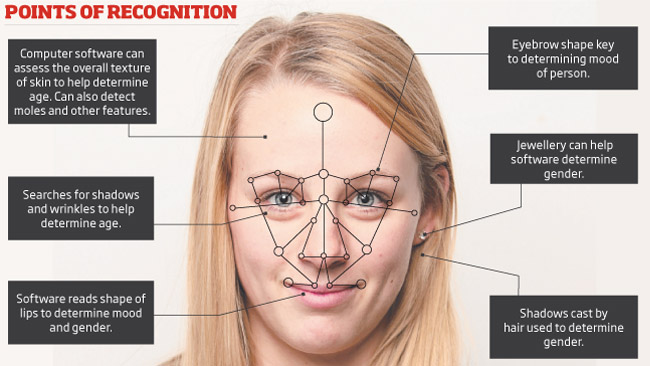

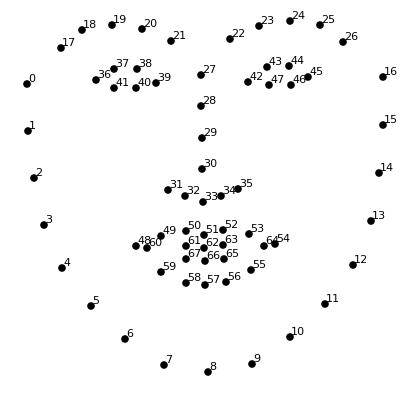

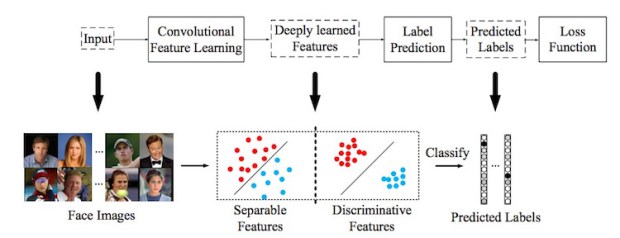

Facial recognition is an emerging technology that has the potential to augment the way people live or appreciably shape the digital world in the next five years. It is a biometric technology that scans people’s faces or photographs and recognizes an individual. It is a form of computer vision that uses the spatial geometry of the distinguishing features of a face to identify or authenticate a person, examining an individual’s physical facial features to tell him or her apart from others. In order to verify someone’s identity, the process can be broken down into three distinct steps: detection, unique faceprint creation, and finally, verification. In the first step, the technology captures an image and analyzes it to locate the individual’s face. In the second step, the software maps the individual’s facial features mathematically and stores the data as a faceprint. It discerns facial features like the space between the eyes, the depth of the eye sockets, the width of the nose, the cheekbones, and the jawline. The software reads approximately 80 nodal points, encodes them as a numerical code called a faceprint, and records it in its database. In the third step, once the faceprint is recorded, the software compares a person’s face against the stored data. Facial recognition software maps details and ratios of facial geometry using algorithms, the most popular of which results in a computation of what are called “eigenfaces,” derived from the “eigenvalues” and eigenvectors of a set of face images. The software uses deep learning algorithms to compare a live capture or digital image to the stored faceprint in order to verify an individual’s identity. The faceprints of more than half of the American population are recorded in a facial recognition database.

_

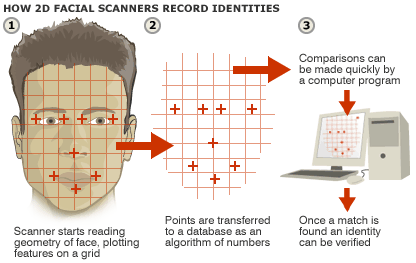

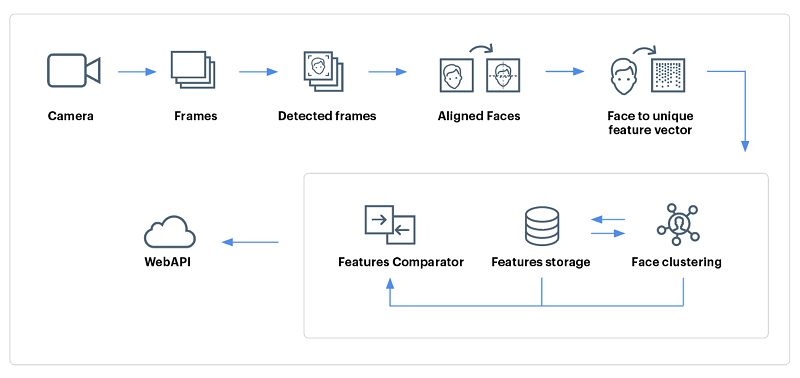

Computerized facial recognition is based on capturing an image of a face, extracting features, comparing it to images in a database, and identifying matches. As the computer cannot see the same way as a human eye can, it needs to convert images into numbers representing the various features of a face. The set of numbers representing one face is compared with the numbers representing another face. The quality of the computer recognition system is dependent on the quality of the image and the mathematical algorithms used to convert a picture into numbers. Important factors for the image quality are light, background, and position of the head. Pictures can be taken of still or moving subjects. Still subjects are photographed, for example, by the police (mug shots) or by specially placed security cameras (access control). However, the most challenging application is the ability to use images captured by surveillance cameras (shopping malls, train stations, ATMs), or closed-circuit television (CCTV). In many cases the subject in those images is moving fast, and the light and the position of the head are not optimal.

_

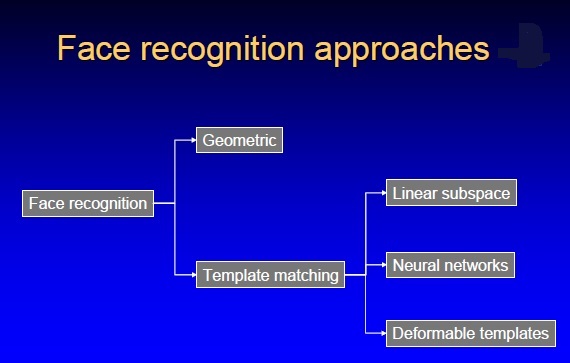

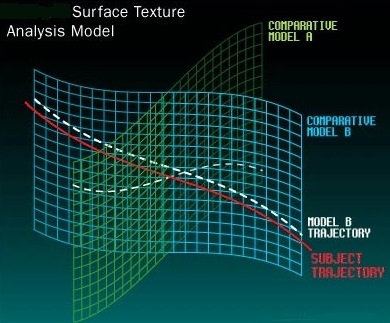

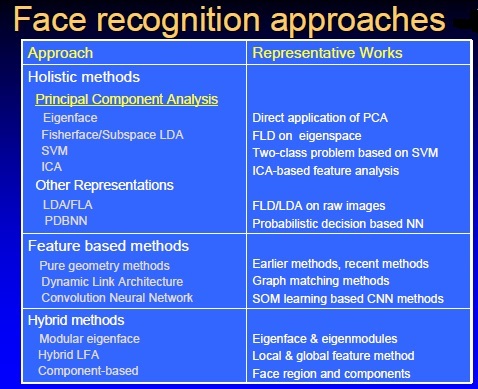

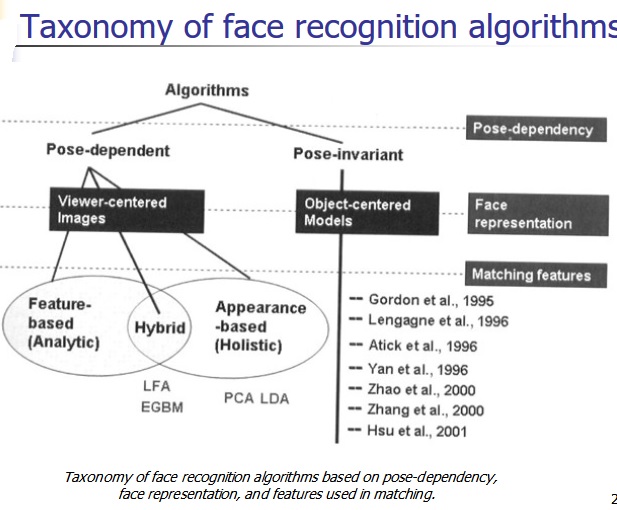

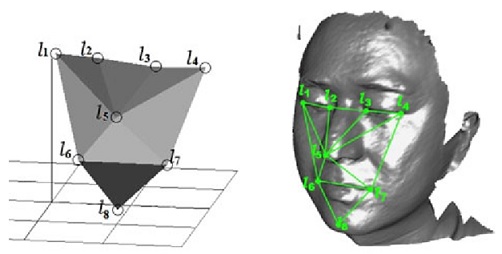

The techniques used for facial recognition can be feature-based (geometrical) or image-based (photometric). The geometric method relies on the shape and position of the facial features. It analyzes each of the facial features, also known as nodal points, independently; it then generates a full picture of a face. The most commonly used nodal points are: distance between the eyes, width of the nose, cheekbones, jaw line, chin, and depth of the eye sockets. Although there are about 80 nodal points on the face, most software measures only around a quarter of them. The points picked by the software to measure have to be able to uniquely differentiate between people. In contrast, the image-based or photometric methods create a template of the features and use that template to identify faces.
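As a rough illustration of the geometric approach, the sketch below turns a handful of landmark coordinates into a vector of distances. It assumes the landmarks have already been located by some detector, and the four landmark names, the coordinates and the choice to divide by the inter-eye distance are illustrative assumptions, not the method of any particular vendor.

```python
import numpy as np
from itertools import combinations

def geometric_features(landmarks):
    """Turn named 2D landmark points into a scale-invariant vector of distances."""
    names = sorted(landmarks)
    pts = {n: np.asarray(landmarks[n], dtype=float) for n in names}
    # Normalise by the inter-eye distance so the image size does not matter.
    eye_dist = np.linalg.norm(pts["left_eye"] - pts["right_eye"])
    dists = [np.linalg.norm(pts[a] - pts[b]) for a, b in combinations(names, 2)]
    return np.array(dists) / eye_dist

# Hypothetical pixel coordinates for four nodal points on one face.
face = {"left_eye": (30, 40), "right_eye": (70, 40), "nose_tip": (50, 65), "chin": (50, 110)}
# The same face photographed at twice the resolution.
same_face_larger = {k: (2 * x, 2 * y) for k, (x, y) in face.items()}

features = geometric_features(face)
print(features)
print(np.allclose(features, geometric_features(same_face_larger)))
```

Dividing by the distance between the eyes makes the vector independent of image size, so the same face photographed at two resolutions yields the same numbers.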

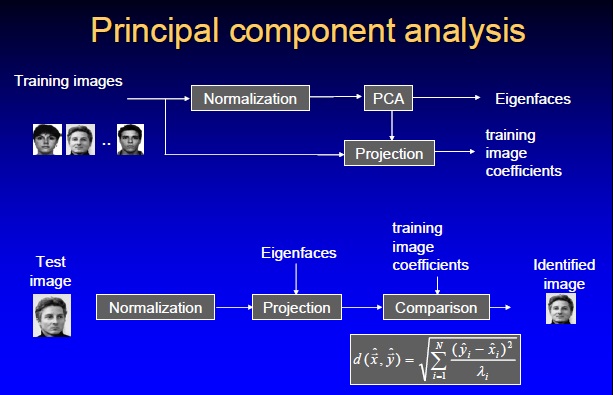

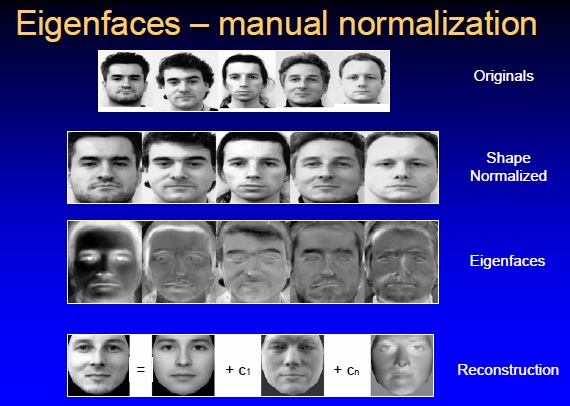

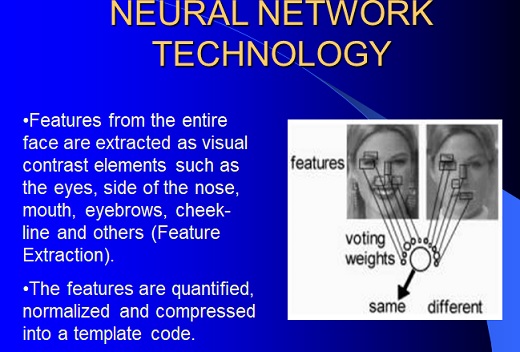

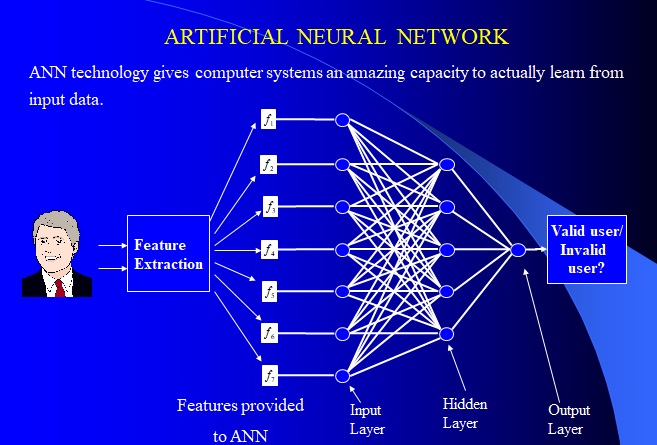

Algorithms used by the software tools are proprietary and secret. The most common method is eigenfaces, which is based on principal component analysis (PCA) to extract face features. The analysis can be very accurate, as many features can be extracted and all of the image data is analyzed together; no information is discarded. Another common method of creating templates is using neural networks. Despite continuous improvements, none of the current algorithms is 100% correct. The best verification rates are about 90% correct. At the same time, the majority of systems claim 1% false accept rates. The most common reasons for failure are the sensitivity of some methods to lighting, facial expressions, hairstyles, hair color, and facial hair.
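The eigenface idea can be sketched with NumPy alone. The example below is a minimal illustration, not a production method: the “faces” are random arrays standing in for flattened grayscale images, and the function names and the choice of five components are assumptions made for the example.

```python
import numpy as np

def fit_eigenfaces(images, n_components):
    """images: array of shape (n_faces, n_pixels), one flattened face per row."""
    X = np.asarray(images, dtype=float)
    mean_face = X.mean(axis=0)
    # Rows of Vt are the principal directions ("eigenfaces") of the centred data.
    _, _, Vt = np.linalg.svd(X - mean_face, full_matrices=False)
    return mean_face, Vt[:n_components]

def project(image, mean_face, eigenfaces):
    """Describe one face by its weights on each eigenface."""
    return eigenfaces @ (np.asarray(image, dtype=float) - mean_face)

rng = np.random.default_rng(0)
faces = rng.random((10, 64))  # stand-in for ten 8x8 grayscale faces
mean_face, eigenfaces = fit_eigenfaces(faces, n_components=5)
weights = project(faces[0], mean_face, eigenfaces)

print(eigenfaces.shape)  # one row per eigenface
print(weights.shape)     # one weight per eigenface
```

Two faces can then be compared through their short weight vectors rather than pixel by pixel.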

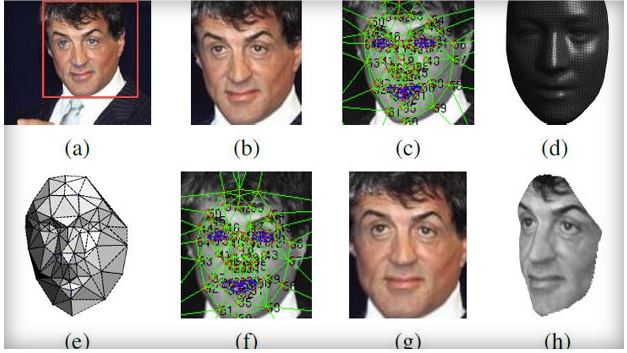

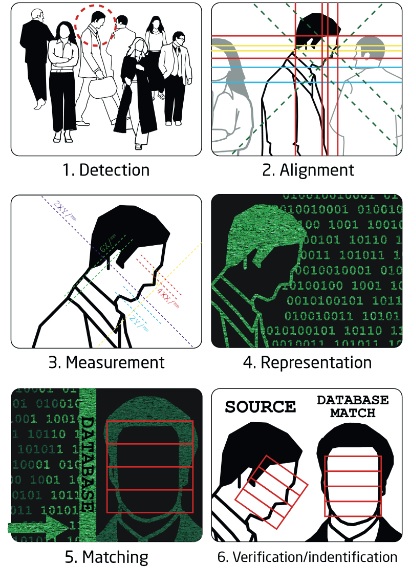

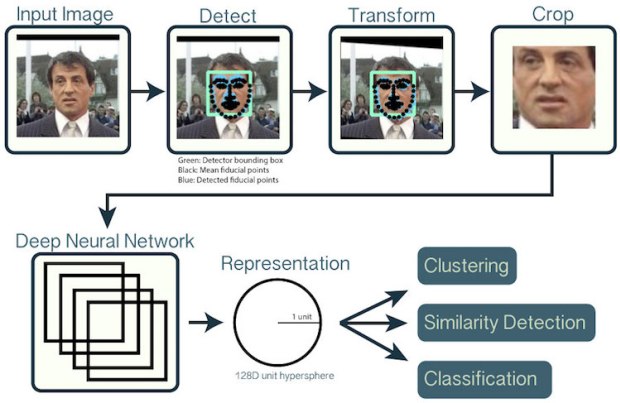

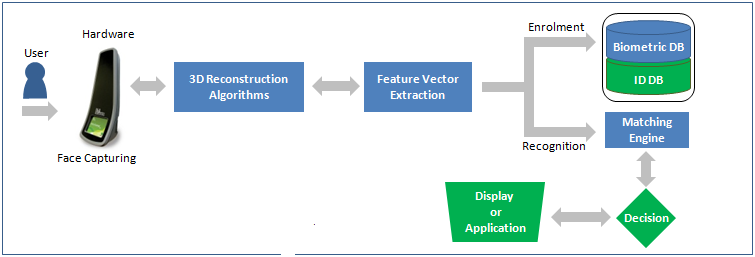

Despite the differences in mathematical methods used, the face recognition analysis follows the same set of steps. The first step is image acquisition; once the image is captured, a head is identified. In some cases, before the feature extraction, it might be necessary to normalize the image. This is accomplished by scaling and rotating the image so that the size of the face and its positioning is optimal for the next step. After the image is presented to the computer, it begins feature extraction using one of the algorithms. Feature extraction includes localization of the face, detection of the facial features, and actual extraction. Eyes, nose, and mouth are the first features identified by most of the techniques. Other features are identified later. The extracted features are then used to generate a numerical map of each face analyzed. The generated templates are then compared to images stored in the database. The database used may consist of mug shots, composites of suspects, or video surveillance images. This process creates a list of hits with scores, which is very similar to search results on the Internet. It is often up to the user to determine if the similarity produced is adequate to warrant declaration of a match. Even if the user does not have to make a decision, he or she is most likely determining the settings used later by the computer to declare a match.
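The normalization step described above, scaling and rotating the image before feature extraction, can be illustrated with a little geometry. The sketch assumes the two eye centres have already been found, and the target eye spacing of 60 pixels and the function names are arbitrary choices for the example.

```python
import numpy as np

def alignment_transform(left_eye, right_eye, target_eye_dist=60.0):
    """Return (angle_deg, scale) that levels the eyes and fixes their spacing."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    tilt_deg = np.degrees(np.arctan2(dy, dx))  # current tilt of the eye line
    scale = target_eye_dist / np.hypot(dx, dy)
    return -tilt_deg, scale                    # rotate back by the tilt

def apply_transform(point, center, angle_deg, scale):
    """Rotate a point about center by angle_deg, then scale about center."""
    t = np.radians(angle_deg)
    R = np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])
    c = np.asarray(center, dtype=float)
    return c + scale * (R @ (np.asarray(point, dtype=float) - c))

# Hypothetical eye positions in a tilted face image.
left_eye, right_eye = (30.0, 50.0), (70.0, 80.0)
angle_deg, scale = alignment_transform(left_eye, right_eye)
new_right_eye = apply_transform(right_eye, left_eye, angle_deg, scale)
print(angle_deg, scale, new_right_eye)
```

After the transform the right eye lies level with the left eye and exactly the target distance away, so every face reaches the feature extractor in the same size and orientation.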

Depending on the software used, it is possible to compare one-to-one or one-to-many. In the first instance, it would be a confirmation of someone’s identity. In the second, it would be identification of a person. Another application of facial recognition is taking advantage of live, video-based surveillance. This can be used to identify people in retrospect, after their images were captured on the recording. It can also be used to identify a particular person during surveillance, while they are moving around. It can be useful for catching criminals in the act, cheaters in casinos, or in identifying terrorists.

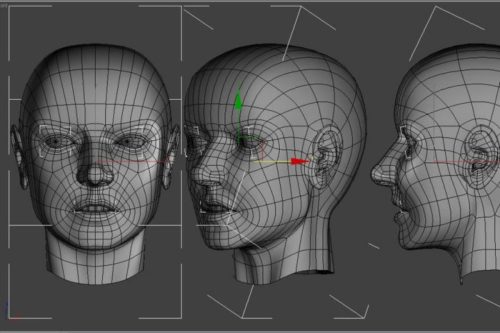

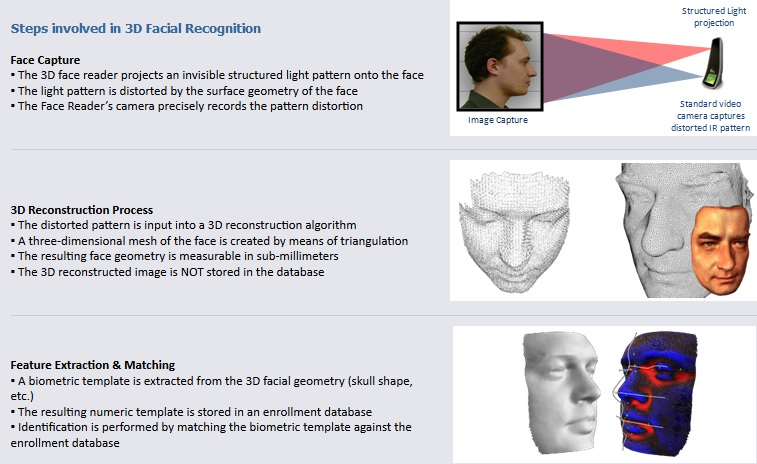

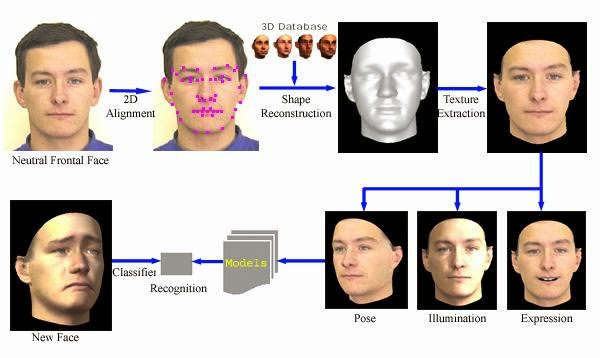

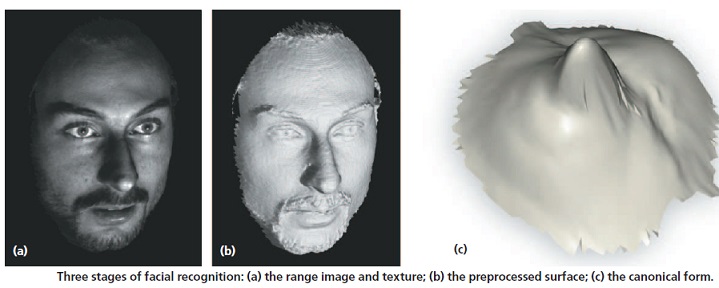

Most of the earliest and current methods of face recognition are 2-dimensional (2-D). They use a flat image of a face. However, 3-D methods are also being developed and some are already available commercially. The main difference in 3-D analysis is the use of the shape of the face, thus adding information to a final template. The first step in a 3-D analysis is generation of a virtual mesh reflecting a person’s facial shape. It can be done by using a near-infrared light to scan a person’s face and repeating the process a couple of times. The nodal points are located on the mesh, generating thousands of reference points rather than the 20–30 used by 2-D methods. This makes the 3-D methods more accurate, but also more invasive and more expensive.

A face recognition system may use 2D or 3D images as a template to store in the database. 2D images are common; 3D is less in use. Each model has its own advantages and disadvantages. 2D recognition works best under moderate illumination and is sensitive to pose changes. Facial expressions and changes in the face due to aging may affect the recognition rates in a face recognition system. On the other hand, a biometric face recognition system using a 3D model database is getting cheaper and faster than before. But 3D model databases are very few. A biometric face recognition system using 2D model databases takes into consideration only two dimensions of the face. But the face is a 3D object! This raises expectations that a 3D model database will perform better. However, no experiment so far has been able to prove this popular belief. 3D data capturing is not completely independent of light variations. Different sources of light may create different models for the same face. Besides, 3D systems are still more expensive than 2D face recognition systems.

2D represents a face by intensity variation and 3D represents a face by shape variation. A 3D face recognition system discriminates between faces on the basis of the shape of the features of a given face. As 3D images use a more reliable base for recognition, they are considered to be more accurate. However, a lot of improvement still needs to be made in the field of 3D biometric face recognition systems.

If the image is 3D and the database contains 3D images, then matching will take place without any changes being made to the image. However, there is a challenge currently facing databases that are still in 2D images. 3D provides a live, moving variable subject being compared to a flat, stable image. New technology is addressing this challenge. When a 3D image is taken, different points (usually three) are identified. For example, the outside of the eye, the inside of the eye and the tip of the nose will be pulled out and measured. Once those measurements are in place, an algorithm (a step-by-step procedure) will be applied to the image to convert it to a 2D image. After conversion, the software will then compare the image with the 2D images in the database to find a potential match.

An extension of facial recognition and 3-D methods is using computer graphics to reconstruct faces from skulls. This allows identification of people from skulls if all other methods of identification fail. In the past facial reconstruction was done manually by a forensic artist. Clay was applied to the skull following the contours of the skull until a face was generated. Currently the reconstruction can be computerized by taking advantage of head template creation by using landmarks on the skull and the ability to overlay it with computer-generated muscles. Once the face is generated, it is photographed and can be compared to various databases for identification in the same way as a live person’s image.

An important difference with other biometric solutions is that faces can be captured from some distance away, with for example surveillance cameras. Therefore face recognition can be applied without the subject knowing that he is being observed. This makes face recognition suitable for finding missing children or tracking down fugitive criminals using surveillance cameras.

_

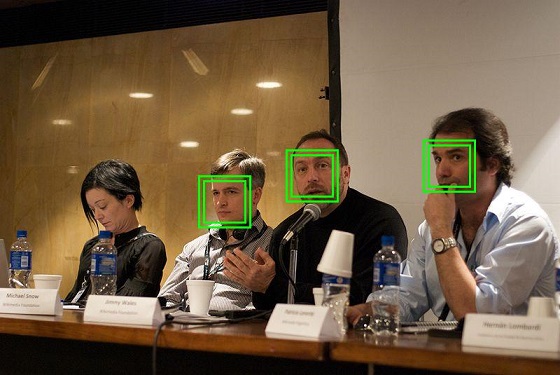

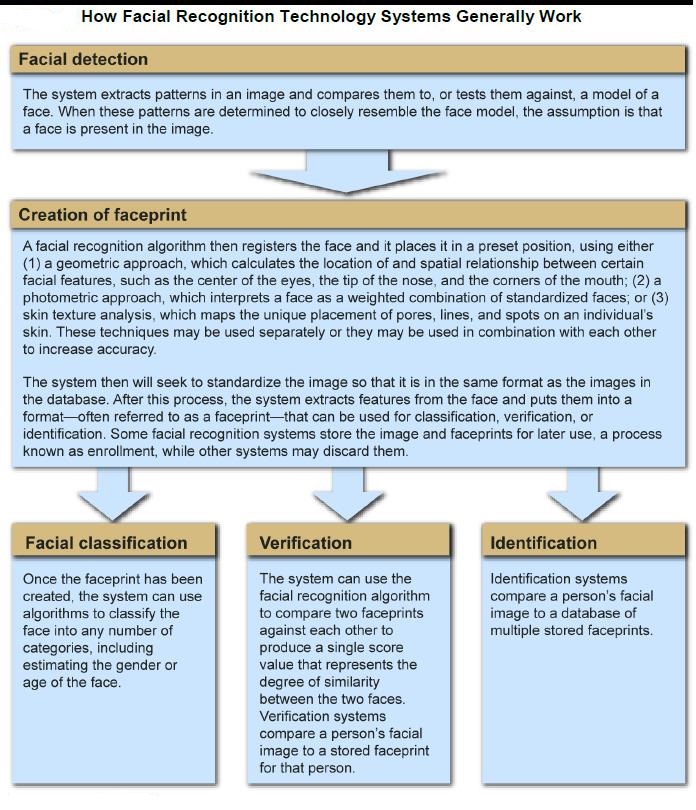

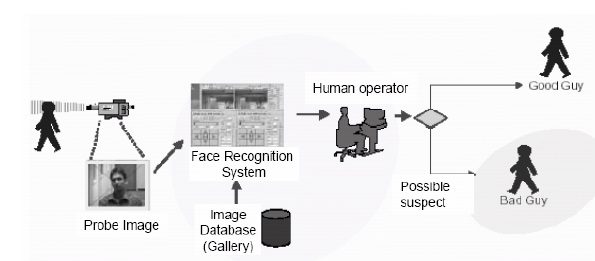

Independent of the solution vendor, face recognition is accomplished as follows:

- A digital camera acquires an image of the face.

- Software locates the face in the image; this is also called face detection. Face detection is one of the more difficult steps in face recognition, especially when using surveillance cameras for scanning an entire crowd of people.

- When a face has been selected in the image, the software analyzes the spatial geometry. The techniques used to extract identifying features of a face are vendor dependent. In general, the software generates a template: a reduced set of data which uniquely identifies an individual based on the features of his or her face.

- The generated template is then compared with a set of known templates in a database (identification) or with one specific template (authentication).

- The software generates a score which indicates how well two templates match. How high a score must be for two templates to be considered as matching depends on the software. For example, an authentication application requires a low false accept rate (FAR), and thus the score must be high enough before templates can be declared as matching. In a surveillance application, however, you would not want to miss any fugitive criminals, which requires a low false reject rate (FRR), so you would set a lower matching score and let security agents sort out the false positives.
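The trade-off in the last step, between wrongly accepting an impostor and wrongly rejecting a genuine match, can be made concrete with a small sketch. The scores below are invented for illustration, and the two thresholds are arbitrary stand-ins for an authentication-style setting and a surveillance-style setting.

```python
import numpy as np

def far_frr(genuine_scores, impostor_scores, threshold):
    """False accept rate and false reject rate at a given match threshold."""
    genuine = np.asarray(genuine_scores, dtype=float)
    impostor = np.asarray(impostor_scores, dtype=float)
    far = float(np.mean(impostor >= threshold))  # impostors wrongly accepted
    frr = float(np.mean(genuine < threshold))    # genuine users wrongly rejected
    return far, frr

# Made-up match scores: same-person comparisons and different-person comparisons.
genuine = [0.91, 0.85, 0.78, 0.95, 0.66]
impostor = [0.30, 0.55, 0.72, 0.41, 0.20]

strict = far_frr(genuine, impostor, threshold=0.80)   # authentication-style setting
lenient = far_frr(genuine, impostor, threshold=0.50)  # surveillance-style setting
print("strict  (FAR, FRR):", strict)
print("lenient (FAR, FRR):", lenient)
```

Raising the threshold drives the false accept rate down at the cost of more false rejects, and lowering it does the opposite, which is why the right setting depends on the application.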

_

In order to develop a useful and applicable face recognition system, several factors need to be taken into account.

- The overall speed of the system from detection to recognition should be acceptable.

- The accuracy should be high.

- The system should be easy to update and enlarge; that is, it should be easy to increase the number of subjects that can be recognized.

_

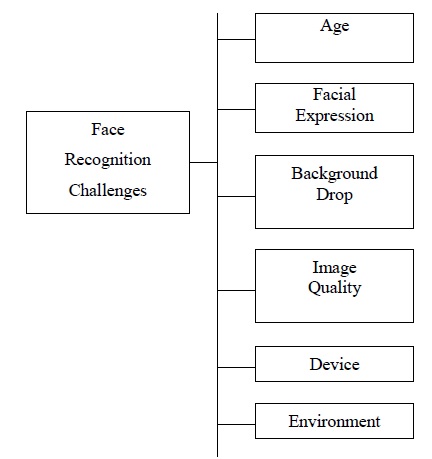

Difficulties that often arise with face recognition are:

- Variable image lighting and background make it more difficult for software to locate the face in the image.

- Parts of the face are covered; long hair, for example, makes it more difficult for the software to locate the face in the image and to recognize it.

- The subject does not look directly into the camera; when the face is not held at the same angle, the software might not recognize it.

- Using different types of cameras (with different lighting, resolution, etc.) makes it more difficult for the software to recognize the face.

- The face of a subject changes with ageing.

- It is difficult to make face recognition secure enough for authentication purposes.

As you can see, there are some important constraints on the use of face recognition. Different vendors are working on resolving these issues. 3D face recognition addresses some of them: with 3D images the actual three-dimensional form of the face is evaluated, which is largely unaffected by lighting and changes less with ageing than surface appearance does. Different viewing angles can also be compared more reliably when using 3D images. Of course, the hardware for 3D face recognition is more expensive.

_

Face recognition or detection is a widely used technology which is undergoing constant development to improve its results. It is used in different environments such as forensic science, medicine and surveillance or security systems. It is also widely available in mobile applications. There are many different kinds of face detection devices and many different algorithms operating these devices. Many researchers have tried to implement an ideal face detection algorithm, and many algorithms have been proposed to achieve this goal, but not all constraints have been taken into consideration in developing them. Some of the known algorithms are: Principal Component Analysis using Eigenfaces, Linear Discriminant Analysis using the Fisherface algorithm, Elastic Bunch Graph Matching, Content Based Image Retrieval (Jyoti Jain), Hidden Markov Models and Dynamic Link Matching. The constraints considered while developing the software to yield accurate results are: position of the face, low lighting, sufficient data in the database and facial expressions. To produce the ideal algorithm that yields 90% accurate results, 3 out of 4 constraints should be overcome.
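To give a feel for the first algorithm in that list, here is a minimal sketch of Principal Component Analysis with Eigenfaces using NumPy. It assumes faces arrive as flattened grayscale arrays of equal size; the synthetic 8x8 "faces", the function names and the nearest-neighbour matching step are illustrative assumptions, not a production pipeline.

```python
import numpy as np

def fit_eigenfaces(faces, n_components):
    """faces: (n_samples, n_pixels) array of flattened grayscale images."""
    mean_face = faces.mean(axis=0)
    centered = faces - mean_face
    # Rows of vt are the principal directions ("eigenfaces"), ordered by variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:n_components]

def project(face, mean_face, eigenfaces):
    """Reduce a face to a short weight vector, which serves as its template."""
    return eigenfaces @ (face - mean_face)

def nearest_identity(face, mean_face, eigenfaces, gallery_weights, labels):
    """Return the label of the gallery face whose weights are closest to the probe's."""
    weights = project(face, mean_face, eigenfaces)
    distances = np.linalg.norm(gallery_weights - weights, axis=1)
    return labels[int(np.argmin(distances))]

# Synthetic data standing in for real photographs: 3 "people",
# each a random 8x8 pattern, with 4 slightly noisy images per person.
rng = np.random.default_rng(0)
bases = rng.normal(size=(3, 64))
labels = [person for person in range(3) for _ in range(4)]
faces = np.array([bases[p] + 0.05 * rng.normal(size=64) for p in labels])

mean_face, eigenfaces = fit_eigenfaces(faces, n_components=2)
gallery_weights = (faces - mean_face) @ eigenfaces.T

# A new noisy image of person 1, never seen during fitting.
probe = bases[1] + 0.05 * rng.normal(size=64)
predicted = nearest_identity(probe, mean_face, eigenfaces, gallery_weights, labels)
print(predicted)
```

Each 64-pixel image is reduced to just two weights here, which illustrates the idea of a template as a reduced set of data. Real photographs would need alignment and lighting normalization first, which this sketch leaves out.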

__

High-quality cameras in mobile devices have made facial recognition a viable option for authentication as well as identification. Apple’s iPhone X, for example, includes Face ID technology that lets users unlock their phones with a faceprint mapped by the phone’s camera. The phone’s software, which is designed with 3-D modelling to resist being spoofed by photos or masks, captures and compares over 30,000 variables. Face ID can be used to authenticate purchases with Apple Pay and in the iTunes Store, App Store and iBooks Store. Apple encrypts the faceprint data and stores it on the device itself, in the Secure Enclave, rather than in the cloud, and authentication takes place directly on the device. Developers can use Amazon Rekognition, an image analysis service that’s part of the Amazon AI suite, to add facial recognition and analysis features to an application. Google provides a similar capability with its Google Cloud Vision API. The technology, which uses machine learning to detect, match and identify faces, is being used in a wide variety of ways, including entertainment and marketing. The Kinect motion gaming system, for example, uses facial recognition to differentiate among players. Smart advertisements in airports are now able to identify the gender, ethnicity and approximate age of passers-by and target the advertisement to the person’s demographic. Facebook uses facial recognition software to tag individuals in photographs. Each time an individual is tagged in a photograph, the software stores mapping information about that person’s facial characteristics. Once enough data has been collected, the software can use that information to identify a specific individual’s face when it appears in a new photograph. To protect people’s privacy, a feature called Photo Review notifies the Facebook member who has been identified.

__

The use of facial recognition is important in law enforcement, as the facial verification performed by a forensic scientist can help to convict criminals. For example, in 2003, a group of men was convicted in the United Kingdom of credit card fraud on the basis of facial verification. Their images were captured on a surveillance tape near an ATM and their identities were later confirmed by a forensic specialist using facial recognition tools. Despite recent advances in the area, facial recognition in a surveillance system is often technically difficult. The main problem is that the system struggles to find the face, because people move, wear hats or sunglasses, or do not face the camera. Even if the face is found, identification might be difficult because the lighting (too bright or too dark) makes features hard to recognize. The resolution of the image and the camera angle are also important variables. Normalization performed by the computer might not be effective if the incoming image is of poor quality. One way to improve image quality is to use fixed cameras, especially in places like airports, government buildings, or sporting venues. In such cases all the people coming through are captured by the camera in a similar pose, making it easier for the computer to generate a template and compare it to a database. While most people do not object to the use of this technology to identify criminals, there are fears that images of people can be taken at any time, anywhere, without their permission. It is clear that the ability to identify people with 100% certainty using face recognition is still some time away. Nevertheless, facial recognition is an increasingly important identity verification method.

__

According to the World Face Recognition Biometrics Market, the face recognition market earned revenues of $186 million in 2005 and is likely to grow at a compound annual growth rate (CAGR) of 27.5 percent to reach $1,021.1 million in 2012. According to a Transparency Market Research (TMR) report, “the global facial recognition market has gained popularity from diverse emerging tech trends, whether it’s switching from 2D facial recognition technology to 3D and facial analytics. Because of the higher accuracy in terms of identifying facial features, the market for 3D facial recognition technology segment is expected to record faster growth as compared to 2D facial recognition technology during the forecast period. In addition, a growth of the market for facial analytics, an emerging technology used for examining facial images of people without disturbing their privacy, is further expected to record steady growth as compared to that for 2D facial recognition technology.” In China, employees use their faces to gain entry to their office buildings. Many industries are increasingly adopting this emerging technology, including healthcare, retail, hospitality, manufacturing and many others.
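As a quick sanity check on the first forecast, the compound growth arithmetic can be worked through directly. The sketch below assumes seven compounding periods (2005 to 2012); the function names are illustrative.

```python
def project_revenue(start, rate, years):
    """Revenue after compounding `rate` annually for `years` years."""
    return start * (1 + rate) ** years

def implied_cagr(start, end, years):
    """Annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1

years = 2012 - 2005  # assumption: seven compounding periods

projected_2012 = project_revenue(186.0, 0.275, years)
implied = implied_cagr(186.0, 1021.1, years)

print(f"Projected 2012 revenue at 27.5%: ${projected_2012:.1f} million")
print(f"CAGR implied by $186M -> $1,021.1M: {implied:.2%}")
```

Compounding at exactly 27.5 percent gives a figure a little below the quoted $1,021.1 million, and the implied rate comes out marginally above 27.5 percent, so the two quoted numbers are consistent with each other once rounding of the rate is allowed for.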

_____

The evaluation of a Facial Recognition system can be broken down into the following categories:

- Universality

Unlike the traits used by some other physical Biometric modalities (such as Fingerprint Recognition and Hand Geometry Recognition), a face is something every individual has. So, at least theoretically, everybody should be able to enrol into a Facial Recognition system.

- Uniqueness

The face by itself is not strongly unique. For example, members of the same family, and identical twins in particular, share many of the same facial features. Genetically, it is the overall facial structure we inherit that carries the strongest resemblance.

- Permanence

The structure of the face can change greatly over the lifetime of an individual. As described earlier, the biggest factors affecting it are weight loss and weight gain, the aging process, and voluntary changes made to the face. As a result, it is quite likely that an individual will have to be re-enrolled in the Facial Recognition system repeatedly to compensate for these variations.

- Collectability

It can be quite difficult to extract the unique features of the face. This is primarily because any changes in the external environment can have a huge impact. For instance, the differences in the lighting, lighting angles, and the distance from which the raw images are captured can have a significant effect on the quality of the Enrolment and Verification Templates.

- Acceptability

This is the category where Facial Recognition suffers the most. As described above, it can be used covertly, which greatly reduces its public acceptance.

- Resistance to circumvention

Unlike the other Biometric modalities, Facial Recognition systems can be very easily spoofed when 2-D models of the face are being used.

_____

Enhancing biometric precision:

The effectiveness of facial recognition technology depends on several key factors:

- Image quality: Is the system dealing with cooperative or non-cooperative subjects? Cooperative subjects are those who have voluntarily allowed their facial image to be captured. Non-cooperative subjects are those typically captured via surveillance cameras or by a witness using a smartphone, and their images are usually of lower quality.

- Algorithms for identification: The second key performance factor is the power of the algorithms that are used to determine similarities between facial features. The algorithms analyze the relative position, size, and/or shape of the eyes, nose, cheekbones and jaw. These features are then used to search for other images with matching features.

- Reliable databases: Lastly, facial recognition accuracy depends on the size and quality of the databases used; to recognize a face, you have to be able to compare it to something! The challenge is to establish matching points between the new image and the source image, in other words, photos of known individuals. Therefore, the larger the database of targeted images, the more likely a match can be found.
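The idea of analyzing the relative position of facial features can be illustrated with a small sketch. It assumes that landmark coordinates (eyes, nose, jaw) have already been located by some earlier step; the landmark names, the pixel coordinates and the choice to normalize by the distance between the eyes are all hypothetical choices made for illustration.

```python
import math
from itertools import combinations

def geometry_features(landmarks):
    """Turn landmark positions into a scale-independent feature vector.

    landmarks: dict mapping a landmark name to an (x, y) pixel position.
    Every pairwise distance is divided by the distance between the eyes,
    so the same face photographed closer or farther away gives the same features.
    """
    scale = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    names = sorted(landmarks)
    return [
        math.dist(landmarks[a], landmarks[b]) / scale
        for a, b in combinations(names, 2)
    ]

def feature_distance(f1, f2):
    """Smaller values mean the two faces have more similar geometry."""
    return math.dist(f1, f2)

# Hypothetical landmark positions in pixels.
face_a = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "jaw": (50, 95)}
face_a_zoomed = {name: (2 * x, 2 * y) for name, (x, y) in face_a.items()}
face_b = {"left_eye": (28, 42), "right_eye": (74, 42), "nose": (52, 70), "jaw": (50, 100)}

same_person = feature_distance(geometry_features(face_a), geometry_features(face_a_zoomed))
different_person = feature_distance(geometry_features(face_a), geometry_features(face_b))
print(same_person, different_person)
```

Searching a database then amounts to computing this distance between the new image’s features and those of each stored image and keeping the closest candidates. Production systems use many more landmarks, or learned features, and must also handle head pose, which this sketch ignores.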

__

Is Facial Recognition Technology Expensive?

Some technologies are expensive, especially the ones that require specialized hardware, customizations, and on-site support. However, software-based applications tend to be more affordable. Luckily, face recognition falls into this category. Unless the event planner has excessive requirements, the associated investment is just a few cents per attendee expected to register. Face recognition is a great investment. In most cases, the associated cost savings alone are enough to make it pay for itself. For example, it can increase the check-in speed by 2-10 times. As a result, you can check in the same number of attendees using fewer check-in stations, less support staff, and a smaller registration area, which are all great ways to improve the bottom line for your event. As with everything, there is always a trade-off between quality and cost. Therefore, you should choose your vendor carefully in order to reap the benefits.

_

Are there High Requirements for Facial Recognition?

Uploading one good picture during the online registration will suffice for most applications. Any device with a decent camera, such as a laptop, tablet, or cell phone, is enough to recognize a person during check-in. The video is streamed to the cloud and processed there, so the computation requirements on the device are minimal. Depending on your flexibility and expectations, the required internet bandwidth can be less than 0.5 Mbps (upload) for each device powered by face recognition. For full-blown implementations and stringent requirements, a little more effort might be required. On these occasions, on-site support could be a good idea. However, in the vast majority of cases, and in comparison with other high-technology alternatives, face recognition is probably the most user-friendly and easy-to-use option. You simply point and recognize. Remote support is enough for most events, and it will rarely be needed.

______

______

Face Detection versus Face Recognition:

The terms face detection and face recognition are sometimes used interchangeably. But there are actually some key differences. To help clear things up, let’s take a look at the term face detection and how it differs from the term face recognition.

What is Face Detection?

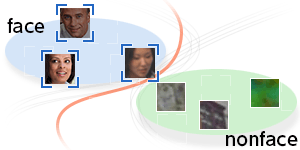

Face detection refers to computer technology that is able to identify the presence of people’s faces within digital images. To do this, face detection applications use machine learning and formulas known as algorithms to detect human faces within larger images. These larger images might contain numerous objects that aren’t faces, such as landscapes, buildings and other parts of the human body (e.g. legs, shoulders and arms).

Face detection is a broader term than face recognition. Face detection just means that a system is able to identify that there is a human face present in an image or video. Face detection has several applications, only one of which is facial recognition. Face detection can also be used to auto-focus cameras, and it can be used to count how many people have entered a particular area. It can even be used for marketing purposes. For example, advertisements can be displayed the moment a face is detected.

What is Face Recognition?

Face recognition can confirm identity. It is therefore used to control access to sensitive areas. One of the most important applications of face detection is facial recognition. Face recognition describes a biometric technology that goes way beyond recognizing when a human face is present. It actually attempts to establish whose face it is. Here, computer software attempts to make a positive identification of a face in a photo or video image by matching it against a pre-existing database of faces.