Dr Rajiv Desai

An Educational Blog

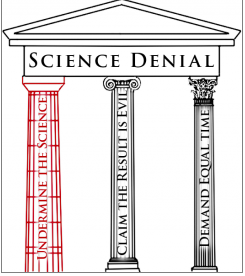

IMITATION SCIENCE

_

The figure below shows imitation jewelry that resembles real jewelry but costs only 788 rupees.

In the same way, imitation science resembles science but fares poorly when judged by scientific methods:

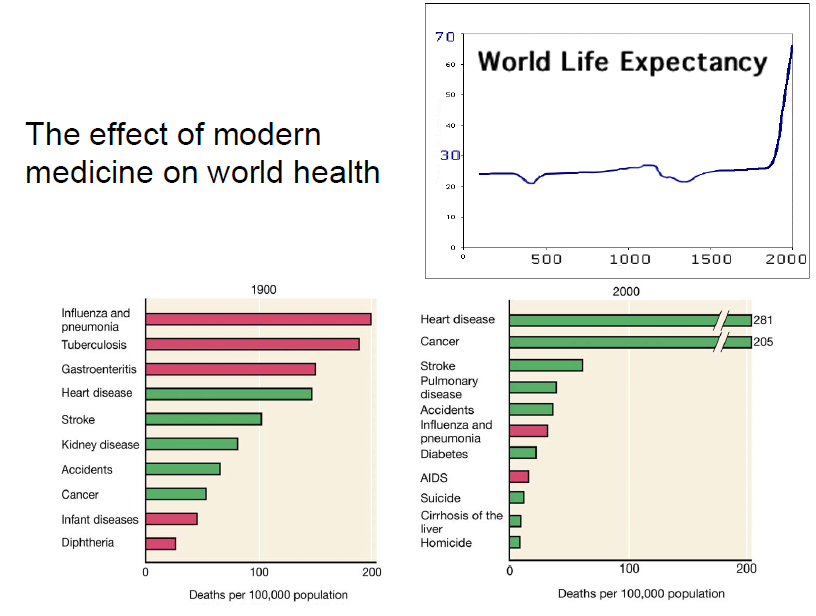

There is no evidence to show that high-dose vitamins prevent cancer or cardiovascular disease or maintain good health in healthy (non-pregnant) adults. There is no evidence to show that calcium supplements prevent fractures in normal adults, including post-menopausal women. Yet billions of people take vitamin and calcium pills recommended by doctors and/or by media driven by the pharmaceutical industry, which display purported scientific studies (imitation science) showing benefits of vitamin and calcium supplements.

_______

Prologue:

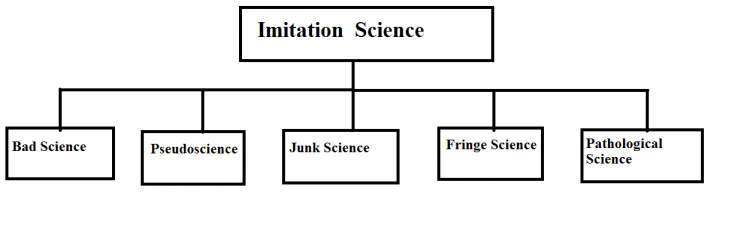

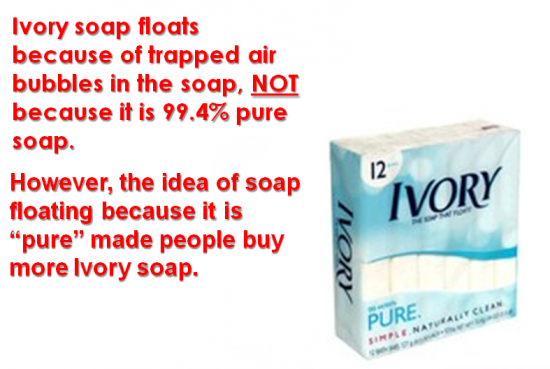

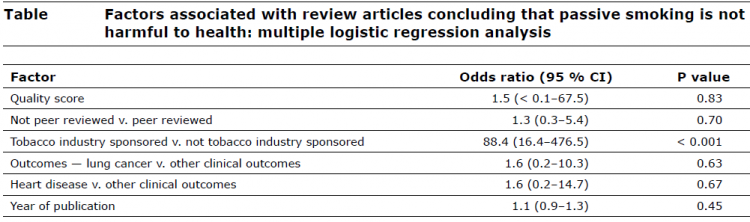

There is a Calvin and Hobbes cartoon in which Calvin asks his Dad: “Why does ice float?” Dad responds: “Because it’s cold. Ice wants to get warm, so it goes to the top of liquids in order to be nearer to the Sun.” The term bad science is commonly used to describe well-intentioned but incorrect, obsolete, incomplete, or over-simplified expositions of scientific ideas. An example is the statement that electrons revolve in orbits around the atomic nucleus, a picture that was discredited in the 1920s but is so much more vivid and easily grasped than the one that supplanted it that it shows no sign of dying out.

I am coining a new term, “Imitation Science”. Imitation science is akin to imitation jewelry. Just as imitation jewelry looks like real jewelry but costs much less, imitation science looks like real science but fares poorly on scientific methods and gives fake explanations and results; at best it misleads people, and at worst it fools them, with numerous disastrous consequences. Bad science is still science, but it is poorly done. Pseudoscience isn’t science at all, but it pretends to be. We all need to know how to differentiate between science, bad science, and pseudoscience. Astronomy is science; astrology is pseudoscience. Evolutionary biology is science; creationism is pseudoscience. What about cultural anthropology, abstract economics, string theory, and evolutionary psychology: science or pseudoscience? Is pseudoscience just politically incorrect science? Or is there an objective difference?

Sometimes bad science is a product of mistakes; at other times it is a product of ideologues purposefully manipulating data. Bad science “starts” when bogus findings break out of the laboratory via mass media and quickly become accepted as fact by the masses. How does this happen? Most people agree that the Fukushima Daiichi nuclear power plant meltdown was a disaster. It is not illogical to assume that it has the potential to have a very negative impact on the environment and on the health of living organisms. An article was published in Al Jazeera titled “Fukushima: It’s much worse than you think”, which claimed that scientific experts believe Japan’s nuclear disaster to be far worse than governments are revealing to the public. Naturally, people assumed that this article was reporting the truth. It was tweeted 9,878 times and liked on Facebook 49,000 times. As a result, people were led to believe that infant mortality rates in the northwest of the United States had increased by 35% and that the government was covering it up. It wasn’t true. Michael Moyer at Scientific American easily and clearly demonstrated how these so-called scientists had used selective and manipulated data to falsely conclude that the Fukushima disaster was causing babies to die at alarming rates in the United States. But the damage was already done. It is likely that more people read this bogus science reported by Al Jazeera and other media outlets, believed it and used it to validate and confirm their preexisting beliefs, than read Michael Moyer’s debunking article. Whether or not these beliefs are grounded in reality has now become a moot point; the point is that they are being reinforced by falsehood. This can often cause an ideological stalemate. Neither side of a debate can make a legitimate claim to truth, because neither side has purged its argument of the bogus science used to support its claims. Thus elections, policy decisions and the like become less a contest of truth versus falsehood and more a contest of who can get their bad science to reach the widest audience and garner the largest following. Both sides of the debate become tainted, and truth becomes nearly impossible to find. The global implications are dangerous.

There are several major debates raging right now in the political landscape: global warming, HIV control, stem-cell research, and Iran’s uranium enrichment program, to name a few. All of these debates have very definite science to back them up, and science is used on both sides. Much of that science is bad science, and it can be found on both sides. Somewhere out there, the truth is hidden. To find the truth, I have to show the world what science is, what bad science is and what pseudoscience is. To cut through the jugglery of words like bad science, pseudoscience, junk science, fringe science, fake science and pathological science, I coin the new term “Imitation science”, which includes all of these terms.

_

_

Let me begin the discussion of imitation science with two examples:

Example 1:

Color of car and its path experiment:

Let us stand on the balcony of our flat overlooking a road where hundreds of cars pass every day. At the end of the road there is a traffic signal where some cars turn left, some turn right and some go straight. Let us observe a sample of 10,000 cars (a large sample). At the end of the study we find that 30 % of the cars turned left, 30 % turned right and 40 % went straight. Of the 30 % that turned left, 70 % were red. Of the 30 % that turned right, 75 % were white, and of the 40 % that went straight, 80 % were black. We conclude that if you have a red car, you are more likely to turn left; if you have a white car, you are more likely to turn right; and if you have a black car, you will go straight. This is imitation science.

Why is this imitation science? Let me explain.

First, there is no logical reason to assume that the color of a car has anything to do with its path. If, however, young people more commonly drove red cars and there was a dance bar down the road to the left, red cars would be more likely to turn left. But such data are missing.

Second, the basic conclusion is easily falsified. Drive your own red car to the signal and turn right, a hundred times over. Every single time it will turn right, at your will.

Third, let a friend conduct this experiment on another day. The results will be remarkably different; the experiment cannot be replicated.

Fourth, out of 10,000 cars, 3,000 (30 %) turned left, and out of these 3,000, 2,100 (70 %) were red. That means that out of 10,000 cars, 2,100 red cars turned left. We do not know how many red cars did not turn left. Let us call the number of red cars that did not turn left A. Then the total number of red cars would be 2100 + A, and the chance of a red car turning left would be

$$\text{chance of turning left (red car)} = \frac{2100 \times 100}{2100 + A}\ \%$$

For example, if A = 900, the chance of turning left for a red car will be 2100 × 100 / 3000 = 70 %.

Since we did not know A (the number of red cars that did not turn left), our earlier conclusions were false, as they were based on bad statistics. Missing variables coupled with bad statistics give erroneous conclusions.
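
The arithmetic of the fourth point can be sketched in a few lines of Python. The key idea is that the 70 % figure is the share of left-turning cars that were red, P(red | left), while the conclusion “red cars turn left” needs the reverse, P(left | red), which depends on A. Because A was never recorded, the values tried below are purely hypothetical, and the function name `chance_left_given_red` is an illustrative choice.

```python
def chance_left_given_red(red_left: int, red_not_left: int) -> float:
    """Percent of red cars that turned left: red_left * 100 / (red_left + red_not_left)."""
    total_red = red_left + red_not_left
    if total_red == 0:
        raise ValueError("no red cars observed")
    return red_left * 100 / total_red


cars = 10_000
left = cars * 30 // 100        # 3,000 cars turned left
red_left = left * 70 // 100    # 2,100 of them were red: this is P(red | left), not P(left | red)

# A (red cars that did NOT turn left) was never counted; try a few hypothetical values.
for a in (0, 900, 1_500):
    print(f"A = {a:>5}: chance a red car turns left = {chance_left_given_red(red_left, a):.1f} %")
```

With A = 0, 900 and 1,500 the printed chances are 100.0 %, 70.0 % and 58.3 %: the same survey supports very different conclusions depending on a number nobody counted.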

_

Example 2:

Lung cancer and smoking:

Let us take a sample of two people, Mr. A, a smoker, and Mr. B, a non-smoker, both 40 years old and healthy, and follow them up for 10 years. After 10 years you find that A is healthy despite smoking daily, while B has lung cancer despite being a non-smoker. Your conclusion would be that smoking has nothing to do with lung cancer and that, in fact, smokers live healthy lives. This is imitation science. Your facts are correct, but your methodology is wrong: the sample is too small. If you had done the same study with 100 people, 50 % smokers and 50 % non-smokers, the results would have been different. If you had done the same study with 1 million people, the results would have convincingly shown that smoking does cause lung cancer. Not all smokers develop lung cancer, and not all lung cancer patients are smokers. But if you smoke, your chance of getting lung cancer is significantly higher than that of a non-smoker. This is the truth.
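
The sample-size point can be illustrated with a small simulation. The ten-year cancer risks below (5 % for smokers, 0.5 % for non-smokers) are hypothetical numbers chosen only for illustration, not real epidemiological estimates. With one person per group the outcome is mostly luck; with hundreds of thousands per group the difference in risk shows up clearly in every run.

```python
import random

# Hypothetical 10-year lung-cancer risks, for illustration only.
RISK_SMOKER = 0.05
RISK_NON_SMOKER = 0.005


def run_study(n_per_group: int, seed: int) -> tuple[float, float]:
    """Follow n smokers and n non-smokers; return the observed cancer rate in each group."""
    rng = random.Random(seed)
    smokers_with_cancer = sum(rng.random() < RISK_SMOKER for _ in range(n_per_group))
    non_smokers_with_cancer = sum(rng.random() < RISK_NON_SMOKER for _ in range(n_per_group))
    return smokers_with_cancer / n_per_group, non_smokers_with_cancer / n_per_group


# Repeat each study size with three different random seeds.
for n in (1, 50, 200_000):
    for seed in range(3):
        s, ns = run_study(n, seed)
        print(f"n = {n:>7} per group, seed {seed}: smokers {s:.3f}, non-smokers {ns:.3f}")
```

The tiny studies swing between “nobody gets cancer” and occasional flukes; only the large studies reliably reproduce the underlying difference.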

_

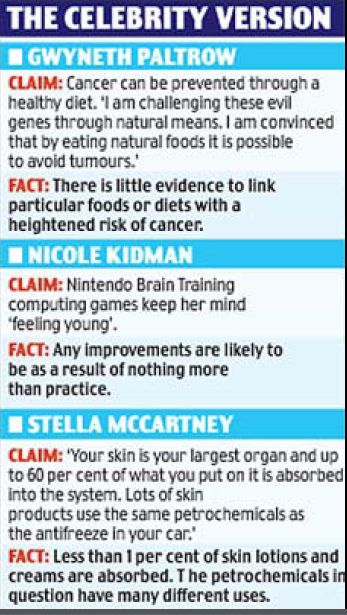

If you understand these two examples in letter and spirit, you know what imitation science is, and you also know how you are misled daily by imitation science masquerading as good science on TV, in newspapers and magazines, in celebrity talks and advertisements, and at social gatherings; and also by your doctors, dieticians, nutritionists, gymnasium peers, colleagues at the workplace and astrologers.

_____________

The growth of science:

We live in an age of science and technology. Science’s achievements touch all our lives through technologies like computers, jet planes, cell phones, the Internet and modern medicine. Our intellectual world has been transformed through an immense expansion of scientific knowledge, down into the most microscopic particles of matter and out into the vastness of space, with hundreds of billions of galaxies in an ever-expanding universe. The industrial applications of technological developments that have resulted from scientific research have been startling, to say the least, and the statistics describing the tangible benefits of this most powerful system stagger the imagination. The historian of science, Derek J. de Solla Price, in his book Little Science, Big Science, has observed that “using any reasonable definition of a scientist, we can say that 80 to 90 percent of all the scientists that have ever lived are alive now. Alternatively, any young scientist, starting now and looking back at the end of his career upon a normal life span, will find that 80 to 90 percent of all scientific work achieved by the end of the period will have taken place before his very eyes, and that only 10 to 20 percent will antedate his experience” (1963, pp. 1-2). De Solla Price’s conclusions are well supported by evidence. There are now, for example, well over 100,000 scientific journals published each year, producing over six million articles to be digested, clearly an impossible task. The Dewey Decimal Classification now lists well over 1,000 different classifications under the title of “Pure Science,” within each of which are dozens of specialty journals. As the number of individuals working in the field grows, so too does the amount of knowledge, creating more jobs, attracting more people, and so on.
The membership growth curves for the American Mathematical Society (founded in 1888) and the Mathematical Association of America (founded in 1915) are dramatic demonstrations of this phenomenon. Regarding the accelerating rate at which individuals are entering the sciences, in 1965 the Junior Minister of Science and Education in Great Britain made this observation: ‘For more than 200 years scientists everywhere were a significant minority of the population. In Britain today they outnumber the clergy and the officers of the armed forces’. Transportation speed has also increased in geometric progression, with most of the change made in the last one percent of human history. Fernand Braudel tells us, for example, that “Napoleon moved no faster than Julius Caesar” (1979, p. 429). But in the last century the growth in the speed of transportation has been astronomical (figuratively and literally). One final example of technological progress based on scientific research will drive the point home. Timing devices in various forms (dials, watches, and clocks) have improved in their efficiency, and the decrease in error can be graphed over time. In virtually every field of human achievement associated with science and technology, the rate of progress matches that of the examples above. Reflecting on this rate of change, economist Kenneth Boulding observed (Hardison, 1988, p. 14): “As far as many statistical series related to activities of mankind are concerned, the date that divides human history into two equal parts is well within living memory. The world of today is as different from the world in which I was born as that world was from Julius Caesar’s. I was born in the middle of human history.”
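
De Solla Price’s “80 to 90 percent” figure follows naturally from steady exponential growth. A minimal sketch, assuming (hypothetically) that the number of new scientists doubles every T years and that each scientist stays active for L years: integrating the growth curve, the share of all scientists who ever lived who are active today is 1 − 2^(−L/T). The doubling times and the 45-year career below are illustrative assumptions, not figures from the text.

```python
def fraction_alive_now(doubling_years: float, career_years: float) -> float:
    """Share of all scientists ever who are active today.

    Assumes entrants grow exponentially (doubling every `doubling_years`) and each
    stays active for `career_years`; integrating the growth curve gives 1 - 2**(-L/T).
    """
    return 1 - 2 ** (-career_years / doubling_years)


# Hypothetical doubling times, with a hypothetical 45-year active career.
for t in (10, 15, 20):
    share = fraction_alive_now(doubling_years=t, career_years=45)
    print(f"doubling time {t} years -> {share:.1%} of all scientists are alive now")
```

With T = 15 and L = 45 the share is exactly 87.5 % (that is, 1 − 1/8), inside de Solla Price’s range; faster doubling pushes it higher and slower doubling pulls it lower.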

________

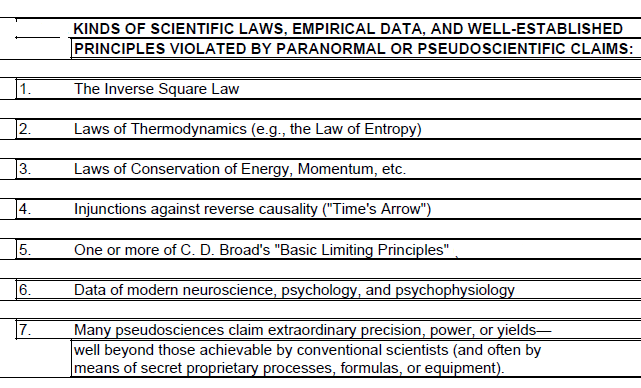

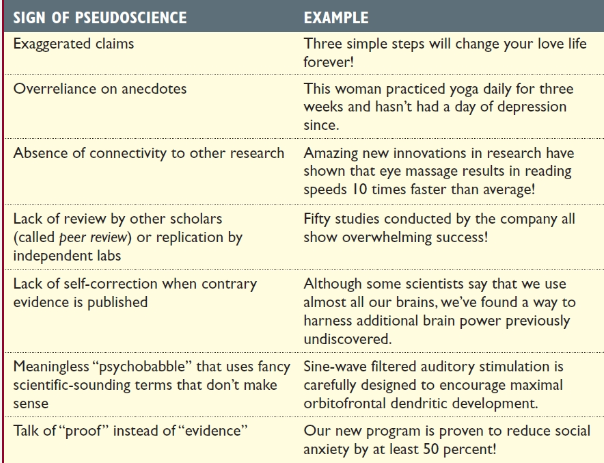

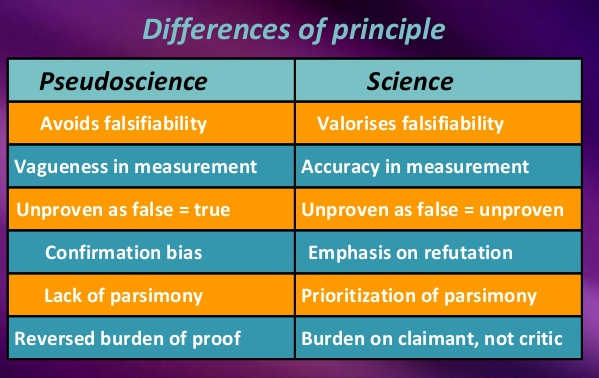

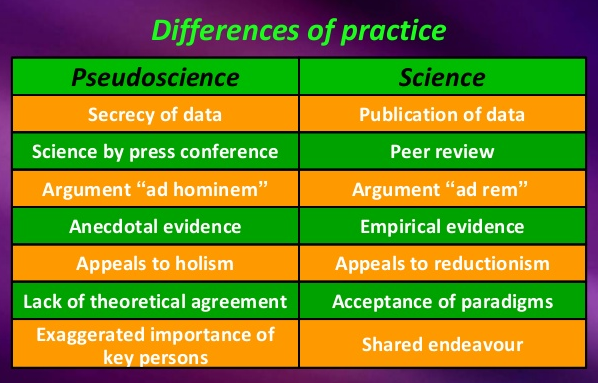

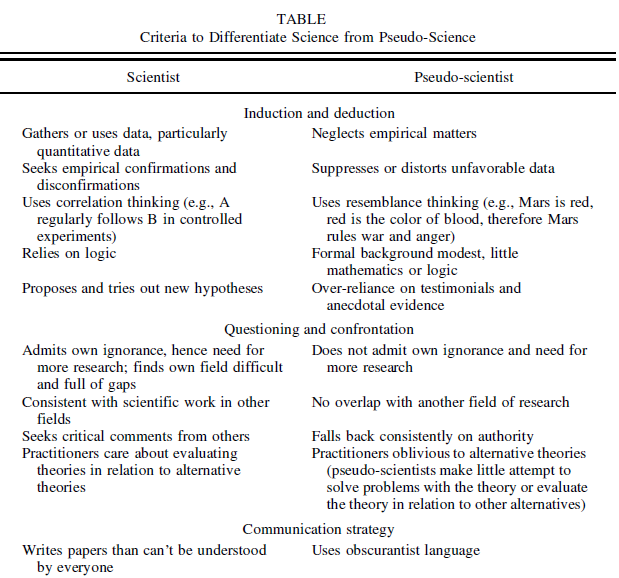

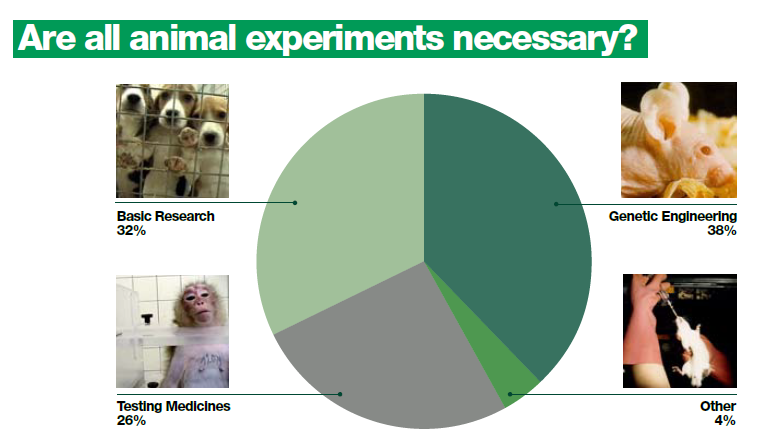

Demarcation between science and imitation science [vide infra]:

Demarcations of science from imitation science can be made for both theoretical and practical reasons. From a theoretical point of view, the demarcation issue is an illuminating perspective that contributes to the philosophy of science in the same way that the study of fallacies contributes to the study of informal logic and rational argumentation. From a practical point of view, the distinction is important for decision guidance in both private and public life. Since science is our most reliable source of knowledge in a wide variety of areas, we need to distinguish scientific knowledge from its look-alikes. Due to the high status of science in present-day society, attempts to exaggerate the scientific status of various claims, teachings, and products are common enough to make the demarcation issue pressing in many areas.

_

Climate deniers are accused of practicing pseudoscience as are intelligent design creationists, astrologers, UFOlogists, parapsychologists, practitioners of alternative medicine, and often anyone who strays far from the scientific mainstream. The boundary problem between science and pseudoscience, in fact, is notoriously fraught with definitional disagreements because the categories are too broad and fuzzy on the edges, and the term “pseudoscience” is subject to adjectival abuse against any claim one happens to dislike for any reason. In his 2010 book Nonsense on Stilts, philosopher of science Massimo Pigliucci concedes that there is “no litmus test,” because “the boundaries separating science, nonscience, and pseudoscience are much fuzzier and more permeable than Popper (or, for that matter, most scientists) would have us believe.” I call creationism “pseudoscience” not because its proponents are doing bad science—they are not doing science at all—but because they threaten science education in America, they breach the wall separating church and state, and they confuse the public about the nature of evolutionary theory and how science is conducted. We can demarcate science from pseudoscience less by what science is and more by what scientists do. Science is a set of methods aimed at testing hypotheses and building theories. If a community of scientists actively adopts a new idea and if that idea then spreads through the field and is incorporated into research that produces useful knowledge reflected in presentations, publications, and especially new lines of inquiry and research, chances are it is science. This demarcation criterion of usefulness has the advantage of being bottom up instead of top down, egalitarian instead of elitist, nondiscriminatory instead of prejudicial.

_

J. Robert Oppenheimer said it best when he wrote: “The scientist is free, and must be free to ask any question, to doubt any assertion, to seek any evidence, to correct any errors.” In the second century AD, Claudius Ptolemy provided evidence that the Earth was the center of our universe, with the Sun orbiting around it. That conclusion seemed valid because of the arguments supporting it, and no one felt the need to dispute an idea that seemed self-evident. Some 1,400 years later, Nicolas Copernicus discovered that Ptolemy had to be wrong. He put together his evidence but held back the results until late in his life, because they would not be easily accepted. He knew his data would challenge everything known about the planets, the stars, and our place in the universe. Both Ptolemy and Copernicus were good scientists, because they both worked with the information and tools they had at the time and drew conclusions from the data available. Still, Ptolemy was wrong and Copernicus was right. Science is a rigorous discipline that uses peer review to ensure the quality of research. Anyone can make a scientific claim, but it is the other scientists in that discipline or field who will verify or reject the results. It is the process of research followed by peer review that eventually weeds out researchers who 1) have obtained incorrect or misinterpreted results, 2) are incompetent, or 3) attempt to subvert science with fraudulent data for their own agenda. Unfortunately, it can take years for the review process to reveal whether one scientist’s research is valid or not.

_

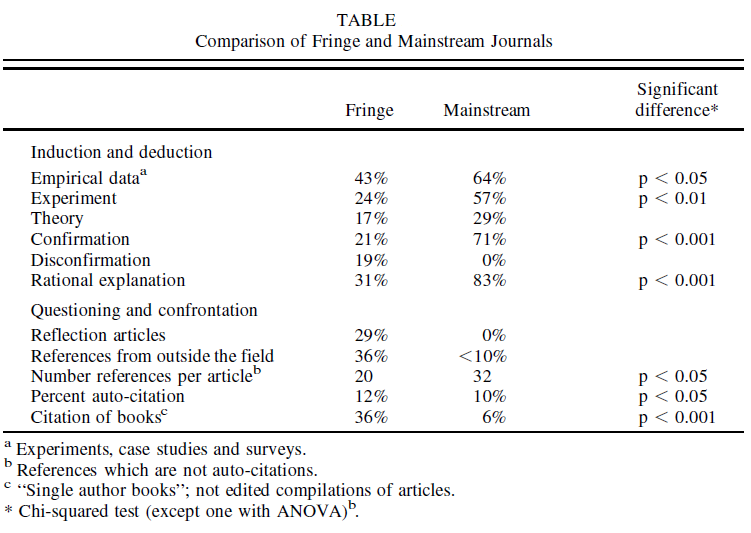

Pseudoscience, fringe science, junk science and bad science:

An area of study or speculation that masquerades as science in an attempt to claim a legitimacy that it would not otherwise be able to achieve is sometimes referred to as pseudoscience, fringe science, or “alternative science”. Another term, junk science, is often used to describe scientific hypotheses or conclusions which, while perhaps legitimate in themselves, are believed to be used to support a position that is not justified by the totality of evidence. Physicist Richard Feynman coined the term “cargo cult science” for pursuits that have the formal trappings of science but lack “a principle of scientific thought that corresponds to a kind of utter honesty” that allows their results to be rigorously evaluated. Various types of commercial advertising, ranging from hype to fraud, may fall into these categories. There can also be an element of political or ideological bias on all sides of such debates. Sometimes research may be characterized as “bad science”: research that is well-intentioned but is seen as an incorrect, obsolete, incomplete, or over-simplified exposition of scientific ideas. Bad science and pseudoscience should not be confused with each other, however. While pseudoscience may also be bad science, most bad science is not generally considered pseudoscience. In fact, bad science is normal. Pseudoscience, on the other hand, is defined precisely by deviating from the norm of science. While that norm is certainly defined in part by methodological standards, it is also defined by social, cultural, and historical factors. “Normal science” is what “normal” scientists do (in “normal” laboratories and “normal” universities, backed by “normal” means of finance). Pseudoscience is what the cranks do, often in their spare time and with the backing of questionable coteries of interests. Bad science, being normal, has the legitimacy conferred by association with respected institutions.
For this reason, bad science ought to be a much graver concern than pseudoscience. Especially to people who care about the state of science, and about the welfare of a modern society that is increasingly dependent on reliable information on crucial topics, bad science is without doubt the more dangerous of the two. How we as a society solve the energy crisis, stop global warming, cure cancer and Alzheimer’s disease, and feed 10 billion people will eventually be decided by the readers of Nature, not the Fortean Times. It is therefore supremely important that scientific journals are reliable and that bad science is kept at bay. Yet among scientific skeptics and professional debunkers, much time and effort has been wasted on pointing out the obvious holes, gaps, and inconsistencies in pseudoscience. Pseudoscience would thus seem appropriately defined as research that does not avail itself of the scientific method, whereas ‘bad’ science follows that method poorly, for whatever reason, or lies about or fabricates data.

_________

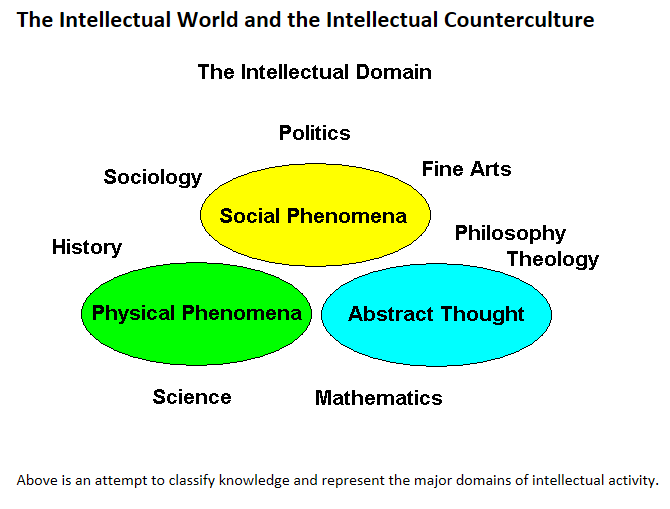

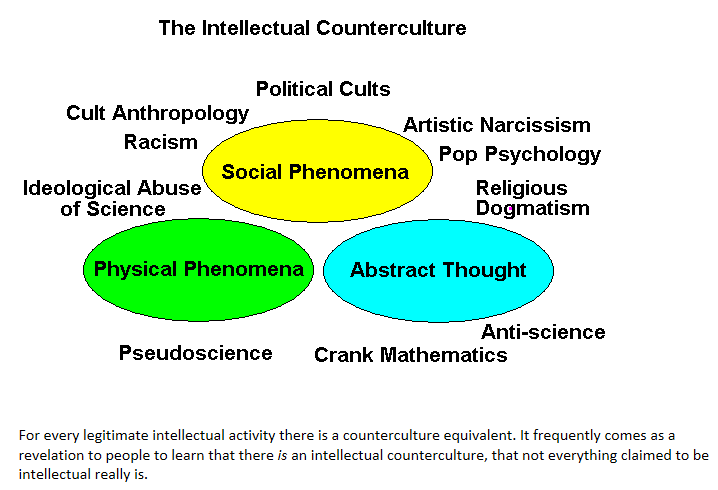

The philosophy is simple. For every intellectual activity, there is intellectual counter-culture. For every good science, there is imitation science.

_

_____________

Let me start with the definition of science and then define the other terms, including the various forms of ‘imitation science’:

_

Science:

Science (from Latin scientia, meaning “knowledge”) is best defined as a careful, disciplined, logical search for knowledge about any and all aspects of the universe, obtained by examination of the best available evidence and always subject to correction and improvement upon discovery of better evidence. Science is a search for basic truths about the Universe, a search which develops statements that appear to describe how the Universe works, but which are subject to correction, revision, adjustment, or even outright rejection, upon the presentation of better or conflicting evidence. Science is a systematic enterprise that builds and organizes knowledge in the form of testable explanations and predictions about the universe. In an older and closely related meaning, “science” also refers to a body of knowledge itself, of the type that can be rationally explained and reliably applied. A practitioner of science is known as a scientist. In the 17th and 18th centuries scientists increasingly sought to formulate knowledge in terms of laws of nature such as Newton’s laws of motion. And over the course of the 19th century, the word “science” became increasingly associated with the scientific method itself, as a disciplined way to study the natural world, including physics, chemistry, geology and biology. Science is self-correcting, or so the old cliché goes. The gist is that one scientist’s error will eventually be righted by those who follow, building on the work. The process of self-correction can be slow – even decades long but eventually the scientific method will right the canon.

_

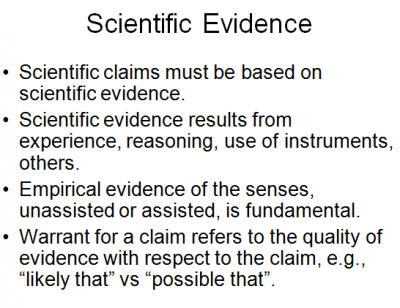

Anecdotal report:

Anecdotal means based on personal accounts or casual observations rather than rigorous or scientific analysis, and therefore not necessarily true or reliable. Every student of science must understand that an anecdotal report, piece of information or study is not science. Anecdotal information propagated by the media is neither science nor truth. For example, you read in a newspaper that people who drink tea are less likely to suffer a heart attack. This is not a lie; it is an anecdotal report. Some researcher found in a study that people who drink tea daily have a lower incidence of heart attack, reasoning that tea contains antioxidants and antioxidants supposedly reduce inflammation of atheroma. This ought to be confirmed by double-blind, placebo-controlled randomized trials on a much larger scale. Then it will become science. You should not start drinking tea regularly on the strength of one anecdotal report. Newspapers publish such reports to increase their sales. You may still drink tea if you like it, but not to save your life.
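
Why an observational finding like this is not enough can be sketched with a toy simulation. It assumes, purely hypothetically, that tea has no effect at all on heart attacks, but that health-conscious people both drink more tea and have fewer heart attacks. An observational study then shows tea drinkers doing better, while assigning tea by coin flip (the “randomized” part of a randomized trial) makes the spurious difference vanish. Blinding and placebo control are not modeled; all probabilities and the `simulate` function are illustrative assumptions.

```python
import random


def simulate(n: int, randomized: bool, seed: int = 1) -> float:
    """Heart-attack rate among tea drinkers minus the rate among non-drinkers.

    Hypothetical model: tea has NO effect on risk, but health-conscious people
    are more likely to drink tea and also have fewer heart attacks (a confounder).
    """
    rng = random.Random(seed)
    people = {True: 0, False: 0}   # tea drinker? -> number of people
    attacks = {True: 0, False: 0}  # tea drinker? -> number of heart attacks
    for _ in range(n):
        health_conscious = rng.random() < 0.5
        if randomized:
            tea = rng.random() < 0.5  # a coin flip decides who drinks tea
        else:
            tea = rng.random() < (0.8 if health_conscious else 0.2)  # people choose for themselves
        risk = 0.05 if health_conscious else 0.15  # tea never changes the risk
        people[tea] += 1
        attacks[tea] += rng.random() < risk
    return attacks[True] / people[True] - attacks[False] / people[False]


print(f"observational study: {simulate(200_000, randomized=False):+.3f}")
print(f"randomized trial:    {simulate(200_000, randomized=True):+.3f}")
```

Under these assumptions the observational run shows tea drinkers with roughly six percentage points fewer heart attacks, while the randomized run shows a difference close to zero, which is the true effect of tea in this model.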

_

Non-Science:

Simply put, non-science refers to inquiry, academic work or disciplines that do not involve the process of empirical verification, or the scientific process, to generate their products or knowledge base. These are disciplines that do not adopt a systematic methodology based on evidence. Many of the greatest human achievements represent non-science. They are activities that don’t purport to be scientific and are easily identified as non-scientific in their nature, including the arts, religion, and even philosophy. They usually involve creative processes and the acceptance of subjective knowledge, belief, revelation or faith in establishing their epistemological basis. The rejection of the principles of scientific inquiry is also frequently acknowledged in much postmodern thinking, which can also be categorized as non-science. Remember, imitation science and non-science are different. Imitation science resembles or masquerades as science, while non-science has nothing to do with science, not even a pretense of it.

_

Faith:

Faith is non-science. Faith may be defined briefly as an illogical belief in the occurrence of the improbable. I would add irrational and highly delusional to the mix when faith requires one to accept magical violations of the well known, well tested or easily demonstrated laws of Nature. Science is progress and the future. Faith is regression to the Dark Ages.

_

Anti-science:

Anti-science is a position that rejects science and the scientific method. People holding antiscientific views do not accept that science is an objective method, as it purports to be, or that it generates universal knowledge. They also contend that scientific reductionism in particular is an inherently limited means to reach understanding of the complex world we live in. Anti-science is a rejection of “the scientific model [or paradigm]… with its strong implication that only that which was quantifiable, or at any rate, measurable… was real”. In this sense, it comprises a “critical attack upon the total claim of the new scientific method to dominate the entire field of human knowledge”. The term “anti-science” refers to persons or organizations that promote their ideology over scientifically-verified evidence, either by denying said evidence and/or inventing their own. Anti-science positions are maintained and promoted especially in areas of conflict between the politically- or religiously-motivated pseudo-scientific position and actual science. In addition, anti-science positions are normally couched in reassuring code words, such as “intelligent design”, in order to appear less distortive of science. Anti-science proponents also criticize what they perceive as the unquestioned privilege, power and influence science seems to wield in society, industry and politics; they object to what they regard as an arrogant or closed-minded attitude amongst scientists. Common anti-science targets include evolution, global warming, and various forms of medicine, although other sciences that conflict with the anti-science ideology are often targeted as well. Religious anti-science philosophy considers science as an anti-spiritual and materialistic force that undermines traditional values, ethnic identity and accumulated historical wisdom in favor of reason and cosmopolitanism. 
The modern usage of the term should not be confused with the anti-science movement in the 1960s and 1970s, which was largely concerned with the possible dehumanizing aspects of uncontrolled scientific and technological advancement.

_

Bad Science:

Bad science is simply scientific work that is carried out poorly, or that yields erroneous results, because of fallacies in reasoning, in hypothesis generation and testing, or in the methods involved. Science, like any endeavor, can be carried out badly, often with the most noble motives. Occasionally scientists deliberately mislead for personal gain, but in most cases bad science results from errors in the scientific process. Sometimes these errors are the result of poor practices, or of the researchers’ unconscious imposition of their beliefs (looking for the answer they believe in). Science is an extraordinary process but, as we have seen, it is not perfect, and it has one notable flaw: it is carried out by people who are unavoidably influenced by their own beliefs, and who are sometimes insufficiently trained and make mistakes.

_

Quasi-science:

Quasi-science is a term sometimes encountered, and it is difficult to separate from pseudoscience. We may consider that quasi-science resembles science, having some of the form but not all of the features of scientific inquiry. Quasi-science involves an attempt to use a scientific approach, but the development of a scientific theoretical basis or the application of scientific methodologies is insufficient for the work to be considered established science. Differentiating quasi-science from pseudoscience or bad science is complex, and there is certainly some overlap, as some quasi-science falls into the realm of pseudoscience. But generally, quasi-science can be considered work involving beliefs commonly held in popular science that do not meet the rigorous criteria of scientific work. This is often seen with “pop” science, which may blur the divide between science and pseudoscience among the general public, and it may also be seen in much science fiction. For example, ideas about time travel, immortality, aliens and sentient machines are frequently discussed in the media, although there is insufficient empirical basis for much of this to be seen as scientific knowledge at this time. Quasi-science does not normally reject science or purport to be a new or alternative science, and it may be developed into scientific work through the application of rigorous scientific methods.

_

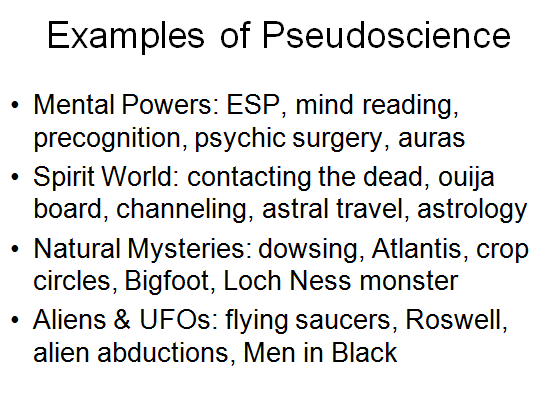

Pseudoscience:

Pseudoscience is a belief or process which masquerades as science in an attempt to claim a legitimacy which it would not otherwise be able to achieve on its own terms. The most important of its defects is usually the lack of the carefully controlled and thoughtfully interpreted experiments which provide the foundation of the natural sciences and which contribute to their advancement. Michael Shermer defined pseudoscience as “claims presented so that they appear [to be] scientific even though they lack supporting evidence and plausibility”. In contrast, science is “a set of methods designed to describe and interpret observed and inferred phenomena, past or present, and aimed at building a testable body of knowledge open to rejection or confirmation” (Shermer 1997, p. 17). Merriam-Webster Dictionary defines pseudoscience as a system of theories, assumptions, and methods erroneously regarded as scientific.

_

Parascience:

Parascience (Spirituality, New Age, Astrology & Self-help / Alternative Belief Systems) is the study of subjects that are outside the scope of traditional science because they cannot be explained by accepted scientific theory or tested by conventional scientific methods. Pseudoscience is research/theorizing that denies at least one of ‘normal’ science’s axioms or supports the existence of ‘things’ that ‘normal’ science denies, if that research is conducted outside of ‘normal’ channels. It’s preferable to see pseudoscience and science as an epistemological spectrum. At one end, there is an ideal of trying to only gain or lose confidence in an idea under the influence of values that tend to inform a scientific methodology (appropriate use of logic, falsification, blinding, rigour, repetition etc.). At the other there are influences of culture, tribalism, resources, esteem and so forth, that increase or decrease that confidence in an idea for irrational reasons.

_

Fringe science:

There are differing definitions of fringe science. By one definition it is valid, but not mainstream science, whilst by another broader definition it is generally viewed in a negative way as being non-scientific. Fringe science is scientific inquiry in an established field of study that departs significantly from mainstream or orthodox theories, and is classified in the “fringes” of a credible mainstream academic discipline. Fringe science covers everything from novel hypotheses that can be tested via scientific method to wild ad hoc theories and “New Age mumbo jumbo” with the dominance of the latter resulting in the tendency to dismiss all fringe science as the domain of pseudoscientists, hobbyists, or quacks. Other terms used for the portions of fringe science that lack scientific integrity are pathological science, voodoo science, and cargo cult science. Michael W. Friedlander suggests some guidelines for responding to fringe science, which he argues is a more difficult problem to handle, “at least procedurally,” than scientific misconduct. His suggested methods include impeccable accuracy, checking cited sources, not overstating orthodox science, thorough understanding of the Wegener continental drift example, examples of orthodox science investigating radical proposals, and prepared examples of errors from fringe scientists. A particular concept that was once accepted by the mainstream scientific community can become fringe science because of a later evaluation of previously supportive research. For example the idea that focal infections of the tonsils or teeth were a primary cause of systemic disease was once considered medical fact, but is now dismissed for lack of evidence. Conversely, fringe science can include novel proposals and interpretations that initially have only a few supporters and much opposition. 
Some theories which were developed on the fringes (for example, continental drift, existence of Troy, heliocentrism, the Norse colonization of the Americas, and Big Bang Theory) have become mainstream because of the discovery of supportive evidence.

_

Junk science:

Junk science is a term typically used in the political arena to describe ideas for which proponents claim scientific backing erroneously, dubiously or even fraudulently, often for political reasons. In the United States, the term is applied to any scientific data, research, or analysis considered to be spurious or fraudulent. The concept is often invoked in political and legal contexts where facts and scientific results carry a great deal of weight in making a determination. It usually conveys a pejorative connotation that the research has been improperly driven by political, ideological, financial, or otherwise unscientific motives. Junk science is the promotion of a finding as “scientific” or “unscientific” based mainly upon whether its conclusions support the answers (or views) favored by the promoters. Junk science consists of giving poorly done scientific work the same authority as work that conforms to the scientific method. It is akin to politicized science, i.e., the selective use of scientific evidence to reach predetermined conclusions and support extra-scientific political goals. The concept was first invoked in relation to expert testimony in civil litigation. More recently, invoking the concept has been a tactic to criticize research on the harmful environmental or public health effects of corporate activities, and occasionally in response to such criticism. In these contexts, junk science is counterposed to the “sound science” or “solid science” that favors one’s own point of view. In some cases, junk science may result from a misinterpretation of previous sound scientific studies.

_

Pathological science:

Pathological science refers to research on barely detectable phenomena that are nonetheless reported as having been carefully studied. It is interesting that this is an example of circular referencing. Irving Langmuir coined the term. Although he won the 1932 Nobel Prize in Chemistry and probably ran into a good deal of bad science, he is also a darling of the pseudoskeptics. Langmuir’s symptoms of pathological science are:

- The maximum effect that is observed is produced by a causative agent of barely detectable intensity, and the magnitude of the effect is substantially independent of the intensity of the cause.

- The effect is of a magnitude that remains close to the limit of detectability; or, many measurements are necessary because of the very low statistical significance of the results.

- Claims of great accuracy.

- Fantastic theories contrary to experience.

- Criticisms are met by ad hoc excuses thought up on the spur of the moment.

- Ratio of supporters to critics rises up to somewhere near 50% and then falls gradually to oblivion.

_

N-rays as pathological science:

Langmuir discussed N-rays as an example of pathological science, one that is universally regarded as pathological. The discoverer, René-Prosper Blondlot, was working on X-rays (as were many physicists of the era) and noticed a new visible radiation that could penetrate aluminium. He devised experiments in which a barely visible object was illuminated by these N-rays and thus became considerably “more visible”. After a time, another physicist, Robert W. Wood, decided to visit Blondlot’s lab, where Blondlot had since moved on to the physical characterization of N-rays. In the experiment, the rays passed from a 2 mm slit through an aluminium prism, and Blondlot was measuring the index of refraction to a precision that required measurements accurate to within 0.01 mm. Wood asked how it was possible to measure something to 0.01 mm from a 2 mm source, a physical impossibility in the propagation of any kind of wave. Blondlot replied, “That’s one of the fascinating things about the N-rays. They don’t follow the ordinary laws of science that you ordinarily think of.” Wood then asked to see the experiments being run as usual, which took place in a room required to be very dark so the target was barely visible. Blondlot repeated his most recent experiments and got the same results, despite the fact that Wood had reached over and covertly removed the prism.

_

Pseudomathematics:

Pseudomathematics is a form of mathematics-like activity undertaken by many non-mathematicians – and occasionally by mathematicians themselves. The efforts of pseudomathematicians divide into three categories:

- attempting apparently simple classical problems long proved impossible by mainstream mathematics; trying metaphorically or (quite often) literally to square the circle

- generating whole new theories of mathematics or logic from scratch

- attempting hard problems in mathematics (the Goldbach conjecture comes to mind) using only high-school mathematical knowledge

____________

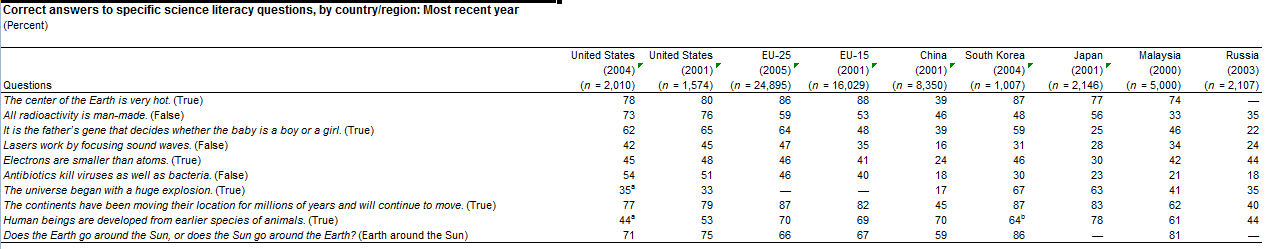

Scientific literacy:

Scientific literacy is defined as knowing basic facts and concepts about science and having an understanding of how science works. It is important to have some knowledge of basic scientific facts, concepts, and vocabulary. Those who possess such knowledge are able to follow science news and participate in public discourse on science-related issues. An appreciation of the scientific process may be even more important. Knowing how science works, i.e., understanding how ideas are investigated and either accepted or rejected, is valuable not only in keeping up with important science-related issues and participating meaningfully in the political process, but also in evaluating the validity of the various claims people encounter on a daily basis, including pseudoscientific ones (Maienschein 1999). Surveys conducted in the United States and other countries reveal that most citizens do not have a firm grasp of basic scientific facts and concepts, nor do they understand the scientific process. In addition, belief in pseudoscience seems to be widespread, not only in the United States but in other countries as well. A substantial number of people throughout the world appear to be unable to answer simple, science-related questions, as seen in the table below. Many did not know the correct answers to several (mostly) true/false questions designed to test their basic knowledge of science.

_

_

A recent study of 20 years of survey data collected by NSF concluded that “many Americans accept pseudoscientific beliefs,” such as astrology, lucky numbers, the existence of unidentified flying objects (UFOs), extrasensory perception (ESP), and magnetic therapy (Losh et al. 2003). Such beliefs indicate a lack of understanding of how science works and how evidence is investigated and subsequently determined to be either valid or not. Scientists, educators, and others are concerned that people have not acquired the critical thinking skills they need to distinguish fact from fiction. The science community and those whose job it is to communicate information about science to the public have been particularly concerned about the public’s susceptibility to unproven claims that could adversely affect their health, safety, and pocketbooks (NIST 2002).

_

In 1998, Rüdiger C. Laugksch, in his essay “Scientific Literacy”, described a broad definition of scientific literacy consisting of several dimensions:

- The scientifically literate person understands the nature of scientific knowledge.

- The scientifically literate person accurately applies appropriate science concepts, principles, laws and theories in interacting with his universe.

- The scientifically literate person uses processes of science in solving problems, making decisions and furthering his own understanding of the universe.

- The scientifically literate person interacts with the various aspects of his universe in a way that is consistent with the values that underlie science.

- The scientifically literate person understands and appreciates the joint enterprises of science and technology and the interrelationship of these with each other and with other aspects of society.

- The scientifically literate person has developed a richer, more satisfying, and more exciting view of the universe.

_

Scientific literacy can be defined in several ways. One way includes the following elements:

1. The ability to think critically;

2. The ability to use evidential reasoning to draw conclusions; and

3. The ability to evaluate scientific authority.

It does not necessarily mean the accumulation of scientific facts, although a certain basic factual knowledge seems imperative. Critical thinking can be learned by almost anyone. Although it is important in scientific reasoning, it is even more important in our daily lives, for a clear understanding of our surroundings and problems makes life more enjoyable and safer. All people make assumptions, all hold biases based on previous experience, and all have emotions. These interfere with our interpretation of the world around us and with how we solve our problems, and they must be set aside in order to evaluate information and problems. As a matter of self-defense against those who would victimize us with hoaxes, frauds, flaky schemes, or do us physical harm, critical thinking is essential.

_

The Organization for Economic Co-operation and Development’s (OECD) Program for International Student Assessment (PISA) defines scientific literacy as: “the capacity to use scientific knowledge, to identify questions and to draw evidence-based conclusions in order to understand and help make decisions about the natural world and the changes made to it through human activity.”

_

Ninety-five percent of Americans are scientifically illiterate, according to a worried Carl Sagan (1996). That means that about 197 million people in the United States cannot understand how science works, what evidential reasoning involves, or whose opinions to trust. Only about 10 million people can; most of these are professional scientists, engineers or technicians. Scientific illiteracy contributes to an anti-science mentality that threatens our very existence (Ehrlich and Ehrlich, 1996). This “prescription for disaster” may well have lasting consequences for the world. Science is ubiquitous, and many of our decisions, public and personal, depend on an understanding of it. We cannot tolerate widespread scientific illiteracy. Scientific illiteracy is a worldwide problem. Worldwide data are not available, but if the American rate of about 95% is taken as a minimum for the rest of the world, then billions of people are unable to understand a large portion of what affects them. If science is important to America, it is just as important, if not more so, to much of the rest of the world. Population growth, environmental deterioration, biodiversity decline, the greenhouse effect, health problems, food and air quality, natural hazards, and a multitude of other science-related problems face these countries in larger measure than they face the United States, and the consequences for them are significantly greater. Pesticides, toxic chemicals, tobacco, and false health remedies are foisted on the rest of the world in larger amounts than in the U.S. because of less stringent rules and regulations and less public understanding. With a knowledgeable population, countries around the world could deal with these problems more effectively. Many of the problems naturally cross political boundaries and so threaten even countries with some scientific literacy, if not the entire world.
The table below gives the number of people (in millions) over the age of 15 in the world who will be scientifically illiterate or literate, based on mid-year population projections (McDevitt, 1996) and assuming an illiteracy rate identical to that estimated for the United States. This assumed rate is undoubtedly too low for most of the world, and it may rise over time because growing populations in most countries will tax their educational systems even more than they do now.

| Year and total world population | Scientifically illiterate (95%), over age 15 | Scientifically literate (5%), over age 15 |
|---|---|---|
| 1998: 5771 million | 3700+ million | 199+ million |
| 2000: 6090 million | 4000+ million | 213+ million |
| 2010: 6861 million | 4700+ million | 250+ million |
| 2020: 7599 million | 5400+ million | 285+ million |
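The figures above rest on simple percentage arithmetic. Below is a minimal Python sketch of it, assuming a U.S. adult base of 207 million (the sum of the 197 and 10 million figures quoted; the source does not state the base directly). It also checks that each table row keeps the 95:5 split it claims. The helper name `split_population` is hypothetical.

```python
# Sketch of the arithmetic behind the literacy figures.
# Assumption: the U.S. base is 197 + 10 = 207 million (inferred, not stated).

ILLITERATE_RATE = 0.95  # rate attributed to Carl Sagan (1996)


def split_population(total_millions: float, rate: float = ILLITERATE_RATE) -> tuple[float, float]:
    """Return (illiterate, literate) counts in millions for a given rate."""
    illiterate = total_millions * rate
    return illiterate, total_millions - illiterate


us_illiterate, us_literate = split_population(207)
print(f"U.S.: {us_illiterate:.0f} million illiterate, {us_literate:.0f} million literate")

# Table rows as (illiterate, literate) lower bounds in millions, over age 15.
table = {1998: (3700, 199), 2000: (4000, 213), 2010: (4700, 250), 2020: (5400, 285)}
for year, (illiterate, literate) in table.items():
    share = illiterate / (illiterate + literate)  # implied illiterate share of the over-15 base
    print(f"{year}: over-15 base ~{illiterate + literate} million, illiterate share {share:.1%}")
```

Running it reproduces the 197 and 10 million figures and shows every table row at roughly 95% illiterate; the shares are approximate because the “+” values are lower bounds.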

_

Students who are scientifically literate:

- Know and understand the scientific concepts and processes required for participation in society

- Ask, find, or determine answers to questions derived from curiosity about their world

- Describe, explain, and predict natural phenomena

- Read with understanding science articles in the popular press and engage in social conversation about the validity of the conclusions

- Identify scientific issues underlying national and local decisions

- Express positions that are scientifically and technologically informed

- Evaluate the quality of scientific information on the basis of its source and the methods used to generate it

- Pose and evaluate arguments based on evidence and apply conclusions from such arguments appropriately

_

The extent to which students acquire a range of social and cognitive thinking skills related to the proper use of science and technology determines whether they are scientifically literate. Science education faces new dimensions with the changing landscape of science and technology, a fast-changing culture, and a knowledge-driven era. The school science curriculum needs to be reinvented so that it prepares students to contend with the changing influence of science and technology on human welfare. Scientific literacy, which allows a person to distinguish science from pseudoscience such as astrology, is among the attributes that enable students to adapt to a changing world. Its characteristics are embedded in a curriculum in which students are engaged in solving problems, conducting investigations, or developing projects.

_

At a symposium on “Science Literacy and Pseudoscience”, it was revealed that people in the U.S. know more about basic science today than they did two decades ago, good news that researchers say is tempered by an unsettling growth in belief in pseudoscience such as astrology and visits by extraterrestrial aliens. So, science literacy is clearly increasing (from 10 to 28% according to one measure), but at the same time pseudoscientific beliefs are also increasing. It strikes me that this may be a problem for educators: they might be teaching students (and thus the public) scientific facts but not teaching them how to think scientifically.

_

The relationship between child’s play and scientific exploration:

Laura Schulz finds that babies learning about the world have much in common with scientists. Laura Schulz, an associate professor of brain and cognitive sciences at MIT, has always been interested in learning and education. Schulz has devoted her academic career to investigating how learning takes place during early childhood. Starting in infancy, children are quickly able to learn a great deal about how the world works, based on a very limited amount of evidence. Schulz’s research, much of which she does at a “Play Lab” at Boston Children’s Museum, reveals that children, and even babies, inherently use many of the same strategies employed in the scientific method — a systematic process of forming hypotheses and testing them based on observed evidence. “All of these abilities that we think of as scientific abilities emerged because of the hardest problem of early childhood learning, which is how to get accurate abstract representations from sparse, noisy data,” she says. Schulz became interested not only in how children learn from observed evidence, but also how they generate evidence through exploration. She has found that many of the components of the scientific method — isolating variables, recognizing when evidence is confounded, positing unobserved variables to explain novel events — are in fact core to children’s early cognition. In one recent study, she investigated infants’ abilities to determine, from very sparse evidence, the properties of sets of objects. In the study, babies watched as an experimenter pulled a series of three balls, all blue, from a box of balls. Each ball squeaked when the experimenter squeezed it. The babies were then handed a yellow ball from the box. When most of the balls in the box were blue, the babies squeezed the yellow ball, suggesting that they generalized the squeaking to the entire box of balls. 
However, if the balls in the box were mostly yellow, the babies were much less likely to try to squeeze them, showing they believed that the blue, squeaking balls were a rare exception. Another recent experiment explored the value of offering lessons versus allowing children to explore on their own. In that study, Schulz found that children who were shown how to make a toy squeak were less likely to discover the toy’s other features than children who were simply given the toy with no instruction. “There’s a tradeoff of instruction versus exploration,” she says. “If I instruct you more, you will explore less, because you assume that if other things were true, I would have demonstrated them.” Although Schulz hopes that someday her work will lead to development of new education strategies, that is more of a long-term goal.
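One way to make the logic of the ball experiment concrete, offered here as an illustration rather than as Schulz’s own model, is to ask how likely three blue balls in a row would be if the experimenter were picking at random. The box proportions (75% versus 25% blue) and the assumption of random draws with replacement are hypothetical; the function name `prob_all_blue` is likewise made up for this sketch.

```python
from fractions import Fraction


def prob_all_blue(blue_fraction: Fraction, draws: int = 3) -> Fraction:
    """Chance that `draws` balls picked at random (with replacement) are all blue."""
    return blue_fraction ** draws


mostly_blue = prob_all_blue(Fraction(3, 4))    # hypothetical box: 75% blue
mostly_yellow = prob_all_blue(Fraction(1, 4))  # hypothetical box: 25% blue
print(f"mostly blue box:   {float(mostly_blue):.3f}")    # → 0.422
print(f"mostly yellow box: {float(mostly_yellow):.3f}")  # → 0.016
```

From a mostly blue box, three blue balls are an unremarkable random sample, so it is reasonable to generalize the squeaking to the whole box. From a mostly yellow box, the same sample would arise by chance less than 2% of the time, which hints that the squeaky blue balls were picked deliberately and may be exceptions.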

_

If every child uses the scientific method to learn, why is scientific literacy so poor in the population? Obviously, the natural scientific instinct is suppressed by parents, schools, religion, culture, peers, media and neighborhood. Schools always coerce children to memorize rather than understand. Religion always coerces children to follow faith without questioning. Parents always coerce children to follow their viewpoint of life without questioning, and so on.

_________

Coincidence, miracle and science:

I quote from my article ‘The coincidence’, posted on my Facebook page in 2010:

The English dictionary defines a coincidence as a sequence of events that occurs accidentally but seems to have been planned or arranged. I define a coincidence as the occurrence of two or more events together when there is no logical reason for their togetherness. For example, you are speeding your car toward a traffic signal when the green light suddenly turns red, and you apply the brakes. Your car’s movement and the change in the signal are a coincidence. Coincidence is the basis of all miracles and of the development of real science. I will explain. Various god men and god women perform miracles. For example, a sick devotee becomes healthy after the touch of a God-man. Actually, the devotee may have a psychosomatic or self-limiting illness, but the credit goes to the God-man. It is a coincidence and not a miracle. Isaac Newton saw an apple falling from a tree and enunciated the law of gravitation. For thousands of years, many people had seen apples falling from trees and considered it a coincidence. Newton asked why the apple falls down rather than rising into the sky. When a coincidence is repeated and has a logical explanation, it becomes a scientific law. The distinction between correlation and causality will be discussed later on.

_________

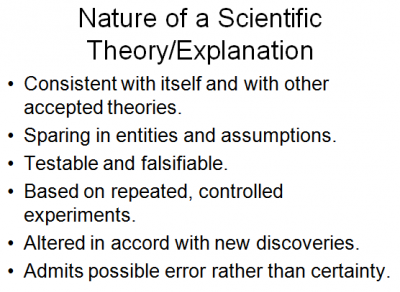

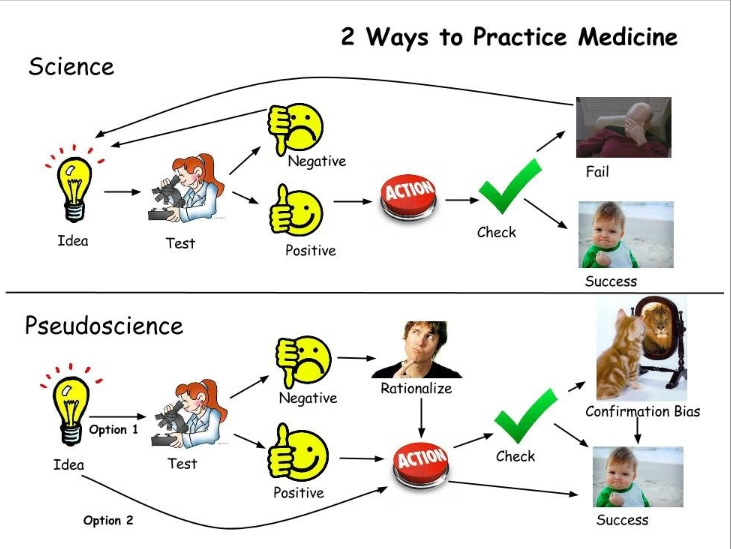

Good science:

“Good science” is usually described as dependent upon qualities such as falsifiable hypotheses, replication, verification, peer review and publication, general acceptance, consensus, collectivism, universalism, organized skepticism, neutrality, experiment/empiricism, objectivity, dispassionate observation, naturalistic explanation, and use of the scientific method. A good example of this tendency is provided by Loevinger: “While there are innumerable specialized fields in science today, and while knowledge in one field does not necessarily transfer to another field, there are, nevertheless, general standards applicable to all fields of science that distinguish genuine science from pseudo-science and quack science.” On this view, scientists are in possession of a simple, identifiable, universal scientific method which guides activity and can be employed in practical contexts to distinguish “good science” from “junk science.”

_

The norms of science are seen as prescribing that scientists should be detached, uncommitted, impersonal, self-critical, and open-minded in their attempts to gather and interpret objective evidence about the natural world. It is assumed that considerable conformity to these norms is maintained; and the institutionalization of these norms is seen as accounting for that rapid accumulation of reliable knowledge which has been the unique achievement of the modern scientific community.

_

James Lett (in Ruscio) described six characteristics of scientific reasoning: falsifiability, logic, comprehensiveness, honesty, replicability, and sufficiency. Falsifiability means that it must be possible, at least in principle, to disprove a hypothesis. Logic dictates that the premises must be sound and that the conclusion must follow validly from them. Comprehensiveness means that a claim must account for all the pertinent data, not just some of it. Honesty means that all claims must be truthful and not deceptive. Replicability is the idea that similar results can be obtained by other researchers in other labs using similar methods. For this to have any meaning, the methods must also be transparent; in other words, the method used to obtain the results must be described in detail. Finally, sufficiency means that all claims must be backed by sufficient evidence. Any study that does not meet all of these criteria is not scientifically sound.
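The “all six or nothing” rule in the last sentence can be written as a simple checklist. A minimal Python sketch: the class name `ClaimAssessment` and the example judgments are hypothetical, and deciding whether each criterion is met remains a human judgment that the code cannot make.

```python
from dataclasses import dataclass, fields


@dataclass
class ClaimAssessment:
    """Hypothetical checklist: one reader judgment per criterion from Lett."""
    falsifiable: bool
    logical: bool
    comprehensive: bool
    honest: bool
    replicable: bool
    sufficient: bool

    def failed_criteria(self) -> list[str]:
        """Names of the criteria judged not met, in the order Lett lists them."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_sound(self) -> bool:
        """Sound only if every one of the six criteria is met."""
        return not self.failed_criteria()


# Example judgments for an imagined claim (illustrative only).
claim = ClaimAssessment(falsifiable=True, logical=True, comprehensive=False,
                        honest=True, replicable=False, sufficient=True)
print(claim.is_sound(), claim.failed_criteria())
# → False ['comprehensive', 'replicable']
```

A single failed criterion is enough to make `is_sound()` return `False`, which mirrors the text’s point that meeting five of the six is not good enough.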

_

Real science is hard. You don’t get to be an expert in biochemistry, astrophysics, immunology, multivariate mathematics, or any other natural science just by surfing the internet for a few hours or days. Real science means sitting in dozens of classes over years, absorbing and understanding the decades of research that preceded you. It means learning how to be critical, not for criticism’s sake, but to find a new idea that might blossom into the next best thing in science. It means spending years of your life studying a small idea. It means being smarter than almost anyone else you know. It means writing better than any of your friends or pals. It means late nights and early mornings. It means standing up to the criticism of your peers and of the leaders in your field. And if you put in the really hard work that it takes to become a scientific expert, then your ideas will be given due weight. And maybe you’ll change things.

___________

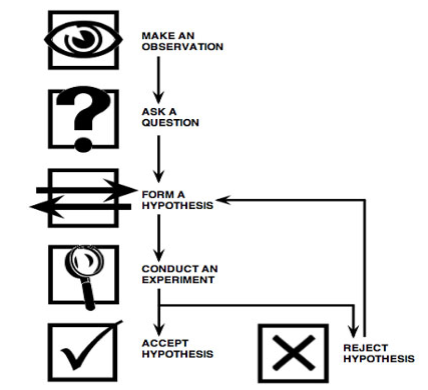

Scientific method:

The real purpose of the scientific method is to make sure nature hasn’t misled you into thinking you know something you actually don’t know.

Robert Pirsig

_

The scientific method grew out of advances in the sixteenth century. Early thinkers such as Copernicus, Paracelsus and Vesalius departed from dogma and helped launch the Scientific Revolution. Sir Francis Bacon, in 1620, popularized and promoted what later became the scientific method. A popular simplification holds that a hypothesis, if confirmed, is elevated to a theory, which, if confirmed, is elevated to a law; in practice, laws describe regularities and theories explain them, so a well-confirmed theory does not turn into a law. Under the scientific method, the burden of proof is on the party advancing the hypothesis, not on the reader. In fact, “proving the negative” (showing that something does not exist anywhere) is generally impossible.

_

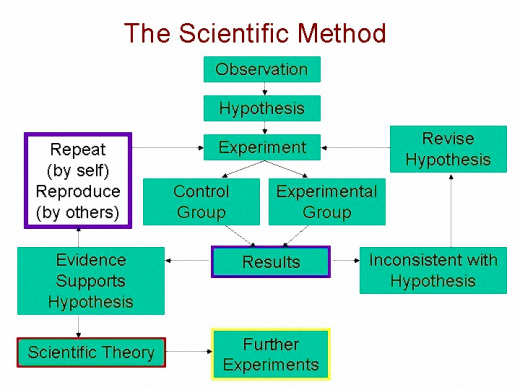

The scientific method is a body of techniques for investigating phenomena, acquiring new knowledge, or correcting and integrating previous knowledge. To be termed scientific, a method of inquiry must be based on empirical and measurable evidence subject to specific principles of reasoning. Science is basically the organized inquiry into the nature of reality. In its simplest form, it is observation of nature leading to a hypothesis describing what is observed. This in turn leads to predictions about the behavior of what has been observed. For science to be practiced, these predictions must be testable, and the results of those tests must be used to modify the hypotheses so that they better describe what was observed in nature. Science is all about raising questions and doing experiments to figure out the answers. Science is not just information and definitions. Following the scientific method, we observe, raise questions, and make hypotheses about how and why things are the way they are observed to be. We make theories to explain the world around us. We make predictions based on our theories. We design experiments to check the validity of these hypotheses. After carrying out the experiments, we analyze the results and draw conclusions. In the process of doing the experiments, new questions and problems arise. We may have to modify our hypotheses and theories. We then design new experiments in the continuing process of scientific enquiry. It is this process of discovery that makes science exciting and meaningful. The scientific method is not just a highly sophisticated process that can only be undertaken after years of formal study, foreign degrees, or post-doctoral experience. Take a look at the way young children learn and you can see that they often follow a scientific method, although usually without being fully aware of the process they are following. It does not matter to the scientific method what the answer turns out to be.
You cannot dictate your preferred answers to the scientific method. That’s dishonest and manipulative. You must start with the question; make a hypothesis and then end up with a convincing answer! You cannot start with the answer first! Nor can you object to the facts and observations that the scientific method started from.

_

The chief characteristic that distinguishes the scientific method from other methods of acquiring knowledge is that scientists seek to let reality speak for itself, supporting a theory when its predictions are confirmed and challenging it when its predictions prove false. Although procedures vary from one field of inquiry to another, identifiable features distinguish scientific inquiry from other methods of obtaining knowledge. Scientific researchers propose hypotheses as explanations of phenomena and design experimental studies to test these hypotheses via predictions that can be derived from them. These steps must be repeatable, to guard against mistake or confusion by any particular experimenter. Theories that encompass wider domains of inquiry may bind many independently derived hypotheses together in a coherent, supportive structure. Theories, in turn, may help form new hypotheses or place groups of hypotheses into context. Scientific inquiry is generally intended to be as objective as possible in order to reduce biased interpretations of results. Another basic expectation is to document, archive and share all data and methodology so they are available for careful scrutiny by other scientists, giving them the opportunity to verify results by attempting to reproduce them. This practice, called full disclosure, also allows statistical measures of the reliability of these data to be established (when data are sampled or compared to chance).
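Comparing data “to chance” can be made concrete with a permutation test, one common technique (among several) for asking how often a difference at least as large as the observed one would arise if the group labels were meaningless. A minimal Python sketch; the germ counts below are hypothetical numbers invented for illustration, not data from any study, and the function name `permutation_p_value` is made up here.

```python
from itertools import combinations


def permutation_p_value(group_a: list[float], group_b: list[float]) -> float:
    """Exact two-sided permutation test on the difference in group means.

    Enumerates every way of relabelling the pooled values into groups of the
    original sizes and returns the fraction of relabellings whose absolute
    mean difference is at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    def mean_gap(a: list[float], b: list[float]) -> float:
        return abs(sum(b) / len(b) - sum(a) / len(a))

    observed = mean_gap(group_a, group_b)
    extreme = total = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding in the means.
        if mean_gap(a, b) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical germ counts after two treatments (illustrative only).
treatment_a = [12, 15, 11, 14]
treatment_b = [22, 25, 21, 24]
print(permutation_p_value(treatment_a, treatment_b))
```

With these made-up numbers, only 2 of the 70 possible relabellings are as extreme as the observed split, giving p ≈ 0.029. A small p-value says chance alone rarely produces such a gap; it does not by itself prove the hypothesis, and exhaustive enumeration is only practical for small samples.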

_

Science begins with observation. A hypothesis is devised to explain observations. The usefulness of a hypothesis is directly related to its ability to make testable predictions. If a hypothesis is consistent with existing observations and makes useful predictions that are confirmed by additional observation, it may be considered conditionally correct. If it does not match observations, then it must be either modified or abandoned. If it makes no useful predictions, then it must be abandoned as well, because it is useless and has no explanatory power. This is, of course, a description of science in an ideal world. In the real world, science is done by human beings. Humans often have ulterior motives, be it a theological agenda, a political agenda, or just plain old-fashioned egotism. These agendas can skew scientific observations and hypotheses. Observations may also be faulty due to simple incompetence. This is why science requires a second basic foundation in addition to observation: peer review. Any scientific observation or hypothesis that has not been subjected to peer review should be considered incomplete. Of course, even with peer review, there is often a lot of room for debate on many questions, particularly when the subject is complex and the available observational evidence is limited. In the case of global warming, for instance, the fact that Earth’s climate has trended upward over the last century or so is pretty much beyond dispute. The question of why is still open to a lot of legitimate debate. Climate is a very complicated subject, and distinguishing a significant trend from normal cycles is not easy, given that climate cycles may extend considerably further in time than reliable observations.
Such debates can leave those of us not directly involved in science (or even those involved in science, when the question lies outside their area of expertise) scratching our heads with no clue as to who is correct. There is no certain cure for this problem, but there are some things you can do to ameliorate the situation. First, educate yourself as much as possible on the subjects that interest you. The more you know, the better you will be able to separate the wheat from the chaff. Second, carefully examine the motives of proponents of any non-mainstream hypothesis. Are they trying to explain observations not adequately explained by existing theories, or are they pushing an unrelated agenda? Next, apply the “evidence” test. Do they have evidence to support their theory, or is their evidence primarily just an attack on the evidence used to support another theory? Remember, disproof of an alternative theory is not proof of your own theory. As an exercise, visit any young-earth creationist website. How much of it is evidence for a young earth, and how much is just attacks on evolution and other branches of mainstream science? Be sure to check for unverifiable assertions (Darwin renounced evolution on his deathbed) and logical fallacies: ad hominems (evolutionists are godless atheists), slippery-slope arguments (evolution theory leads to Marxism and Nazism), arguments from authority (Isaac Newton believed in Creation), etc. If it passes the “motive” test and the “evidence” test, then it is time to apply the “usefulness” test. Does it make testable predictions? Can it be falsified? As an exercise, ask Dunash what testable predictions Geocentrism makes and what possible observations would convince him that Geocentrism is wrong. Finally, when the “new” or “alternative” theory challenges the mainstream theory, remember that the burden of proof always lies with the upstart.
Mainstream theories become mainstream because they survive years or even centuries of close scrutiny and testing. They have proven their worth. In science, all theories are provisional, but some are more provisional than others.
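The decision rules for judging a hypothesis, set out at the start of this discussion, can be written as a small function. A minimal Python sketch: the names `evaluate_hypothesis` and `Verdict` are hypothetical, the order of the checks is a choice made here, and the `KEEP_TESTING` outcome is an assumption covering a case the text leaves open (consistent with observations, but predictions not yet confirmed).

```python
from enum import Enum


class Verdict(Enum):
    CONDITIONALLY_CORRECT = "conditionally correct"
    MODIFY_OR_ABANDON = "modify or abandon"
    ABANDON = "abandon: no explanatory power"
    KEEP_TESTING = "keep testing"  # assumption: a case the text leaves open


def evaluate_hypothesis(makes_testable_predictions: bool,
                        consistent_with_observations: bool,
                        predictions_confirmed: bool) -> Verdict:
    """Apply the rules from the text; the order of the checks is a choice made here."""
    if not makes_testable_predictions:
        return Verdict.ABANDON            # useless: no explanatory power
    if not consistent_with_observations:
        return Verdict.MODIFY_OR_ABANDON  # contradicted by what we observe
    if predictions_confirmed:
        return Verdict.CONDITIONALLY_CORRECT
    return Verdict.KEEP_TESTING           # consistent, but predictions untested so far


print(evaluate_hypothesis(True, True, True).value)   # → conditionally correct
print(evaluate_hypothesis(True, False, True).value)  # → modify or abandon
```

Note that even the best outcome is only “conditionally correct”: a later observation can still move a hypothesis into the “modify or abandon” branch, which is the sense in which all theories are provisional.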

_

Scientific Method Steps in a nutshell:

Science follows certain rules and guidelines based upon the scientific method which says that you must:

Observe the phenomenon

Ask a question

Do some background research

Construct a hypothesis

Test your hypothesis by doing an experiment

Analyze the data from the experiment and draw a conclusion regarding the hypothesis that you tested

If your experiment shows that your hypothesis is false, think some more and go back to further research.

If your experiment supports your hypothesis, communicate your results.
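The test, analyze and conclude steps above can be sketched as a loop that runs each candidate hypothesis against a set of experiments and rejects any hypothesis that a single result contradicts. A toy Python sketch: the question (“why do some things float?”), the objects, temperatures and outcomes are illustrative, densities are approximate textbook values, and the names `OBSERVATIONS`, `HYPOTHESES` and `run_experiments` are hypothetical.

```python
# Hypothetical experiments: (object, density in g/cm^3, temperature in deg C, floats in water?)
OBSERVATIONS = [
    ("ice cube", 0.917, -5, True),
    ("cold steel bolt", 7.85, -5, False),
    ("warm candle wax", 0.90, 40, True),
    ("room-temperature pebble", 2.6, 20, False),
]

# Candidate hypotheses, each a rule predicting whether an object floats.
HYPOTHESES = {
    "cold things float": lambda density, temp: temp < 0,
    "things less dense than water float": lambda density, temp: density < 1.0,
}


def run_experiments(predict) -> list[str]:
    """Run every experiment and return the objects whose outcome the rule gets wrong."""
    return [name for name, density, temp, floats in OBSERVATIONS
            if predict(density, temp) != floats]


for label, rule in HYPOTHESES.items():
    failures = run_experiments(rule)
    if failures:
        # Falsified: back to further research with a new or modified hypothesis.
        print(f"REJECTED  {label!r}: contradicted by {failures}")
    else:
        # Survived these tests: report it, but only provisionally.
        print(f"SUPPORTED {label!r} (provisionally; further tests could still falsify it)")
```

Here the temperature rule is rejected by the cold steel bolt and the warm wax, while the density rule survives. Surviving four experiments makes it supported, not proven.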

_

There is no one way to “do” science; different sources describe the steps of the scientific method in different ways. Fundamentally, however, they incorporate the same concepts and principles.

Step 1: Make an observation:

Almost all scientific inquiry begins with an observation that piques curiosity or raises a question. For example, when Charles Darwin (1809-1882) visited the Galapagos Islands (located in the Pacific Ocean, 950 kilometers west of Ecuador), he observed several species of finches, each uniquely adapted to a very specific habitat. In particular, the beaks of the finches were quite variable and seemed to play important roles in how the birds obtained food. These birds captivated Darwin. He wanted to understand the forces that allowed so many different varieties of finch to coexist successfully in such a small geographic area. His observations caused him to wonder, and his wonderment led him to ask a question that could be tested.

Step 2: Ask a question:

The purpose of the question is to narrow the focus of the inquiry, to identify the problem in specific terms. The question Darwin might have asked after seeing so many different finches was something like this: What caused the diversification of finches on the Galapagos Islands?

Here are some other scientific questions:

What causes the roots of a plant to grow downward and the stem to grow upward?

What brand of mouthwash kills the most germs?

Which car body shape reduces air resistance most effectively?

What causes coral bleaching?

Does green tea reduce the effects of oxidation?

What type of building material absorbs the most sound?

Coming up with scientific questions isn’t difficult and doesn’t require training as a scientist. If you’ve ever been curious about something, if you’ve ever wanted to know what caused something to happen, then you’ve probably already asked a question that could launch a scientific investigation.

Step 3: Formulate a hypothesis:

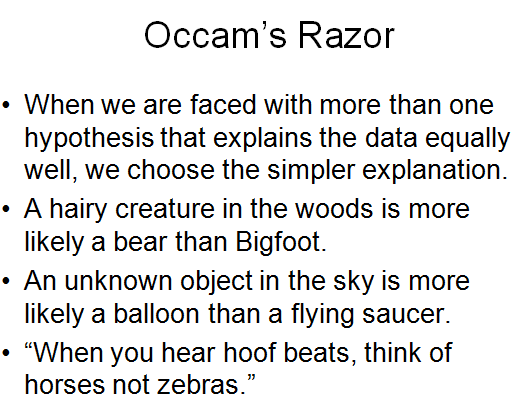

The great thing about a question is that it yearns for an answer, and the next step in the scientific method is to suggest a possible answer in the form of a hypothesis. A hypothesis is often defined as an educated guess because it is almost always informed by what you already know about a topic. For example, if you wanted to study the air-resistance problem stated above, you might already have an intuitive sense that a car shaped like a bird would reduce air resistance more effectively than a car shaped like a box. You could use that intuition to help formulate your hypothesis. Generally, a hypothesis is stated as an “if … then” statement. In making such a statement, scientists engage in deductive reasoning, which is the opposite of inductive reasoning. Deduction requires movement in logic from the general to the specific. Here’s an example: If a car’s body profile is related to the amount of air resistance it produces (general statement), then a car designed like the body of a bird will be more aerodynamic and reduce air resistance more than a car designed like a box (specific statement). Notice that there are two important qualities about a hypothesis expressed as an “if … then” statement. First, it is testable; an experiment could be set up to test the validity of the statement. Second, it is falsifiable; an experiment could be devised that might reveal that such an idea is not true. If these two qualities are not met, then the question being asked cannot be addressed using the scientific method.
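The deductive step in the car example can be made concrete with the standard aerodynamic drag equation, F = ½ρv²C_dA, in which the body shape enters through the drag coefficient C_d. The sketch below is an illustration under assumptions: the coefficients `CD_BOX` and `CD_BIRDLIKE`, the 2.2 m² frontal area and the 30 m/s speed are hypothetical values, not measurements. It derives the specific prediction from the general statement and also spells out which experimental result would falsify it.

```python
AIR_DENSITY = 1.225  # kg/m^3, approximate sea-level value

# Hypothetical drag coefficients, chosen only for illustration.
CD_BOX = 0.8
CD_BIRDLIKE = 0.3


def drag_force(cd: float, frontal_area: float, speed: float, rho: float = AIR_DENSITY) -> float:
    """General statement: drag F = 0.5 * rho * v^2 * Cd * A (newtons); shape enters via Cd."""
    return 0.5 * rho * speed ** 2 * cd * frontal_area


def prediction_falsified(measured_birdlike: float, measured_box: float) -> bool:
    """The 'if ... then' hypothesis is falsified if the birdlike car does NOT show less drag."""
    return measured_birdlike >= measured_box


# Specific prediction for two cars identical in everything except body shape.
predicted_box = drag_force(CD_BOX, frontal_area=2.2, speed=30.0)
predicted_birdlike = drag_force(CD_BIRDLIKE, frontal_area=2.2, speed=30.0)
print(f"predicted drag: box {predicted_box:.0f} N, birdlike {predicted_birdlike:.0f} N")
```

Because `prediction_falsified` names a concrete outcome that would contradict the hypothesis, the statement meets both qualities described above: it is testable and falsifiable.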

_

According to Schick and Vaughn, researchers weighing up alternative hypotheses may take into consideration:

Testability: whether the hypothesis can be checked against observation (compare falsifiability)

Parsimony: as in the application of “Occam’s razor”, discouraging the postulation of excessive numbers of entities

Scope: the apparent application of the hypothesis to multiple cases of phenomena

Fruitfulness: the prospect that a hypothesis may explain further phenomena in the future

Conservatism: the degree of “fit” with existing recognized knowledge-systems.
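The parsimony criterion is often applied as a tie-breaker: among hypotheses that fit the data about equally well, prefer the one that postulates the fewest entities. The sketch below encodes only that bare rule, under stated assumptions: `Candidate`, its `free_parameters` and `fit_error` fields, `prefer_parsimonious` and the tolerance are hypothetical stand-ins, and the three candidates are invented numbers, not real data. Formal model comparison usually uses an explicit penalty such as an information criterion instead.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    free_parameters: int  # entities or adjustable quantities the hypothesis postulates
    fit_error: float      # disagreement with the data (lower is better)


def prefer_parsimonious(candidates: list[Candidate], tolerance: float) -> Candidate:
    """Occam's razor as a tie-breaker: among candidates whose fit is within
    `tolerance` of the best fit, return the one with the fewest free parameters."""
    best_error = min(c.fit_error for c in candidates)
    adequate = [c for c in candidates if c.fit_error <= best_error + tolerance]
    return min(adequate, key=lambda c: c.free_parameters)


# Invented numbers for illustration only.
candidates = [
    Candidate("A", free_parameters=2, fit_error=1.02),
    Candidate("B", free_parameters=7, fit_error=1.00),
    Candidate("C", free_parameters=1, fit_error=3.50),
]
print(prefer_parsimonious(candidates, tolerance=0.05).name)
```

B fits marginally better than A but needs far more parameters, so with a small tolerance the simpler A is preferred; C is simplest of all but fits too poorly to be considered.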

_

Step 4: Conduct an experiment:

Many people think of an experiment as something that takes place in a lab. While this can be true, experiments don’t have to involve laboratory workbenches, Bunsen burners or test tubes. They do, however, have to be set up to test a specific hypothesis, and they must be controlled. Controlling an experiment means holding all other variables constant so that only a single variable is studied. The independent variable is the one that is deliberately manipulated by the experimenter; the dependent variable is the one that is measured to see how it responds. In our car example, the independent variable is the shape of the car’s body. The dependent variable, what we measure as the effect of the car’s profile, could be speed, gas mileage or a direct measure of the air resistance (drag force) acting on the car. Controlling an experiment also means setting it up so that it has a control group and an experimental group. The control group gives the experimenter a baseline against which to compare the test results, so that any difference can be attributed to the variable being tested rather than to some other factor. Now consider our air-resistance example. If we wanted to run this experiment, we would need at least two cars: one with a streamlined, birdlike shape and another shaped like a box. The former would be the experimental group, the latter the control. All other variables (the weight of the cars, the tires, even the paint on the cars) should be identical. Even the track and the conditions on the track should be controlled as much as possible.
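The control-group design described above can be mimicked in a few lines of simulation. In this sketch the drag formula F = ½ρv²C_dA, the drag coefficients (0.8 for the box, 0.3 for the birdlike car), the speed, the frontal area and the 20 N measurement noise are all assumptions chosen for illustration, and `measure_drag` and `controlled_experiment` are hypothetical names. The point is only that every controlled variable is identical in both groups and body shape is the single thing that changes.

```python
import random
from statistics import mean

# Controlled variables: identical for every run in both groups (assumed values).
SPEED = 30.0         # m/s
FRONTAL_AREA = 2.2   # m^2
AIR_DENSITY = 1.225  # kg/m^3
NOISE_SD = 20.0      # N, assumed measurement noise


def measure_drag(cd: float, rng: random.Random) -> float:
    """One simulated run: true drag 0.5*rho*v^2*Cd*A plus random measurement noise.
    Only `cd` (set by body shape, the independent variable) differs between runs."""
    true_drag = 0.5 * AIR_DENSITY * SPEED ** 2 * cd * FRONTAL_AREA
    return true_drag + rng.gauss(0.0, NOISE_SD)


def controlled_experiment(n_runs: int = 10, seed: int = 1) -> tuple[list[float], list[float]]:
    """Return measured drag (the dependent variable) for the control and experimental groups."""
    rng = random.Random(seed)
    control = [measure_drag(0.8, rng) for _ in range(n_runs)]       # box-shaped car
    experimental = [measure_drag(0.3, rng) for _ in range(n_runs)]  # birdlike car
    return control, experimental


control, experimental = controlled_experiment()
print(f"mean drag, control: {mean(control):.0f} N; experimental: {mean(experimental):.0f} N")
```

Because nothing but the drag coefficient differs between the two groups, a gap between the group means can be attributed to body shape rather than to weight, tires or track conditions.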

Step 5: Analyze data and draw a conclusion:

During an experiment, scientists collect both quantitative and qualitative data. Buried in that information, hopefully, is evidence to support or reject the hypothesis. The amount of analysis required to come to a satisfactory conclusion can vary tremendously. Sometimes a simple comparison is enough; at other times, sophisticated statistical tools are needed to tell a real effect from chance variation. Either way, the ultimate goal is to support or refute the hypothesis and, in doing so, answer the original question.
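Deciding whether a difference between groups is larger than chance variation would produce is exactly the job of such statistical tools. One simple, assumption-light option (our choice of illustration, not a method named in the text) is a permutation test: if the car's shape had no effect, the group labels would be arbitrary, so we reshuffle them many times and count how often a difference at least as large as the observed one turns up by chance. The fuel-economy figures below are invented, and `permutation_test` is a hypothetical helper.

```python
import random
from statistics import mean


def permutation_test(a: list[float], b: list[float],
                     n_permutations: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means.
    Returns an estimated p-value: the fraction of random relabelings of the pooled
    data whose mean difference is at least as large as the one actually observed."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point rounding
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Invented fuel-economy figures (km per litre), for illustration only.
control = [30.1, 29.8, 30.4, 30.0, 29.9]       # box-shaped car
experimental = [31.2, 31.5, 30.9, 31.4, 31.1]  # birdlike car
p = permutation_test(control, experimental)
print(f"estimated p-value: {p:.4f}")
```

A small estimated p-value (here well under 0.05) says the observed difference would rarely arise from reshuffling alone; it supports the hypothesis but does not by itself prove it.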

_

_

Other components of scientific method:

The scientific method also includes other components required even when all the iterations of the steps above have been completed:

Replication:

If an experiment cannot be repeated to produce the same results, this suggests that the original results may have been in error. As a result, it is common for a single experiment to be performed multiple times, especially when there are uncontrolled variables or other indications of experimental error. For significant or surprising results, other scientists may also attempt to replicate the results for themselves, especially if those results would be important to their own work.
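Why repeat an experiment at all? The sketch below, a simulation that assumes normally distributed noise and uses invented parameters (`one_experiment` and `replication_rate` are hypothetical names), runs the same two-group experiment many times and reports how often the observed effect points in the positive direction. A large true effect reproduces essentially every time; with no true effect at all, roughly half of all single experiments still show a "positive" result by chance, which is why one unreplicated result is weak evidence.

```python
import random
from statistics import mean


def one_experiment(true_effect: float, noise_sd: float, n: int, rng: random.Random) -> float:
    """Return the observed difference in means (treatment minus control) for one experiment."""
    control = [rng.gauss(0.0, noise_sd) for _ in range(n)]
    treatment = [rng.gauss(true_effect, noise_sd) for _ in range(n)]
    return mean(treatment) - mean(control)


def replication_rate(true_effect: float, noise_sd: float, n: int = 10,
                     replications: int = 200, seed: int = 42) -> float:
    """Fraction of independent repeats whose observed effect is positive."""
    rng = random.Random(seed)
    hits = sum(one_experiment(true_effect, noise_sd, n, rng) > 0 for _ in range(replications))
    return hits / replications


print("large true effect:", replication_rate(true_effect=5.0, noise_sd=1.0))
print("no true effect:   ", replication_rate(true_effect=0.0, noise_sd=1.0))
```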

External review: Peer review evaluation:

The process of peer review involves evaluation of the experiment by experts, who give their opinions anonymously so that they can offer unbiased criticism. It does not certify that the results are correct, only that the experiments themselves were sound (based on the description supplied by the experimenter). If the work passes peer review, which may require new experiments requested by the reviewers, it will be published in a peer-reviewed scientific journal. The specific journal that publishes the results indicates the perceived quality of the work. In practice, journal editors send a submitted manuscript to (usually one to three) fellow scientists familiar with the field, who are usually anonymous, for evaluation. The referees may recommend rejection, publication as submitted, publication with suggested modifications or, sometimes, publication in another journal. This process is meant to keep the scientific literature free of unscientific or pseudoscientific work, to help cut down on obvious errors, and generally to improve the quality of the material. Peer review has limitations when research falls outside the conventional scientific paradigm: problems of “groupthink” can interfere with open and fair deliberation of some new research.

Data recording and sharing:

Scientists must record all data very precisely in order to reduce their own bias and aid in replication by others, a requirement first promoted by Ludwik Fleck (1896–1961) and others. They must supply this data to other scientists who wish to replicate any results, extending to the sharing of any experimental samples that may be difficult to obtain.

__________

You ought to have a logical explanation for the evidence:

_

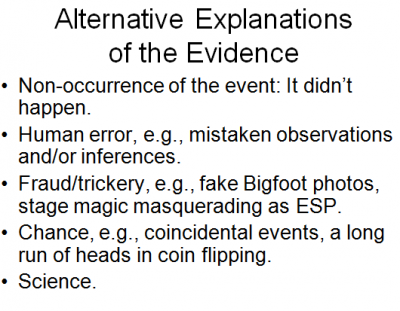

There could be an alternative explanation for the evidence:

___________

Hypothesis and theory:

A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. For a hypothesis to be a scientific hypothesis, the scientific method requires that one can test it. Scientists generally base scientific hypotheses on previous observations that cannot satisfactorily be explained with the available scientific theories. A working hypothesis is a provisionally accepted hypothesis proposed for further research. Even though the words “hypothesis” and “theory” are often used synonymously, a scientific hypothesis is not the same as a scientific theory. A scientific hypothesis is a proposed explanation of a phenomenon which still has to be rigorously tested. In contrast, a scientific theory has undergone extensive testing and is generally accepted to be the accurate explanation behind an observation. A hypothesis requires more work by the researcher in order to either confirm or disprove it. In due course, a confirmed hypothesis may become part of a theory or occasionally may grow to become a theory itself. Any useful hypothesis will enable predictions by reasoning (including deductive reasoning). It might predict the outcome of an experiment in a laboratory setting or the observation of a phenomenon in nature. The prediction may also invoke statistics and only talk about probabilities.

_

A hypothesis is a working assumption. Typically, a scientist devises a hypothesis and then sees if it “holds water” by testing it against available data (obtained from previous experiments and observations). When a hypothesis proves unsatisfactory, it is either modified or discarded. If the hypothesis survives repeated testing, it may be adopted into the framework of a scientific theory. A theory is a logically reasoned, self-consistent model or framework for describing the behavior of certain natural phenomena. A theory typically describes the behavior of much broader sets of phenomena than a hypothesis; commonly, a large number of hypotheses can be logically bound together by a single theory. In this sense, a theory is a well-tested explanation that unifies many hypotheses. In that vein, theories are formulated according to most of the same scientific principles as hypotheses. In addition to testing hypotheses, scientists may also generate a model based on observed phenomena. This is an attempt to describe or depict the phenomenon in terms of a logical, physical or mathematical representation and to generate new hypotheses that can be tested. In popular usage, a theory is just a vague and fuzzy sort of fact, and a hypothesis is often used as a fancy synonym for “guess”. But to a scientist, a theory is a conceptual framework that explains existing observations and predicts new ones. For instance, suppose you see the Sun rise. This existing observation is explained by the Earth’s daily rotation, and the motions of the Earth and other bodies are in turn explained by Newton’s laws of motion and theory of gravitation. This theory, in addition to accounting for the Earth’s motion, also explains many other phenomena, such as the path followed by the Sun across the sky (as seen from Earth) over the course of a year, the phases of the Moon, the phases of Venus, and the tides, to mention just a few.
Today you can make a calculation that predicts the position of the Sun, the phases of the Moon and Venus, and the hour of high tide 200 years from now. The same theory is used to guide spacecraft throughout the Solar System.
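As a small taste of such a calculation, the sketch below uses Newton's form of Kepler's third law, T = 2π√(a³/GM), to predict the orbital periods of the Earth and Venus from their average distances to the Sun. It is a simplification that treats each orbit as an undisturbed two-body problem and neglects the planet's own mass; `GM_SUN` and `AU` are the standard IAU values, the semi-major axes are rounded, and `orbital_period_days` is a hypothetical helper name.

```python
import math

GM_SUN = 1.32712440018e20  # m^3/s^2, Sun's standard gravitational parameter
AU = 1.495978707e11        # m, astronomical unit
SECONDS_PER_DAY = 86_400


def orbital_period_days(semi_major_axis_au: float) -> float:
    """Newton's form of Kepler's third law, T = 2*pi*sqrt(a^3 / GM), in days."""
    a = semi_major_axis_au * AU
    return 2 * math.pi * math.sqrt(a ** 3 / GM_SUN) / SECONDS_PER_DAY


for planet, a in [("Earth", 1.0), ("Venus", 0.7233)]:
    print(f"{planet}: {orbital_period_days(a):.2f} days")
```

The predicted periods come out close to the observed sidereal periods of about 365.26 days for the Earth and 224.7 days for Venus, from nothing more than two distances and one measured constant.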

_

_

The basic elements of the scientific method are illustrated by the following examples:

Example-1:

Structure of DNA:

Question: Previous investigation of DNA had determined its chemical composition (the four nucleotides), the structure of each individual nucleotide, and other properties. It had been identified as the carrier of genetic information by the Avery–MacLeod–McCarty experiment in 1944, but the mechanism of how genetic information was stored in DNA was unclear.

Hypothesis: Francis Crick and James D. Watson hypothesized that DNA had a helical structure.

Prediction: If DNA had a helical structure, its X-ray diffraction pattern would be X-shaped. This prediction was determined using the mathematics of the helix transform, which had been derived by Cochran, Crick and Vand (and independently by Stokes).

Experiment: Rosalind Franklin crystallized pure DNA and performed X-ray diffraction on it, producing a diffraction photograph. The photograph showed an X-shaped pattern.

Analysis: When Watson saw the detailed diffraction pattern, he immediately recognized it as a helix. He and Crick then produced their model, using this information along with the previously known information about DNA’s composition and about molecular interactions such as hydrogen bonds.

_

Example-2:

Discovery of the expanding universe, with galaxies moving away from each other: