Dr Rajiv Desai

An Educational Blog

HUMAN ERROR

Prologue:

On 10th December 1996, the Mumbai high court passed an ex parte order asking me to pay rupees 10,000 every month to my estranged wife, on the ground that my lawyer was absent from the courtroom. I immediately appealed to the division bench of the Mumbai high court. The division bench of two judges heard my appeal, reduced the amount to rupees 7,000 in open court in front of everybody present, and dictated the new order to the court typist. The court then got busy with the many other matters pending before it. However, the court typist typed rupees 10,000 in the new order. Later on, my lawyer met the judges in their chamber and pointed out the typographical error. The judges refused to accept that there had been a human error and said that, because inflation had raised the cost of living, rupees 10,000 was appropriate. To this day, I do not know whose error it was: the lawyer’s, the typist’s or the judges’!

Human Errors have occurred in everybody’s life.

Let me give a few more examples of human errors.

1) The pilot-in-command of the Air India Express aircraft from Dubai that overshot the runway and crashed while landing at the Mangalore airport in India had 10,000 hours of flying experience with 19 landings at the Mangalore airport. A Boeing 737-800 aircraft with 166 people on board overshot the runway while landing at the Mangalore airport by 2000 feet and crashed into the valley before bursting into flames killing 158 people. Eight people managed to survive the deadly crash. The aircraft wasn’t in stable approach condition during its descent and the flight had violated several laid-down limits for airspeed and rate of descent. After the aircraft touched down and thrust reversers were deployed, the pilot opted for a go-around. This was in complete violation of the rule. The pilot attempted to take-off when only 800 feet of the 8,038-ft-long runway was left. The last sentence uttered in the cockpit was of co-pilot: “We don’t have runway left.”

2) The Uttarbanga Express train in India rammed into another passenger train, the Vananchal Express, at Sainthia railway station, killing a total of 66 persons, including three railway employees: the two drivers of the Uttarbanga Express and a guard of the Vananchal Express. The driver of the Uttarbanga Express missed the signal to stop and failed to apply the brakes despite the visible tail light of the Vananchal Express. The question that remains unanswered is why the Uttarbanga Express was speeding if Sainthia was a scheduled stop.

3) When MSC Chitra was moving out of Jawaharlal Nehru Port Trust (JNPT) while Khalijia III was moving in to dock at the Mumbai Port Trust for repairs, there was a collision between MSC Chitra and MV Khalijia III off the coast of Mumbai resulting in oil-spill endangering marine life and spoiling the sea coast. The collision occurred due to human error of the captains of these ships.

4) The investigators have suggested human error may be to blame for the air crash that killed Poland’s president and 95 others, saying there were no technical problems with the aircraft. They said the flight recorders revealed that while there were “no problems with the plane”, the pilot decided to land despite warnings about bad weather conditions by ATC. The ATC apparently told the pilot that the weather condition would make landing impossible but yet pilot decided to land under political instruction not to miss a memorial ceremony for thousands of Poles massacred in World War II.

5) All 152 people on board a Pakistani plane were killed after the aircraft plunged into the Margalla Hills near the capital, Islamabad. The accident occurred as the plane was attempting to land in bad weather. The pilot had more than 25,000 flying hours over a 35-year career. The pilot was warned by ATC that he was flying away from the runway but he responded, “I can see the runway.”

6) All eight members of a Pakistani family in a foreign country perished when the car they were traveling in stalled in the left fast lane as their vehicle ran out of petrol. They all got out of the car and started pushing it in the same lane. It was 3 a.m. on a foggy winter night. A four-wheel drive traveling at 130 kilometers per hour in the same fast lane struck the ill-fated car. At the community’s condolence meeting, some people attributed this accident to fate and destiny. However, it was a human error: one does not drive on a super-highway with an empty petrol tank; one puts on the emergency hazard lights the moment the car stalls; one does not stand leisurely in the left fast lane; one does not remain in the moving traffic lane when the car is stalled – one immediately moves the stalled car to the ‘shoulder’ provided for emergency purposes; and one makes the distress call to emergency services at once. The fact of the matter is that this unfortunate tragic accident could have been avoided by following the implicit rules of the present-day technological civilization.

7) According to the prestigious Institute of Medicine, between 50,000 and 100,000 patient deaths are caused each year in the United States by negligence on the part of doctors, nurses, and other health care providers. Nearly 1,000,000 patient injuries per year are also attributed to human error in the delivery of health care. The difference between human error and negligence is discussed afterward in this article.

8) A study of road safety found that human error was the sole cause in 57% of all accidents and was a contributing factor in over 90%. In contrast, only 2.4% were due solely to mechanical fault and only 4.7% were caused only by environmental factors.

9) The National Highway Traffic Safety Administration told members of Congress in America that human error was to blame in more than half of the 58 cases it reviewed since drivers failed to apply the brakes. Trapped or sticky gas pedals were to blame in the remainder of accidents for which a cause was identified. There was no problem with car’s electronic system in these car accidents.

10) The Japanese classification society NK published a booklet titled “Guidelines for the Prevention of Human Error Aboard Ships”, and according to the guidelines, human errors are said to account for 80 per cent of all marine accidents.

11) The United Kingdom’s Civil Aviation Authority reported last year that human errors of the flight crews were the primary cause of two-thirds of fatal commercial and business plane crashes worldwide from 1997 through 2006. Human factors such as sleep deprivation, mental distractions, scheduling, and training are all contributing factors.

12) According to a 1998 study by the US General Accounting Office, human error was a contributing factor in 73% of the most serious US military aircraft accidents between 1994 and 1998.

13) A 1998 study by the Union of Concerned Scientists of ten nuclear power plants (representing a cross section of the American civilian nuclear industry) concluded that nearly 80% of reported problems resulted from worker mistakes or the use of poorly designed procedures.

14) An explosion on BP’s oil rig in the Gulf of Mexico on April 20, 2010 killed 11 workers and caused an oil spill of 4.9 million barrels. A close look at the accident shows that lax federal oversight, complacency by BP and the other companies involved, and the complexities of drilling a mile deep all combined to create the perfect environment for disaster. Experienced oil professionals made it clear that the blowout was due to human error, probably compounded human error, in which multiple warning signs and safety procedures were ignored.

15) A research organization focusing on data centers showed that 70% of disruptions in the data centre are caused by human error. The study analyzed 4,500 data centre disruptions, including 400 where systems were entirely offline.

16) This season in Major League Baseball has seemingly brought a new umpiring controversy with a study showing 1 in 5 close calls wrong due to human error. Traditional sport supporters argued against expanded replay saying that “human element” of umpiring is just part of baseball, and some worried that replay would slow games. Recently, even during FIFA world cup football, people have seen wrong referee decisions denying authentic goals and FIFA officials unwilling for slow-motion-replays. Cricket lovers know how bad umpiring decisions due to human errors have affected cricket as a game.

17) Accidental deletion and overwriting is one of the main reasons of data loss from computers due to human error. According to a recent survey human error is the cause for 40 percent of the cases of data loss, compared to 29 percent for hardware or system failures.

18) Lab scientist’s mathematical errors at the Regional Crime Lab resulted in inflated blood alcohol concentration (BAC) levels in 111 driving-while-impaired cases in America. Lab scientists are supposed to multiply the end result by 0.67 to determine the grams of alcohol per 67 milliliters of urine. The multiplication was not done, so the end result reported the grams of alcohol per 100 milliliters of urine, therefore inflating the results. The science was not bad. Nothing was tainted. It was a human error. We’ve seen it before, human error causing people to be charged with crimes they didn’t commit. In this case being falsely charged with driving under influence of alcohol.

19) A trader made an ‘error’ and caused the stock market to plunge. The trader mistyped a sell order, entering the number of shares in billions instead of millions: the trader meant to trade 6 million and accidentally traded 6 billion.

20) There was a massive failure which knocked Singapore’s DBS Bank off the banking grid for seven hours resulting in DBS Bank’s inability to resume normal operations & ATMs due to human error in routine operation of IBM computer. In short, a procedural error in what was to have been a routine maintenance operation subsequently caused a complete system outage.

21) Recently in Memphis, human error led to a data entry issue causing mass chaos in the opening minutes on Election Day and the data entry error had the potential to cause a real disaster, but luckily, the problem was determined early by poll workers. So human error can alter election outcome.

22) Various published books on various subjects contain plenty of errors in various editions. It is not my intent to denigrate these fine publications, but merely to point out that human error can disseminate inaccurate information.

23) Human error has been blamed for mistakes in exam papers in various boards and universities. Also, many times exams were rescheduled after a superintendent handed out the wrong paper on the wrong day due to human error.

24) The population of world is 6.8 billion and at least, on average, every human makes one error daily resulting in 6.8 billion errors daily and the biggest one is not acknowledging it.

25) Human error was a factor in almost all the highly publicized accidents in recent memory, including the Bhopal pesticide plant explosion, Hillsborough football stadium disaster, Paddington and Southall rail crashes, capsizing of the Herald of Free Enterprise, Chernobyl and Three-Mile Island incidents and the Challenger Shuttle disaster.
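
The lab error in example 18 comes down to one skipped multiplication, and a few lines of arithmetic show how much it inflates a result. This is only a minimal sketch: the factor 0.67 comes from the account above, while the raw reading of 0.10 and the function name are hypothetical values chosen for illustration.

```python
# Conversion factor cited in example 18.
CONVERSION_FACTOR = 0.67

def reported_bac(raw_result, apply_factor=True):
    """Return the value that ends up in the report.

    apply_factor=False reproduces the omission described in example 18.
    """
    if apply_factor:
        return raw_result * CONVERSION_FACTOR
    return raw_result

raw = 0.10  # hypothetical raw reading, not from any real case
correct = reported_bac(raw)
wrong = reported_bac(raw, apply_factor=False)

# Relative inflation caused by skipping the multiplication.
inflation = wrong / correct - 1
print(f"correct={correct:.3f} wrong={wrong:.3f} inflated by {inflation:.0%}")
```

Whatever the raw reading, skipping the step overstates the result by the same proportion, roughly half as much again, which is why a single procedural slip affected every case in the batch.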

Error means unintentional deviation from what is right, correct or true, by action or inaction, in act, thought, word or belief. Error means a wrong action attributable to bad judgment, ignorance or inattention. An error is the failure of planned actions to achieve the desired goal. Error means an act of commission (doing something wrong) or omission (failing to do the right thing) that leads to an undesirable outcome or significant potential for such an outcome. Error is simply a difference between an actual state and a desired state. The meaning of error varies from one situation to another: for example, error in statistics means the difference between a measured or calculated value and the true one (observational error), and error in computing means a failure to complete a task, usually involving a premature termination. Human reliability is very important due to the contributions of humans to the resilience of systems and to the possible adverse consequences of human errors or oversights, especially when the human is a crucial part of large socio-technical systems, as is common today. Human error has been cited as a cause or contributing factor in disasters and accidents in industries as diverse as nuclear power (e.g., the Three Mile Island accident), aviation (pilot error), space exploration (e.g., the Space Shuttle Challenger disaster), and medicine (medical error). Human performance is categorized as ‘error’ only in hindsight; the actions later termed “human error” are therefore part of the ordinary spectrum of human behaviour. The study of absent-mindedness in everyday life provides ample documentation and categorization of such aspects of behavior. Given the prevalence of attentional failures in everyday life and the ubiquitous and sometimes disastrous consequences of such failures, it is rather surprising that relatively little work has been done to directly measure individual differences in everyday errors arising from propensities for failures of attention.

A faux pas is a violation of accepted social norms (for example, standard customs or etiquette rules). Faux pas vary widely from culture to culture, and what is considered good manners in one culture can be considered a faux pas in another. For example, a “fashion faux pas” occurs when the error is directly related to a person’s appearance or choice of clothing. Popular errors are false ideas, including misconceptions, fallacies, inaccurate facts or beliefs, and stereotypes; they usually originate from distorted or outdated information, from jokes or anecdotes not meant to be taken seriously, or from mistaken comments. People who use the internet have seen the HTTP 404 error, a standard HTTP response code indicating that the client was able to communicate with the server but the server could not find what was requested. It is typically returned when the requested web page has been moved or deleted. On the other hand, Error 678 is reported when the server does not respond correctly when the client attempts to connect to it through the internet.
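
For readers who program, the meaning of a code such as 404 can be looked up with Python’s standard library. The sketch below is illustrative only: `describe_status` is a hypothetical helper name, and the grouping into 4xx and 5xx classes follows the usual HTTP convention rather than anything specific to this article.

```python
from http import HTTPStatus

def describe_status(code):
    """Return a short human-readable description of an HTTP status code."""
    status = HTTPStatus(code)  # raises ValueError for unknown codes
    if 400 <= code < 500:
        kind = "client error"
    elif 500 <= code < 600:
        kind = "server error"
    else:
        kind = "not an error"
    return f"{code} {status.phrase} ({kind})"

print(describe_status(404))  # 404 Not Found (client error)
print(describe_status(200))  # 200 OK (not an error)
```

The 404 code falls in the 4xx group because the server was reached and understood the request; it simply had nothing to return at that address.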

Error versus mistake:

Even though the words ‘error’ and ‘mistake’ are used interchangeably, their etymologies differ. The word ‘error’ comes from the Latin ‘errorem’ or ‘errare’, which means ‘to wander or stray’. The root of the word ‘mistake’ nails the meaning more precisely. It comes from the Old Norse word ‘mistaka’, formed from ‘mis’ (wrong) and ‘taka’ (take); as a whole, it means ‘wrongly taken’. “Error” tends to be used more in contexts like science, where some set of criteria of objectivity is implicit. For example, your math teacher might say “there’s an error in the formula you wrote”, a doctor might say “the lab made an error in your blood test”, or an editor might say “there’s a typing error in your article”. “Mistake” is used more for inadvertent behaviours that produce unwanted consequences. Let me put it differently. An error is when students do something wrong because they have not been taught the correct way. A mistake is when they have been taught well but, for instance, forgot. However, in the classification of errors, a mistake is classified as a cognitive error; therefore all mistakes are errors, but not all errors are mistakes.

People are not infallible and therefore they make mistakes even in best circumstances. To err is human. Unfortunately, to err repeatedly is also human. It’s one of our deadliest weaknesses as humans, with matching consequences in some case. Most human errors are limited to few individuals despite the fact that various physiological and psychological characteristics that contribute to human errors are held by everybody. Human errors are universal and inevitable and best people can make worst human errors. I mention a quote we all are familiar with: “It takes a big man to admit he’s wrong.” If we are honest with ourselves, we will have to admit that all of us are wrong about something every single day. The daily details of life are filled with our own errors: What will happen today, the best route to take to work, anticipating a colleague’s reaction to something, the weather, etc. We are habitually regularly predictably wrong about nearly everything. Some might say that a mistake is a mistake, and make no distinction between human error, bad judgement, uncontrollable circumstances etc. If it’s a bona-fide error or bad judgement, then, we can use that to not make similar mistake in the future. If it’s unforeseen, uncontrollable things, then really we can’t change the future outcomes, and we can’t prepare ourselves enough to overcome it. If it happens, it happens.

It was thought that human error and failure are related to measures of cognitive and psychomotor accuracy, commission of error being at one end of the accuracy scale and omission or not performing the correct action being at the other but this was insufficient as it did not fully take into account the notion of surprise. Surprise is the occurrence of something unexpected or unanticipated. It is not precisely commission or omission; it indicates rather, the absence of contravening cognitive processes. Measures of accuracy may account for factual errors in the intelligence domain, but measures of accuracy are insufficient to account for surprise events and intelligence failure. According to CIA, intelligence errors are factual inaccuracies in analysis resulting from poor or missing data; intelligence failure is systemic organizational surprise resulting from incorrect, missing, discarded, or inadequate hypotheses. The novice analysts tended to worry about being factually inaccurate; senior analysts tended to worry about being surprised. The 26/11 terrorist attack on India and 9/11 terrorist attack on America were intelligence failures and not intelligence errors.

Human errors are errors caused by humans rather than machines. Human error is an imbalance between what the situation demands, what the person intends and what he/she does. Human error means an inappropriate or undesirable human decision or behavior that reduces, or has the potential to reduce, effectiveness, safety, or system performance. The propensity for error is so intrinsic to human behavior and activity that scientifically it is best considered as inherently biologic, since faultless performance and error result from the same mental process. All the available evidence clearly indicates that human errors are random unintended events. Indeed, although we can predict the probability of specific errors by well-established Human Reliability Assessment techniques, the actual moment at which an error occurs cannot be predicted. Fifty years ago human error was often described just at the level of the human directly involved with the error, but modern definitions look to failures at any point in a system within an organization, from micro-task decisions and task implementation, through personal monitoring systems, to team member assignments and the coordination of middle organizational decisions about the people and equipment chosen to accomplish a task. The systems themselves are scrutinized for their tolerance to human frailty. These systems are designed by humans. Human error can occur at any stage of a task or strategy where a human is involved. Nuclear power plant accidents, oil rig fires and chemical plant disasters are very apparent to the public and their investigation calls for human error analysis. It has been estimated that up to 90% of all workplace accidents have human error as a cause. Human error has an overriding (typically >60%) contribution to almost all events. This is true worldwide for the whole spectrum, all the way from transportation crashes, social system and medical errors, to large administrative failures and the whole gamut of industrial accidents. Embedded within modern technological systems, human error is the largest and indeed dominant contributor to accident cause. The consequences dominate the risk profiles for nuclear power and for many other technologies. The fact that humans learn from their mistakes allows a new determination of the dynamic probability and human failure (error) rate in technological systems. A human error that is corrected before it can cause damage or has not caused any damage is an error nonetheless. The notion of error is not necessarily associated with guilt, the consequences of the error, or the presence or absence of deliberation.

There are many ways to categorize human error.

Classification 1:

Active errors and latent errors.

Active errors occur at the point of contact between a human and some aspect of a larger system (e.g., a human-machine interface). They are generally readily apparent (e.g., pushing an incorrect button, ignoring a warning light) and almost always involve someone at the frontline.

Latent error is a human error which is likely to be made due to systems or routines that are formed in such a way that humans are disposed to making these errors. In other words, latent errors are quite literally accidents waiting to happen and the term is used in safety work and accident prevention, especially in aviation.

The difference between active and latent errors is made by considering two aspects:

1) The time from the error to the manifestation of the adverse event.

2) Where in the system the error occurred.

Active errors are of 2 types – cognitive errors (mistakes) and non-cognitive errors (slips & lapses).

Cognitive errors (mistakes):

Mistake means failures of intention where the failure lies at a higher level; the mental processes involved in planning, formulating intentions, judging and problem solving. Mistakes reflect failures during attentional behaviors, or incorrect choices. Rather than lapses in concentration (as with slips), mistakes typically involve insufficient knowledge, failure to correctly interpret available information, or application of the wrong cognitive “heuristic” or rule. Thus choosing the wrong diagnostic test or ordering a suboptimal medication for a given condition represent mistakes. A slip, on the other hand, would be forgetting to check the chart to make sure you ordered them for the right patient. Operationally, one can distinguish slips from mistakes by asking if the error involved problem solving. Mistakes refer to errors that arise in problem solving.

Non-cognitive errors (lapses & slips):

Slips are observable actions associated with attention failures. Lapses are more internal events and relate to failures of memory. There are different kinds of slips depending on the mode involved, such as a slip of the tongue, a slip of the pen and a slip of the hand. Slips also refer to failures of schematic behaviors due to a lack of concentration (e.g., an experienced surgeon nicking an adjacent organ during an operation due to a momentary failure in concentration). An example of a ‘lapse’ would be missing a date with one’s girlfriend due to workload at the office. A typographical error (often shortened to typo) is a term that includes errors due to mechanical failure or slips of the hand or finger, but usually excludes errors of ignorance such as spelling errors. Most typos involve simple duplication, omission, transposition, or substitution of a small number of characters.

Distinguishing slips from mistakes serves two important functions. First, the risk factors for their occurrence differ. Slips occur in the face of competing sensory or emotional distractions, fatigue, and stress; mistakes more often reflect lack of experience or insufficient training. Second, the appropriate responses to these error types differ. Reducing the risk of slips requires attention to the designs of protocols, devices, and work environments using checklists so key steps will not be omitted, reducing fatigue among personnel (or shifting high-risk work away from personnel who have been working extended hours), removing unnecessary variation in the design of key devices, eliminating distractions (e.g., phones) from areas where work requires intense concentration, and other redesign strategies. Reducing the likelihood of mistakes typically requires more training or supervision. Even in the many cases of slips, health care has typically responded to all errors as if they were mistakes, with remedial education and/or added layers of supervision.

Classification 2:

Errors can be classified in statistical experiments.

1) Experimental errors are of two types, errors of objectivity when the experimenter knows the groups and the expected result, and errors of measurement due to inadequate technique or the uneven application of measuring techniques.

2) Random error occurs due to chance. Random error is caused by any factors that randomly affect measurement of the variable across the sample. For instance, each person’s mood can inflate or deflate their performance on any occasion.

3) Sampling error is one due to the fact that the result obtained from a sample is only an estimate of that obtained from using the entire population.

4) Systematic error is when the error is applied to all results, i.e. those due to bias. Systematic error is caused by any factors that systematically affect measurement of the variable across the sample. For instance, if there is loud traffic going by just outside of a classroom where students are taking a test, this noise is liable to affect all of the children’s scores by systematically lowering them. Unlike random error, systematic errors tend to be consistently either positive or negative.

5) Errors of types I and II: A type I error, also known as a “false positive”, occurs when we observe a difference when in truth there is none, thus indicating a test of poor specificity. A type II error, also known as a “false negative”, is the error of failing to observe a difference when in truth there is one, thus indicating a test of poor sensitivity. In the justice system, a type I error means an innocent person goes to jail and a type II error means a guilty person is set free. People find type II errors disturbing but not as horrifying as type I errors. A type I error means that not only has an innocent person been sent to jail but the truly guilty person has gone free. In a sense, a type I error in a trial is twice as bad as a type II error. Needless to say, the justice system ought to put a lot of emphasis on avoiding type I errors. An illustration from the medical field will be enlightening. A type I error means that you do not have malaria but the blood test for malaria is positive, and a type II error means that you do have malaria but the blood test is negative. A type I error will result in unnecessary treatment for malaria and in missing the real cause of the fever. A type II error will result in malaria going untreated. Either way the patient is in trouble. The best way to solve this problem is to devise a blood test with a high degree of specificity and a high degree of sensitivity and, more importantly, to have a wise doctor correlate the blood report with the clinical picture.
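
The link between the two error types and the sensitivity and specificity of a test can be made concrete with a small calculation. The sketch below is an illustration only: the patient counts are hypothetical, not taken from any real malaria study, and `diagnostic_rates` is a name invented for this example.

```python
def diagnostic_rates(tp, fp, tn, fn):
    """Compute test quality from a 2x2 table of counts.

    tp: sick and test positive      fn: sick but test negative (type II)
    tn: healthy and test negative   fp: healthy but test positive (type I)
    """
    sensitivity = tp / (tp + fn)    # share of sick people the test catches
    specificity = tn / (tn + fp)    # share of healthy people the test clears
    type_1_rate = fp / (fp + tn)    # false positive rate = 1 - specificity
    type_2_rate = fn / (fn + tp)    # false negative rate = 1 - sensitivity
    return sensitivity, specificity, type_1_rate, type_2_rate

# Hypothetical counts: 100 patients with malaria, 900 without.
sens, spec, t1, t2 = diagnostic_rates(tp=90, fp=45, tn=855, fn=10)
print(f"sensitivity={sens:.2f} specificity={spec:.2f}")
print(f"type I rate={t1:.2f} type II rate={t2:.2f}")
```

With these made-up numbers the test misses 10 of the 100 real cases (type II errors) and wrongly flags 45 of the 900 healthy people (type I errors), which is why a positive report still needs the doctor’s clinical correlation.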

Why do humans make so many errors?

There are 3 circumstances under which human errors can occur:

1) Plan to do right thing but with wrong outcome- e.g. misdial correct telephone number, give the correct instruction to the wrong aircraft, give correct meal to wrong patient, put your car key in another car.

2) Do the wrong things for a situation- e.g. try to land aircraft in extreme bad weather.

3) Fail to do anything when action is required- e.g. a driver fails to apply the brakes.

Errors occur when multiple factors come together to allow them. Human errors usually occur due to the fallibility of the human mind and/or as a part of system errors.

Fallibility of human mind:

From the reciprocal (negative and positive) mind characteristics of humans, two distinguishing traits emerge: creativity and fallibility. Whilst other species evolve physically, humans evolve intellectually. Whilst humans have invented computing machines capable of speed and infallible accuracy, these machines lack the creativity and ingenuity of an analytical and creative human mind. The human mind’s subjective, emotional, and impulsive process of thinking is known as passion. Because passion is involuntary and supersedes logic, logical errors, inaccuracies and inconsistencies naturally predominate in the human mind and its perceived reality. Though passion fuels one’s desire to be special, creative, ingenious, clever, inventive etc., it produces negative mind traits when overdriven and interrupts logical, objective and critical thinking. Until human beings evolve to isolate the impulsive thought process from interfering with logical, objective and critical thinking, and initiate action and reaction based on logic rather than passion, the nature of human beings will remain fallible. The fallibility of our minds argues more against creationism. There is no reason to believe that people are any less fallible today than when the first true humans walked the earth. But through spectacular scientific and technological advances, we are much more capable of affecting the physical world around us today than we have ever been. The collision between our unchanging fallibility and the awesome power of the most dangerous technologies that we have created threatens our common future, perhaps our very survival. Nowhere is this clearer than in the case of accidental nuclear strike.

Humans must rely on three fallible mental functions: perception, attention and memory. Research has shown that accidents occur for one of three principal reasons. The first is perceptual error. Sometimes critical information was below the threshold for seeing: the light was too dim, the driver was blinded by glare, or the pedestrian’s clothes had low contrast. In other cases, the driver made a perceptual misjudgment (of a curve’s radius or another car’s speed or distance). The second, and far more common, cause is that the critical information was detectable but the driver failed to attend to or notice it because his mental resources were focused elsewhere. Often, a driver will claim that he did not “see” a plainly visible pedestrian or car. This is entirely possible because much of our information processing occurs outside of awareness. We may be less likely to perceive an object if we are looking directly at it than if it falls outside the center of the visual field. This “inattentional blindness” phenomenon is doubtless the cause of many accidents. Lastly, the driver may correctly process the information but fail to choose the correct response (“I’m skidding, so I’ll turn away from the skid”) or make the correct decision yet fail to carry it out (“I meant to hit the brake, but I hit the gas”).

People are part of a system which includes equipment, environment, technology, organization, training, policies, procedures etc. Human errors occur due to the failure of the system or organization to prevent an error from happening and, if an error happens, to prevent it from becoming a problem. A negative outcome occurs only when the situation allows human error to occur. We can rightly assume that the vast majority of operators don’t want to make errors, but errors still occur because other factors or antecedents influence the operator’s performance. These can include, but are not limited to, the equipment being used, other operators in the system and any cultural influences which may exist. When a false stall warning occurred as TWA flight 843 lifted off from JFK in 1992, the equipment, an L-1011, became the antecedent to the pilot’s error of aborting the takeoff and failing to stop the aircraft on the runway. When American Airlines flight 1572 struck trees on approach to Bradley International Airport, the antecedent was another operator in the system. The pilot’s error of proceeding below the minimum descent altitude was exacerbated by the approach controller’s failure to report a current altimeter setting at a time of rapidly falling barometric pressure. A cultural antecedent was present when Korean Air flight 801 flew into a Guam hillside. The first officer’s failure to monitor and check the captain’s execution of the approach was put down to the high power distance culture within the airline.

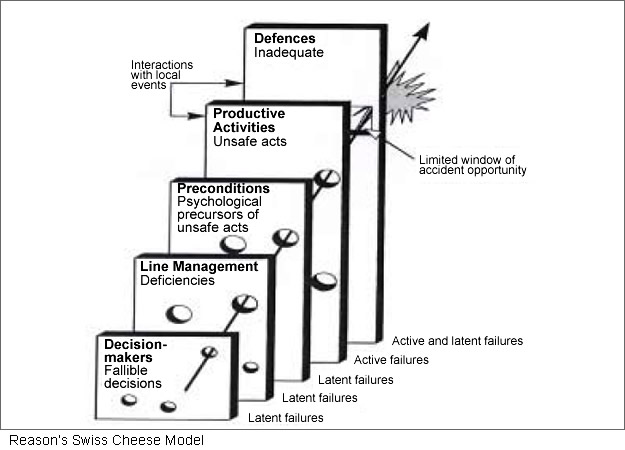

Reason’s ‘Swiss Cheese Model’:

Let me discuss aviation errors. Examples of barriers (defenses) are pre-flight checks, automatic warnings, challenge-response procedures etc., which help to prevent human errors and reduce the likelihood of negative consequences. It is when these defenses are weakened and breached that human errors can result in incidents or accidents. These defenses have been portrayed diagrammatically as several slices of Swiss cheese. Some failures are ‘latent’, meaning that they were made at some point in the past and lie dormant. They may have been introduced at the time an aircraft was designed or may be associated with management decisions and policies. Errors made by front line personnel, such as flight crew, are ‘active’ failures. The more holes in a system’s defenses, the more likely it is that errors result in incidents or accidents, but these occur only in certain circumstances, when all the holes ‘line up’. The chance that all holes align depends on the number of holes: the greater the number, the more likely an error is to get through. However, as long as any holes exist, it is impossible to guarantee that alignment will never occur, no matter how few the holes are, and therefore human errors are inevitable no matter how strong the defenses are. The only way to have ‘zero error’ is to have no holes in the defenses. Usually, if an error has breached the design or engineering defenses, it reaches the flight operations defenses (e.g. an in-flight warning) and is detected and handled at this stage. However, occasionally in aviation, an error can breach all the defenses (e.g. a pilot ignores an in-flight warning, believing it to be a false alarm) and a catastrophic situation ensues.
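
The arithmetic behind the lining-up of holes can be sketched in a few lines of Python. This is only an illustration under a loud assumption: each slice of cheese is treated as failing independently, so the chance that an error passes every slice is the product of the per-slice chances. The function name `breach_probability` and all the numbers are hypothetical and are not taken from any accident data.

```python
from math import prod


def breach_probability(hole_probabilities):
    """Chance that an error slips through every defensive layer.

    Assumption (for illustration only): layers fail independently, so the
    chance that all holes line up is the product of the per-layer chances.
    """
    for p in hole_probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must lie between 0 and 1")
    return prod(hole_probabilities)


# Hypothetical per-layer chances that a layer fails to stop the error.
three_layers = [0.1, 0.1, 0.1]
four_layers = three_layers + [0.1]

print(breach_probability(three_layers))     # small, but not zero
print(breach_probability(four_layers))      # smaller still, yet not zero
print(breach_probability([0.1, 0.0, 0.1]))  # one layer with no holes gives zero
```

Adding layers or shrinking holes lowers the product but never brings it to zero; only a layer with no holes at all does that, which is the point made in the paragraph above.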

While the model may convey the impression that the slices of cheese and the location of their respective holes are independent, this may not be the case. For instance, in an emergency situation, all three of the surgical identification safety checks mentioned above may fail or be bypassed. The surgeon may meet the patient for the first time in the operating room. A hurried x-ray technologist might mislabel a film (or simply hang it backwards and a hurried surgeon not notice), “signing the site” may not take place at all (e.g., if the patient is unconscious) or, if it takes place, be rushed and offer no real protection. In the technical parlance of accident analysis, the different barriers may have a common failure mode, in which several protections are lost at once (i.e., several layers of the cheese line up). An aviation example would be a scenario in which the engines on a plane are all lost, not because of independent mechanical failure in all four engines (very unlikely), but because the wings fell off due to a structural defect. This disastrous failure mode might arise more often than the independent failure of multiple engines.

In health care, such failure modes, in which slices of the cheese line up more often than one would expect if the location of their holes were independent of each other (and certainly more often than wings fly off airplanes), occur distressingly commonly. In fact, many of the systems problems discussed by Reason and others (poorly designed work schedules, lack of teamwork, variations in the design of important equipment between and even within institutions) are sufficiently common that many of the slices of cheese already have their holes aligned. In such cases, one slice of cheese may be all that is left between the patient and significant hazard.
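
To see why a common failure mode matters so much, here is a minimal Python sketch. It assumes, purely for illustration, that with some probability a single shared cause (say, an emergency that bypasses every check) opens all layers at once, and that otherwise the layers fail independently. The function name `breach_with_common_mode` and the numbers are hypothetical.

```python
from math import prod


def breach_with_common_mode(hole_probabilities, common_mode_probability):
    """Chance that every layer fails when one shared cause can defeat them all.

    Assumption (for illustration only): with probability
    `common_mode_probability` a single condition opens all layers together;
    the rest of the time the layers fail independently of each other.
    """
    c = common_mode_probability
    if not 0.0 <= c <= 1.0:
        raise ValueError("common_mode_probability must lie between 0 and 1")
    independent = prod(hole_probabilities)
    return c + (1.0 - c) * independent


layers = [0.1, 0.1, 0.1]  # hypothetical per-layer failure chances

print(breach_with_common_mode(layers, 0.0))   # layers truly independent
print(breach_with_common_mode(layers, 0.05))  # a modest shared cause dominates
```

With these made-up numbers, the shared cause contributes far more to the overall risk than the independent alignment of holes does, which is the sense in which the slices are no longer independent.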

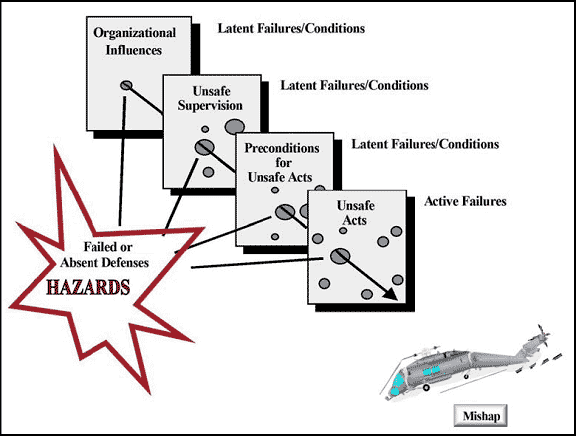

Organizational models of accident causation are used for the risk analysis and risk management of human systems. One such organizational model suggests that most accidents can be traced to one or more of four levels of failure: organizational influences, unsafe supervision, preconditions for unsafe acts, and the unsafe acts themselves. The model includes, in the causal sequence of human failures that leads to an accident, both active failures and latent failures. Active failures are unsafe acts (errors and violations) committed by those at the “sharp end” of the system (surgeons, anesthetists, nurses, physicians, pilots, drivers etc). It is the people at the human-system interface whose actions can, and sometimes do, have immediate adverse consequences. Active failures are represented as “holes” in the cheese. The concept of latent failures is particularly useful in the process of aircraft accident investigation, since it encourages the study of contributory factors in the system that may have lain dormant for a long time (days, weeks, or months) until they finally contributed to the accident. Latent failures are failures created as the result of decisions taken at the higher echelons of an organization; they include organizational influences, unsafe supervision and preconditions for unsafe acts. Whereas “fallibility” is a term usually attached to the imperfect human, “latent conditions” describe potential imperfections in a system which allow errors to occur. When one thinks of fallibility and latency, the intention is to block possible paths of errors.

Error versus violation:

Unsafe acts can be either errors (in perception, decision making or skill-based performance) or violations (routine or exceptional). A violation is a deliberate deviation from safe operating practices, procedures, standards, or rules. As the name implies, routine violations are those that occur habitually and are usually tolerated by the organization or authority. Exceptional violations are unusual and often extreme. For example, driving at 60 mph in a 55-mph zone is a routine violation, but driving at 130 mph in the same zone is exceptional.

Errors vs. Violations

| Errors | Violations |
| --- | --- |
| Arise primarily from informational problems (forgetting, inattention, incomplete knowledge) | More generally associated with motivational problems (low morale, poor supervision, perceived lack of concern, failure to reward compliance and sanction non-compliance) |
| Explained by what goes on in the mind of an individual | Occur in a regulated social context |
| Can be reduced by improving the quality and the delivery of necessary information within the workplace | Require motivational and organizational remedies |

Error versus negligence:

Negligence (Lat. negligentia, from neglegere, to neglect, literally “not to pick up”) is defined as conduct that is culpable because it falls short of what a reasonable person would do to protect another individual from foreseeable risks of harm. Negligence is not the same as “carelessness”, because someone might be exercising as much care as they are capable of, yet still fall below the level of competence expected of them. The doctrine of negligence does not require the elimination of all risk, but only of foreseeable and unreasonable risk. Thus a higher standard applies to explosives manufacturers than to manufacturers of kitchen matches. Negligence is conduct that falls below the standards of behavior established by law for the protection of others against unreasonable risk of harm. A person has acted negligently if he or she has departed from the conduct expected of a reasonably prudent person acting under similar circumstances.

Experts broadly agree that largely preventable human errors account for the vast majority of patient injuries and deaths associated with negligent patient care. One important aspect of physician error is error in diagnosis. A study found that more than half (54 percent) of experienced doctors made significant diagnostic errors at least once or twice per month, and that 77 percent of trainee doctors made at least one or two significant diagnostic errors per month. While it is unlikely that human error can ever be completely eliminated from medical practice, the findings of this study are significant and point to areas where physicians and other health care providers can achieve substantial improvements in the delivery of health care.

The Indian Supreme Court has ruled that a “simple lack of care or an error of judgement or an accident” does not amount to negligence in the medical profession. Negligence in the context of the medical profession necessarily calls for a treatment with a difference. The doctor cannot be held liable for negligence merely because a better alternative course or method of treatment was also available, or simply because a more skilled doctor would not have chosen to follow or resort to the practice or procedure which the accused doctor followed. To prosecute a medical professional for criminal negligence “it must be shown that the accused doctor did something or failed to do something which in the given facts and circumstances no medical professional in his ordinary senses and prudence would have done or failed to do”. A medical practitioner is not expected to achieve success in every case that he treats. The duty of the doctor, like that of other professional men, is to exercise reasonable skill and care. The test is the standard of the ordinary skilled man. Also, in the realm of diagnosis and treatment, there is ample scope for genuine difference of opinion. No man is negligent because his conclusion is different from that of other professional men. Also, the judiciary would be doing a disservice to the community at large if it were to impose liability on hospitals and doctors for everything that happens to go wrong. Doctors would then be led to think more of their own safety than of the good of their patients. No human being is perfect, and even the most renowned specialist could make a mistake in detecting or diagnosing the true nature of a disease, which is not negligence. A doctor can be held liable for negligence only if one can prove that he/she is guilty of a failure that no other doctor with ordinary skill and acting with reasonable care would be guilty of.
If the doctor has adopted the right course of treatment, if he/she is skilled and has worked with a method and manner best suited to the patient, he/she cannot be blamed for negligence if the patient is not totally cured, or in fact worsens or dies. A doctor cannot be held criminally responsible for a patient’s death unless it is shown that he/she was negligent or incompetent, with such disregard for the life and safety of the patient that it amounted to a crime against the state. Doctors must exercise an ordinary degree of skill, and such skill cannot be compared with the extraordinary skill of another doctor who may have treated a similar case elsewhere with a better outcome, as complainants often allege. Also, the burden of proof of negligence generally lies with the complainant. No doctor intentionally harms his patients. The medical profession and death are interconnected. If any doctor or hospital says that they have had no deaths on their premises, then they have not treated any serious patients.

Error without adverse event:

There are two scenarios in which an error does not result in an adverse event: near-miss (close call) and no harm events. A near-miss occurs when an error is realized just in the nick of time and corrective action is taken to stop it from causing harm. In the no harm scenario, the error is not recognized and the deed is done but, fortunately for the actor, the expected adverse event does not occur. The distinction between the two is important and is best exemplified by reactions to administered drugs in allergic patients. A prophylactic injection of cephalosporin may be stopped in time because it suddenly transpires that the patient is known to be allergic to penicillin (near-miss). If this vital piece of information is overlooked and the cephalosporin is administered, the patient may fortunately not develop an anaphylactic reaction (no harm event).

Human biases and human errors:

Cognitive bias:

Understanding the mechanisms by which humans repeatedly make errors of judgment has been the subject of psychological study for many decades. Psychological research has examined numerous risks of assessing evidence by subjective judgment. These risks include information-processing or cognitive biases, emotional self-protective mechanisms, and social biases. A cognitive bias is the human tendency to make systematic errors in certain circumstances based on cognitive factors rather than evidence. Many social institutions rely on individuals to make rational judgment by ignoring irrelevant features of the case, weighing the relevant features appropriately, considering different possibilities open-mindedly and resisting fallacies; but due to cognitive bias people will frequently fail to do all these things resulting in error. The investigation of cognitive biases in judgment has followed from the study of perceptual illusions. For example, the presence of opposite-facing arrowheads on two lines of the same length makes one look longer than the other.

With a ruler, we can check that the two lines are the same length, and we believe the formal evidence rather than that of our fallible visual system. With cognitive biases, the analogue of the ruler is not clear.

Confirmation bias:

Humans have a tendency to seek far more information than they can absorb adequately. People often treat all information as if it were equally reliable. People cannot entertain more than a few (three or four) hypotheses at a time. People tend to focus on only a few critical attributes at a time and consider only about two to four possible choices that rank highest on those few critical attributes. Confirmation bias means people tend to seek information that confirms the chosen course of action and to avoid information or tests whose outcome could disconfirm the choice. Confirmation bias is one of the basic errors typical of intuitive judgments. If you hold a theory strongly and confidently, then your search for evidence will be dominated by those attention-getting events that confirm your theory. People trying to solve logical puzzles, for example, set out to prove their hypothesis by searching for confirming examples, when they would be more efficient if they searched for disconfirming examples. It seems more natural to search for examples that “fit” the theory being tested than to search for items that would disprove it. Let me put it differently. Suppose I have chosen a woman as a life partner. Then, due to confirmation bias, I will accept all good information about her and reject all bad information about her, because in my mind she is good. So this is a human error due to confirmation bias. I will give another example. A religious person tends to accept good information about his religion but reject bad information about it, because he has made up his mind that there could be nothing wrong with his religion; this is confirmation bias. Occasionally, such confirmation bias becomes exaggerated and leads to religious extremism.

Hindsight bias:

The hindsight bias refers to the tendency to judge the events leading up to an accident as errors because the bad outcome is known. The more severe the outcome, the more likely it is that the decisions leading up to it will be judged as errors. Judging the antecedent decisions as errors implies that the outcome was preventable. The hindsight bias is responsible for patients blaming medical professionals for errors of judgement whenever the outcome is bad and, in their view, could have been prevented had the error not occurred. So the issue is hindsight bias and not error of judgement. Many times the outcome is good even though plenty of errors have occurred, and they are overlooked. I will give an example. A doctor gives malaria treatment to a patient who has viral fever, but the patient gets cured due to his own immunity. The patient does not hold the doctor guilty of an error of judgement because the outcome is good. However, the same anti-malarial treatment may produce a serious adverse effect that worsens the patient’s condition, and then the doctor will be blamed for negligence because the outcome is bad. Two wrongs do not make a right, but in the medical fraternity a good outcome leads people to overlook plenty of wrongs, and a bad outcome leads to a search for wrongs even if there were none.

Just culture:

A just culture protects people’s honest mistakes from being seen as culpable. It is too simple to assert that there should be consequences for those who ‘cross the line’. Lines don’t just exist out there, ready to be crossed or obeyed. We the people construct those lines, and we draw them differently all the time, depending on the language we use to describe the mistake, on hindsight, history, tradition, and a host of other factors. The absence of a just culture in an organization, in a country, or in an industry hurts both justice and safety. Responses to incidents and accidents that are seen as unjust can impede safety investigations, promote fear rather than mindfulness in people who do safety-critical work, make organizations more bureaucratic rather than more careful, and cultivate professional secrecy, evasion, and self-protection. The airline industry is under immense pressure and is full of sometimes serious contradictions. Staff are told never to break regulations and never to take a chance, yet they must get passengers to their destination on time. Staff are also implored to pamper passengers yet told not to waste money. Pilots are told to go for a soft landing and yet not to overshoot the runway. These contradictions are at worst a recipe for disaster and at best a cause of low staff morale, and they lead to dishonesty because staff fear the consequences, and for good reason. The current major safety dilemma before everybody is how to resolve the apparent conflict between increasing demands for accountability and the creation of an open and reporting organizational culture. In the medical fraternity, a just culture recognizes that individual practitioners should not be held accountable for system failings over which they have no control. A just culture also recognizes that many human errors represent predictable interactions between human operators and the systems in which they work.
However, in contrast to a culture that touts “no blame” as its governing principle, a just culture does not tolerate conscious disregard of clear risks to patients or gross misconduct (e.g., falsifying a record, performing professional duties while intoxicated). A just culture recognizes that competent professionals make mistakes and acknowledges that even competent professionals will develop unhealthy norms (shortcuts, “routine rule violations”), but it has zero tolerance for reckless behavior. A just culture insists that human error not be treated as a crime. In the event of death due to human error, a just culture fully understands the angst of the families left behind and their call for retribution, and also recognizes their need for someone to be blamed and held accountable for the pain of their horrific loss. A just culture agrees that the family needs to be told the truth about the cause of the error and must be financially compensated as quickly as possible, all of which could be accomplished through civil lawsuits, but the person guilty of human error must not be incarcerated as a criminal. Is it rational to prosecute individuals who unintentionally harm another, or should we instead put in place safety systems that make it impossible, or at least unlikely, that human errors will kill someone? We can continue to put people in prison for unintentional human error, or we can work diligently to improve the systems through technology, better communication methods, appropriate staffing and educational levels of practitioners, and a host of other safety initiatives. However, it must be emphasized that driving a vehicle under the influence of alcohol is not a mistake but a crime, and the accident that follows is the result of a criminal act and not of human error. The same is true of an aircraft pilot, a railway engine driver or a surgeon operating on a patient if they are drunk on duty.

Can we eliminate errors?

Managing human errors at 4 levels:

1) Prevent errors: design systems to eliminate or minimize the possibility of errors. Good training helps prevent human error.

2) Recognize errors: identify mistakes and investigate contributing factors.

3) Mitigate errors: minimize the negative consequences of errors.

4) Improvement: I have always improved from my mistakes whether in personal life or professional life.

Proper training of personnel can reduce human errors, but it has two limitations: old habits are hard to break and training is expensive. Also, it is time that ‘computer function’ takes over the work the human brain does, as human error is still one of the most frequent causes of catastrophes and ecological disasters because the human contribution to the overall performance of the system is left unsupervised. However, it is almost impossible to eliminate human error by relying totally on computers. Computers are extremely fast, but in many ways they are quite stupid. They have no “common sense” and no “moral sense.” Built and programmed by fallible human beings, they respond only to what they have been asked, and do only what they have been told to do. And of course, fallible human beings ask the questions and give the commands.

Error reduction design includes 3 methods.

1) Exclusion: particular errors made impossible to commit

2) Prevention: particular errors made difficult to commit

3) Fail-safe: consequences of errors reduced in severity.
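
The three methods can be shown side by side in a small Python sketch of a drug-order entry step. Everything here is hypothetical and chosen only for illustration: the `Route` values, the two dose thresholds and the `order_dose` function are my own assumptions, not a description of any real prescribing system or of clinically meaningful limits.

```python
from enum import Enum


class Route(Enum):
    """Exclusion: only these routes exist, so no other route can be ordered."""
    ORAL = "oral"
    INTRAVENOUS = "intravenous"


USUAL_MAX_MG = 500    # hypothetical threshold that triggers a confirmation
HARD_LIMIT_MG = 1000  # hypothetical ceiling enforced by the fail-safe


def order_dose(route_name, dose_mg, confirmed=False):
    """Return (route, delivered_mg) for an order, applying all three methods."""
    # Exclusion: an unknown route raises ValueError, so no order is created.
    route = Route(route_name)

    # Prevention: an unusually high dose is made difficult, not impossible;
    # it needs a deliberate second step from the person ordering.
    if dose_mg > USUAL_MAX_MG and not confirmed:
        raise PermissionError("dose above the usual maximum needs confirmation")

    # Fail-safe: even a confirmed mistake is reduced in severity.
    delivered_mg = min(dose_mg, HARD_LIMIT_MG)
    return route, delivered_mg


print(order_dose("oral", 200))
print(order_dose("intravenous", 5000, confirmed=True))
```

A real system would more likely reject an over-limit dose outright than silently cap it; the cap is used here only because it is the shortest way to show a consequence being reduced rather than prevented.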

The problem of human error can be viewed in 2 ways: the person approach and the system approach.

PERSON APPROACH

The long-standing and widespread tradition of the person approach focuses on the unsafe acts (errors and procedural violations) of people on the front line: nurses, physicians, surgeons, anesthetists, pharmacists, pilots, drivers, operators and the like. It views these unsafe acts as arising primarily from aberrant mental processes such as forgetfulness, inattention, poor motivation, carelessness, negligence, and recklessness. The associated countermeasures are directed mainly at reducing unwanted variability in human behavior. These methods include poster campaigns that appeal to people’s fear, writing another procedure (or adding to existing ones), disciplinary measures, threat of litigation, retraining, naming, blaming, and shaming. Followers of these approaches tend to treat errors as moral issues, assuming that bad things happen to bad people, which is what psychologists have called the just-world hypothesis. The person approach remains the dominant tradition everywhere. Blaming individuals is emotionally more satisfying than targeting institutions. People are viewed as free agents capable of choosing between safe and unsafe modes of behavior. If something goes wrong, a person (or group) must have been responsible. Seeking as much as possible to uncouple a person’s unsafe acts from any institutional responsibility is clearly in the interests of managers. It is also legally more convenient. However, the person approach has serious shortcomings and is ill-suited to the medical domain, and continued adherence to it is likely to thwart the development of safer health care institutions. Without a detailed analysis of mishaps, incidents, near misses, and “free lessons,” we have no way of uncovering recurrent error traps or of knowing where the edge is until we fall over it. A just culture is one possessing a collective understanding of where the line should be drawn between blameless and blameworthy actions.
Also, by focusing on the individual origins of error, the person approach isolates unsafe acts from their system context. As a result, two important features of human error tend to be overlooked. First, it is often the best people who make the worst mistakes; error is not the monopoly of an unfortunate few. Second, far from being random, mishaps tend to fall into recurrent patterns. The same set of circumstances can provoke similar errors, regardless of the people involved. The person approach does not seek out and remove the error-provoking properties within the system at large, and safety is thereby greatly compromised.

SYSTEM APPROACH

The systems approach seeks to identify situations or factors likely to give rise to human error and implement “systems changes” that will reduce their occurrence or minimize their impact on patients. This view holds that efforts to catch human errors before they occur or block them from causing harm will ultimately be more fruitful than ones that seek to somehow create flawless providers. We are rarely interested in organizations that can do catastrophic harm until something happens in them to attract public attention. Yet when that event occurs we usually find the cause is not simply human error. More often, human error is embedded in organizational and societal processes that ultimately result in the error. The errors made by skilled human operators – such as pilots, controllers, and mechanics – are not root causes but symptoms of the way industry operates. The basic premise in the system approach is that humans are fallible and errors are to be expected, even in the best organizations. Errors are seen as consequences rather than causes, having their origins not so much in the perversity of human nature as in “upstream” systemic factors. These include recurrent error traps in the workplace and the organizational processes that give rise to them. Countermeasures are based on the assumption that although we cannot change the human condition, we can change the conditions under which humans work. A central idea is that of system defenses. All hazardous technologies possess barriers and safeguards. When an adverse event occurs, the important issue is not who blundered, but how and why the defenses failed.

System failures arise for two reasons: active failures and latent conditions.

Active failures are the unsafe acts committed by people who are in direct contact with the patient or system. They take a variety of forms: slips, lapses, fumbles, mistakes, and procedural violations. Followers of the person approach often look no further for the causes of an adverse event once they have identified these proximal unsafe acts.

Latent conditions are the inevitable “resident pathogens” within a system. They arise from decisions made by designers, builders, procedure writers, and top-level management. Latent conditions have two kinds of adverse effect: they can translate into error-provoking conditions within the workplace (for example, time pressure, understaffing, inadequate equipment, fatigue, and inexperience), and they can create long-lasting holes or weaknesses in the defenses (untrustworthy alarms and indicators, unworkable procedures, design and construction deficiencies). Latent conditions may lie dormant within the system for many years before they combine with active failures and local triggers to create an accident opportunity. To use another analogy: active failures are like mosquitoes. They can be swatted one by one, but they still keep coming. The best remedies are to create more effective defenses and to drain the swamps in which they breed. The swamps, in this case, are the ever-present latent conditions.

Human Factors Analysis and Classification System (HFACS):

One of the most significant parts of accident/incident prevention is the modeling of human error. Yet accident investigation and reporting systems were not designed around any meaningful model of human error. As a result, the existing accident/incident databases were not conducive to traditional human error analysis or to making recommendations on error prevention. A serious breakthrough in this direction is the Human Factors Analysis and Classification System (HFACS), which identifies the human causes of an accident and provides a tool to assist in the investigation process and to target training and prevention efforts. It was developed in response to a trend that showed some form of human error was a primary causal factor in 80% of all flight accidents in the Navy and Marine Corps. This system provides the investigator with a theoretical framework of human error and helps to examine underlying human causal factors systematically, thereby improving accident investigation in aviation. The purpose of this system is to help investigators find the causes of specific human errors, not to construct the comprehensive human error model which is necessary for analyzing repeated factors and error-provoking situations.

In commercial aviation and health care, the response to human error should be both immediate and predictable, with the primary aim of improving the quality of future outcomes through organizational learning. It ought to be understood within these industries that the best way to achieve this is to act quickly and in a blame-free environment where the decisions of any human actors are considered in the context of the organizational environment in which they are made. Any report must therefore consider the relative contributions of individual decisions, systems, and culture to the event, and should make recommendations regarding how any or all of these contributors can be modified in order to avoid similar events in the future.

The learning hypothesis:

The traditional approach is to investigate prior events, identify root causes, allocate blame, and devise ways to stop them from ever happening again. In reality, we cannot distinguish among the many error states through observation, as these error states behave randomly. One distribution of error states is never statistically identical to another, because the distribution of states is always different. This simple fact explains why (different) errors still occur while we reactively try to eliminate known or previously observed causes. Analysis of failure rates due to human error and of the rate of learning allows a new determination of the dynamic human error rate and probability in technological systems, consistent with and derived from the available world data. This is the basis for the learning hypothesis, which states that humans learn from experience and that, consequently, the accumulated experience defines the failure rate. The future failure rate is entirely determined by the experience; thus the past defines the future. For example, a pilot with 10,000 hours of flying experience is less likely to commit a human error while landing than a pilot with 5,000 hours of flying experience.
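
One simple way to put the learning hypothesis into numbers is to assume that the failure rate falls toward some minimum attainable rate at a speed proportional to how far it still is above that minimum, which gives an exponential curve. The sketch below uses that assumed form; the exponential shape, the function name `failure_rate` and every parameter value are my own illustrative assumptions and are not fitted to any real pilot or accident data.

```python
from math import exp


def failure_rate(experience, initial_rate, minimum_rate, learning_constant):
    """Failure rate after a given amount of accumulated experience.

    Assumed form (for illustration only): the rate starts at `initial_rate`
    and decays exponentially toward `minimum_rate` as experience accumulates.
    """
    if experience < 0:
        raise ValueError("experience cannot be negative")
    excess = initial_rate - minimum_rate
    return minimum_rate + excess * exp(-learning_constant * experience)


# Hypothetical parameters chosen only to show the shape of the curve.
INITIAL = 1e-3  # errors per flying hour for a novice
MINIMUM = 1e-5  # floor that experience never quite removes
K = 3e-4        # learning constant per hour of experience

for hours in (0, 5_000, 10_000):
    print(hours, failure_rate(hours, INITIAL, MINIMUM, K))
```

Under these assumptions the 10,000-hour pilot has a lower error rate than the 5,000-hour pilot, but the rate never reaches zero, which is consistent with the earlier point that errors cannot be eliminated entirely.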

Human reliability:

Human reliability refers to the reliability of humans in fields such as manufacturing, transportation, the military, or medicine. Human performance can be affected by many factors such as age, state of mind, physical health, attitude, emotions, propensity for certain common mistakes, errors and cognitive biases etc. Human reliability is very important because of the contribution humans make to the resilience of systems and because of the possible adverse consequences of human errors or oversights, especially when the human is a crucial part of a large socio-technical system, as is common today. Human Reliability Analysis (HRA) is the method by which one estimates the probability that a system-required human action, task, or job will be completed successfully within the required time period and that no extraneous human actions detrimental to system performance will be performed. HRA provides quantitative estimates of human error potential. Various techniques are used in the field of HRA to evaluate the probability of a human error occurring during the completion of a specific task. There are three primary reasons for conducting an HRA: error identification, error quantification and error reduction.

Various techniques used in HRA are Absolute Probability Judgement (APJ), Human Error Assessment and Reduction Technique (HEART), Influence Diagrams Approach (IDA), Technique for Human Error Rate Prediction (THERP), Accident Sequence Evaluation Program (ASEP), Technique for the Retrospective Analysis of Cognitive Errors (TRACEr), Procedural Event Analysis Tool (PEAT), Generic Error Modeling System (GEMS), Justification of Human Error Data Information (JHEDI), Maintenance Error Decision Aid (MEDA), Standardized Plant Analysis Risk (SPAR) etc.
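
As a rough illustration of how such a technique turns judgment into a number, the sketch below follows the general HEART recipe as it is commonly described: start from a nominal error probability for the type of task, then scale it once for each error-producing condition by ((maximum multiplier - 1) x assessed proportion of effect) + 1. The function name `heart_error_probability` and all input values are hypothetical; they are not taken from any published HEART table, and a real assessment relies on those tables and on trained assessors.

```python
def heart_error_probability(nominal_hep, conditions):
    """HEART-style estimate of a human error probability.

    nominal_hep: baseline error probability for the generic task type.
    conditions: iterable of (max_multiplier, assessed_proportion) pairs, one
        per error-producing condition; the proportion lies between 0 and 1.
    """
    hep = nominal_hep
    for max_multiplier, proportion in conditions:
        if max_multiplier < 1 or not 0.0 <= proportion <= 1.0:
            raise ValueError("multiplier must be >= 1 and proportion in [0, 1]")
        # Each condition scales the baseline by ((multiplier - 1) * proportion) + 1.
        hep *= (max_multiplier - 1) * proportion + 1
    # A probability cannot exceed 1, so the result is capped.
    return min(hep, 1.0)


# Hypothetical inputs: a baseline of 0.003 and two error-producing conditions.
estimate = heart_error_probability(0.003, [(11, 0.4), (3, 0.5)])
print(estimate)
```

With these made-up inputs the two conditions multiply the baseline by 5 and by 2, so the estimate comes out at roughly ten times the nominal value. This shows how strongly working conditions, rather than the person, can drive the estimated error rate.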

High reliability theory versus Normal accident theory:

The safety sciences know more about what causes adverse events than about how they can best be avoided. Scientists have sought to redress this imbalance by studying safety successes in high-reliability organizations such as nuclear aircraft carriers, air traffic control systems, and nuclear power plants. High-reliability organizations, which have fewer accidents, recognize that human variability is a force to harness in averting errors; they work hard to focus that variability and are preoccupied with the possibility of failure. In contrast to high reliability theory, normal accident theory suggests that, at least in some settings, major accidents become inevitable and thus, in a sense, “normal”. Two factors create an environment in which a major accident becomes increasingly likely over time: “complexity” and “tight coupling.” Complexity exists when no single operator can immediately foresee the consequences of a given action in the system. Tight coupling occurs when processes are intrinsically time dependent: once a process has been set in motion, it must be completed within a certain period of time. Many health care organizations would illustrate such complexity, but only hospitals would be regarded as exhibiting tight coupling. Importantly, normal accident theory contends that accidents become inevitable in complex, tightly coupled systems regardless of steps taken to increase safety. In fact, these steps sometimes increase the risk of future accidents through unintended collateral effects and general increases in system complexity.

A political scientist conducted a detailed examination of the question of why there has never been an accidental nuclear war, with a view toward testing the competing paradigms of normal accident theory and high reliability theory. The results of detailed archival research initially appeared to confirm the predictions of high reliability theory. However, interviews with key personnel uncovered several hair-raising near misses. The study ultimately concluded that good fortune played a greater role than good design in the safety record of the nuclear weapons industry to date. Nonetheless, people must learn from the military how to avoid launching a nuclear warhead by mistake while ensuring readiness to launch at any time in case of attack.

Safety Culture:

Safety culture has previously been defined as the enduring value and prioritization of worker and public safety by each member of each group and in every level of an organization. It refers to the extent to which individuals and groups will commit to personal responsibility for safety; act to preserve, enhance and communicate safety information; strive to actively learn, adapt and modify (both individual and organizational) behavior based on lessons learned from mistakes; and be held accountable or strive to be honored in association with these values. Safety culture refers to a commitment to safety that permeates all levels of an organization, from frontline personnel to executive management. More specifically, “safety culture” calls up a number of features identified in studies of high reliability organizations and organizations outside of health care with exemplary performance with respect to safety.

These features include:

1) Acknowledgment of the high-risk, error-prone nature of an organization’s activities

2) A blame-free environment where individuals are able to report errors or close calls without fear of reprimand or punishment

3) An expectation of collaboration across ranks to seek solutions to vulnerabilities

4) Willingness on the part of the organization to direct resources for addressing safety concerns.

Root Cause Analysis (RCA):

RCA is a structured process for identifying the causal or contributing factors underlying adverse events or other critical incidents. In the search for the root cause, systemic issues concern how the management of the organization plans, organizes, controls, and provides quality assurance and safety in five key areas: personnel, procedures, equipment, material, and the environment. The key advantage of RCA over traditional clinical case reviews is that it follows a pre-defined protocol for identifying specific contributing factors in various causal categories (e.g., personnel, training, equipment, protocols, scheduling) rather than attributing the incident to the first error one finds or to preconceived notions investigators might have about the case. The single most important product of an RCA is descriptive: a detailed account of the events that led up to the incident. As illustrated by the Swiss cheese model, multiple errors and system flaws must come together for a critical incident to reach the patient. Labeling one or even several of these factors as “causes” fosters undue emphasis on specific “holes in the cheese” rather than the overall relationships between different layers and other aspects of system design. Accordingly, some have suggested replacing the term “root cause analysis” with “systems analysis.”
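The Swiss cheese idea that several flaws must line up can be put into rough numbers. The Python sketch below assumes that each layer of defence fails independently of the others, which real systems often violate, and uses hypothetical failure probabilities. The function name is also only illustrative.

```python
import math


def probability_all_layers_fail(layer_failure_probabilities):
    """Chance that an error passes through every layer of defence.

    Assumes the layers fail independently, so the individual
    probabilities can simply be multiplied together.
    """
    return math.prod(layer_failure_probabilities)


# Hypothetical layers: prescriber check, pharmacist check, nurse check.
layers = [0.1, 0.1, 0.1]
reaches_patient = probability_all_layers_fail(layers)
print(f"Chance the error reaches the patient: {reaches_patient:.4f}")
```

Because the result depends on all the layers together, fixing a single “hole” changes only one factor in the product, which is one way to see why the relationships between layers matter more than any one labelled cause.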

Failure mode and effect analysis (FMEA):

In contrast to RCA, which involves retrospective investigation, failure mode and effect analysis (FMEA) is a prospective attempt to predict “error modes”. In the FMEA approach, the likelihood of a particular process failure is combined with an estimate of the relative impact of that error to produce a “criticality index”. By combining the probability of failure with the consequences of failure, this index allows for the prioritization of specific processes as quality improvement targets. For instance, an FMEA of the medication dispensing process on a general hospital ward might break down all steps from receipt of orders in the central pharmacy to filling automated dispensing machines by pharmacy technicians. Each step in this process would be assigned a probability of failure and an impact score, so that all steps could be ranked according to the product of these two numbers. Steps ranked at the top (i.e., those with the highest “criticality indices”) would be prioritized for error proofing.
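The ranking described above can be sketched in a few lines of Python. The step names loosely follow the medication dispensing example, but the probabilities, the 1 to 10 impact scale and the class name are all hypothetical, chosen only to show how the criticality index orders the steps.

```python
from dataclasses import dataclass


@dataclass
class ProcessStep:
    name: str
    failure_probability: float  # hypothetical estimate between 0 and 1
    impact: int                 # hypothetical score, 1 (minor) to 10 (catastrophic)

    @property
    def criticality(self) -> float:
        # Criticality index: probability of failure times impact of failure.
        return self.failure_probability * self.impact


steps = [
    ProcessStep("Receive order in central pharmacy", 0.02, 6),
    ProcessStep("Select drug and dose", 0.01, 9),
    ProcessStep("Label and package", 0.03, 2),
    ProcessStep("Fill automated dispensing machine", 0.05, 7),
]

# Highest criticality first: these steps are the first targets for error proofing.
ranked = sorted(steps, key=lambda step: step.criticality, reverse=True)
for step in ranked:
    print(f"{step.criticality:.2f}  {step.name}")
```

Note that a step with a severe impact but a low probability of failure can rank below a less severe step that fails more often; the index weighs both together.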

Six Sigma performance:

Six Sigma performance refers loosely to striving for near perfection in the performance of a process or production of a product. The name derives from the Greek letter sigma, often used to refer to the standard deviation of a normal distribution. About 95% of a normally distributed population falls within 2 standard deviations of the average (or “2 sigma”). This leaves about 5% of observations as “abnormal” or “unacceptable.” When it comes to industrial performance, having 5% of a product fall outside the desired specifications would represent an unacceptably high defect rate. What company could stay in business if 5% of its product did not perform well? For example, would we tolerate a pharmaceutical company that produced pills containing incorrect dosages 5% of the time? Certainly not. Six Sigma instead targets a defect rate of 3.4 per million opportunities, the figure conventionally associated with specification limits 6 standard deviations from the process average. But when it comes to clinical performance, such as the number of patients who receive a proven medication or the number of patients who develop complications from a procedure, we routinely accept failure or defect rates in the 2% to 5% range, orders of magnitude below Six Sigma performance. Not every process in health care requires such near-perfect performance. In fact, one of the lessons of Reason’s Swiss cheese model is the extent to which low overall error rates are possible even when individual components have many “holes.” However, many high-stakes processes are far less forgiving, since a single “defect” can lead to catastrophe (e.g., wrong-site surgery, accidental administration of concentrated potassium).

In contrast to continuous quality improvement, Six Sigma typically strives for quantum leaps in performance, which by their nature often necessitate major organizational changes and substantial investments of time and resources at all levels of the institution. Thus a clinic trying to improve the percentage of elderly patients who receive influenza vaccines might reasonably adopt an approach that leads to successive modest improvements without radically altering normal workflow at the clinic. By contrast, an ICU that strives to reduce the rate at which patients develop catheter-associated bacteremia virtually to zero will need major changes that may disrupt normal workflow. In fact, the point of choosing Six Sigma is often that normal workflow is recognized as playing a critical role in the unacceptably high defect rate.
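The figures quoted for 2 sigma and Six Sigma can be checked with the normal distribution in Python's standard library. One point is an addition to the text above: the 3.4 per million figure is conventionally derived by allowing the process average to drift by 1.5 standard deviations over time, so it corresponds to a one-sided tail at 4.5 sigma rather than 6. The function names below are only illustrative.

```python
from statistics import NormalDist

standard_normal = NormalDist()  # mean 0, standard deviation 1


def two_sided_defects_per_million(k):
    """Observations per million lying more than k sigma from the mean, either side."""
    return 2 * standard_normal.cdf(-k) * 1_000_000


def one_sided_defects_per_million(k):
    """Observations per million lying more than k sigma beyond the mean, one side."""
    return standard_normal.cdf(-k) * 1_000_000


outside_two_sigma = two_sided_defects_per_million(2)
# Conventional Six Sigma figure: 6 sigma limit minus an assumed 1.5 sigma drift.
six_sigma_dpmo = one_sided_defects_per_million(6 - 1.5)

print(f"Outside 2 sigma: {outside_two_sigma:,.0f} per million")
print(f"Six Sigma target: {six_sigma_dpmo:.1f} per million")
print(f"Clinical 2% to 5% range: {0.02 * 1_000_000:,.0f} to {0.05 * 1_000_000:,.0f} per million")
```

Putting all three on the same per-million scale shows the gap directly: a 2% to 5% defect rate is tens of thousands per million, against a target of a few per million.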

Resilience Engineering:

Resilience is distinguished from older conceptions of safety by being more dynamic and more situated in the relationships among components than in the components themselves. Thus in this view, safety is something a system or an organization does, rather than something a system or an organization has. In other words, it is not a system property that, once put in place, will remain. It is rather a characteristic of how a system performs. This creates the dilemma that safety is shown more by the absence of certain events (namely accidents) than by the presence of something. Indeed, the occurrence of an unwanted event need not mean that safety as such has failed, but could equally well be due to the fact that safety is never complete or absolute. In consequence, resilience engineering abandons the search for safety as a property, whether defined through adherence to standard rules, in error taxonomies, or in “human error” counts. It is concerned not so much with the reliability of individual components (a view which characterizes much so-called “systems thinking” in health care) but rather with understanding and facilitating a system’s ability to actively ensure that things do not get out of control; to anticipate or detect disturbed functioning accurately and in sufficient time; to repair and recover; or to halt operations and avert further damage before resuming. Importantly, it is firmly grounded at the “sharp end”; it is concerned with “work as performed” by those on the front lines, not “work as imagined” by managers, administrators, guideline developers, technophiles, and the like.

The term Resilience Engineering represents a new way of thinking about safety. Whereas conventional risk management approaches are based on hindsight and emphasize error tabulation and calculation of failure probabilities, Resilience Engineering looks for ways to enhance the ability of organizations to create processes that are robust yet flexible, to monitor and revise risk models, and to use resources proactively in the face of disruptions or ongoing production and economic pressures. In Resilience Engineering, failures do not stand for a breakdown or malfunctioning of normal system functions, but rather represent the converse of the adaptations necessary to cope with real-world complexity. Individuals and organizations must always adjust their performance to the current conditions, and because resources and time are finite, it is inevitable that such adjustments are approximate. Success has been ascribed to the ability of groups, individuals, and organizations to anticipate the changing shape of risk before damage occurs; failure is simply the temporary or permanent absence of that ability.

Resilience involves anticipation. This includes the consideration of how and why a particular risk assessment may be limited, having the resources and abilities to anticipate and remove challenges, knowing the state of defenses now and where they may be in the future, and knowing what challenges may surprise. Taking a prospective view assumes that challenges to system performance will occur, and actively seeks out the range and details of these threats. Procedures and protocols direct activity, but why follow procedures when judgment suggests a departure is prudent? On the other hand, what evidence do we have that the individual judgment will be correct when stepping outside of what has already proven to be reliable? This is the resilience of human performance variability.

The dichotomy of human actions as “correct” or “incorrect” is a harmful oversimplification of a complex phenomenon. A focus on the variability of human performance and how human operators (and organizations) can manage that variability may be a more fruitful approach. In Resilience Engineering, successes (things that go right) and failures (things that go wrong) are seen as having the same basis, namely human performance variability. This is because human performance is variable and occasionally the variability becomes so large that it leads to unexpected and unwanted consequences, which then are called “errors”. Instead of trying to look for “human errors” as either causes or events, we should try to find where performance may vary, how it may vary, and how the variations may be detected and eventually controlled. It is the control of human performance variability that will eliminate human error. Now whether we control the human performance variability by rules, regulations and procedures laid down by the organization, or by training humans, or by human reliability analysis, or by self-discipline, or by threat of litigation, or by safety culture, or by resilience engineering, or by any other mechanism is a matter of debate.

THE MORAL OF THE STORY:

1) Human error is inevitable because human error is directly proportional to human performance variability: the greater the variability, the greater the chance of error, and vice versa.

2) In the medical fraternity, human error and negligence are different. Human error is not a crime, but negligence does carry criminal liability.

3) Reducing accidents and minimizing the consequences of accidents that do occur is best achieved by learning from errors, rather than by attributing blame. Feeding information from accidents, errors and near misses into design solutions and management systems can drastically reduce the chances of future accidents.